GAO-12-208G, Designing Evaluations: 2012 Revision

GAO-12-208G, Designing Evaluations: 2012 Revision

GAO-12-208G, Designing Evaluations: 2012 Revision

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

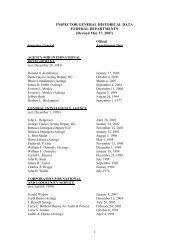

Chapter 4: Designs for Assessing Program Implementation and Effectiveness

Table 3: Common Designs for Outcome Evaluations

| Evaluation question | Design |
| --- | --- |
| Is the program achieving its desired outcomes or having other important side effects? | Compare program performance to law and regulations, program logic model, professional standards, or stakeholder expectations |
| | Assess change in outcomes for participants before and after exposure to the program |
| | Assess differences in outcomes between program participants and nonparticipants |
| Do program outcomes differ across program components, providers or recipients? | Assess variation in outcomes (or change in outcomes) across approaches, settings, providers, or subgroups of recipients |

Source: GAO.

Assessing the Achievement of Intended Outcomes

Assessing Change in Outcomes

Like outcome monitoring, outcome evaluations often assess the benefits of the program for participants or the broader public by comparing data on program outcomes to a preestablished target value. The criterion could be derived from law, regulation, or program design, while the target value might be drawn from professional standards, stakeholder expectations, or the levels observed previously in this or similar programs. Basing targets on such benchmarks can help ensure that target levels for accomplishments, compliance, or absence of error are realistic. For example:

• To assess the immediate outcomes of instructional programs, an evaluator could measure whether participants experienced short-term changes in knowledge, attitudes, or skills at the end of their training session. The evaluator might employ post-workshop surveys or conduct observations during the workshops to document how well participants understand and can use what was taught. Depending on the topic, industry standards might provide a criterion of 80 percent or 90 percent accuracy, or demonstration of a set of critical skills, to define program success. Although observational data may be considered more accurate indicators of knowledge and skill gains than self-report surveys, they are often more resource-intensive to collect and analyze.
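The criterion check in the training example reduces to simple arithmetic. The sketch below is a minimal illustration under stated assumptions, not a method prescribed by the guide: it counts the share of participants whose post-workshop accuracy meets an 80 or 90 percent criterion and then compares that share with a program-level target. The function names, the participant scores, and the 75 percent target share are all hypothetical.

```python
# Minimal sketch of comparing outcome data with a preestablished criterion.
# All names, scores, and the target share are hypothetical illustrations.


def share_meeting_criterion(scores, criterion=0.80):
    """Return the fraction of participants whose accuracy meets the criterion.

    scores: per-participant accuracy proportions (0.0 to 1.0).
    criterion: minimum accuracy counted as success (e.g., 0.80 or 0.90).
    """
    if not scores:
        raise ValueError("no participant scores supplied")
    met = sum(1 for s in scores if s >= criterion)
    return met / len(scores)


def meets_target(scores, criterion=0.80, target_share=0.75):
    """Check whether the share of successful participants reaches a target.

    target_share is a hypothetical program-level target value, e.g. one
    drawn from stakeholder expectations or earlier program results.
    """
    return share_meeting_criterion(scores, criterion) >= target_share


if __name__ == "__main__":
    # Hypothetical post-workshop accuracy scores for eight participants.
    post_scores = [0.95, 0.82, 0.78, 0.91, 0.88, 0.67, 0.85, 0.93]
    for criterion in (0.80, 0.90):
        share = share_meeting_criterion(post_scores, criterion)
        print(f"criterion {criterion:.0%}: {share:.1%} of participants met it")
    print("meets 75% target at 80% criterion:", meets_target(post_scores))
```

With these hypothetical scores, raising the criterion from 80 to 90 percent halves the share of participants counted as successful (6 of 8 versus 3 of 8), which shows why the choice of criterion deserves as much scrutiny as the data themselves.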

In programs where there are quantitative measures of performance but no established standard or target value, outcome evaluations can, at a minimum, rely on assessing change or differences in desired outputs and outcomes. The level of the outcome of interest, such as client behavior or environmental conditions, is compared with the level observed in the absence of the program or intervention. This can be done by comparing

Page 36 GAO-12-208G