High Performance Computing for Hyperspectral ... - IEEE Xplore


534 IEEE JOURNAL OF SELECTED TOPICS IN APPLIED EARTH OBSERVATIONS AND REMOTE SENSING, VOL. 4, NO. 3, SEPTEMBER 2011

as degree of parallelization, amount of communication overhead in algorithms, and load balancing strategies used.

B. Heterogeneous Implementation

In order to balance the load of the processors in a heterogeneous parallel environment, each processor should execute an amount of work that is proportional to its speed [17]. Therefore, the parallel algorithm in Section IV-A needs to be adapted for efficient execution on heterogeneous computing environments. Two major goals of data partitioning in heterogeneous networks are [18]: 1) to obtain an appropriate set of workload fractions that best fit the heterogeneous environment, and 2) to translate the chosen set of values into a suitable decomposition of the total workload, taking into account the properties of the heterogeneous system. In order to accomplish the above goals, we have developed a workload estimation algorithm (WEA) for heterogeneous networks that assumes that the workload of each processor must be directly proportional to its local memory and inversely proportional to its cycle-time. Below, we provide a description of WEA, which replaces the data partitioning step in the parallel algorithm described in Section IV-A. Steps 2–6 of the parallel algorithm in Section IV-A would be executed immediately after WEA. The input to WEA is a hyperspectral data cube, and the output is a set of spatial-domain heterogeneous partitions of that cube:

1) Obtain necessary information about the heterogeneous system, including the number of workers, each processor’s identification number, and the processor cycle-times.

2) Set an initial workload fraction for every processor. In other words, this step first approximates the workload fractions so that the amount of work assigned to each processing node is proportional to its speed.

3) Iteratively increment some of the workload fractions until the set of fractions best approximates the total workload to be completed, i.e., at each iteration select one processor and then increment its workload fraction.

4) Produce partitions of the input hyperspectral data set, so that the spectral channels corresponding to the same pixel vector are never stored in different partitions. In order to achieve this goal, we have adopted a methodology which consists of three main steps:

a) The hyperspectral data set is first partitioned, using spatial-domain decomposition, into a set of vertical slabs which retain the full spectral information at the same partition. The number of rows in each slab is set to be proportional to the estimated workload fractions, assuming that no upper bound exists on the number of pixel vectors that can be stored by the local memory at the considered node.

b) For each processor, check if the number of pixel vectors assigned to it is greater than its upper bound. For all the processors whose upper bounds are exceeded, assign them a number of pixels equal to their upper bounds. Now, we solve the partitioning problem of a set with the remaining pixel vectors over the remaining processors. We recursively apply this procedure until all pixel vectors in the input data have been assigned, thus obtaining an initial workload distribution for each processor. It should be noted that, with the proposed algorithm description, it is possible that all processors exceed their upper bounds. This situation was never observed in our experiments. However, if the considered network includes processing units with low memory capacity, this situation could be handled by allocating an amount of data equal to the upper bound to those processors, and then processing the remaining data as an offset in a second algorithm iteration.

c) Iteratively recalculate the workload assigned to each processor using an expression that involves the set of neighbors of each processing node and the workload of each node (i.e., the number of pixel vectors assigned to that processor) after each iteration. This scheme has been demonstrated in previous work to converge to an average workload [51].
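As an illustration of steps 1) to 4b), the following Python sketch estimates integer workloads from processor cycle-times and then applies per-processor memory bounds. It is a minimal sketch under stated assumptions, not the implementation used in the paper: the function names (`estimate_workload`, `bounded_partition`), the initialization (floor of each processor's speed-proportional share), the greedy selection rule (increment the processor that would finish soonest), and the use of abstract "work units" (rows or pixel vectors) are all assumptions made for this example.

```python
from fractions import Fraction
from math import floor


def estimate_workload(cycle_times, total):
    """Split `total` work units among processors with the given cycle-times."""
    inverse = [1 / Fraction(w) for w in cycle_times]  # speed ~ 1 / cycle-time
    inverse_sum = sum(inverse)
    # Assumed step 2: floor of each processor's exact speed-proportional share.
    alpha = [floor(total * inv / inverse_sum) for inv in inverse]
    # Assumed step 3: hand out the leftover units one at a time to the
    # processor that would finish soonest after receiving one more unit.
    for _ in range(total - sum(alpha)):
        k = min(range(len(alpha)), key=lambda i: cycle_times[i] * (alpha[i] + 1))
        alpha[k] += 1
    return alpha


def bounded_partition(cycle_times, total, caps):
    """Apply per-processor upper bounds (step 4b).

    Returns (assignment, leftover); leftover > 0 means every processor hit
    its bound and the rest must be handled in a second pass.
    """
    assignment = [0] * len(cycle_times)
    active = list(range(len(cycle_times)))
    remaining = total
    while active and remaining > 0:
        share = estimate_workload([cycle_times[i] for i in active], remaining)
        over = [i for i, s in zip(active, share) if s > caps[i]]
        if not over:
            for i, s in zip(active, share):
                assignment[i] = s
            return assignment, 0
        for i in over:  # saturate processors whose bound is exceeded
            assignment[i] = caps[i]
            remaining -= caps[i]
        # Re-solve the problem for the remaining work and processors.
        active = [i for i in active if i not in over]
    return assignment, remaining
```

For example, with hypothetical cycle-times 1, 2 and 4 and 7 work units, `estimate_workload` assigns 4, 2 and 1 units, so the fastest processor receives the largest share. Exact rational arithmetic is used for the initial shares only so that rounding cannot assign more than the total.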

The parallel heterogeneous algorithm has been implemented using the C++ programming language with calls to standard message passing interface (MPI) library functions (see footnote 10), which are also used in our cluster-based implementation.
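The iterative recalculation in step 4c) can be illustrated with a standard first-order diffusion scheme, in which every node repeatedly moves its workload toward that of its neighbors. This is only a sketch of that general technique: the update rule, the `rate` parameter, and the function name `diffuse` are assumptions for illustration and are not taken from the paper's own expression.

```python
def diffuse(workload, neighbors, rate, iterations):
    """First-order diffusion of workloads over a processor graph.

    workload  : initial number of pixel vectors per processor
    neighbors : neighbors[i] lists the processors adjacent to processor i
                (assumed symmetric, so the total workload is conserved)
    rate      : assumed diffusion coefficient; it should be smaller than
                1 / (maximum number of neighbors) for the scheme to settle
    """
    load = [float(x) for x in workload]
    for _ in range(iterations):
        # Every node is updated from the loads of the previous iteration.
        load = [
            load[i] + rate * sum(load[j] - load[i] for j in neighbors[i])
            for i in range(len(load))
        ]
    return load
```

On a hypothetical ring of four processors where one node initially holds all 40 units, calling `diffuse([40, 0, 0, 0], {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}, 0.25, 50)` drives every node toward the average of 10 units while keeping the total constant.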

C. FPGA Implementation

Our strategy <strong>for</strong> implementation of the hyperspectral unmixing<br />

chain in reconfigurable hardware is aimed at enhancing<br />

replicability and reusability of slices in FPGA devices through<br />

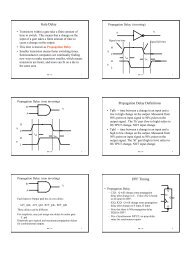

the utilization of systolic array design [14]. Fig. 2 describes our<br />

systolic architecture. Here, local results remain static at each<br />

processing element, while a total of pixel vectors with<br />

dimensions are input to the systolic array from top to bottom.<br />

Similarly, skewers with dimensions are fed to the systolic<br />

array from left to right. In Fig. 2, asterisks represent delays.<br />

The processing nodes labeled as in Fig. 2 per<strong>for</strong>m the<br />

individual products <strong>for</strong> the skewer projections. On the other<br />

hand, the nodes labeled as and respectively compute<br />

the maxima and minima projections after the dot product calculations<br />

have been completed. In fact, the and nodes<br />

avoid broadcasting the pixel while simplifying the collection<br />

of the results.<br />
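The computation carried out by the array can be modeled in software as follows. This is a sequential sketch of the arithmetic only (dot products followed by maxima and minima tracking per skewer); it does not model the systolic timing, the delays, or the hardware layout of Fig. 2, and the function name `skewer_extremes` and the data layout (lists of per-band values) are assumptions for illustration.

```python
def skewer_extremes(pixels, skewers):
    """For each skewer, return (index of max projection, index of min projection).

    pixels  : list of pixel vectors, one value per spectral band
    skewers : list of skewer vectors with the same number of bands
    """
    result = []
    for skewer in skewers:
        best_max = best_min = None
        arg_max = arg_min = -1
        for idx, pixel in enumerate(pixels):
            # Role of the product nodes: accumulate the band-wise products.
            projection = sum(p * s for p, s in zip(pixel, skewer))
            # Role of the maxima node: keep the largest projection so far.
            if best_max is None or projection > best_max:
                best_max, arg_max = projection, idx
            # Role of the minima node: keep the smallest projection so far.
            if best_min is None or projection < best_min:
                best_min, arg_min = projection, idx
        result.append((arg_max, arg_min))
    return result
```

Because only the running extremes are kept for each skewer, no pixel has to be revisited once it has passed through, which mirrors the statement above that local results remain static at each processing element.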

Based on the systolic array described above (which also allows implementation of the fully constrained spectral unmixing stage), we have implemented the full hyperspectral unmixing chain using the very high speed integrated circuit hardware description language (VHDL; see footnote 11) for the specification of the systolic array. Further, we have used the Xilinx ISE environment and the Embedded Development Kit (EDK) environment (see footnote 12) to

10 http://www.mcs.anl.gov/mpi.

11 http://www.vhdl.org.

12 http://www.xilinx.com/ise/embedded/edk_pstudio.htm.
