Sage Reference Manual: Numerical Optimization - Mirrors

Sage Reference Manual: Numerical Optimization - Mirrors

Sage Reference Manual: Numerical Optimization - Mirrors

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

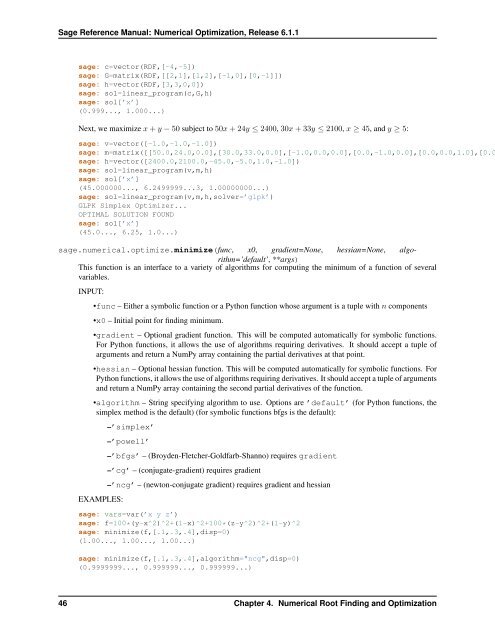

Sage Reference Manual: Numerical Optimization, Release 6.1.1<br />

sage: c=vector(RDF,[-4,-5])<br />

sage: G=matrix(RDF,[[2,1],[1,2],[-1,0],[0,-1]])<br />

sage: h=vector(RDF,[3,3,0,0])<br />

sage: sol=linear_program(c,G,h)<br />

sage: sol['x']<br />

(0.999..., 1.000...)<br />
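The result above can be cross-checked without a solver. The following is a minimal sketch in plain Python (it does not call Sage), assuming the convention that `linear_program(c,G,h)` minimizes c·x subject to Gx ≤ h. It enumerates the intersection points of every pair of constraint lines, keeps the feasible ones, and picks the one with the smallest objective value. The helper names `solve_2x2`, `feasible`, and `brute_force_lp` are hypothetical and exist only for this illustration; the approach only makes sense for small, bounded two-variable problems like this one.

```python
from itertools import combinations

# Problem data copied from the example: minimize c.x subject to G x <= h.
c = [-4.0, -5.0]
G = [[2.0, 1.0], [1.0, 2.0], [-1.0, 0.0], [0.0, -1.0]]
h = [3.0, 3.0, 0.0, 0.0]

def solve_2x2(a, b):
    """Solve the 2x2 system a*p = b by Cramer's rule; return None if singular."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if abs(det) < 1e-12:
        return None
    x = (b[0] * a[1][1] - a[0][1] * b[1]) / det
    y = (a[0][0] * b[1] - b[0] * a[1][0]) / det
    return (x, y)

def feasible(p, tol=1e-9):
    """True if p satisfies every inequality G p <= h (up to tol)."""
    return all(row[0] * p[0] + row[1] * p[1] <= rhs + tol
               for row, rhs in zip(G, h))

def brute_force_lp():
    """Return (objective value, point) for the best feasible vertex."""
    best = None
    for i, j in combinations(range(len(G)), 2):
        p = solve_2x2([G[i], G[j]], [h[i], h[j]])
        if p is None or not feasible(p):
            continue
        value = c[0] * p[0] + c[1] * p[1]
        if best is None or value < best[0]:
            best = (value, p)
    return best

value, point = brute_force_lp()
print(point, value)
```

The best vertex is the intersection of the first two constraints, which agrees with the `sol['x']` output shown above.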

Next, we maximize x + y − 50 subject to 50x + 24y ≤ 2400, 30x + 33y ≤ 2100, x ≥ 45, and y ≥ 5:<br />

sage: v=vector([-1.0,-1.0,-1.0])<br />

sage: m=matrix([[50.0,24.0,0.0],[30.0,33.0,0.0],[-1.0,0.0,0.0],[0.0,-1.0,0.0],[0.0,0.0,1.0],[0.0<br />

sage: h=vector([2400.0,2100.0,-45.0,-5.0,1.0,-1.0])<br />

sage: sol=linear_program(v,m,h)<br />

sage: sol['x']<br />

(45.000000..., 6.2499999...3, 1.00000000...)<br />

sage: sol=linear_program(v,m,h,solver='glpk')<br />

GLPK Simplex Optimizer...<br />

OPTIMAL SOLUTION FOUND<br />

sage: sol['x']<br />

(45.0..., 6.25, 1.0...)<br />
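As a sanity check on the reported solution, the first two components (x, y) = (45, 6.25) can be substituted into the four inequalities stated in the problem description. The sketch below is plain Python, not Sage; it rewrites each constraint in "left-hand side ≤ right-hand side" form, as in the matrix `m` and vector `h`, and reports the slack of each. The names `constraints`, `slacks`, and `active` are illustrative only.

```python
# Reported solution (first two components of sol['x']).
x, y = 45.0, 6.25

# Each entry: (description, left-hand side, right-hand side), all in "<=" form.
constraints = [
    ("50x + 24y <= 2400", 50.0 * x + 24.0 * y, 2400.0),
    ("30x + 33y <= 2100", 30.0 * x + 33.0 * y, 2100.0),
    ("x >= 45, written as -x <= -45", -x, -45.0),
    ("y >= 5, written as -y <= -5", -y, -5.0),
]

# Slack = rhs - lhs; non-negative slack means the constraint is satisfied,
# zero slack means it is active (tight) at this point.
slacks = {label: rhs - lhs for label, lhs, rhs in constraints}
active = [label for label, s in slacks.items() if abs(s) < 1e-9]

for label, s in slacks.items():
    print(label, "slack =", s)
```

Two constraints come out tight (the first inequality and the bound on x), which is what one expects at a vertex of a two-variable feasible region.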

sage.numerical.optimize.minimize(func, x0, gradient=None, hessian=None, algorithm='default', **args)<br />

This function is an interface to a variety of algorithms for computing the minimum of a function of several variables.<br />

INPUT:<br />

•func – Either a symbolic function or a Python function whose argument is a tuple with n components.

•x0 – Initial point for finding the minimum.

•gradient – Optional gradient function. This will be computed automatically for symbolic functions. For Python functions, it allows the use of algorithms requiring derivatives. It should accept a tuple of arguments and return a NumPy array containing the partial derivatives at that point.<br />

•hessian – Optional Hessian function. This will be computed automatically for symbolic functions. For Python functions, it allows the use of algorithms requiring derivatives. It should accept a tuple of arguments and return a NumPy array containing the second partial derivatives of the function.<br />

•algorithm – String specifying the algorithm to use. Options are 'default' (the simplex method for Python functions, bfgs for symbolic functions) and:<br />

–'simplex'<br />

–'powell'<br />

–'bfgs' – (Broyden-Fletcher-Goldfarb-Shanno) requires gradient<br />

–'cg' – (conjugate gradient) requires gradient<br />

–'ncg' – (Newton conjugate gradient) requires gradient and Hessian<br />

EXAMPLES:<br />
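To illustrate the shape of the func, gradient and hessian arguments for a Python function, here is a minimal sketch for a two-variable Rosenbrock function. It is plain Python and does not call Sage. Each callback takes a tuple, as the INPUT section describes; for simplicity the derivatives are returned as nested lists, whereas the description above asks for NumPy arrays, so in Sage one would presumably wrap the return values with `numpy.array`. The function `numeric_gradient` is a hypothetical helper used only to cross-check the hand-written gradient by central finite differences.

```python
def func(p):
    """Two-variable Rosenbrock function; p is a tuple (x, y)."""
    x, y = p
    return 100.0 * (y - x**2) ** 2 + (1.0 - x) ** 2

def gradient(p):
    """Partial derivatives (df/dx, df/dy) at p."""
    x, y = p
    return [-400.0 * x * (y - x**2) - 2.0 * (1.0 - x),
            200.0 * (y - x**2)]

def hessian(p):
    """Matrix of second partial derivatives at p."""
    x, y = p
    return [[1200.0 * x**2 - 400.0 * y + 2.0, -400.0 * x],
            [-400.0 * x, 200.0]]

def numeric_gradient(f, p, step=1e-6):
    """Central finite differences, used only to cross-check gradient()."""
    result = []
    for i in range(len(p)):
        forward = list(p)
        backward = list(p)
        forward[i] += step
        backward[i] -= step
        result.append((f(tuple(forward)) - f(tuple(backward))) / (2.0 * step))
    return result

point = (0.1, 0.3)
analytic = gradient(point)
numeric = numeric_gradient(func, point)
max_error = max(abs(a - n) for a, n in zip(analytic, numeric))
print(analytic, numeric, max_error)
```

A finite-difference comparison like this is a cheap way to catch sign or indexing mistakes in a hand-written gradient before passing it to an algorithm that relies on it, such as 'bfgs', 'cg' or 'ncg'.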

sage: vars=var('x y z')<br />

sage: f=100*(y-x^2)^2+(1-x)^2+100*(z-y^2)^2+(1-y)^2<br />

sage: minimize(f,[.1,.3,.4],disp=0)<br />

(1.00..., 1.00..., 1.00...)<br />

sage: minimize(f,[.1,.3,.4],algorithm="ncg",disp=0)<br />

(0.9999999..., 0.999999..., 0.999999...)<br />
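The minimizer reported above is easy to verify by hand, because f is a sum of squares: every term is non-negative and all four vanish at (1, 1, 1), so the minimum value is 0. The short sketch below, in plain Python rather than Sage (note `**` instead of Sage's `^` for powers), evaluates the same expression at the starting point and at the reported minimizer.

```python
def f(x, y, z):
    """Same expression as in the Sage example, written with Python's ** operator."""
    return (100.0 * (y - x**2) ** 2 + (1.0 - x) ** 2
            + 100.0 * (z - y**2) ** 2 + (1.0 - y) ** 2)

# Value at the initial point passed to minimize().
start_value = f(0.1, 0.3, 0.4)

# Value at the point the solvers converge to.
minimum_value = f(1.0, 1.0, 1.0)

print(start_value, minimum_value)
```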
