here - Joint Mathematics Meetings

Our heroes

Marc Kac (1914–1984) and Stephen O. Rice (1907–1986)

Real roots of algebraic equations (Kac, 1943)

$$f(t) = \xi_0 + \xi_1 t + \xi_2 t^2 + \cdots + \xi_{n-1} t^{n-1}$$

The coefficients are independent standard Gaussians, $\xi \sim N(0, I_{n\times n})$:

$$P\{\xi = (\xi_0, \dots, \xi_{n-1}) \in A\} = \int_A \frac{e^{-\|x\|^2/2}}{(2\pi)^{n/2}}\,dx$$

Theorem: Let $N_n$ be the number of real zeroes of $f$. Then

$$E\{N_n\} = \frac{4}{\pi}\int_0^1 \frac{\left[1 - n^2\left[x^{n-1}(1-x^2)/(1-x^{2n})\right]^2\right]^{1/2}}{1-x^2}\,dx$$
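As a sanity check on Kac's integral, the sketch below evaluates it numerically with a midpoint rule. It assumes the inner bracket carries the power $x^{n-1}$, which is the form that returns exactly one real root for the linear case $n = 2$. The function name and step count are illustrative choices, not part of the talk.

```python
import math

def kac_expected_real_roots(n, steps=20000):
    """Midpoint-rule approximation of Kac's integral for E{N_n}.

    n is the number of coefficients, so the polynomial has degree n - 1.
    """
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h                      # midpoint, never hits x = 1
        one_minus_x2 = 1.0 - x * x
        ratio = n * x ** (n - 1) * one_minus_x2 / (1.0 - x ** (2 * n))
        # max(0, .) guards against tiny negative values from rounding near x = 1
        total += math.sqrt(max(0.0, 1.0 - ratio * ratio)) / one_minus_x2
    return 4.0 / math.pi * total * h

if __name__ == "__main__":
    for n in (2, 3, 10, 100):
        print(n, kac_expected_real_roots(n))
```

For $n = 2$ the integrand reduces to $1/(1+x^2)$, so the integral is $\pi/4$ and the expected number of real roots is 1, as it must be for a random line.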

Zeroes of complex polynomials

$$f(z) = \xi_0 + a_1\xi_1 z + a_2\xi_2 z^2 + \cdots + a_{n-1}\xi_{n-1} z^{n-1}, \qquad z \in \mathbb{C}.$$

(Shiffman)

Thinking more generally:

1: $f : \mathbb{R}^N \to \mathbb{R}^N$, random:
$$E\{\#\{t \in \mathbb{R}^N : f(t) = u\}\} = \ ?$$
2: $f : M \to N$, random, $\dim(M) = \dim(N)$:
$$E\{\#\{p \in M : f(p) = q\}\} = \ ?$$
3: In another notation:
$$E\{\#\{f^{-1}(q)\}\} = \ ?$$
4: More generally: $f : M \to N$, $\dim(M) \ne \dim(N)$, $D \subset N$. In this case, typically,
$$\dim\left(f^{-1}(D)\right) = \dim(M) - \dim(N) + \dim(D),$$
and it is not clear what the corresponding question is.

The original (non-specific) Rice formula

◮ $U_u \equiv U_u(f, T) \stackrel{\Delta}{=} \#\{t \in T : f(t) = u,\ \dot f(t) > 0\}$
◮ Basic conditions on $f$: continuity and differentiability
◮ Plus (boring) side conditions to be met later
◮ Kac-Rice formula:

$$\begin{aligned}
E\{U_u\} &= \int_T \int_0^\infty y\, p_t(u, y)\,dy\,dt \\
&= \int_T p_t(u) \int_0^\infty y\, p_t(y \mid u)\,dy\,dt \\
&= \int_T p_t(u)\, E\left\{|\dot f(t)|\, 1_{(0,\infty)}(\dot f(t)) \,\middle|\, f(t) = u\right\}dt
\end{aligned}$$

where $p_t(x, y)$ is the joint density of $(f(t), \dot f(t))$, $p_t(u)$ is the probability density of $f(t)$, etc.
◮ $T = [0, T]$ is an interval. $M$ is a general set (e.g. a manifold).

The original (non-specific) Rice formula: The proof

◮ Take a (positive) approximate delta function, $\delta_\varepsilon$, supported on $[-\varepsilon, +\varepsilon]$, with $\int_{\mathbb{R}} \delta_\varepsilon(x)\,dx = 1$.
◮ Let $[t_1^l, t_1^u]$ be an interval around a single upcrossing on which $f$ increases through $[u-\varepsilon, u+\varepsilon]$. The substitution $x = f(t)$ gives

$$\begin{aligned}
1 = \int_{\mathbb{R}} \delta_\varepsilon(x-u)\,dx &= \int_{t_1^l}^{t_1^u} |\dot f(t)|\,\delta_\varepsilon(f(t)-u)\,1_{(0,\infty)}(\dot f(t))\,dt \\
&= \lim_{\varepsilon\to0} \int_{t_1^l}^{t_1^u} |\dot f(t)|\,\delta_\varepsilon(f(t)-u)\,1_{(0,\infty)}(\dot f(t))\,dt
\end{aligned}$$

◮ Summing over all upcrossings in $T$:

$$U_u(f,T) = \lim_{\varepsilon\to0} \int_T |\dot f(t)|\,\delta_\varepsilon(f(t)-u)\,1_{(0,\infty)}(\dot f(t))\,dt$$

The original (non-specific) Rice formula: The proof (continued)

◮ So far, everything is deterministic:

$$U_u(f,T) = \lim_{\varepsilon\to0} \int_T |\dot f(t)|\,\delta_\varepsilon(f(t)-u)\,1_{(0,\infty)}(\dot f(t))\,dt$$

◮ Take expectations, with some sleight of hand:

$$\begin{aligned}
E\{U_u(f,T)\} &= E\left\{\lim_{\varepsilon\to0} \int_T |\dot f(t)|\,\delta_\varepsilon(f(t)-u)\,1_{(0,\infty)}(\dot f(t))\,dt\right\} \\
&= \int_T \lim_{\varepsilon\to0} E\left\{|\dot f(t)|\,\delta_\varepsilon(f(t)-u)\,1_{(0,\infty)}(\dot f(t))\right\}dt \\
&= \int_T \lim_{\varepsilon\to0} \int_{x=-\infty}^{\infty} \int_{y=0}^{\infty} |y|\,\delta_\varepsilon(x-u)\,p_t(x,y)\,dx\,dy\,dt \\
&= \int_T \int_0^\infty |y|\,p_t(u,y)\,dy\,dt
\end{aligned}$$
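The deterministic counting identity used in the proof can be checked numerically. The sketch below assumes a box kernel for $\delta_\varepsilon$ (height $1/(2\varepsilon)$ on $[-\varepsilon, \varepsilon]$) and uses $f(t) = \sin t$ on $[0, 4\pi]$ as a hypothetical test function; neither choice comes from the slides.

```python
import math

def count_upcrossings_via_delta(f, df, u, a, b, eps=0.01, steps=200_000):
    """Midpoint approximation of the integral over [a, b] of
    |f'(t)| * delta_eps(f(t) - u) * 1{f'(t) > 0},
    with delta_eps the box kernel 1/(2*eps) on [-eps, eps]."""
    dt = (b - a) / steps
    total = 0.0
    for i in range(steps):
        t = a + (i + 0.5) * dt
        slope = df(t)
        # Only points with positive slope and f(t) within eps of u contribute.
        if slope > 0.0 and abs(f(t) - u) <= eps:
            total += slope / (2.0 * eps) * dt
    return total

# sin(t) crosses the level 0.5 upwards twice on [0, 4*pi].
approx = count_upcrossings_via_delta(math.sin, math.cos, u=0.5, a=0.0, b=4.0 * math.pi)

if __name__ == "__main__":
    print(approx)
```

Each upcrossing contributes an integral close to 1, so the result is close to the integer count without locating the crossings explicitly.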

Constructing Gaussian Processes

◮ A (separable) parameter space $M$.
◮ A finite or infinite set of functions $\varphi_1, \varphi_2, \dots : M \to \mathbb{R}$ satisfying
$$\sum_j \varphi_j^2(t) < \infty, \quad \text{for all } t \in M.$$
◮ A sequence $\xi_1, \xi_2, \dots$ of independent $N(0, 1)$ random variables.
◮ Define the random field $f : M \to \mathbb{R}$ by
$$f(t) = \sum_j \xi_j \varphi_j(t)$$
◮ Mean, covariance, and variance functions:
$$\mu(t) \stackrel{\Delta}{=} E\{f(t)\} = 0$$
$$C(s,t) \stackrel{\Delta}{=} E\{[f(s)-\mu(s)]\cdot[f(t)-\mu(t)]\} = \sum_j \varphi_j(s)\varphi_j(t)$$
$$\sigma^2(t) \stackrel{\Delta}{=} C(t,t) = \sum_j \varphi_j^2(t)$$
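The construction above translates directly into a sampler. The following is a minimal sketch with a hypothetical three-function basis on the real line; it compares a Monte Carlo estimate of $E\{f(s)f(t)\}$ with $\sum_j \varphi_j(s)\varphi_j(t)$. The basis, sample size, and seed are illustrative assumptions.

```python
import math
import random

def sample_field(phis, ts, rng):
    """Draw one realisation of f(t) = sum_j xi_j * phi_j(t) at the points ts."""
    xis = [rng.gauss(0.0, 1.0) for _ in phis]
    return [sum(xi * phi(t) for xi, phi in zip(xis, phis)) for t in ts]

def covariance(phis, s, t):
    """C(s, t) = sum_j phi_j(s) * phi_j(t)."""
    return sum(phi(s) * phi(t) for phi in phis)

# Hypothetical basis, chosen only for illustration.
phis = [math.cos, math.sin, lambda t: 0.5 * math.cos(2.0 * t)]

rng = random.Random(0)
s, t = 0.3, 1.1
n_samples = 20000
acc = 0.0
for _ in range(n_samples):
    fs, ft = sample_field(phis, [s, t], rng)
    acc += fs * ft
empirical = acc / n_samples

if __name__ == "__main__":
    print(empirical, covariance(phis, s, t))
```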

Existence of Gaussian processes

Theorem
◮ Let $M$ be a topological space.
◮ Let $C : M \times M \to \mathbb{R}$ be positive semi-definite.
◮ Then there exists a Gaussian process $f : M \to \mathbb{R}$ with mean zero and covariance function $C$.
◮ Furthermore, $f$ has a representation of the form $f(t) = \sum_j \xi_j \varphi_j(t)$, and if $f$ is a.s. continuous then the sum converges uniformly, a.s., on compacts.

Corollary
◮ If there is justice in the world (smoothness and summability),
$$\dot f(t) = \frac{\partial}{\partial t} f(t) = \sum_j \xi_j \dot\varphi_j(t),$$
and so if $f$ is Gaussian, so is $\dot f$. Here $\dot f$ is any derivative on a nice $M$.

Furthermore
◮ $E\{\dot f(s)\dot f(t)\} = E\left\{\sum_j \xi_j \dot\varphi_j(s) \sum_k \xi_k \dot\varphi_k(t)\right\} = \sum_j \dot\varphi_j(s)\dot\varphi_j(t)$
◮ $E\{f(s)\dot f(t)\} = E\left\{\sum_j \xi_j \varphi_j(s) \sum_k \xi_k \dot\varphi_k(t)\right\} = \sum_j \varphi_j(s)\dot\varphi_j(t)$

Example: The Brownian sheet on $\mathbb{R}^N$

$$E\{W(t)\} = 0, \qquad E\{W(s)W(t)\} = (s_1 \wedge t_1) \times \cdots \times (s_N \wedge t_N),$$
where $t = (t_1, \dots, t_N)$.

◮ Replace $j$ by $j = (j_1, \dots, j_N)$ $\Rightarrow$
$$\varphi_j(t) = 2^{N/2} \prod_{i=1}^N \frac{2}{(2j_i+1)\pi} \sin\left(\tfrac12 (2j_i+1)\pi t_i\right)$$
$$W(t) = \sum_{j_1}\cdots\sum_{j_N} \xi_{j_1,\dots,j_N}\,\varphi_{j_1,\dots,j_N}(t)$$
◮ $N = 1$: $W$ is standard Brownian motion. The corresponding expansion is due to Lévy, and the corresponding RKHS is known as Cameron-Martin space.
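One way to see that this basis reproduces the Brownian sheet covariance is to sum the series numerically. The sketch below assumes the indices $j_i$ run over $0, 1, 2, \dots$ and that the points lie in $[0,1]^N$; the truncation level is an arbitrary choice. Because the basis factorises over coordinates, the multi-index sum is computed as a product of one-dimensional sums.

```python
import math

def phi(j, t):
    """One-dimensional factor of the basis function, for index j >= 0."""
    c = (2 * j + 1) * math.pi
    return math.sqrt(2.0) * (2.0 / c) * math.sin(0.5 * c * t)

def truncated_cov_1d(s, t, terms=2000):
    """Partial sum of sum_j phi_j(s) * phi_j(t); should approach min(s, t)."""
    return sum(phi(j, s) * phi(j, t) for j in range(terms))

def truncated_sheet_cov(s, t, terms=2000):
    """Product over coordinates; should approach prod_i min(s_i, t_i)."""
    out = 1.0
    for si, ti in zip(s, t):
        out *= truncated_cov_1d(si, ti, terms)
    return out

if __name__ == "__main__":
    print(truncated_cov_1d(0.3, 0.7))                      # close to 0.3
    print(truncated_sheet_cov((0.3, 0.5), (0.7, 0.2)))     # close to 0.3 * 0.2
```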

Constant variance Gaussian processes

◮ We know that
  ◮ $f(t) = \sum_j \xi_j \varphi_j(t)$
  ◮ $\dot f(t) = \sum_j \xi_j \dot\varphi_j(t)$
  ◮ $E\{\dot f(s)\dot f(t)\} = \sum_j \dot\varphi_j(s)\dot\varphi_j(t)$
  ◮ $E\{f(s)\dot f(t)\} = \sum_j \varphi_j(s)\dot\varphi_j(t)$
◮ Thus, if
$$\sigma^2(t) = E\{f^2(t)\} = \sum_j \varphi_j^2(t)$$
is a constant, say 1, so that $\|\{\varphi_j(t)\}\|_{\ell_2} = 1$,
◮ then
$$E\{f(t)\dot f(t)\} = \sum_j \varphi_j(t)\dot\varphi_j(t) = \frac12 \sum_j \frac{\partial}{\partial t}\varphi_j^2(t) = \frac12 \frac{\partial}{\partial t}\sum_j \varphi_j^2(t) = 0$$
◮ $\Rightarrow$ $f(t)$ and its derivative $\dot f(t)$ are uncorrelated and hence, being jointly Gaussian, INDEPENDENT.
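A small worked example may make the cancellation concrete. The two-term process below is an illustrative choice, not one used in the talk; $\omega > 0$ is an assumed frequency parameter.

```latex
% Illustrative example (an assumption, not from the slides):
% a two-term expansion with constant variance.
\begin{aligned}
f(t) &= \xi_1 \cos(\omega t) + \xi_2 \sin(\omega t), \\
\sigma^2(t) &= \cos^2(\omega t) + \sin^2(\omega t) = 1, \\
E\{f(t)\dot f(t)\} &= -\omega\cos(\omega t)\sin(\omega t) + \omega\sin(\omega t)\cos(\omega t) = 0, \\
E\{[\dot f(t)]^2\} &= \omega^2\sin^2(\omega t) + \omega^2\cos^2(\omega t) = \omega^2 .
\end{aligned}
```

So at each fixed $t$ the pair $(f(t), \dot f(t))$ is a pair of independent Gaussians with variances $1$ and $\omega^2$.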

Constant variance Gaussian processes and Kac-Rice

◮ Generic Kac-Rice formula:
$$E\{U_u\} = \int_T p_t(u)\, E\left\{|\dot f(t)|\, 1_{(0,\infty)}(\dot f(t)) \,\middle|\, f(t) = u\right\}dt$$
◮ But $f(t)$ and $\dot f(t)$ are independent!
◮ Notation:
$$1 = \sigma^2(t), \qquad \lambda(t) \stackrel{\Delta}{=} E\{[\dot f(t)]^2\}$$
◮ Rice formula ($+\varepsilon$):
$$E\{U_u\} = \frac{e^{-u^2/2}}{2\pi} \int_T [\lambda(t)]^{1/2}\,dt.$$
◮ If $\lambda(t) \equiv \lambda$, we have the Rice formula
$$E\{U_u\} = \frac{T\lambda^{1/2}}{2\pi}\, e^{-u^2/2}$$
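The constant-$\lambda$ Rice formula can be compared with simulation. The sketch below uses the hypothetical process $f(t) = \xi_1\cos(\omega t) + \xi_2\sin(\omega t)$, which has unit variance and $\lambda = \omega^2$, over one full period $T = 2\pi/\omega$. Upcrossings are counted on a grid, so this is an approximation under assumed grid and sample sizes rather than an exact computation.

```python
import math
import random

def count_upcrossings(path, u):
    """Number of grid steps on which the path moves from below u to at or above u."""
    return sum(1 for a, b in zip(path, path[1:]) if a < u <= b)

def simulate_mean_upcrossings(u, omega=1.0, n_paths=4000, n_grid=200, seed=0):
    """Monte Carlo estimate of E{U_u} over one period for the cosine process."""
    rng = random.Random(seed)
    period = 2.0 * math.pi / omega
    ts = [period * i / n_grid for i in range(n_grid + 1)]
    cos_t = [math.cos(omega * t) for t in ts]
    sin_t = [math.sin(omega * t) for t in ts]
    total = 0
    for _ in range(n_paths):
        x1, x2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        path = [x1 * c + x2 * s for c, s in zip(cos_t, sin_t)]
        total += count_upcrossings(path, u)
    return total / n_paths

def rice_upcrossings(u, lam, T):
    """Rice formula for constant lambda: T * sqrt(lam) / (2*pi) * exp(-u^2 / 2)."""
    return T * math.sqrt(lam) / (2.0 * math.pi) * math.exp(-u * u / 2.0)

if __name__ == "__main__":
    u = 1.0
    print(simulate_mean_upcrossings(u), rice_upcrossings(u, 1.0, 2.0 * math.pi))
```

For this process the answer is also available by hand: writing $f(t) = R\cos(\omega t - \theta)$, there is exactly one upcrossing of $u > 0$ per period when $R > u$, and $P\{R > u\} = e^{-u^2/2}$, which matches the formula with $T\lambda^{1/2} = 2\pi$.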

Gaussian Kac-Rice with no simplification

$$E\{U_u(T)\} = \int_T \lambda^{1/2}(t)\,\sigma^{-1}(t)\,[1-\mu^2(t)]^{1/2}\,\varphi\!\left(\frac{m(t)}{\sigma(t)}\right) \times \left[2\varphi(\eta(t)) + 2\eta(t)\left[2\Phi(\eta(t)) - 1\right]\right]dt$$

where

$$m(t) = E\{f(t)\}, \qquad \sigma^2(t) = E\{[f(t)]^2\}, \qquad \lambda(t) = E\{[\dot f(t)]^2\},$$
$$\mu(t) = \frac{E\{[f(t)-m(t)]\cdot[\dot f(t)]\}}{\lambda^{1/2}(t)\,\sigma(t)}, \qquad \eta(t) = \frac{\dot m(t) - \lambda^{1/2}(t)\mu(t)m(t)/\sigma(t)}{\lambda^{1/2}(t)\,[1-\mu^2(t)]^{1/2}}$$

◮ Very important fact: Long term covariances do not appear in any of these formulae.

Some pertinent thoughts

◮ Real roots of Gaussian polynomials: The original Kac result now makes sense:
$$f(t) = \xi_0 + \xi_1 t + \cdots + \xi_{n-1} t^{n-1}$$
$$E\{N_n\} = \frac{4}{\pi}\int_0^1 \frac{\left[1 - n^2\left[x^{n-1}(1-x^2)/(1-x^{2n})\right]^2\right]^{1/2}}{1-x^2}\,dx$$
◮ Downcrossings, crossings, critical points: Critical points of different kinds are just zeroes of $\dot f$ with different side conditions. But now second derivatives appear, so calculations will be harder. ($f(t)$ and $f''(t)$ are not independent.)
◮ Weakening the conditions: In the Gaussian case, only absolute continuity and the finiteness of $\lambda$ are needed. (Itô, Ylvisaker)
◮ Higher moments?
◮ Fixed points of vector valued processes?

The Kac-Rice “Metatheorem”

◮ The setup:
$$f = (f^1, \dots, f^N) : M \subset \mathbb{R}^N \to \mathbb{R}^N, \qquad g = (g^1, \dots, g^K) : M \subset \mathbb{R}^N \to \mathbb{R}^K$$
◮ Number of points:
$$N_u \equiv N_u(M) \equiv N_u(f, g : M, B) \stackrel{\Delta}{=} \#\{t \in M : f(t) = u,\ g(t) \in B\}.$$
◮ The “metatheorem”, or generalised Kac-Rice:
$$\begin{aligned}
E\{N_u\} &= \int_M \int_{\mathbb{R}^D} |\det\nabla y|\, 1_B(v)\, p_t(u, \nabla y, v)\, d(\nabla y)\, dv\, dt \\
&= \int_M E\left\{|\det\nabla f(t)|\, 1_B(g(t)) \,\middle|\, f(t) = u\right\} p_t(u)\, dt,
\end{aligned}$$
where $p_t(x, \nabla y, v)$ is the joint density of $(f_t, \nabla f_t, g_t)$ and
$$(\nabla f)(t) \equiv \nabla f(t) \equiv \left(f^i_j(t)\right)_{i,j=1,\dots,N} \equiv \left(\frac{\partial f^i(t)}{\partial t_j}\right)_{i,j=1,\dots,N}.$$

The Kac-Rice Conditions (the fine print).

Let $f$, $g$, $M$ and $B$ be as above, with the additional assumption that the boundaries of $M$ and $B$ have finite $N-1$ and $K-1$ dimensional measures, respectively. Furthermore, assume that the following conditions are satisfied for some $u \in \mathbb{R}^N$:

(a) All components of $f$, $\nabla f$, and $g$ are a.s. continuous and have finite variances (over $M$).
(b) For all $t \in M$, the marginal densities $p_t(x)$ of $f(t)$ (implicitly assumed to exist) are continuous at $x = u$.
(c) The conditional densities $p_t(x \mid \nabla f(t), g(t))$ of $f(t)$ given $g(t)$ and $\nabla f(t)$ (implicitly assumed to exist) are bounded above and continuous at $x = u$, uniformly in $t \in M$.
(d) The conditional densities $p_t(z \mid f(t) = x)$ of $\det\nabla f(t)$ given $f(t) = x$ are continuous for $z$ and $x$ in neighbourhoods of $0$ and $u$, respectively, uniformly in $t \in M$.
(e) The conditional densities $p_t(z \mid f(t) = x)$ of $g(t)$ given $f(t) = x$ are continuous for all $z$ and for $x$ in a neighbourhood of $u$, uniformly in $t \in M$.
(f) The following moment condition holds:
$$\sup_{t\in M}\ \max_{1\le i,j\le N} E\left\{\left|f^i_j(t)\right|^N\right\} < \infty.$$
(g) The moduli of continuity of each of the components of $f$, $\nabla f$, and $g$ satisfy
$$P\{\omega(\eta) > \varepsilon\} = o\left(\eta^N\right), \quad \text{as } \eta \downarrow 0,$$
for any $\varepsilon > 0$.

Higher (factorial) moments

◮ Factorial notation:
$$(x)_k \stackrel{\Delta}{=} x(x-1)\cdots(x-k+1).$$
◮ Kac-Rice (again?):
$$\begin{aligned}
E\{(N_u)_k\} &= \int_{M^k} E\left\{\prod_{j=1}^k |\det\nabla f(t_j)|\, 1_B(g(t_j)) \,\middle|\, \tilde f(\tilde t) = \tilde u\right\} p_{\tilde t}(\tilde u)\, d\tilde t \\
&= \int_{M^k} \int_{\mathbb{R}^{kD}} \prod_{j=1}^k |\det D_j|\, 1_B(v_j)\, p_{\tilde t}(\tilde u, \tilde D, \tilde v)\, d\tilde D\, d\tilde v\, d\tilde t,
\end{aligned}$$
where
$$M^k = \{\tilde t = (t_1, \dots, t_k) : t_j \in M,\ 1 \le j \le k\}$$
$$\tilde f(\tilde t) = (f(t_1), \dots, f(t_k)) : M^k \to \mathbb{R}^{Nk}$$
$$\tilde g(\tilde t) = (g(t_1), \dots, g(t_k)) : M^k \to \mathbb{R}^{Kk}$$
$$D = N(N+1)/2 + K$$

The Gaussian case: What can/can’t be explicitly computed

◮ General mean and covariance functions
◮ Isotropic fields ($N = 2, 3$)
◮ Zero mean and constant variance (via the “induced metric”)
◮ Gaussian related processes
◮ Perturbed Gaussian processes (“approximately”)
◮ $E\{\text{No. of critical points of index } k \text{ above the level } u\}$
◮ $E\left\{\sum_{k=1}^N (-1)^k\,(\text{No. of critical points of index } k \text{ above } u)\right\}$. Useful for Morse theory.

The Gaussian-related case

$$f(t) = (f_1(t), \dots, f_k(t)) : T \to \mathbb{R}^k, \qquad F : \mathbb{R}^k \to \mathbb{R}^d$$
$$g(t) \stackrel{\Delta}{=} F(f(t)) = F(f_1(t), \dots, f_k(t)),$$
with, for example,
$$F(x) = \sum_{i=1}^k x_i^2, \qquad \frac{x_1\sqrt{k-1}}{\left(\sum_{i=2}^k x_i^2\right)^{1/2}}, \qquad \frac{m\sum_{i=1}^n x_i^2}{n\sum_{i=n+1}^{n+m} x_i^2},$$
i.e. $\chi^2$ fields with $k$ degrees of freedom, $T$ fields with $k-1$ degrees of freedom, and $F$ fields with $n$ and $m$ degrees of freedom.

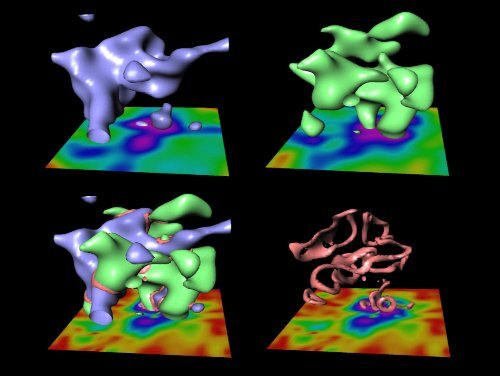

The Gaussian Kinematic Formula (GKF)

See Jonathan’s lecture.

The perturbed-Gaussian case

◮ A physics approach:
$$\varphi(x) = \varphi_G(x)\left[1 + \sum_{n=3}^\infty \mathrm{Tr}\left[E_G\{h_n(X)\}\cdot h_n(x)\right]\right]$$
where $\varphi_G$ is iid Gaussian and
$$h_n(x) \stackrel{\Delta}{=} (-1)^n \frac{1}{\varphi_G(x)} \frac{\partial^n \varphi_G(x)}{\partial x^n}$$
are Hermite tensors of rank $n$, with coefficients constructed from the moments $E_G\{h_n(X)\}$.
◮ A statistical (Gaussian related) approach:
$$f(t) = f_G(t) + \sum_{j=1}^J p_j\,\varepsilon^j f_j^{GR}(t)$$

Applications I: Exceedence probabilities via level crossings

$$\begin{aligned}
P\left\{\sup_{0\le t\le T} f(t) \ge u\right\} &= P\{f(0) \ge u\} + P\{f(0) < u,\ N_u \ge 1\} \\
&\le P\{f(0) \ge u\} + E\{N_u\} \\
&= E\{\#\text{ of connected components in } A_u(T)\}
\end{aligned}$$

◮ Note: Nothing is Gaussian here!
◮ For large $u$, the inequality is usually a good approximation.
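To see how tight the bound can be, here is a sketch for a case where the left-hand side is known exactly. It assumes the hypothetical process $f(t) = \xi_1\cos t + \xi_2\sin t$ on one full period, reads $N_u$ as the number of upcrossings, and uses the constant-$\lambda$ Rice formula for $E\{N_u\}$. For this process $\sup f = (\xi_1^2 + \xi_2^2)^{1/2}$, so $P\{\sup f \ge u\} = e^{-u^2/2}$ for $u > 0$.

```python
import math

def gaussian_tail(u):
    """P{N(0,1) >= u}."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))

def exact_sup_probability(u):
    """P{sup f >= u} over one full period of xi1*cos(t) + xi2*sin(t), for u > 0."""
    return math.exp(-u * u / 2.0)

def crossing_bound(u, T=2.0 * math.pi, lam=1.0):
    """P{f(0) >= u} + E{number of upcrossings of u in [0, T]}."""
    rice = T * math.sqrt(lam) / (2.0 * math.pi) * math.exp(-u * u / 2.0)
    return gaussian_tail(u) + rice

def ratio(u):
    """Upper bound divided by the exact probability; tends to 1 as u grows."""
    return crossing_bound(u) / exact_sup_probability(u)

if __name__ == "__main__":
    for u in (1.0, 2.0, 3.0, 5.0):
        print(u, exact_sup_probability(u), crossing_bound(u), ratio(u))
```

In this example the excess of the bound over the truth is exactly the Gaussian tail $P\{f(0) \ge u\}$, which is of smaller order than $e^{-u^2/2}$ as $u \to \infty$.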

Applications II: Local maxima on the line

◮ Number of local maxima above the level $u$:
$$M_u(T) = \#\left\{t \in [0,T] : \dot f(t) = 0,\ \ddot f(t) < 0,\ f(t) \ge u\right\}$$
◮ $f$ stationary, mean 0, variance 1, $\lambda_4 = E\{[f''(t)]^2\}$:
$$E\{M_{-\infty}(T)\} = \frac{T\lambda_4^{1/2}}{2\pi\lambda_2^{1/2}}$$
◮ Similarly, with $\Delta = \lambda_4 - \lambda_2^2$ and $\lambda_2 \equiv \lambda$,
$$E\{M_u(T)\} = \frac{T\lambda_4^{1/2}}{2\pi\lambda_2^{1/2}}\,\Psi\!\left(\frac{\lambda_4^{1/2}u}{\Delta^{1/2}}\right) + \frac{T\lambda_2^{1/2}}{\sqrt{2\pi}}\,\varphi(u)\,\Phi\!\left(\frac{\lambda_2 u}{\Delta^{1/2}}\right),$$
◮ An easy computation:
$$\lim_{u\to\infty} \frac{E\{M_u(T)\}}{E\{N_u(T)\}} = 1,$$
which holds in very wide generality.
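The limit can be checked numerically. The sketch below assumes $\varphi$ and $\Phi$ are the standard normal density and distribution function, $\Psi = 1 - \Phi$ is the tail, both terms of $E\{M_u(T)\}$ enter with a plus sign (as is needed for the count to stay positive at high levels), and $N_u$ in the ratio is the upcrossing count from the Rice formula. The spectral moments $\lambda_2 = 1$, $\lambda_4 = 3$ are an illustrative choice, corresponding to the covariance $e^{-t^2/2}$.

```python
import math

def normal_pdf(x):
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)

def normal_cdf(x):
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def normal_tail(x):
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def expected_maxima_above(u, T, lam2, lam4):
    """Two-term expression for E{M_u(T)} with Delta = lam4 - lam2^2 > 0."""
    delta = lam4 - lam2 * lam2
    first = (T * math.sqrt(lam4) / (2.0 * math.pi * math.sqrt(lam2))
             * normal_tail(math.sqrt(lam4) * u / math.sqrt(delta)))
    second = (T * math.sqrt(lam2) / math.sqrt(2.0 * math.pi)
              * normal_pdf(u) * normal_cdf(lam2 * u / math.sqrt(delta)))
    return first + second

def expected_upcrossings(u, T, lam2):
    """Rice formula with constant lambda = lam2."""
    return T * math.sqrt(lam2) / (2.0 * math.pi) * math.exp(-u * u / 2.0)

if __name__ == "__main__":
    T, lam2, lam4 = 1.0, 1.0, 3.0
    for u in (0.0, 2.0, 5.0):
        print(u, expected_maxima_above(u, T, lam2, lam4) / expected_upcrossings(u, T, lam2))
```

Letting $u \to -\infty$ in the same function recovers $E\{M_{-\infty}(T)\} = T\lambda_4^{1/2}/(2\pi\lambda_2^{1/2})$, since the tail factor tends to 1 and the second term vanishes.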

Applications III: Local maxima on $M \subset \mathbb{R}^N$

◮ $M_u(M)$: number of local maxima on $M$ above the level $u$
◮ $N = 2$, $f$ isotropic on the unit square:
$$E\{M_{-\infty}\} = \frac{1}{6\pi\sqrt{3}}\,\frac{\lambda_4}{\lambda_2}$$
◮ $N = 2$, $f$ stationary on the unit square:
$$E\{M_{-\infty}\} = \frac{1}{2\pi^2}\,\frac{d_1}{|\Lambda|^{1/2}}\,G(-d_1/d_2)$$
where $\Lambda$ is the covariance matrix of the first order derivatives of $f$, $d_1, d_2$ are the eigenvalues of the covariance matrix of the second order derivatives, and $G$ involves first and second order elliptic integrals.
◮ $N = 2$, $f$ isotropic on the unit square:
$$E\{M_u\} = \ ?\,?\,?$$
◮ Is there a replacement for
$$\lim_{u\to\infty} \frac{E\{M_u(T)\}}{E\{N_u(T)\}} = 1\ ?$$

Applications IV: Longuet-Higgins and oceanography

◮ There are precise computations for the expected numbers of specular points, mainly by M.S. Longuet-Higgins, 1948–2010.

(Mark Dennis; M.S. Longuet-Higgins, 1925–)

Applications V: Higher moments and complex polynomials

$$f(z) = \xi_0 + a_1\xi_1 z + a_2\xi_2 z^2 + \cdots + a_{n-1}\xi_{n-1} z^{n-1}, \qquad z \in \mathbb{C}.$$

◮ Means tell us where we expect the roots to be, but variances are needed to give concentration information.

(Balint Virag)

Applications VI: Poisson limits and Slepian models

Theorem
1: Sequences of increasingly rare events, such as the existence of high level local maxima in $N$ dimensions or level crossings in 1 dimension, looked at over long time periods or large regions so that a few of them still occur, have an asymptotic Poisson distribution as long as dependence in time or space is not too strong.
2: The normalisations and the parameters of the limiting Poisson depend only on the expected number of events in a given region or time interval.

Theorem
If $f$ is stationary and ergodic (although less will do), then
$$P\{f(\tau) \in A \mid \tau \text{ is a local maximum of } f\} = \frac{E\{\#\{t \in B : t \text{ is a local maximum of } f \text{ and } f(t) \in A\}\}}{E\{\#\{t \in B : t \text{ is a local maximum of } f\}\}}$$
where $B$ is any ball.

Applications VII: Eigenvalues of random matrices

◮ $A$ is an $n \times n$ matrix.
◮ Define
$$f_A(t) \stackrel{\Delta}{=} \langle At, t\rangle, \qquad t \in M.$$
If $A$ is random, then $f_A$ is a random field. If $A$ is Gaussian (i.e. has Gaussian components) then $f_A$ is Gaussian.
◮ Algebraic fact: If $A$ has no repeated eigenvalues, there are exactly $2n$ critical points of $f_A$, which occur at $\pm$ the eigenvectors of $A$. The values of $f_A$ at the critical points are the eigenvalues of $A$.
◮ Finding the maximum eigenvalue:
$$\lambda_{\max}(A) = \sup_{t\in S^{n-1}} f_A(t)$$
◮ (Some) random matrix problems are equivalent to random field problems, and vice versa.
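The identity $\lambda_{\max}(A) = \sup_{t \in S^{n-1}} f_A(t)$ is easy to check in the smallest case. The sketch below assumes $A$ is a symmetric $2 \times 2$ matrix with Gaussian entries (symmetry is an assumption made here so that the eigenvalues are real), parametrises the unit circle by an angle, and compares a grid search with the closed-form largest eigenvalue.

```python
import math
import random

def quadratic_form(a, t):
    """f_A(t) = <A t, t> for a 2x2 matrix a (nested lists) and t in R^2."""
    at0 = a[0][0] * t[0] + a[0][1] * t[1]
    at1 = a[1][0] * t[0] + a[1][1] * t[1]
    return at0 * t[0] + at1 * t[1]

def sup_on_circle(a, steps=100_000):
    """Grid approximation of the supremum of f_A over the unit circle S^1."""
    best = -math.inf
    for i in range(steps):
        theta = 2.0 * math.pi * i / steps
        best = max(best, quadratic_form(a, (math.cos(theta), math.sin(theta))))
    return best

def max_eigenvalue_symmetric_2x2(a):
    """Largest eigenvalue of a symmetric 2x2 matrix in closed form."""
    mean = 0.5 * (a[0][0] + a[1][1])
    radius = math.hypot(0.5 * (a[0][0] - a[1][1]), a[0][1])
    return mean + radius

# A random symmetric matrix with Gaussian entries (illustrative seed).
rng = random.Random(0)
off_diag = rng.gauss(0.0, 1.0)
A = [[rng.gauss(0.0, 1.0), off_diag], [off_diag, rng.gauss(0.0, 1.0)]]

if __name__ == "__main__":
    print(sup_on_circle(A), max_eigenvalue_symmetric_2x2(A))
```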

Appendix I: The canonical Gaussian process

◮ Consider $f(t) = \sum_{j=1}^l \xi_j \varphi_j(t)$
◮ $\sigma^2(t) \equiv 1 \Rightarrow \tilde\varphi(t) = (\varphi_1(t), \dots, \varphi_l(t)) \in S^{l-1}$.
◮ A crucial mapping
◮ Define a new Gaussian process $\tilde f$ on $\tilde\varphi(M)$:
$$\tilde f(x) = f\left(\tilde\varphi^{-1}(x)\right),$$
$$\begin{aligned}
E\{\tilde f(x)\tilde f(y)\} &= E\left\{f\left(\tilde\varphi^{-1}(x)\right) f\left(\tilde\varphi^{-1}(y)\right)\right\} \\
&= \sum_j \varphi_j\left(\tilde\varphi^{-1}(x)\right)\varphi_j\left(\tilde\varphi^{-1}(y)\right) \\
&= \sum_j x_j y_j = \langle x, y\rangle
\end{aligned}$$

The canonical Gaussian process on $S^{l-1}$

1: It has mean zero and covariance
$$E\{f(s)f(t)\} = \langle s, t\rangle$$
for $s, t \in S^{l-1}$.
2: It can be realised as
$$f(t) = \sum_{j=1}^l t_j \xi_j.$$
3: It is stationary and isotropic, since the covariance is a function of only the (geodesic) distance between $s$ and $t$.

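Exceedence probabilities for canonical process: $M \subset S^{l-1}$

$$\begin{aligned}
P\left\{\sup_{t\in M} f_t \ge u\right\} &= \int_0^\infty P\left\{\sup_{t\in M} f_t \ge u \,\middle|\, |\xi| = r\right\} P_{|\xi|}(dr) \\
&= \int_0^\infty P\left\{\sup_{t\in M} \langle\xi, t\rangle \ge u \,\middle|\, |\xi| = r\right\} P_{|\xi|}(dr) \\
&= \int_u^\infty P\left\{\sup_{t\in M} \langle\xi, t\rangle \ge u \,\middle|\, |\xi| = r\right\} P_{|\xi|}(dr) \\
&= \int_u^\infty P\left\{\sup_{t\in M} \langle\xi/r, t\rangle \ge u/r \,\middle|\, |\xi| = r\right\} P_{|\xi|}(dr) \\
&= \int_u^\infty P\left\{\sup_{t\in M} \langle U, t\rangle \ge u/r\right\} P_{|\xi|}(dr)
\end{aligned}$$
where $U$ is uniform on $S^{l-1}$.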

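◮ We need
$$P\Big\{ \sup_{t\in M} \langle U, t\rangle \ge u/r \Big\}$$
◮ Working with tubes: The tube of radius $\rho$ around a closed set $M \subset S^{l-1}$ is
$$
\begin{aligned}
\mathrm{Tube}(M, \rho) &= \big\{ t \in S^{l-1} : \tau(t, M) \le \rho \big\} \\
&= \big\{ t \in S^{l-1} : \exists\, s \in M \text{ such that } \langle s, t\rangle \ge \cos(\rho) \big\} \\
&= \Big\{ t \in S^{l-1} : \sup_{s\in M} \langle s, t\rangle \ge \cos(\rho) \Big\},
\end{aligned}
$$
where $\tau$ is geodesic distance on the sphere.
◮ And so....
$$P\Big\{ \sup_{t\in M} f_t \ge u \Big\} = \int_u^\infty \eta_l\big( \mathrm{Tube}(M, \cos^{-1}(u/r)) \big)\, P_{|\xi|}(dr),$$
with $\eta_l$ the law of $U$, i.e. the uniform probability measure on $S^{l-1}$, and geometry has entered the picture, in a serious fashion!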
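The equivalent descriptions of the tube can be compared numerically. This is a sketch under stated assumptions: $M$ is taken to be a finite set of points on $S^2$ (an illustrative choice), the helper names are hypothetical, and the single-point case is compared with the classical spherical-cap fraction $(1 - \cos\rho)/2$ on $S^2$.

```python
import numpy as np

rng = np.random.default_rng(2)

def uniform_sphere(n_points, l, rng):
    """Draw points uniformly on S^{l-1} by normalising Gaussian vectors."""
    x = rng.standard_normal((n_points, l))
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def in_tube_by_distance(t, M, rho):
    """Membership via geodesic distance: tau(t, M) <= rho."""
    cosines = np.clip(t @ M.T, -1.0, 1.0)
    geodesic = np.arccos(cosines)  # tau(t, s) for every s in M
    return geodesic.min(axis=1) <= rho

def in_tube_by_inner_product(t, M, rho):
    """Membership via sup_{s in M} <s, t> >= cos(rho)."""
    return (t @ M.T).max(axis=1) >= np.cos(rho)

rho = 0.4
M = uniform_sphere(6, 3, rng)        # illustrative finite M on S^2
U = uniform_sphere(100_000, 3, rng)  # samples of the uniform vector U

same_sets = bool(np.array_equal(
    in_tube_by_distance(U, M, rho), in_tube_by_inner_product(U, M, rho)
))

# For M a single point on S^2 the tube is a spherical cap.
north_pole = np.array([[0.0, 0.0, 1.0]])
cap_fraction = float(in_tube_by_inner_product(U, north_pole, rho).mean())
exact_cap = (1.0 - np.cos(rho)) / 2.0
```

The Monte Carlo fraction `cap_fraction` estimates $\eta_3(\mathrm{Tube}(\{s\}, \rho))$, the quantity that enters the integral above with $\rho = \cos^{-1}(u/r)$.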

Appendix II: Stationary and isotropic fields
◮ Definition: $M$ has a group structure, $\mu(t) = \text{const}$ and $C(s, t) = C(s - t)$.
◮ Gaussian case: (Weak) stationarity also implies strong stationarity.
◮ $M = \mathbb{R}^N$: $C : \mathbb{R}^N \to \mathbb{R}$ is non-negative definite $\iff$ there exists a finite measure $\nu$ such that
$$C(t) = \int_{\mathbb{R}^N} e^{i\langle t, \lambda\rangle}\, \nu(d\lambda).$$
$\nu$ is called the spectral measure and, since $C$ is real, must be symmetric, i.e. $\nu(A) = \nu(-A)$ for all $A \in \mathcal{B}^N$.
◮ Spectral moments
$$\lambda_{i_1 \dots i_N} \stackrel{\Delta}{=} \int_{\mathbb{R}^N} \lambda_1^{i_1} \cdots \lambda_N^{i_N}\, \nu(d\lambda)$$
$\nu$ is symmetric $\Rightarrow$ odd ordered spectral moments are zero.

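◮ Elementary considerations give
$$E\left\{ \frac{\partial^k f(s)}{\partial s_{i_1} \cdots \partial s_{i_k}} \, \frac{\partial^k f(t)}{\partial t_{i_1} \cdots \partial t_{i_k}} \right\} = \frac{\partial^{2k} C(s, t)}{\partial s_{i_1} \partial t_{i_1} \cdots \partial s_{i_k} \partial t_{i_k}} .$$
◮ When $f$ is stationary, and $\alpha, \beta, \gamma, \delta \in \{0, 1, 2, \dots\}$, then
$$
\begin{aligned}
E\left\{ \frac{\partial^{\alpha+\beta} f(t)}{\partial^\alpha t_i\, \partial^\beta t_j} \, \frac{\partial^{\gamma+\delta} f(t)}{\partial^\gamma t_k\, \partial^\delta t_l} \right\}
&= (-1)^{\alpha+\beta} \left. \frac{\partial^{\alpha+\beta+\gamma+\delta}}{\partial^\alpha t_i\, \partial^\beta t_j\, \partial^\gamma t_k\, \partial^\delta t_l} C(t) \right|_{t=0} \\
&= (-1)^{\alpha+\beta}\, i^{\alpha+\beta+\gamma+\delta} \int_{\mathbb{R}^N} \lambda_i^\alpha \lambda_j^\beta \lambda_k^\gamma \lambda_l^\delta \, \nu(d\lambda).
\end{aligned}
$$
◮ Write $f_j = \partial f / \partial t_j$, $f_{ij} = \partial^2 f / \partial t_i \partial t_j$. Then
$f(t)$ and $f_j(t)$ are uncorrelated,
$f_i(t)$ and $f_{jk}(t)$ are uncorrelated.
◮ Isotropy ($C(t) = C(\|t\|)$) $\Rightarrow$ $\nu$ is spherically symmetric $\Rightarrow$
$$E\{ f_i(t) f_j(t) \} = -E\{ f(t) f_{ij}(t) \} = \lambda\, \delta_{ij},$$
where $\lambda$ is the common second-order spectral moment.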
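A one-dimensional worked example may make the spectral-moment identities concrete. It is only a sketch: the squared-exponential covariance $C(t) = e^{-t^2/2}$, whose spectral measure is the standard normal law, is an assumption chosen for illustration and does not come from the slides.

```python
import numpy as np

def C(t):
    """Illustrative stationary covariance on R (an assumed example)."""
    return np.exp(-t ** 2 / 2.0)

# Spectral measure nu of C: the N(0, 1) law, discretised on a fine grid.
lam = np.linspace(-12.0, 12.0, 240_001)
dlam = lam[1] - lam[0]
density = np.exp(-lam ** 2 / 2.0) / np.sqrt(2.0 * np.pi)

def spectral_integral(g):
    """Riemann-sum approximation of the integral of g against nu."""
    return float(np.sum(g * density) * dlam)

first_moment = spectral_integral(lam)        # odd order: vanishes by symmetry
second_moment = spectral_integral(lam ** 2)  # equals E{f'(t)^2}

# Central finite differences for C'(0) and C''(0).
h = 1e-3
C_prime_0 = float((C(h) - C(-h)) / (2.0 * h))
C_second_0 = float((C(h) - 2.0 * C(0.0) + C(-h)) / h ** 2)

# Spectral representation at one point: C(t0) = int cos(t0 * lam) nu(dlam).
t0 = 0.7
bochner_value = spectral_integral(np.cos(t0 * lam))
```

Here `C_prime_0` is zero, matching the statement that $f(t)$ and $f_j(t)$ are uncorrelated, while `second_moment` agrees with `-C_second_0`, the case $\alpha = \gamma = 1$, $\beta = \delta = 0$ of the displayed formula.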

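Appendix III: Regularity of Gaussian processes
◮ The canonical metric, $d$
$$d(s, t) \stackrel{\Delta}{=} \Big[ E\big\{ \big( f(s) - f(t) \big)^2 \big\} \Big]^{1/2}.$$
A ball of radius $\varepsilon$ and centered at $t \in M$ is denoted by
$$B_d(t, \varepsilon) \stackrel{\Delta}{=} \{ s \in M : d(s, t) \le \varepsilon \}.$$
◮ Compactness assumption
$$\mathrm{diam}(M) \stackrel{\Delta}{=} \sup_{s,t\in M} d(s, t) < \infty.$$
◮ Entropy: Fix $\varepsilon > 0$ and let $N(M, d, \varepsilon) \equiv N(\varepsilon)$ denote the smallest number of $d$-balls of radius $\varepsilon$ whose union covers $M$. Set
$$H(M, d, \varepsilon) \equiv H(\varepsilon) = \ln\big( N(\varepsilon) \big).$$
Then $N$ and $H$ are called the (metric) entropy and log-entropy functions for $M$ (or $f$).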

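Dudley's theorem
Let $f$ be a centered Gaussian field on a $d$-compact $M$. Then there exists a universal $K$ such that
$$E\Big\{ \sup_{t\in M} f_t \Big\} \le K \int_0^{\mathrm{diam}(M)} H^{1/2}(\varepsilon)\, d\varepsilon,$$
and
$$E\{ \omega_{f,d}(\delta) \} \le K \int_0^{\delta} H^{1/2}(\varepsilon)\, d\varepsilon,$$
where
$$\omega_{f,d}(\delta) \stackrel{\Delta}{=} \sup_{d(s,t)\le\delta} |f(t) - f(s)|, \qquad \delta > 0.$$
Furthermore, there exists a random $\eta \in (0, \infty)$ and a universal $K$ such that
$$\omega_{f,d}(\delta) \le K \int_0^{\delta} H^{1/2}(\varepsilon)\, d\varepsilon,$$
for all $\delta < \eta$.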
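To see the entropy integral in action, consider Brownian motion on $[0, 1]$, for which $d(s, t) = |s - t|^{1/2}$. This example is an assumption made for illustration and is not taken from the slides. A $d$-ball of radius $\varepsilon$ is an interval of length at most $2\varepsilon^2$, so $N(\varepsilon) = \lceil 1/(2\varepsilon^2) \rceil$ and $\mathrm{diam}(M) = 1$. The function names below are hypothetical.

```python
import math

def covering_number(eps):
    """N(eps) for M = [0, 1] under d(s, t) = |s - t|**0.5.

    A d-ball of radius eps is an interval of half-width eps**2, so
    ceil(1 / (2 * eps**2)) such balls are needed to cover [0, 1].
    """
    return max(1, math.ceil(1.0 / (2.0 * eps ** 2)))

def log_entropy(eps):
    """H(eps) = ln N(eps)."""
    return math.log(covering_number(eps))

def dudley_integral(upper, n_steps=100_000):
    """Midpoint-rule approximation of int_0^upper H(eps)**0.5 d eps."""
    step = upper / n_steps
    total = 0.0
    for k in range(n_steps):
        eps = (k + 0.5) * step
        total += math.sqrt(log_entropy(eps)) * step
    return total

diam = 1.0  # sup of |s - t|**0.5 over s, t in [0, 1]
entropy_integral = dudley_integral(diam)
```

The integrand grows only like $\sqrt{2\ln(1/\varepsilon)}$ near zero, so the integral is finite, and Dudley's theorem then bounds $E\{\sup f_t\}$ by a universal constant times `entropy_integral`.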

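Special cases of the entropy result
◮ If $f$ is also stationary
$$f \text{ is a.s. continuous on } M \iff f \text{ is a.s. bounded on } M \iff \int_0^{\delta} H^{1/2}(\varepsilon)\, d\varepsilon < \infty, \;\; \forall \delta > 0$$
◮ If $M \subset \mathbb{R}^N$, and
$$p^2(u) \stackrel{\Delta}{=} \sup_{|s-t|\le u} E\{ |f_s - f_t|^2 \},$$
continuity & boundedness follow if, for some $\delta > 0$, either
$$\int_0^{\delta} (-\ln u)^{1/2}\, dp(u) < \infty \quad \text{or} \quad \int_{\delta}^{\infty} p\big( e^{-u^2} \big)\, du < \infty.$$
◮ A sufficient condition: For some $0 < K < \infty$ and $\alpha, \eta > 0$,
$$E\{ |f_s - f_t|^2 \} \le \frac{K}{\big| \log |s - t| \big|^{1+\alpha}},$$
for all $s, t$ with $|s - t| < \eta$.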

Appendix IV: Borell-Tsirelson inequality
◮ Finiteness theorem: $\|f\| \stackrel{\Delta}{=} \sup_{t\in M} f_t$
$$P\{ \|f\| < \infty \} = 1 \iff E\{ \|f\| \} < \infty,$$
◮ THE inequality: For all $u > 0$, with $\sigma_M^2 = \sup_{t\in M} E\{ f_t^2 \}$,
$$P\{ \|f\| - E\{\|f\|\} > u \} \le e^{-u^2 / 2\sigma_M^2}.$$
◮ This implies, for $u > E\{\|f\|\}$,
$$P\{ \|f\| \ge u \} \le e^{\mu_u - u^2 / 2\sigma_M^2}, \qquad \mu_u = \big( 2u E\{\|f\|\} - [E\{\|f\|\}]^2 \big) / \sigma_M^2$$
◮ Asymptotics: For high levels $u$, the dominant behavior of all Gaussian exceedence probabilities is determined by $e^{-u^2 / 2\sigma_M^2}$.
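The inequality can be sanity-checked by Monte Carlo. The following is a sketch under stated assumptions: $f$ is the canonical process restricted to a finite set $M$ of random points on $S^2$ (so $\sigma_M^2 = 1$), $E\{\|f\|\}$ is replaced by its sample mean, and the levels are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(3)
l, n_points, n_samples = 3, 50, 50_000

# Finite parameter set M on S^{l-1}; unit vectors give sigma_M^2 = 1.
M = rng.standard_normal((n_points, l))
M /= np.linalg.norm(M, axis=1, keepdims=True)

# Canonical process f(t) = <xi, t>; one row of xi per realisation.
xi = rng.standard_normal((n_samples, l))
sup_f = (xi @ M.T).max(axis=1)  # ||f|| = sup over t in M of f_t
mean_sup = sup_f.mean()         # sample estimate of E{||f||}

levels = np.array([0.5, 1.0, 1.5, 2.0])
empirical_tail = np.array(
    [(sup_f - mean_sup > u).mean() for u in levels]
)
borell_tis_bound = np.exp(-levels ** 2 / 2.0)  # e^{-u^2 / 2 sigma_M^2}
```

In this example the empirical tail sits well below the bound, which is expected: the inequality is universal and therefore not tight for any particular field.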

Places to start reading and to find other references
1. R.J. Adler and J.E. Taylor, Random Fields and Geometry, Springer, 2007.
2. R.J. Adler and J.E. Taylor, Topological Complexity of Random Functions, Springer Lecture Notes in Mathematics, Vol. 2019, Springer, 2010.
3. D. Aldous, Probability Approximations via the Poisson Clumping Heuristic, Applied Mathematical Sciences, Vol. 77, Springer-Verlag, 1989.
4. J-M. Azaïs and M. Wschebor, Level Sets and Extrema of Random Processes and Fields, Wiley, 2009.
5. H. Cramér and M.R. Leadbetter, Stationary and Related Stochastic Processes, Wiley, 1967.
6. M.R. Leadbetter, G. Lindgren, and H. Rootzén, Extremes and Related Properties of Random Sequences and Processes, Springer-Verlag, 1983.