Optimizing subroutines in assembly language

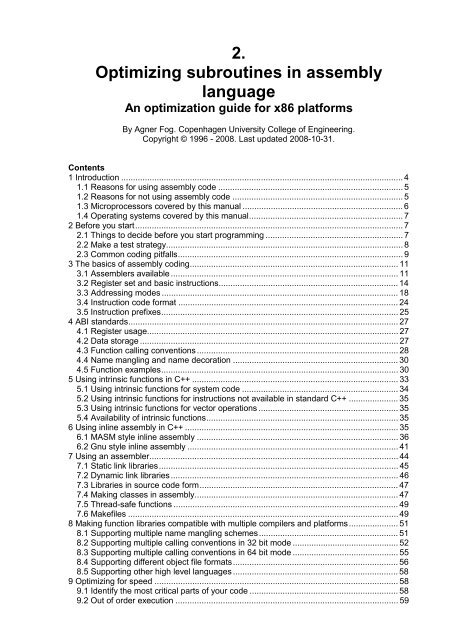

2. Optimizing subroutines in assembly language
An optimization guide for x86 platforms

By Agner Fog. Copenhagen University College of Engineering.
Copyright © 1996 - 2008. Last updated 2008-10-31.

Contents

1 Introduction .... 4
   1.1 Reasons for using assembly code .... 5
   1.2 Reasons for not using assembly code .... 5
   1.3 Microprocessors covered by this manual .... 6
   1.4 Operating systems covered by this manual .... 7
2 Before you start .... 7
   2.1 Things to decide before you start programming .... 7
   2.2 Make a test strategy .... 8
   2.3 Common coding pitfalls .... 9
3 The basics of assembly coding .... 11
   3.1 Assemblers available .... 11
   3.2 Register set and basic instructions .... 14
   3.3 Addressing modes .... 18
   3.4 Instruction code format .... 24
   3.5 Instruction prefixes .... 25
4 ABI standards .... 27
   4.1 Register usage .... 27
   4.2 Data storage .... 27
   4.3 Function calling conventions .... 28
   4.4 Name mangling and name decoration .... 30
   4.5 Function examples .... 30
5 Using intrinsic functions in C++ .... 33
   5.1 Using intrinsic functions for system code .... 34
   5.2 Using intrinsic functions for instructions not available in standard C++ .... 35
   5.3 Using intrinsic functions for vector operations .... 35
   5.4 Availability of intrinsic functions .... 35
6 Using inline assembly in C++ .... 35
   6.1 MASM style inline assembly .... 36
   6.2 Gnu style inline assembly .... 41
7 Using an assembler .... 44
   7.1 Static link libraries .... 45
   7.2 Dynamic link libraries .... 46
   7.3 Libraries in source code form .... 47
   7.4 Making classes in assembly .... 47
   7.5 Thread-safe functions .... 49
   7.6 Makefiles .... 49
8 Making function libraries compatible with multiple compilers and platforms .... 51
   8.1 Supporting multiple name mangling schemes .... 51
   8.2 Supporting multiple calling conventions in 32 bit mode .... 52
   8.3 Supporting multiple calling conventions in 64 bit mode .... 55
   8.4 Supporting different object file formats .... 56
   8.5 Supporting other high level languages .... 58
9 Optimizing for speed .... 58
   9.1 Identify the most critical parts of your code .... 58
   9.2 Out of order execution .... 59
   9.3 Instruction fetch, decoding and retirement .... 61
   9.4 Instruction latency and throughput .... 62
   9.5 Break dependency chains .... 63
   9.6 Jumps and calls .... 64
10 Optimizing for size .... 71
   10.1 Choosing shorter instructions .... 71
   10.2 Using shorter constants and addresses .... 73
   10.3 Reusing constants .... 74
   10.4 Constants in 64-bit mode .... 74
   10.5 Addresses and pointers in 64-bit mode .... 75
   10.6 Making instructions longer for the sake of alignment .... 76
11 Optimizing memory access .... 79
   11.1 How caching works .... 79
   11.2 Trace cache .... 81
   11.3 Alignment of data .... 81
   11.4 Alignment of code .... 83
   11.5 Organizing data for improved caching .... 85
   11.6 Organizing code for improved caching .... 86
   11.7 Cache control instructions .... 86
12 Loops .... 86
   12.1 Minimize loop overhead .... 86
   12.2 Induction variables .... 89
   12.3 Move loop-invariant code .... 90
   12.4 Find the bottlenecks .... 90
   12.5 Instruction fetch, decoding and retirement in a loop .... 91
   12.6 Distribute µops evenly between execution units .... 91
   12.7 An example of analysis for bottlenecks on PM .... 92
   12.8 Same example on Core2 .... 96
   12.9 Loop unrolling .... 97
   12.10 Optimize caching .... 99
   12.11 Parallelization .... 100
   12.12 Analyzing dependences .... 101
   12.13 Loops on processors without out-of-order execution .... 104
   12.14 Macro loops .... 106
13 Vector programming .... 108
   13.1 Conditional moves in SIMD registers .... 109
   13.2 Using vector instructions with other types of data than they are intended for .... 112
   13.3 Shuffling data .... 114
   13.4 Generating constants .... 117
   13.5 Accessing unaligned data .... 119
   13.6 Using AVX instruction set and YMM registers .... 123
   13.7 Vector operations in general purpose registers .... 128
14 Multithreading .... 130
15 CPU dispatching .... 130
   15.1 Checking for operating system support for XMM and YMM registers .... 132
16 Problematic Instructions .... 133
   16.1 LEA instruction (all processors) .... 133
   16.2 INC and DEC (all Intel processors) .... 134
   16.3 XCHG (all processors) .... 134
   16.4 Shifts and rotates (P4) .... 135
   16.5 Rotates through carry (all processors) .... 135
   16.6 Bit test (all processors) .... 135
   16.7 LAHF and SAHF (all processors) .... 135
   16.8 Integer multiplication (all processors) .... 135
   16.9 Division (all processors) .... 135
   16.10 String instructions (all processors) .... 140
   16.11 WAIT instruction (all processors) .... 141
   16.12 FCOM + FSTSW AX (all processors) .... 142
   16.13 FPREM (all processors) .... 143
   16.14 FRNDINT (all processors) .... 143
   16.15 FSCALE and exponential function (all processors) .... 144
   16.16 FPTAN (all processors) .... 145
   16.17 FSQRT (SSE processors) .... 145
   16.18 FLDCW (Most Intel processors) .... 145
17 Special topics .... 146
   17.1 XMM versus floating point registers (Processors with SSE) .... 146
   17.2 MMX versus XMM registers (Processors with SSE2) .... 147
   17.3 XMM versus YMM registers (Processors with AVX) .... 147
   17.4 Freeing floating point registers (all processors) .... 148
   17.5 Transitions between floating point and MMX instructions (Processors with MMX) .... 148
   17.6 Converting from floating point to integer (All processors) .... 148
   17.7 Using integer instructions for floating point operations .... 150
   17.8 Using floating point instructions for integer operations .... 153
   17.9 Moving blocks of data (All processors) .... 153
   17.10 Self-modifying code (All processors) .... 154
18 Measuring performance .... 154
   18.1 Testing speed .... 154
   18.2 The pitfalls of unit-testing .... 156
19 Literature .... 156

1 Introduction

This is the second in a series of five manuals:

1. Optimizing software in C++: An optimization guide for Windows, Linux and Mac platforms.
2. Optimizing subroutines in assembly language: An optimization guide for x86 platforms.
3. The microarchitecture of Intel and AMD CPU's: An optimization guide for assembly programmers and compiler makers.
4. Instruction tables: Lists of instruction latencies, throughputs and micro-operation breakdowns for Intel and AMD CPU's.
5. Calling conventions for different C++ compilers and operating systems.

The latest versions of these manuals are always available from www.agner.org/optimize. Copyright conditions are listed in manual 5.

The present manual explains how to combine assembly code with a high level programming language and how to optimize CPU-intensive code for speed by using assembly code.

This manual is intended for advanced assembly programmers and compiler makers. It is assumed that the reader has a good understanding of assembly language and some experience with assembly coding. Beginners are advised to seek information elsewhere and get some programming experience before trying the optimization techniques described here. I can recommend the various introductions, tutorials, discussion forums and newsgroups on the Internet (see links from www.agner.org/optimize) and the book "Introduction to 80x86 Assembly Language and Computer Architecture" by R. C. Detmer, 2. ed. 2006.

The present manual covers all platforms that use the x86 instruction set. This instruction set is used by most microprocessors from Intel and AMD. Operating systems that can use this instruction set include DOS (16 and 32 bits), Windows (16, 32 and 64 bits), Linux (32 and 64 bits), FreeBSD/OpenBSD (32 and 64 bits), and Intel-based Mac OS (32 bits).

Optimization techniques that are not specific to assembly language are discussed in manual 1: "Optimizing software in C++". Details that are specific to a particular microprocessor are covered by manual 3: "The microarchitecture of Intel and AMD CPU's". Tables of instruction timings etc. are provided in manual 4: "Instruction tables: Lists of instruction latencies, throughputs and micro-operation breakdowns for Intel and AMD CPU's". Details about calling conventions for different operating systems and compilers are covered in manual 5: "Calling conventions for different C++ compilers and operating systems".

Programming in assembly language is much more difficult than programming in a high-level language. Making bugs is very easy, and finding them is very difficult. Now you have been warned! Please don't send your programming questions to me. Such mails will not be answered. There are various discussion forums on the Internet where you can get answers to your programming questions if you cannot find the answers in the relevant books and manuals.

Good luck with your hunt for nanoseconds!

1.1 Reasons for using assembly code

Assembly coding is not used as much today as previously. However, there are still reasons for learning and using assembly code. The main reasons are:

1. Educational reasons. It is important to know how microprocessors and compilers work at the instruction level in order to be able to predict which coding techniques are most efficient, to understand how various constructs in high level languages work, and to track hard-to-find errors.

2. Debugging and verifying. Looking at compiler-generated assembly code or the disassembly window in a debugger is useful for finding errors and for checking how well a compiler optimizes a particular piece of code.

3. Making compilers. Understanding assembly coding techniques is necessary for making compilers, debuggers and other development tools.

4. Embedded systems. Small embedded systems have fewer resources than PCs and mainframes. Assembly programming can be necessary for optimizing code for speed or size in small embedded systems.

5. Hardware drivers and system code. Accessing hardware, system control registers etc. may sometimes be difficult or impossible with high level code.

6. Accessing instructions that are not accessible from high level language. Certain assembly instructions have no high-level language equivalent.

7. Self-modifying code. Self-modifying code is generally not profitable because it interferes with efficient code caching. It may, however, be advantageous, for example, to include a small compiler in math programs where a user-defined function has to be calculated many times.

8. Optimizing code for size. Storage space and memory are so cheap nowadays that it is not worth the effort to use assembly language for reducing code size. However, cache size is still such a critical resource that it may be useful in some cases to optimize a critical piece of code for size in order to make it fit into the code cache.

9. Optimizing code for speed. Modern C++ compilers generally optimize code quite well in most cases. But there are still many cases where compilers perform poorly and where dramatic increases in speed can be achieved by careful assembly programming.

10. Function libraries. The total benefit of optimizing code is higher in function libraries that are used by many programmers.

11. Making function libraries compatible with multiple compilers and operating systems. It is possible to make library functions with multiple entries that are compatible with different compilers and different operating systems. This requires assembly programming.

The main focus in this manual is on optimizing code for speed, though some of the other topics are also discussed.

1.2 Reasons for not using assembly code

There are so many disadvantages and problems involved in assembly programming that it is advisable to consider the alternatives before deciding to use assembly code for a particular task. The most important reasons for not using assembly programming are:

1. Development time. Writing code in assembly language takes much longer than in a high level language.

2. Reliability and security. It is easy to make errors in assembly code. The assembler does not check whether the calling conventions and register save conventions are obeyed. Nobody checks for you whether the number of PUSH and POP instructions is the same in all possible branches and paths. There are so many possibilities for hidden errors in assembly code that it affects the reliability and security of the project unless you have a very systematic approach to testing and verifying.

3. Debugging and verifying. Assembly code is more difficult to debug and verify because there are more possibilities for errors than in high level code.

4. Maintainability. Assembly code is more difficult to modify and maintain because the language allows unstructured spaghetti code and all kinds of dirty tricks that are difficult for others to understand. Thorough documentation and a consistent programming style are needed.

5. Portability. Assembly code is very platform-specific. Porting to a different platform is difficult.

6. System code can use intrinsic functions instead of assembly. The best modern C++ compilers have intrinsic functions for accessing system control registers and other system instructions. Assembly code is no longer needed for device drivers and other system code when intrinsic functions are available.

7. Application code can use intrinsic functions or vector classes instead of assembly. The best modern C++ compilers have intrinsic functions for vector operations and other special instructions that previously required assembly programming. It is no longer necessary to use old fashioned assembly code to take advantage of the XMM vector instructions. See page 33.

8. Compilers have been improved a lot in recent years. The best compilers are now quite good. It takes a lot of expertise and experience to optimize better than the best C++ compiler.

1.3 Microprocessors covered by this manual

The following families of x86-family microprocessors are discussed in this manual:

Microprocessor name                                  Abbreviation
Intel Pentium (without name suffix)                  P1
Intel Pentium MMX                                    PMMX
Intel Pentium Pro                                    PPro
Intel Pentium II                                     P2
Intel Pentium III                                    P3
Intel Pentium 4 (NetBurst)                           P4
Intel Pentium 4 with EM64T, Pentium D, etc.          P4E
Intel Pentium M, Core Solo, Core Duo                 PM
Intel Core 2                                         Core2
AMD Athlon                                           AMD K7
AMD Athlon 64, Opteron, etc., 64-bit                 AMD K8
AMD Family 10h, Phenom, third generation Opteron     AMD K10

Table 1.1. Microprocessor families

See manual 3: "The microarchitecture of Intel and AMD CPU's" for details.1.4 Operat<strong>in</strong>g systems covered by this manualThe follow<strong>in</strong>g operat<strong>in</strong>g systems can use x86 family microprocessors:16 bit: DOS, W<strong>in</strong>dows 3.x.32 bit: W<strong>in</strong>dows, L<strong>in</strong>ux, FreeBSD, OpenBSD, NetBSD, Intel-based Mac OS X.64 bit: W<strong>in</strong>dows, L<strong>in</strong>ux, FreeBSD, OpenBSD, NetBSD, Intel-based Mac OS X.All the UNIX-like operat<strong>in</strong>g systems (L<strong>in</strong>ux, BSD, Mac OS) use the same call<strong>in</strong>gconventions, with very few exceptions. Everyth<strong>in</strong>g that is said <strong>in</strong> this manual about L<strong>in</strong>uxalso applies to other UNIX-like systems, possibly <strong>in</strong>clud<strong>in</strong>g systems not mentioned here.2 Before you start2.1 Th<strong>in</strong>gs to decide before you start programm<strong>in</strong>gBefore you start to program <strong>in</strong> <strong>assembly</strong>, you have to th<strong>in</strong>k about why you want to use<strong>assembly</strong> <strong>language</strong>, which part of your program you need to make <strong>in</strong> <strong>assembly</strong>, and whatprogramm<strong>in</strong>g method to use. 
If you haven't made your development strategy clear, then youwill soon f<strong>in</strong>d yourself wast<strong>in</strong>g time optimiz<strong>in</strong>g the wrong parts of the program, do<strong>in</strong>g th<strong>in</strong>gs<strong>in</strong> <strong>assembly</strong> that could have been done <strong>in</strong> C++, attempt<strong>in</strong>g to optimize th<strong>in</strong>gs that cannot beoptimized further, mak<strong>in</strong>g spaghetti code that is difficult to ma<strong>in</strong>ta<strong>in</strong>, and mak<strong>in</strong>g code that isfull or errors and difficult to debug.Here is a checklist of th<strong>in</strong>gs to consider before you start programm<strong>in</strong>g:• Never make the whole program <strong>in</strong> <strong>assembly</strong>. That is a waste of time. Assembly codeshould be used only where speed is critical and where a significant improvement <strong>in</strong>speed can be obta<strong>in</strong>ed. Most of the program should be made <strong>in</strong> C or C++. These arethe programm<strong>in</strong>g <strong>language</strong>s that are most easily comb<strong>in</strong>ed with <strong>assembly</strong> code.• If the purpose of us<strong>in</strong>g <strong>assembly</strong> is to make system code or use special <strong>in</strong>structionsthat are not available <strong>in</strong> standard C++ then you should isolate the part of theprogram that needs these <strong>in</strong>structions <strong>in</strong> a separate function or class with a welldef<strong>in</strong>ed functionality. Use <strong>in</strong>tr<strong>in</strong>sic functions (see p. 33) if possible.• If the purpose of us<strong>in</strong>g <strong>assembly</strong> is to optimize for speed then you have to identifythe part of the program that consumes the most CPU time, possibly with the use of aprofiler. 
Check if the bottleneck is file access, memory access, CPU instructions, or something else, as described in manual 1: "Optimizing software in C++". Isolate the critical part of the program into a function or class with a well-defined functionality.

• If the purpose of using assembly is to make a function library then you should clearly define the functionality of the library. Decide whether to make a function library or a class library. Decide whether to use static linking (.lib in Windows, .a in Linux) or dynamic linking (.dll in Windows, .so in Linux). Static linking is usually more efficient, but dynamic linking may be necessary if the library is called from languages such as C# and Visual Basic. You may possibly make both a static and a dynamic link version of the library.

• If the purpose of using assembly is to optimize an embedded application for size or speed then find a development tool that supports both C/C++ and assembly and make as much as possible in C or C++.

• Decide if the code is reusable or application-specific. Spending time on careful optimization is more justified if the code is reusable. Reusable code is most appropriately implemented as a function library or class library.

• Decide if the code should support multithreading. A multithreading application can take advantage of microprocessors with multiple cores. Any data that must be preserved from one function call to the next on a per-thread basis should be stored in a C++ class or a per-thread buffer supplied by the calling program.

• Decide if portability is important for your application. Should the application work in Windows, Linux and Intel-based Mac OS? Should it work in both 32 bit and 64 bit mode? Should it work on non-x86 platforms? This is important for the choice of compiler, assembler and programming method.

• Decide if your application should work on old microprocessors. If so, then you may make one version for microprocessors with, for example, the SSE2 instruction set, and another version which is compatible with old microprocessors. You may even make several versions, each optimized for a particular CPU. It is recommended to make automatic CPU dispatching (see page 130).

• There are three assembly programming methods to choose between: (1) Use intrinsic functions and vector classes in a C++ compiler.
(2) Use inline assembly in a C++ compiler. (3) Use an assembler. These three methods and their relative advantages and disadvantages are described in chapters 5, 6 and 7 (pages 33, 35 and 44, respectively).

• If you are using an assembler then you have to choose between different syntax dialects. It may be preferable to use an assembler that is compatible with the assembly code that your C++ compiler can generate.

• Make your code in C++ first and optimize it as much as you can, using the methods described in manual 1: "Optimizing software in C++". Make the compiler translate the code to assembly. Look at the compiler-generated code and see if there are any possibilities for improvement in the code.

• Highly optimized code tends to be very difficult to read and understand for others and even for yourself when you get back to it after some time. In order to make it possible to maintain the code, it is important that you organize it into small logical units (procedures or macros) with a well-defined interface and calling convention and appropriate comments. Decide on a consistent strategy for code comments and documentation.

• Save the compiler, assembler and all other development tools together with the source code and project files for later maintenance.
Compatible tools may not be available in a few years when updates and modifications in the code are needed.

2.2 Make a test strategy

Assembly code is error prone, difficult to debug, difficult to make in a clearly structured way, difficult to read, and difficult to maintain, as I have already mentioned. A consistent test strategy can ameliorate some of these problems and save you a lot of time.

My recommendation is to make the assembly code as an isolated module, function, class or library with a well-defined interface to the calling program. Make it all in C++ first. Then make a test program which can test all aspects of the code you want to optimize. It is easier and safer to use a test program than to test the module in the final application.

The test program has two purposes. The first purpose is to verify that the assembly code works correctly in all situations. The second purpose is to test the speed of the assembly code without invoking the user interface, file access and other parts of the final application program that may make the speed measurements less accurate and less reproducible.

You should use the test program repeatedly after each step in the development process and after each modification of the code.

Make sure the test program works correctly. It is quite common to spend a lot of time looking for an error in the code under test when in fact the error is in the test program.

There are different test methods that can be used for verifying that the code works correctly. A white box test supplies a carefully chosen series of different sets of input data to make sure that all branches, paths and special cases in the code are tested. A black box test supplies a series of random input data and verifies that the output is correct.
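
A minimal sketch of a black box test in C++ is given here for illustration. The names sum_reference, sum_optimized and black_box_test are hypothetical and do not come from this manual. In a real project sum_optimized would be the assembly routine under test; here it is ordinary C++ (a loop unrolled by four) so that the sketch compiles on its own.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Reference implementation in plain C++: the trusted baseline.
std::uint32_t sum_reference(const std::uint32_t* data, std::size_t n) {
    std::uint32_t sum = 0;
    for (std::size_t i = 0; i < n; ++i) sum += data[i];
    return sum;
}

// Hypothetical stand-in for the optimized routine under test.
// Unrolled by four with a scalar tail loop for the remaining elements.
std::uint32_t sum_optimized(const std::uint32_t* data, std::size_t n) {
    std::uint32_t s0 = 0, s1 = 0, s2 = 0, s3 = 0;
    std::size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        s0 += data[i];
        s1 += data[i + 1];
        s2 += data[i + 2];
        s3 += data[i + 3];
    }
    for (; i < n; ++i) s0 += data[i];
    return s0 + s1 + s2 + s3;
}

// Black box test: random lengths (including 0 and non-multiples of 4)
// and random contents. Returns the number of mismatches found.
int black_box_test(int rounds, unsigned seed) {
    assert(rounds >= 0);
    std::mt19937 rng(seed);  // fixed seed makes a failure reproducible
    std::uniform_int_distribution<std::size_t> length(0, 67);
    int failures = 0;
    for (int r = 0; r < rounds; ++r) {
        std::vector<std::uint32_t> v(length(rng));
        for (auto& x : v) x = static_cast<std::uint32_t>(rng());
        if (sum_optimized(v.data(), v.size()) !=
            sum_reference(v.data(), v.size())) {
            ++failures;
        }
    }
    return failures;
}
```

Seeding the generator explicitly is a design choice of this sketch: when a mismatch is found, the same seed reproduces the failing input.
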
A very long series of random data from a good random number generator can sometimes find rarely occurring errors that the white box test hasn't found.

The test program may compare the output of the assembly code with the output of a C++ implementation to verify that it is correct. The test should cover all boundary cases and preferably also illegal input data to see if the code generates the correct error responses.

The speed test should supply a realistic set of input data. A significant part of the CPU time may be spent on branch mispredictions in code that contains a lot of branches. The number of branch mispredictions depends on the degree of randomness in the input data. You may experiment with the degree of randomness in the input data to see how much it influences the computation time, and then decide on a realistic degree of randomness that matches a typical real application.

An automatic test program that supplies a long stream of test data will typically find more errors and find them much faster than testing the code in the final application. A good test program will find most errors, but you cannot be sure that it finds all errors. It is possible that some errors show up only in combination with the final application.

2.3 Common coding pitfalls

The following list points out some of the most common programming errors in assembly code.

1. Forgetting to save registers. Some registers have callee-save status, for example EBX.
These registers must be saved in the prolog of a function and restored in the epilog if they are modified inside the function. Remember that the order of POP instructions must be the opposite of the order of PUSH instructions. See page 27 for a list of callee-save registers.

2. Unmatched PUSH and POP instructions. The number of PUSH and POP instructions must be equal for all possible paths through a function. Example:

    ; Example 2.1. Unmatched push/pop
    push ebx
    test ecx, ecx
    jz   Finished
    ...
    pop  ebx
    Finished:
    ret                             ; Wrong! Label should be before pop ebx

Here, the value of EBX that is pushed is not popped again if ECX is zero. The result is that the RET instruction will pop the former value of EBX and jump to a wrong address.

3. Using a register that is reserved for another purpose. Some compilers reserve the use of EBP or EBX for a frame pointer or other purpose. Using these registers for a different purpose in inline assembly can cause errors.

4. Stack-relative addressing after push. When addressing a variable relative to the stack pointer, you must take into account all preceding instructions that modify the stack pointer. Example:

    ; Example 2.2. Stack-relative addressing
    mov  [esp+4], edi
    push ebp
    push ebx
    cmp  esi, [esp+4]               ; Probably wrong!

Here, the programmer probably intended to compare ESI with EDI, but the value of ESP that is used for addressing has been changed by the two PUSH instructions, so that ESI is in fact compared with EBP instead.

5. Confusing value and address of a variable. Example:

    ; Example 2.3. Value versus address (MASM syntax)
    .data
    MyVariable DD 0                 ; Define variable
    .code
    mov eax, MyVariable             ; Gets value of MyVariable
    mov eax, offset MyVariable      ; Gets address of MyVariable
    lea eax, MyVariable             ; Gets address of MyVariable
    mov ebx, [eax]                  ; Gets value of MyVariable through pointer
    mov ebx, [100]                  ; Gets the constant 100 despite brackets
    mov ebx, ds:[100]               ; Gets value from address 100

6. Ignoring calling conventions.
It is important to observe the calling conventions for functions, such as the order of parameters, whether parameters are transferred on the stack or in registers, and whether the stack is cleaned up by the caller or the called function. See page 27.

7. Function name mangling. C++ code that calls an assembly function should use extern "C" to avoid name mangling. Some systems require that an underscore (_) is put in front of the name in the assembly code. See page 30.

8. Forgetting return. A function declaration must end with both RET and ENDP. Using one of these is not enough. The execution will continue in the code after the procedure if there is no RET.

9. Forgetting stack alignment. The stack pointer must point to an address divisible by 16 before any call statement, except in 16-bit systems and 32-bit Windows. See page 27.

10. Forgetting shadow space in 64-bit Windows. It is required to reserve 32 bytes of empty stack space before any function call in 64-bit Windows. See page 29.

11. Mixing calling conventions. The calling conventions in 64-bit Windows and 64-bit Linux are different. See page 27.

12. Forgetting to clean up floating point stack. All floating point stack registers that are used by a function must be cleared, typically with FSTP ST(0), before the function returns, except for ST(0) if it is used for return value. It is necessary to keep track of exactly how many floating point registers are in use. If a function pushes more values on the floating point register stack than it pops, then the register stack will grow each time the function is called. An exception is generated when the stack is full. This exception may occur somewhere else in the program.

13. Forgetting to clear MMX state. A function that uses MMX registers must clear these with the EMMS instruction before any call or return.

14. Forgetting to clear YMM state. A function that uses YMM registers must clear these with the VZEROUPPER or VZEROALL instruction before any call or return.

15. Forgetting to clear direction flag. Any function that sets the direction flag with STD must clear it with CLD before any call or return.

16. Mixing signed and unsigned integers. Unsigned integers are compared using the JB and JA instructions.
Signed integers are compared using the JL and JG instructions. Mixing signed and unsigned integers can have unintended consequences.

17. Forgetting to scale array index. An array index must be multiplied by the size of one array element. For example mov eax, MyIntegerArray[ebx*4].

18. Exceeding array bounds. An array with n elements is indexed from 0 to n - 1, not from 1 to n. A defective loop writing outside the bounds of an array can cause errors elsewhere in the program that are hard to find.

19. Loop with ECX = 0. A loop that ends with the LOOP instruction will repeat 2^32 times if ECX is zero. Be sure to check if ECX is zero before the loop.

20. Reading carry flag after INC or DEC. The INC and DEC instructions do not change the carry flag. Do not use instructions that read the carry flag, such as ADC, SBB, JC, JBE, SETA, etc. after INC or DEC. Use ADD and SUB instead of INC and DEC to avoid this problem.

3 The basics of assembly coding

3.1 Assemblers available

There are several assemblers available for the x86 instruction set, but currently none of them is good enough for universal recommendation. Assembly programmers are in the unfortunate situation that there is no universally agreed syntax for x86 assembly. Different assemblers use different syntax variants. The most common assemblers are listed below.

MASM

The Microsoft assembler is included with Microsoft C++ compilers.
Free versions can sometimes be obtained by downloading the Microsoft Windows driver kit (WDK) or the platform software development kit (SDK) or as an add-on to the free Visual C++ Express Edition. MASM has been a de-facto standard in the Windows world for many years, and the assembly output of most Windows compilers uses MASM syntax. MASM has many advanced language features. The syntax is somewhat messy and inconsistent due to a heritage that dates back to the very first assemblers for the 8086 processor. Microsoft is still maintaining MASM in order to provide a complete set of development tools for Windows, but it is obviously not profitable and the maintenance of MASM is apparently kept at a minimum. New instruction sets are still added regularly, but the 64-bit version has several deficiencies. The newest version can run only when the compiler is installed and only in Windows XP or later. Version 6 and earlier can run in any system, including Linux with a Windows emulator. Such versions are circulating on the web.

GAS

The Gnu assembler is part of the Gnu Binutils package that is included with most distributions of Linux, BSD and Mac OS X. The Gnu compilers produce assembly output that goes through the Gnu assembler before it is linked. The Gnu assembler traditionally uses the AT&T syntax that works well for machine-generated code, but it is very inconvenient for human-generated assembly code. The AT&T syntax has the operands in an order that differs from all other x86 assemblers and from the instruction documentation published by Intel and AMD.
It uses various prefixes like % and $ for specifying operand types.

Fortunately, newer versions of the Gnu assembler have an option for using Intel syntax instead. The Gnu-Intel syntax is almost identical to MASM syntax. The Gnu-Intel syntax defines only the syntax for instruction codes, not for directives, functions, macros, etc. The directives still use the old Gnu-AT&T syntax. Specify .intel_syntax noprefix to use the Intel syntax. Specify .att_syntax prefix to return to the AT&T syntax before leaving inline assembly in C or C++ code.

The Gnu assembler is available for all platforms, but the Windows version is currently not fully up to date.

NASM

NASM is a free open source assembler with support for several platforms and object file formats. The syntax is clearer and more consistent than MASM syntax. NASM has fewer high-level features than MASM, but it is sufficient for most purposes.

YASM

YASM is very similar to NASM and uses exactly the same syntax. YASM has turned out to be more versatile, stable and reliable than NASM in my tests and to produce better code. It does not support the OMF format. YASM has often been the first assembler to support new instruction sets. YASM would be my best recommendation for a free multi-platform assembler if you don't need MASM syntax.

FASM

The Flat assembler is another open source assembler for multiple platforms. The syntax is not compatible with other assemblers. FASM is itself written in assembly language - an enticing idea, but unfortunately this makes the development and maintenance of it less efficient.
FASM is currently not fully up to date with the latest instruction sets.

WASM

The WASM assembler is included with the Open Watcom C++ compiler. The syntax resembles MASM but is somewhat different. It is not fully up to date.

JWASM

JWASM is a further development of WASM. It is fully compatible with MASM syntax, including advanced macro and high level directives. It does not currently support 64-bit mode. JWASM is a good choice if MASM syntax is desired.

TASM

Borland Turbo Assembler is included with CodeGear C++ Builder. It is compatible with MASM syntax except for some newer syntax additions. TASM is no longer maintained but is still available. It is obsolete and does not support current instruction sets.

HLA

High Level Assembler is actually a high level language compiler that allows assembly-like statements and produces assembly output. This was probably a good idea at the time it was invented, but today, when the best C++ compilers support intrinsic functions, I believe that HLA is no longer needed.

Inline assembly

Microsoft and Intel C++ compilers support inline assembly using a subset of the MASM syntax. It is possible to access C++ variables, functions and labels simply by inserting their names in the assembly code. This is easy, but does not support C++ register variables. See page 35.

The Gnu assembler supports inline assembly with access to the full range of instructions and directives of the Gnu assembler in both Intel and AT&T syntax.
The access to C++ variables from assembly uses a quite complicated method.

The Intel compilers for Linux and Mac systems support both the Microsoft style and the Gnu style of inline assembly.

Intrinsic functions in C++

This is the newest and most convenient way of combining low-level and high-level code. Intrinsic functions are high-level language representatives of machine instructions. For example, you can do a vector addition in C++ by calling the intrinsic function that is equivalent to an assembly instruction for vector addition. Furthermore, it is possible to define a vector class with an overloaded + operator so that a vector addition is obtained simply by writing +. Intrinsic functions are supported by Microsoft, Intel and Gnu compilers. See page 33 and manual 1: "Optimizing software in C++".

Which assembler to choose?

In most cases, the easiest solution is to use intrinsic functions in C++ code. The compiler can take care of most of the optimization so that the programmer can concentrate on choosing the best algorithm and organizing the data into vectors.
System programmers can access system instructions by using intrinsic functions without having to use assembly language.

Where real low-level programming is needed, such as in highly optimized function libraries, you may use an assembler.

It may be preferable to use an assembler that is compatible with the C++ compiler you are using. This allows you to use the compiler for translating C++ to assembly, optimize the assembly code further, and then assemble it. If the assembler is not compatible with the syntax generated by the compiler then you may generate an object file with the compiler and disassemble the object file to the assembly syntax you need. The objconv disassembler supports several different syntax dialects.
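
As an illustration of the intrinsic-function approach described above, the following sketch performs a vector addition of four 32-bit integers, first by calling the intrinsic directly and then through a small vector class with an overloaded + operator. It assumes an x86 compiler that provides the SSE2 header emmintrin.h with SSE2 code generation enabled. The names add4 and Vec4i are hypothetical and chosen for this example only; they are not a class library from this manual.

```cpp
#include <cassert>
#include <cstdint>
#include <emmintrin.h>  // SSE2 intrinsics

// Adds two arrays of four 32-bit integers. _mm_add_epi32 corresponds to
// the PADDD instruction; unaligned loads and stores are used so that the
// arrays need no special alignment.
void add4(const std::int32_t* a, const std::int32_t* b, std::int32_t* result) {
    __m128i va = _mm_loadu_si128(reinterpret_cast<const __m128i*>(a));
    __m128i vb = _mm_loadu_si128(reinterpret_cast<const __m128i*>(b));
    _mm_storeu_si128(reinterpret_cast<__m128i*>(result), _mm_add_epi32(va, vb));
}

// Minimal vector class wrapping one XMM register (hypothetical example).
struct Vec4i {
    __m128i v;

    explicit Vec4i(__m128i x) : v(x) {}

    // _mm_setr_epi32 places its first argument in element 0.
    Vec4i(std::int32_t e0, std::int32_t e1, std::int32_t e2, std::int32_t e3)
        : v(_mm_setr_epi32(e0, e1, e2, e3)) {}

    // Read one element by storing the vector to memory first.
    std::int32_t operator[](int i) const {
        assert(i >= 0 && i < 4);
        std::int32_t tmp[4];
        _mm_storeu_si128(reinterpret_cast<__m128i*>(tmp), v);
        return tmp[i];
    }
};

// Vector addition written simply as a + b.
inline Vec4i operator+(Vec4i a, Vec4i b) {
    return Vec4i(_mm_add_epi32(a.v, b.v));
}
```

With these definitions, Vec4i(1, 2, 3, 4) + Vec4i(10, 20, 30, 40) yields the elements 11, 22, 33 and 44.
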

The examples in this manual use MASM syntax, unless otherwise noted. The MASM syntax is described in Microsoft Macro Assembler Reference at msdn.microsoft.com.

See www.agner.org/optimize for links to various syntax manuals, coding manuals and discussion forums.

3.2 Register set and basic instructions

Registers in 16 bit mode

General purpose and integer registers

    Full register   Partial register   Partial register
    bit 0 - 15      bit 8 - 15         bit 0 - 7
    AX              AH                 AL
    BX              BH                 BL
    CX              CH                 CL
    DX              DH                 DL
    SI
    DI
    BP
    SP
    Flags
    IP

Table 3.1. General purpose registers in 16 bit mode.

The 32-bit registers are also available in 16-bit mode if supported by the microprocessor and operating system. The high word of ESP should not be used because it is not saved during interrupts.

Floating point registers

    Full register
    bit 0 - 79
    ST(0)
    ST(1)
    ST(2)
    ST(3)
    ST(4)
    ST(5)
    ST(6)
    ST(7)

Table 3.2. Floating point stack registers

MMX registers may be available if supported by the microprocessor. XMM registers may be available if supported by microprocessor and operating system.

Segment registers

    Full register
    bit 0 - 15
    CS
    DS
    ES
    SS

Table 3.3. Segment registers in 16 bit mode

Registers FS and GS may be available.

Registers in 32 bit mode

General purpose and integer registers

    Full register   Partial register   Partial register   Partial register
    bit 0 - 31      bit 0 - 15         bit 8 - 15         bit 0 - 7
    EAX             AX                 AH                 AL
    EBX             BX                 BH                 BL
    ECX             CX                 CH                 CL
    EDX             DX                 DH                 DL
    ESI             SI
    EDI             DI
    EBP             BP
    ESP             SP
    EFlags          Flags
    EIP             IP

Table 3.4. General purpose registers in 32 bit mode

Floating point and 64-bit vector registers

    Full register   Partial register
    bit 0 - 79      bit 0 - 63
    ST(0)           MM0
    ST(1)           MM1
    ST(2)           MM2
    ST(3)           MM3
    ST(4)           MM4
    ST(5)           MM5
    ST(6)           MM6
    ST(7)           MM7

Table 3.5. Floating point and MMX registers

The MMX registers are only available if supported by the microprocessor. The ST and MMX registers cannot be used in the same part of the code. A section of code using MMX registers must be separated from any subsequent section using ST registers by executing an EMMS instruction.

128- and 256-bit integer and floating point vector registers

    Full register   Full or partial register
    bit 0 - 255     bit 0 - 127
    YMM0            XMM0
    YMM1            XMM1
    YMM2            XMM2
    YMM3            XMM3
    YMM4            XMM4
    YMM5            XMM5
    YMM6            XMM6
    YMM7            XMM7

Table 3.6. XMM and YMM registers in 32 bit mode

The XMM registers are only available if supported both by the microprocessor and the operating system. Scalar floating point instructions use only 32 or 64 bits of the XMM registers for single or double precision, respectively. The YMM registers are expected to be available in 2010.

Segment registers

    Full register
    bit 0 - 15
    CS
    DS
    ES
    FS
    GS
    SS

Table 3.7. Segment registers in 32 bit mode

Registers in 64 bit mode

General purpose and integer registers

    Full register   Partial register   Partial register   Partial register   Partial register
    bit 0 - 63      bit 0 - 31         bit 0 - 15         bit 8 - 15         bit 0 - 7
    RAX             EAX                AX                 AH                 AL
    RBX             EBX                BX                 BH                 BL
    RCX             ECX                CX                 CH                 CL
    RDX             EDX                DX                 DH                 DL
    RSI             ESI                SI                                    SIL
    RDI             EDI                DI                                    DIL
    RBP             EBP                BP                                    BPL
    RSP             ESP                SP                                    SPL
    R8              R8D                R8W                                   R8B
    R9              R9D                R9W                                   R9B
    R10             R10D               R10W                                  R10B
    R11             R11D               R11W                                  R11B
    R12             R12D               R12W                                  R12B
    R13             R13D               R13W                                  R13B
    R14             R14D               R14W                                  R14B
    R15             R15D               R15W                                  R15B
    RFlags                             Flags
    RIP

Table 3.8. Registers in 64 bit mode

The high 8-bit registers AH, BH, CH, DH can only be used in instructions that have no REX prefix.

Note that modifying a 32-bit partial register will set the rest of the register (bit 32-63) to zero, but modifying an 8-bit or 16-bit partial register does not affect the rest of the register. This can be illustrated by the following sequence:

    ; Example 3.1. 8, 16, 32 and 64 bit registers
    mov rax, 1111111111111111H      ; rax = 1111111111111111H
    mov eax, 22222222H              ; rax = 0000000022222222H
    mov ax, 3333H                   ; rax = 0000000022223333H
    mov al, 44H                     ; rax = 0000000022223344H

There is a good reason for this inconsistency. Setting the unused part of a register to zero is more efficient than leaving it unchanged because this removes a false dependence on previous values. But the principle of resetting the unused part of a register cannot be extended to 16 bit and 8 bit partial registers because this would break the backwards compatibility with 32-bit and 16-bit modes.

The only instruction that can have a 64-bit immediate data operand is MOV. Other integer instructions can only have a 32-bit sign extended operand. Examples:

    ; Example 3.2. Immediate operands, full and sign extended
    mov rax, 1111111111111111H      ; Full 64 bit immediate operand
    mov rax, -1                     ; 32 bit sign-extended operand
    mov eax, 0ffffffffH             ; 32 bit zero-extended operand
    add rax, 1                      ; 8 bit sign-extended operand
    add rax, 100H                   ; 32 bit sign-extended operand
    add eax, 100H                   ; 32 bit operand. result is zero-extended
    mov rbx, 100000000H             ; 64 bit immediate operand
    add rax, rbx                    ; Use an extra register if big operand

It is not possible to use a 16-bit sign-extended operand. If you need to add an immediate value to a 64 bit register then it is necessary to first move the value into another register if the value is too big to fit into a 32 bit sign-extended operand.

Floating point and 64-bit vector registers

    Full register   Partial register
    bit 0 - 79      bit 0 - 63
    ST(0)           MM0
    ST(1)           MM1
    ST(2)           MM2
    ST(3)           MM3
    ST(4)           MM4
    ST(5)           MM5
    ST(6)           MM6
    ST(7)           MM7

Table 3.9. Floating point and MMX registers

The ST and MMX registers cannot be used in the same part of the code. A section of code using MMX registers must be separated from any subsequent section using ST registers by executing an EMMS instruction. The ST and MMX registers cannot be used in device drivers for 64-bit Windows.

128- and 256-bit integer and floating point vector registers

    Full register   Full or partial register
    bit 0 - 255     bit 0 - 127
    YMM0            XMM0
    YMM1            XMM1
    YMM2            XMM2
    YMM3            XMM3
    YMM4            XMM4
    YMM5            XMM5
    YMM6            XMM6
    YMM7            XMM7
    YMM8            XMM8
    YMM9            XMM9
    YMM10           XMM10
    YMM11           XMM11
    YMM12           XMM12
    YMM13           XMM13
    YMM14           XMM14
    YMM15           XMM15

Table 3.10. XMM and YMM registers in 64 bit mode

Scalar floating point instructions use only 32 or 64 bits of the XMM registers for single or double precision, respectively.
The YMM registers are expected to be available in 2010.

Segment registers

Full register
bit 0 - 15
CS
FS
GS
Table 3.11. Segment registers in 64 bit mode

Segment registers are only used for special purposes.

3.3 Addressing modes

Addressing in 16-bit mode

16-bit code uses a segmented memory model. A memory operand can have any of these components:

• A segment specification. This can be any segment register or a segment or group name associated with a segment register. (The default segment is DS, except if BP is used as base register). The segment can be implied from a label defined inside a segment.
• A label defining a relocatable offset. The offset relative to the start of the segment is calculated by the linker.
• An immediate offset. This is a constant. If there is also a relocatable offset then the values are added.
• A base register. This can only be BX or BP.
• An index register. This can only be SI or DI. There can be no scale factor.

A memory operand can have all of these components. An operand containing only an immediate offset is not interpreted as a memory operand by the MASM assembler, even if it has a []. Examples:

; Example 3.3. Memory operands in 16-bit mode
MOV AX, DS:[100H]   ; Address has segment and immediate offset
ADD AX, MEM[SI]+4   ; Has relocatable offset and index and immediate

Data structures bigger than 64 kB are handled in the following ways. In real mode and virtual mode (DOS): Adding 1 to the segment register corresponds to adding 10H to the offset. In protected mode (Windows 3.x): Adding 8 to the segment register corresponds to adding 10000H to the offset.
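The real-mode rule follows from the way a real-mode address is formed: the segment value is multiplied by 10H and the offset is added. The following is only a minimal sketch of that arithmetic in C++. The helper name real_mode_linear is hypothetical, and the sketch ignores address wraparound at the 1 MB boundary.

```cpp
#include <cstdint>

// Hypothetical helper: linear address of a real-mode segment:offset pair.
// The segment value is shifted left by 4 bits (multiplied by 10H).
constexpr std::uint32_t real_mode_linear(std::uint16_t segment,
                                         std::uint16_t offset) {
    return (static_cast<std::uint32_t>(segment) << 4) + offset;
}

// Adding 1 to the segment reaches the same byte as adding 10H to the offset.
static_assert(real_mode_linear(0x1001, 0x0000) ==
              real_mode_linear(0x1000, 0x0010),
              "segment + 1 equals offset + 10H");
```

A structure bigger than 64 kB can therefore be walked in real mode by advancing the segment register instead of the 16-bit offset whenever the offset would overflow.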
In protected mode, the value added to the segment register must be a multiple of 8.

Addressing in 32-bit mode

32-bit code uses a flat memory model in most cases. Segmentation is possible but only used for special purposes (e.g. thread environment block in FS).

A memory operand can have any of these components:

• A segment specification. Not used in flat mode.
• A label defining a relocatable offset. The offset relative to the FLAT segment group is calculated by the linker.
• An immediate offset. This is a constant. If there is also a relocatable offset then the values are added.
• A base register. This can be any 32 bit register.
• An index register. This can be any 32 bit register except ESP.
• A scale factor applied to the index register. Allowed values are 1, 2, 4, 8.

A memory operand can have all of these components. Examples:

; Example 3.4. Memory operands in 32-bit mode
mov eax, fs:[10H]        ; Address has segment and immediate offset
add eax, mem[esi]        ; Has relocatable offset and index
add eax, [esp+ecx*4+8]   ; Base, index, scale and immediate offset

Position-independent code in 32-bit mode

Position-independent code is required for making shared objects (*.so) in 32-bit Unix-like systems. The most common method for making position-independent code in 32-bit Linux and BSD is to use a global offset table (GOT) containing the addresses of all static objects. The GOT method is quite inefficient because the code has to fetch an address from the GOT every time it reads or writes data in the data segment. A faster method is to use an arbitrary reference point, as shown in the following example:

; Example 3.5. Position-independent code, 32 bit, YASM syntax
SECTION .data
alpha: dd 1
beta:  dd 2

SECTION .text
funca:                              ; This function returns alpha + beta
    call get_thunk_ecx              ; get ecx = eip
refpoint:                           ; ecx points here
    mov  eax, [ecx+alpha-refpoint]  ; relative address
    add  eax, [ecx+beta -refpoint]  ; relative address
    ret

get_thunk_ecx:                      ; Function for reading instruction pointer
    mov  ecx, [esp]
    ret

The only instruction that can read the instruction pointer in 32-bit mode is the call instruction. In example 3.5 we are using call get_thunk_ecx for reading the instruction pointer (eip) into ecx. ecx will then point to the first instruction after the call. This is our reference point, named refpoint. (get_thunk_ecx must be a separate function with its own return because a call without a return would cause mispredictions of subsequent returns). All objects in the data segment can now be addressed relative to refpoint with ecx as pointer.

This method is commonly used in Mac systems, where the Mach-O file format supports references relative to an arbitrary point. Other file formats don't support this kind of reference, but it is possible to use a self-relative reference with an offset. The YASM and Gnu assemblers will do this automatically, while most other assemblers are unable to handle this situation. It is therefore necessary to use a YASM or Gnu assembler if you want to generate position-independent code in 32-bit mode with this method.
The code may look strange in a debugger or disassembler, but it executes without any problems in all 32-bit x86 operating systems.

The GOT method would use the same reference point method as in example 3.5 for addressing the GOT, and then use the GOT to read the addresses of alpha and beta into other pointer registers. This is an unnecessary waste of time and registers because if we can access the GOT relative to the reference point, then we can just as well access alpha and beta relative to the reference point.

The pointer tables used in switch/case statements can use the same reference point for the table and for the pointers in the table:

; Example 3.6. Position-independent switch, 32 bit, YASM syntax
SECTION .data
jumptable: dd case1-refpoint, case2-refpoint, case3-refpoint

SECTION .text
funcb:                              ; This function implements a switch statement
    mov  eax, [esp+4]               ; function parameter
    call get_thunk_ecx              ; get ecx = eip
refpoint:                           ; ecx points here
    cmp  eax, 3
    jnb  case_default               ; index out of range
    mov  eax, [ecx+eax*4+jumptable-refpoint] ; read table entry
    ; The jump addresses are relative to refpoint, get absolute address:
    add  eax, ecx
    jmp  eax                        ; jump to desired case
case1: ...
    ret
case2: ...
    ret
case3: ...
    ret
case_default:
    ...
    ret

get_thunk_ecx:                      ; Function for reading instruction pointer
    mov  ecx, [esp]
    ret

Addressing in 64-bit mode

64-bit code always uses a flat memory model. Segmentation is impossible except for FS and GS which are used for special purposes only (thread environment block, etc.).

There are several different addressing modes in 64-bit mode: RIP-relative, 32-bit absolute, 64-bit absolute, and relative to a base register.

RIP-relative addressing

This is the preferred addressing mode for static data. The address contains a 32-bit sign-extended offset relative to the instruction pointer.
The address cannot contain any segment register or index register, and no base register other than RIP, which is implicit. Example:

; Example 3.7a. RIP-relative memory operand, MASM syntax
mov eax, [mem]

; Example 3.7b. RIP-relative memory operand, NASM/YASM syntax
default rel
mov eax, [mem]

; Example 3.7c. RIP-relative memory operand, Gas/Intel syntax
mov eax, [mem+rip]
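The processor forms a RIP-relative address by sign-extending the 32-bit offset and adding it to the address of the instruction that follows the one being executed. The following is a minimal sketch of that calculation in C++, not a description of any assembler's output. The function name rip_relative_target and the addresses used in the checks are made up for illustration.

```cpp
#include <cstdint>

// Hypothetical helper: effective address of a RIP-relative operand.
// next_instruction is the address of the instruction following the one
// that contains the operand; disp32 is the signed 32-bit offset stored
// in the instruction.
constexpr std::uint64_t rip_relative_target(std::uint64_t next_instruction,
                                            std::int32_t disp32) {
    // Sign-extend to 64 bits, then add with wraparound modulo 2^64.
    return next_instruction +
           static_cast<std::uint64_t>(static_cast<std::int64_t>(disp32));
}

// A negative offset reaches data placed before the code.
static_assert(rip_relative_target(0x401007ull, -7) == 0x401000ull,
              "backward reference");
// A positive offset reaches data placed after the code.
static_assert(rip_relative_target(0x401007ull, 0x1000) == 0x402007ull,
              "forward reference");
```

Because only the distance between the instruction and the data is stored, the same machine code works wherever the image is loaded, as long as the data lies within about 2 GB of the instruction.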

The MASM assembler always generates RIP-relative addresses for static data when no explicit base or index register is specified. On other assemblers you must remember to specify relative addressing.

32-bit absolute addressing in 64 bit mode

A 32-bit constant address is sign-extended to 64 bits. This addressing mode works only if it is certain that all addresses are below 2^31 (or above -2^31 for system code).

It is safe to use 32-bit absolute addressing in Linux and BSD, where all addresses are below 2^31 by default. It is unsafe in Windows DLL's because they might be relocated to any address. It will not work in Mac systems, where addresses are above 2^32 by default.

Note that NASM, YASM and Gas assemblers can make 32-bit absolute addresses when you do not explicitly specify rip-relative addresses. You have to specify default rel in NASM/YASM or [mem+rip] in Gas to avoid 32-bit absolute addresses.

There is absolutely no reason to use absolute addresses for simple memory operands. Rip-relative addresses make instructions shorter, they eliminate the need for relocation at load time, and they are safe to use in all systems.

Absolute addresses are needed only for accessing arrays where there is an index register, e.g.

; Example 3.8. 32 bit absolute addresses in 64-bit mode
mov al, [chararray + rsi]
mov ebx, [intarray + rsi*4]

This method can be used only if the address is guaranteed to be < 2^31, as explained above. See below for alternative methods of addressing static arrays.

The MASM assembler generates absolute addresses only when a base or index register is specified together with a memory label as in example 3.8 above.

The index register should preferably be a 64-bit register, not a 32-bit register. Segmentation is possible only with FS or GS.

64-bit absolute addressing

This uses a 64-bit absolute virtual address. The address cannot contain any segment register, base or index register. 64-bit absolute addresses can only be used with the MOV instruction, and only with AL, AX, EAX or RAX as source or destination.

; Example 3.9. 64 bit absolute address, YASM/NASM syntax
mov eax, dword [qword a]

This addressing mode is not supported by the MASM assembler, but it is supported by other assemblers.

Addressing relative to 64-bit base register

A memory operand in this mode can have any of these components:

• A base register. This can be any 64 bit integer register.
• An index register. This can be any 64 bit integer register except RSP.
• A scale factor applied to the index register. The only possible values are 1, 2, 4, 8.
• An immediate offset. This is a constant offset relative to the base register.

A base register is always needed for this addressing mode. The other components are optional. Examples:

; Example 3.10. Base register addressing in 64 bit mode
mov eax, [rsi]
add eax, [rsp + 4*rcx + 8]

This addressing mode is used for data on the stack, for structure and class members and for arrays.

Addressing static arrays in 64 bit mode

It is not possible to access static arrays with RIP-relative addressing and an index register. There are several possible alternatives.

The following examples address static arrays. The C++ code for this example is:

// Example 3.11a. Static arrays in 64 bit mode
// C++ code:
static int a[100], b[100];
for (int i = 0; i < 100; i++) {
    b[i] = -a[i];
}

The simplest solution is to use 32-bit absolute addresses. This is possible as long as the addresses are below 2^31.

; Example 3.11b. Use 32-bit absolute addresses
; (64 bit Linux)
; Assumes that image base < 80000000H
.data
A DD 100 dup (?)              ; Define static array A
B DD 100 dup (?)              ; Define static array B
.code
    xor  ecx, ecx             ; i = 0
TOPOFLOOP:                    ; Top of loop
    mov  eax, [A+rcx*4]       ; 32-bit address + scaled index
    neg  eax
    mov  [B+rcx*4], eax       ; 32-bit address + scaled index
    add  ecx, 1
    cmp  ecx, 100             ; i < 100
    jb   TOPOFLOOP            ; Loop

The assembler will generate a 32-bit relocatable address for A and B in example 3.11b because it cannot combine a RIP-relative address with an index register.

This method is used by Gnu and Intel compilers in 64-bit Linux to access static arrays. It is not used by any compiler I have seen for 64-bit Windows, but it works in Windows as well if the address is less than 2^31.
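The condition that an address is below 2^31 (or above -2^31 for system code) is the same as saying that the 64-bit address equals the sign extension of its own low 32 bits. The following C++ sketch expresses that test. The names sign_extend32 and fits_abs32 are hypothetical helpers introduced here for illustration. They are not part of any assembler or operating system interface.

```cpp
#include <cstdint>

// Hypothetical helper: sign-extend a 32-bit value to 64 bits.
constexpr std::uint64_t sign_extend32(std::uint32_t v) {
    return (v & 0x80000000u)
               ? (0xFFFFFFFF00000000ull | static_cast<std::uint64_t>(v))
               : static_cast<std::uint64_t>(v);
}

// Hypothetical helper: true if a 64-bit address can be encoded as a
// 32-bit absolute address that the processor sign-extends to 64 bits.
constexpr bool fits_abs32(std::uint64_t address) {
    return sign_extend32(static_cast<std::uint32_t>(address)) == address;
}

static_assert(fits_abs32(1ull << 22), "address 2^22 is encodable");
static_assert(!fits_abs32(1ull << 31), "address 2^31 is not encodable");
static_assert(!fits_abs32(1ull << 32), "address 2^32 is not encodable");
static_assert(fits_abs32(0xFFFFFFFF80000000ull), "address -2^31 is encodable");
```

An array placed at an address for which this test is false cannot be reached with the addressing form used in example 3.11b.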
The image base is typically 2^22 for application programs and between 2^28 and 2^29 for DLL's, so this method will work in most cases, but not all. This method cannot normally be used in 64-bit Mac systems because all addresses are above 2^32 by default.

The second method is to use image-relative addressing. The following solution loads the image base into register RBX by using a LEA instruction with a RIP-relative address:

; Example 3.11c. Address relative to image base
; 64 bit, Windows only, MASM assembler
.data
A DD 100 dup (?)
B DD 100 dup (?)
extern __ImageBase:byte