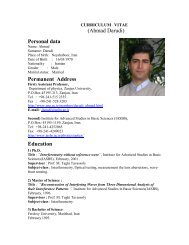

714 IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 15, NO. 3, MARCH 2006

Fig. 1. Overall modified SPIHT (MSPIHT) coding scheme. A scaling step is added before SPIHT coding to balance the classification error and the reconstruction error.

... relative importance placed on MSE and classification accommodated using a Lagrangian distortion measure, analogous to that employed in B-TSVQ.

The rescaling of coefficients is a well-known technique for adjusting their relative importance prior to encoding. The principal challenge considered here is therefore to develop a technique that autonomously and adaptively learns the appropriate wavelet-coefficient scalings, accounting for a Lagrangian distortion measure of the type discussed above. The difficulty is that we do not want to make any assumptions about what may be important for a classification task, because in many applications (e.g., remote sensing) the imagery is too variable to support such assumptions. We therefore proceed under the assumption that segmenting the image into distinct textural classes will play an important role in a subsequent classification task. For example, in remote-sensing classification, an anomaly or target (to be distinguished within a classification stage) is typically defined by its textural contrast relative to the texture of the background. The first stage of our algorithm is therefore to segment the image into textures, with the number of textures determined adaptively via a minimum-description-length (MDL) framework [26]. Once the segmentation is performed, a second algorithm scales the importance of the wavelet coefficients in the context of realizing this segmentation.
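The Lagrangian trade-off can be illustrated with a minimal sketch. It assumes the cost takes the common form $J = D_{\mathrm{MSE}} + \lambda D_{\mathrm{class}}$, where $D_{\mathrm{class}}$ is a classification (segmentation) error rate. The candidate scalings and their distortion values are made-up placeholders, and the `Candidate`, `lagrangian_cost`, and `best_candidate` names are hypothetical, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A hypothetical coefficient scaling and its measured distortions."""
    name: str
    mse: float          # reconstruction mean-squared error
    class_error: float  # classification (segmentation) error rate in [0, 1]


def lagrangian_cost(c: Candidate, lam: float) -> float:
    """Assumed Lagrangian form: J = MSE + lam * classification error."""
    return c.mse + lam * c.class_error


def best_candidate(cands: list[Candidate], lam: float) -> Candidate:
    """Candidate with the smallest Lagrangian cost for multiplier lam."""
    return min(cands, key=lambda c: lagrangian_cost(c, lam))


if __name__ == "__main__":
    # Placeholder numbers for illustration only (not results from the paper).
    cands = [
        Candidate("no scaling", mse=10.0, class_error=0.30),
        Candidate("mild scaling", mse=11.0, class_error=0.20),
        Candidate("strong scaling", mse=14.0, class_error=0.04),
    ]
    for lam in (0.0, 15.0, 100.0):
        print(lam, best_candidate(cands, lam).name)
```

Sweeping $\lambda$ moves the preferred choice from the MSE-optimal scaling ($\lambda = 0$) toward the classification-optimal one (large $\lambda$). This mirrors the role the Lagrange multiplier plays in setting the balance between the two distortions.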
The MSE is also accounted for in a Lagrangian setting, with the relative importance placed on classification and MSE dictated by the chosen Lagrange multiplier. Accounting for MSE in addition to importance for classification is necessary because the final decoded image will likely be viewed by a human, who may not wish to rely overly on the results of the (likely imperfect) classifier at the encoder.

After performing segmentation and determining the relative importance of the wavelet coefficients, the coefficients are scaled appropriately, with the coefficient-dependent scale factors sent to the decoder. The encoding and decoding procedure then employs a slightly modified form of SPIHT, as detailed below. The overall coding scheme is shown in Fig. 1.

Since wavelets are employed in the subsequent SPIHT-based encoder/decoder, we employ a wavelet-based segmentation algorithm. Hidden Markov trees (HMTs) are well suited to capturing the multiscale statistical dependence of wavelet coefficients [4]. We propose an unsupervised image segmentation method using an HMT mixture model, whose parameters are estimated by a generalized expectation-maximization (EM) algorithm [1]. The posterior probability distribution across the mixture components yields the image segmentation. The segmentation is performed autonomously at the encoder.

After segmentation into textures, the final step prior to encoding is to scale the wavelet coefficients based on their importance for classification, while also accounting for MSE in a Lagrangian sense. This is done using kernel matching pursuits (KMP) [24].
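Why scaling changes what the encoder sends first can be seen in a small sketch. It assumes a plain bit-plane significance test of the kind SPIHT uses: a coefficient $c$ becomes significant in the pass with threshold $2^n$ once $|c| \ge 2^n$. The per-coefficient factors are hypothetical stand-ins for the KMP-derived ones, the function names are illustrative, and SPIHT's set-partitioning order within a single pass is ignored (ties simply keep index order). Multiplying a coefficient by $s > 1$ moves its significance pass earlier by about $\log_2 s$ bit planes. At a given truncation point, dividing by $s$ at the decoder also shrinks that coefficient's quantization error by the factor $s$.

```python
import math
from typing import List, Optional


def significance_plane(c: float) -> Optional[int]:
    """Largest n with |c| >= 2**n, i.e. the bit-plane pass in which c becomes significant.

    Returns None for c == 0 (never significant).
    """
    if c == 0:
        return None
    return math.floor(math.log2(abs(c)))


def scale(coeffs: List[float], factors: List[float]) -> List[float]:
    """Encoder side: multiply each coefficient by its (hypothetical) importance factor."""
    return [c * s for c, s in zip(coeffs, factors)]


def unscale(coeffs: List[float], factors: List[float]) -> List[float]:
    """Decoder side: divide by the transmitted factors to undo the scaling."""
    return [c / s for c, s in zip(coeffs, factors)]


def coding_order(coeffs: List[float]) -> List[int]:
    """Indices of nonzero coefficients, earliest significance pass first (ties keep index order)."""
    nonzero = [i for i, c in enumerate(coeffs) if c != 0]
    return sorted(nonzero, key=lambda i: -significance_plane(coeffs[i]))


if __name__ == "__main__":
    coeffs = [40.0, 3.0, -12.0, 6.0]
    factors = [1.0, 8.0, 1.0, 1.0]  # coefficient 1 deemed important for the texture labels
    print(coding_order(coeffs))                      # [0, 2, 3, 1]
    print(coding_order(scale(coeffs, factors)))      # [0, 1, 2, 3]
    print(unscale(scale(coeffs, factors), factors))  # [40.0, 3.0, -12.0, 6.0]
```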
The wavelet coefficients are scaled based on the KMP results and then encoded using a modified version of SPIHT, as detailed below. We compare the performance of B-TSVQ and modified SPIHT.

The remainder of the paper is organized as follows. In Section II, we present the HMT mixture model along with its EM training algorithm. In Section III, we consider an additive regression model to estimate the importance of the wavelet coefficients for texture recognition, employing a KMP solution. In Section IV, the modified SPIHT coding scheme is discussed. Typical results of the algorithms are presented in Section V, with conclusions in Section VI.

II. IMAGE SEGMENTATION

Image segmentation is a fundamental low-level operation in image analysis for object identification. The encoding strategy adopted here is based on a wavelet decomposition of the image, and, therefore, we utilize a wavelet-based segmentation procedure. To account for the variability of anticipated imagery, the segmentation algorithm autonomously determines the number of textures as well as their statistical characteristics.

A. HMT-Based Block Classification

The image is analyzed with a two-dimensional wavelet transform, employing a one-dimensional transform in each of the two principal directions. For $L$ wavelet levels, we obtain a contiguous set of wavelet quadtrees $\mathbf{w}_i$, $i = 1, \dots, N$, each corresponding to a $2^L \times 2^L$ block in the original image. Based on the persistence property of wavelet coefficients, which observes that large (or small) wavelet coefficient magnitudes tend to propagate through the scales corresponding to the same spatial location, Crouse et al.
[4] introduced the wavelet-domain HMT model to capture the joint wavelet statistics.

The HMT models the marginal probability density function (pdf) of each wavelet coefficient as a Gaussian mixture with a hidden state variable. It assumes that the key dependency between the hidden state variables of the wavelet coefficients is tree-structured and Markovian, tied to the wavelet quadtree, with a state transition matrix quantifying the degree of persistence between scales. The iterative EM algorithm for HMTs [4] trains the parameters (the mixture density parameters and the state transition probabilities of the probabilistic graph) to match the data in the maximum-likelihood (ML) sense. The trained HMT provides a good approximation to the joint probability of the wavelet coefficients, yielding good classification performance.

CHANG AND CARIN: MODIFIED SPIHT ALGORITHM FOR IMAGE CODING 715

The conventional HMT training process requires labeled imagery. Specifically, data must be provided for each texture class, followed by HMT training. The trained HMTs can then be applied to segment newly observed imagery, assuming that it is characterized by similar textures. In our problem, we assume little or no knowledge of the textural properties of the anticipated imagery, and, therefore, the textural classes are determined jointly with HMT training.

B. HMT Mixture Model

We assume that the statistics of the wavelet coefficients from the overall image may be represented as a mixture of HMTs, analogous to the well-known Gaussian mixture model (GMM) [1]. The probabilistic model for $K$ mixture components is given by

$$p(\mathbf{w} \mid \Theta) = \sum_{k=1}^{K} \pi_k \, p(\mathbf{w} \mid \theta_k) \qquad (1)$$

where $\mathbf{w}$ denotes the wavelet coefficients of a wavelet tree, $\pi_k$, $k = 1, \dots, K$, with $\sum_{k=1}^{K} \pi_k = 1$, are the mixing coefficients of the $K$ textures, which may also be interpreted as their prior probabilities, and $\theta_k$ represents the parameters of the $k$th HMT component. The vector $\Theta$ represents the cumulative set of model parameters, specifically $\pi_k$ and $\theta_k$, $k = 1, \dots, K$.

We employ an iterative training procedure, analogous to that found in GMM design [1].
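To make the HMT component $p(\mathbf{w} \mid \theta_k)$ concrete, the sketch below evaluates the likelihood of a single wavelet quadtree with the upward sweep of the upward-downward recursion in [4]. The simplifying assumptions are ours, not the paper's: two hidden states per node, zero-mean Gaussian state-conditional densities, and one transition matrix and one set of variances shared across the whole tree (the model in [4] lets these vary by scale and subband). Nodes are numbered breadth-first, so the four children of each node receive consecutive indices. Mapping those indices to the spatial positions $(2r + \delta_r, 2c + \delta_c)$ in the next finer scale is omitted. All function names are hypothetical.

```python
import math


def quadtree_children(levels):
    """Child lists for a full quadtree with `levels` scales, numbered breadth-first.

    Node 0 is the coarsest-scale root; the four children of each node get
    consecutive indices in the next finer scale.
    """
    offsets = [0]
    for j in range(levels):
        offsets.append(offsets[-1] + 4 ** j)
    children = [[] for _ in range(offsets[-1])]
    for j in range(levels - 1):
        for p in range(4 ** j):
            children[offsets[j] + p] = [offsets[j + 1] + 4 * p + q for q in range(4)]
    return children


def gauss_pdf(x, var):
    """Zero-mean Gaussian density with variance `var`."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def hmt_loglik(w, children, p_root, eps, var):
    """Log-likelihood of one wavelet tree under a tied HMT (upward pass only).

    w        : coefficient value of each node, breadth-first order
    children : child index lists, e.g. from quadtree_children
    p_root   : p_root[m] = P(S_root = m)
    eps      : eps[m][n] = P(S_child = n | S_parent = m), shared by all edges
    var      : var[m] = variance of the Gaussian for hidden state m
    """
    n_states = len(p_root)
    beta = [None] * len(w)
    log_scale = 0.0
    # Children always have larger breadth-first indices than their parent,
    # so a reverse sweep finishes every child before its parent.
    for i in reversed(range(len(w))):
        b = [gauss_pdf(w[i], var[m]) for m in range(n_states)]
        for c in children[i]:
            for m in range(n_states):
                b[m] *= sum(eps[m][n] * beta[c][n] for n in range(n_states))
        s = sum(b)
        beta[i] = [bm / s for bm in b]  # normalize to avoid underflow
        log_scale += math.log(s)        # keep the removed scale in the log domain
    return log_scale + math.log(sum(p_root[m] * beta[0][m] for m in range(n_states)))


if __name__ == "__main__":
    children = quadtree_children(3)  # 1 + 4 + 16 = 21 coefficients
    # Made-up coefficients whose magnitudes decay from coarse to fine scale.
    w = [6.0, 3.1, -2.4, 0.9, -1.7] + [0.3 * (-1) ** i for i in range(16)]
    p_root = [0.3, 0.7]             # state 0 = "small", state 1 = "large"
    eps = [[0.9, 0.1], [0.4, 0.6]]  # rows: parent state; columns: child state
    var = [0.25, 9.0]
    print(hmt_loglik(w, children, p_root, eps, var))
```

Evaluating every tree under each of the $K$ component models in this way yields the per-tree log-likelihoods that the mixture in (1) combines.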
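Once per-tree HMT log-likelihoods $\log p(\mathbf{w}_i \mid \theta_k)$ are available (for example, from the upward pass of [4]), the GMM-style quantities of Section II-B reduce to a few lines. The following sketch, with hypothetical function names, computes five quantities:

- posterior texture probabilities by Bayes' rule over the mixture;
- the mixing-coefficient update as the average posterior;
- a posterior-weighted average of per-tree estimates of one HMT parameter;
- MAP texture labels;
- a two-part MDL score.

The MDL form $-\log L + \tfrac{F}{2}\log N$ is the standard Rissanen criterion. It is assumed here rather than quoted from the paper. Mixing coefficients must be strictly positive because their logarithms are taken.

```python
import math


def logsumexp(xs):
    """Numerically stable log(sum(exp(x) for x in xs))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def responsibilities(loglik, pi):
    """gamma[i][k] = P(texture k | tree i) via Bayes' rule over the mixture.

    loglik[i][k] = log p(w_i | theta_k); pi[k] = mixing coefficient of texture k.
    """
    gamma = []
    for row in loglik:
        a = [math.log(pi[k]) + row[k] for k in range(len(pi))]
        z = logsumexp(a)
        gamma.append([math.exp(ak - z) for ak in a])
    return gamma


def update_mixing(gamma):
    """New mixing coefficients: average posterior of each texture over all N trees."""
    n_trees, n_tex = len(gamma), len(gamma[0])
    return [sum(g[k] for g in gamma) / n_trees for k in range(n_tex)]


def weighted_parameter(per_tree_estimates, gamma, k):
    """Posterior-weighted average of per-tree estimates of one HMT parameter for texture k."""
    num = sum(g[k] * est for g, est in zip(gamma, per_tree_estimates))
    den = sum(g[k] for g in gamma)
    return num / den


def map_labels(loglik, pi):
    """MAP texture label per tree: argmax_k of log pi_k + log p(w_i | theta_k)."""
    labels = []
    for row in loglik:
        scores = [math.log(pi[k]) + row[k] for k in range(len(pi))]
        labels.append(max(range(len(scores)), key=scores.__getitem__))
    return labels


def mixture_loglik(loglik, pi):
    """Total log-likelihood of all trees under the mixture."""
    return sum(logsumexp([math.log(pi[k]) + row[k] for k in range(len(pi))]) for row in loglik)


def mdl_score(total_loglik, n_free_params, n_trees):
    """Assumed two-part MDL code length: -log L + (F / 2) log N."""
    return -total_loglik + 0.5 * n_free_params * math.log(n_trees)


if __name__ == "__main__":
    # Hypothetical (total log-likelihood, free-parameter count) per K; N = 256 trees.
    fits = {1: (-5200.0, 9), 2: (-4900.0, 19), 3: (-4890.0, 29)}
    best_k = min(fits, key=lambda k: mdl_score(fits[k][0], fits[k][1], 256))
    print(best_k)  # 2
```

In the toy model-selection run, $K = 3$ fits slightly better than $K = 2$, but not by enough to pay for its extra parameters. MDL therefore prefers $K = 2$.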
Let $\pi_k^{(t)}$ and $\theta_k^{(t)}$ represent the model parameters for mixture component $k$ after iteration $t$. We estimate the probability that the $i$th wavelet tree is generated by texture $k$ (corresponding to the $k$th HMT, denoted $\mathcal{M}_k$) as

$$\gamma_{ik}^{(t)} = P(\mathcal{M}_k \mid \mathbf{w}_i, \Theta^{(t)}) = \frac{\pi_k^{(t)} \, p(\mathbf{w}_i \mid \theta_k^{(t)})}{\sum_{j=1}^{K} \pi_j^{(t)} \, p(\mathbf{w}_i \mid \theta_j^{(t)})} \qquad (2)$$

where, for notational simplicity, we use $\gamma_{ik}^{(t)}$ as shorthand for this posterior.

The parameters of each HMT are updated by an augmented form of the EM algorithm in [4]. In [4], the wavelet trees $\mathbf{w}_i$, $i = 1, \dots, N$, are each used separately within an “upward-downward” algorithm to update the parameters of each individual HMT. On iteration $t+1$, the HMT model parameters are initialized using the parameters from the previous step. Let $\hat{\theta}_{k,i}^{(t+1)}$ denote an arbitrary parameter of $\theta_k$, updated using wavelet tree $\mathbf{w}_i$ alone. Then, the associated cumulative parameter, based on all wavelet trees, is expressed as

$$\theta_k^{(t+1)} = \frac{\sum_{i=1}^{N} \gamma_{ik}^{(t)} \, \hat{\theta}_{k,i}^{(t+1)}}{\sum_{i=1}^{N} \gamma_{ik}^{(t)}} \qquad (3)$$

Equation (3) represents an approximation to the conditional expectation of the parameter, with the posterior probabilities $\gamma_{ik}^{(t)}$ acting as weights. Trees that are associated with texture $k$ with higher likelihood therefore make a greater contribution to the parameters of that texture component. The cumulative set of HMT parameters $\theta_k^{(t+1)}$ (e.g., state-transition probabilities and state-dependent parameters) defines the overall set of parameters for the $k$th HMT.

The mixing coefficients are updated as

$$\pi_k^{(t+1)} = \frac{1}{N} \sum_{i=1}^{N} \gamma_{ik}^{(t)} \qquad (4)$$

where we have again used the shorthand $\gamma_{ik}^{(t)}$.

For initialization, we use all the data with equal probability to train an initial (single) HMT with parameters $\theta^{(0)}$. We then cluster the data into $K$ (scalar) Gaussian mixture components (denoting $K$ textures) based on $p(\mathbf{w}_i \mid \theta^{(0)})$, assuming that data from the same texture have similar probability values. In this manner, we assign the initial probabilities $\gamma_{ik}^{(0)}$. The same idea has been applied to exploit unlabeled sequential data in learning hidden Markov models [10], where it is termed the extended Baum–Welch (EBW) algorithm.

We segment the image via a maximum a posteriori (MAP) estimator, that is,

$$\hat{k}_i = \arg\max_{k} \; \pi_k \, p(\mathbf{w}_i \mid \theta_k) \qquad (5)$$

where $\pi_k$ and $\theta_k$ are the parameters for mixture component $k$ after the aforementioned training algorithm has converged. The parameter $K$, representing the number of textures in the image, is selected autonomously via an information-theoretic model-selection method called the MDL principle, derived by Rissanen [18]. The MDL principle states that the best model is the one that minimizes the summed description length of the model and of the data given the model (the negative log-likelihood), trading off model accuracy against model succinctness [26]. In our case, we calculate the MDL value as

$$\mathrm{MDL}(K) = -\sum_{i=1}^{N} \log p(\mathbf{w}_i \mid \Theta) + \frac{F_K}{2} \log N \qquad (6)$$

where the first term denotes the accuracy of the mixture model, the second term reflects the model complexity, and $F_K$ is the number of free parameters estimated. We choose $K$ to minimize (6).

III. QUANTIZATION BINS

The purpose of rescaling the wavelet coefficients is to help the encoder order the output bit stream with consideration of the ultimate recognition task, with the balance between MSE and segmentation performance driven by a Lagrangian metric. Wavelet coefficients that play an important role in defining the segmented texture class labels (determined automatically, as discussed in Section II) should be represented by a relatively