Entropy and Mutual Information

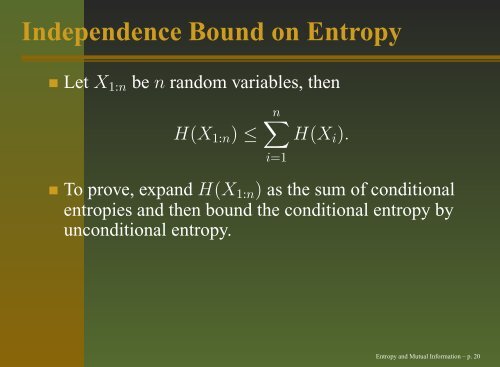

Independence Bound on Entropy

Let $X_{1:n}$ be $n$ random variables. Then

$$H(X_{1:n}) \le \sum_{i=1}^{n} H(X_i).$$

To prove this, expand $H(X_{1:n})$ as the sum of conditional entropies, and then bound each conditional entropy by the corresponding unconditional entropy.
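The bound H(X_1, ..., X_n) ≤ H(X_1) + ... + H(X_n) can be checked numerically on a small example. The sketch below is illustrative only and is not from the slides: it assumes binary variables, entropy measured in bits, and a randomly generated joint distribution. The helper names (`entropy`, `marginal`, `random_joint`) are hypothetical.

```python
import itertools
import math
import random


def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginal(joint, axis):
    """Marginal distribution of one variable from a dict {outcome tuple: prob}."""
    out = {}
    for outcome, p in joint.items():
        out[outcome[axis]] = out.get(outcome[axis], 0.0) + p
    return out


def random_joint(n, seed=0):
    """Random joint distribution over n binary variables (assumed setup)."""
    rng = random.Random(seed)
    outcomes = list(itertools.product([0, 1], repeat=n))
    weights = [rng.random() for _ in outcomes]
    total = sum(weights)
    return {o: w / total for o, w in zip(outcomes, weights)}


n = 3
joint = random_joint(n)

# Left-hand side: joint entropy H(X_1, ..., X_n).
joint_entropy = entropy(joint.values())

# Right-hand side: sum of the marginal entropies H(X_i).
sum_marginals = sum(entropy(marginal(joint, i).values()) for i in range(n))

print(f"H(X_1:n)     = {joint_entropy:.4f} bits")
print(f"sum_i H(X_i) = {sum_marginals:.4f} bits")
print("bound holds:", joint_entropy <= sum_marginals + 1e-12)
```

The small tolerance `1e-12` only guards against floating-point rounding. For a joint distribution built as a product of its marginals, the two sides would agree up to rounding.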