Entropy and Mutual Information

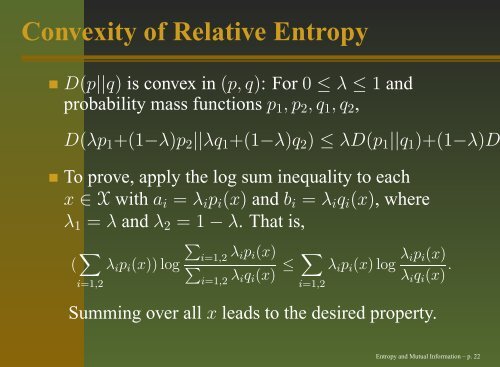

Convexity of Relative Entropy

$D(p\|q)$ is convex in the pair $(p, q)$: for $0 \le \lambda \le 1$ and probability mass functions $p_1, p_2, q_1, q_2$,

$$D\bigl(\lambda p_1 + (1-\lambda) p_2 \,\big\|\, \lambda q_1 + (1-\lambda) q_2\bigr) \le \lambda D(p_1\|q_1) + (1-\lambda) D(p_2\|q_2).$$

To prove this, apply the log sum inequality to each $x \in \mathcal{X}$ with $a_i = \lambda_i p_i(x)$ and $b_i = \lambda_i q_i(x)$, where $\lambda_1 = \lambda$ and $\lambda_2 = 1 - \lambda$. That is,

$$\Bigl(\sum_{i=1,2} \lambda_i p_i(x)\Bigr) \log \frac{\sum_{i=1,2} \lambda_i p_i(x)}{\sum_{i=1,2} \lambda_i q_i(x)} \le \sum_{i=1,2} \lambda_i p_i(x) \log \frac{\lambda_i p_i(x)}{\lambda_i q_i(x)}.$$

Summing over all $x$ leads to the desired property: the left side sums to the relative entropy between the two mixtures, and because $\lambda_i$ cancels inside the logarithm on the right, the right side sums to $\lambda D(p_1\|q_1) + (1-\lambda) D(p_2\|q_2)$.
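
The joint convexity of relative entropy can be spot-checked numerically. The following is a minimal sketch, not part of the original slides: the helper names (`kl_divergence`, `mix`, `random_pmf`, `convexity_gap`) are hypothetical, the logarithm is taken base 2 (an assumption; the inequality holds for any base), and the random pmfs are kept strictly positive so that every relative entropy is finite.

```python
import math
import random

def kl_divergence(p, q):
    """Relative entropy D(p||q) in bits, using the convention 0 log 0 = 0.

    Assumes q[x] > 0 wherever p[x] > 0 (true for the pmfs generated below).
    """
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def mix(lam, u, v):
    """Pointwise mixture lam * u + (1 - lam) * v of two pmfs."""
    return [lam * a + (1 - lam) * b for a, b in zip(u, v)]

def random_pmf(n, rng):
    """Random strictly positive pmf on n symbols (illustrative helper)."""
    weights = [rng.random() + 1e-3 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]

def convexity_gap(lam, p1, p2, q1, q2):
    """Right side minus left side of the convexity inequality."""
    lhs = kl_divergence(mix(lam, p1, p2), mix(lam, q1, q2))
    rhs = lam * kl_divergence(p1, q1) + (1 - lam) * kl_divergence(p2, q2)
    return rhs - lhs

rng = random.Random(0)  # fixed seed so the check is reproducible
gaps = []
for _ in range(1000):
    lam = rng.random()
    p1, p2, q1, q2 = (random_pmf(4, rng) for _ in range(4))
    gaps.append(convexity_gap(lam, p1, p2, q1, q2))

min_gap = min(gaps)
# Convexity predicts a nonnegative gap, up to floating-point rounding.
print(min_gap >= -1e-12)
```

A random search like this can only fail to find a counterexample; the log sum inequality argument is what establishes the property in general.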