Criterion-related Validity “Is the test valid?” Criterion-related Validity ...


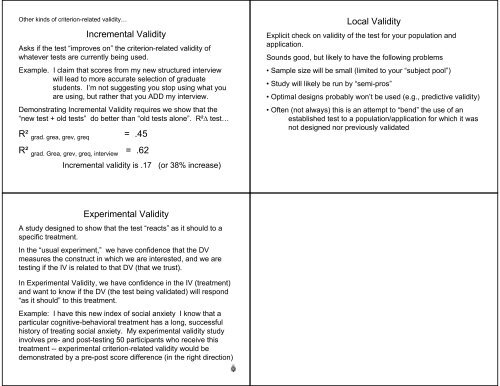

Other kinds of criterion-related validity…

Incremental Validity

Asks if the test “improves on” the criterion-related validity of whatever tests are currently being used.

Example. I claim that scores from my new structured interview will lead to more accurate selection of graduate students. I’m not suggesting you stop using what you are using, but rather that you ADD my interview.

Demonstrating Incremental Validity requires we show that the “new test + old tests” do better than “old tests alone”. R²Δ test…

R² grad. grea, grev, greq = .45

R² grad. grea, grev, greq, interview = .62

Incremental validity is .17 (or 38% increase)

Local Validity

Explicit check on validity of the test for your population and application.

Sounds good, but likely to have the following problems

• Sample size will be small (limited to your “subject pool”)
• Study will likely be run by “semi-pros”
• Optimal designs probably won’t be used (e.g., predictive validity)
• Often (not always) this is an attempt to “bend” the use of an established test to a population/application for which it was not designed nor previously validated

Experimental Validity

A study designed to show that the test “reacts” as it should to a specific treatment.

In the “usual experiment,” we have confidence that the DV measures the construct in which we are interested, and we are testing if the IV is related to that DV (that we trust).

In Experimental Validity, we have confidence in the IV (treatment) and want to know if the DV (the test being validated) will respond “as it should” to this treatment.

Example: I have this new index of social anxiety. I know that a particular cognitive-behavioral treatment has a long, successful history of treating social anxiety. My experimental validity study involves pre- and post-testing 50 participants who receive this treatment -- experimental criterion-related validity would be demonstrated by a pre-post score difference (in the right direction)
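
Returning to the Incremental Validity example, the arithmetic behind the R²Δ comparison can be worked through in a few lines. The sketch below is only an illustration: the two R² values (.45 and .62) come from the example, but the sample size `n = 100`, the predictor counts, and the function names are assumptions introduced here, and the F formula is the standard test for an R² change between nested regression models rather than anything the slides specify.

```python
def r2_change(r2_old, r2_new):
    """Return the R-squared increment and its size relative to the old model (in %)."""
    delta = r2_new - r2_old
    pct_increase = 100.0 * delta / r2_old
    return delta, pct_increase


def f_for_r2_change(r2_old, r2_new, k_old, k_new, n):
    """F statistic for the R-squared change between two nested regression models.

    k_old, k_new: number of predictors in the old and new models.
    n: sample size (hypothetical here; the example does not report one).
    """
    df_num = k_new - k_old      # predictors added by the new test
    df_den = n - k_new - 1      # residual df of the larger model
    if df_num <= 0 or df_den <= 0:
        raise ValueError("need k_new > k_old and n > k_new + 1")
    f_stat = ((r2_new - r2_old) / df_num) / ((1.0 - r2_new) / df_den)
    return f_stat, df_num, df_den


# R-squared values from the example: old tests alone vs. old tests + interview.
delta, pct = r2_change(0.45, 0.62)

# Assumed for illustration: 3 GRE predictors, 1 added interview score, n = 100.
f_stat, df1, df2 = f_for_r2_change(0.45, 0.62, k_old=3, k_new=4, n=100)

print(f"R2 change = {delta:.2f} ({pct:.0f}% increase)")
print(f"F({df1}, {df2}) = {f_stat:.1f}")
```

With the R² values from the example this reproduces the .17 increment and the roughly 38% increase. The F statistic depends entirely on the assumed sample size, so it should be read as a template for the test, not as a result.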