16. Systems of Linear Equations 1 Matrices and Systems of Linear ...

16. Systems of Linear Equations 1 Matrices and Systems of Linear ...

16. Systems of Linear Equations 1 Matrices and Systems of Linear ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

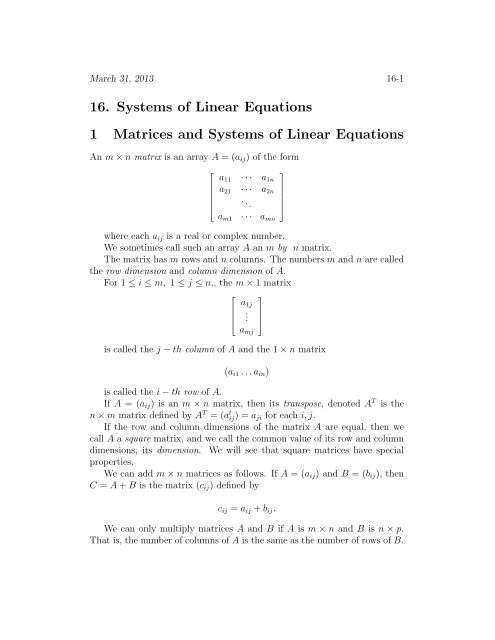

March 31, 2013

16. Systems of Linear Equations

1 Matrices and Systems of Linear Equations

An $m \times n$ matrix is an array $A = (a_{ij})$ of the form
$$
A = \begin{bmatrix}
a_{11} & \cdots & a_{1n} \\
a_{21} & \cdots & a_{2n} \\
\vdots & \ddots & \vdots \\
a_{m1} & \cdots & a_{mn}
\end{bmatrix},
$$
where each $a_{ij}$ is a real or complex number. We sometimes call such an array $A$ an $m$ by $n$ matrix. The matrix has $m$ rows and $n$ columns. The numbers $m$ and $n$ are called the row dimension and column dimension of $A$.

For $1 \le i \le m$ and $1 \le j \le n$, the $m \times 1$ matrix
$$
\begin{bmatrix} a_{1j} \\ \vdots \\ a_{mj} \end{bmatrix}
$$
is called the $j$-th column of $A$, and the $1 \times n$ matrix $(a_{i1} \ \cdots \ a_{in})$ is called the $i$-th row of $A$.

If $A = (a_{ij})$ is an $m \times n$ matrix, then its transpose, denoted $A^T$, is the $n \times m$ matrix $A^T = (a^t_{ij})$ defined by $a^t_{ij} = a_{ji}$ for each $i, j$.

If the row and column dimensions of the matrix $A$ are equal, then we call $A$ a square matrix, and we call the common value of its row and column dimensions its dimension. We will see that square matrices have special properties.

We can add $m \times n$ matrices as follows. If $A = (a_{ij})$ and $B = (b_{ij})$, then $C = A + B$ is the matrix $(c_{ij})$ defined by
$$c_{ij} = a_{ij} + b_{ij}.$$

We can only multiply matrices $A$ and $B$ if $A$ is $m \times n$ and $B$ is $n \times p$. That is, the number of columns of $A$ must equal the number of rows of $B$.

In that case, if $A = (a_{ij})$ and $B = (b_{jk})$, then $C = A \cdot B$ is the $m \times p$ matrix $C = (c_{ik})$ defined by
$$c_{ik} = \sum_{j=1}^{n} a_{ij} b_{jk}.$$
Thus the element $c_{ik}$ is the dot product of the $i$-th row of $A$ and the $k$-th column of $B$.

Both matrix addition and matrix multiplication are associative. That is,
$$(A + B) + C = A + (B + C), \qquad (AB)C = A(BC).$$

Matrix multiplication is not always commutative, even for square matrices. For instance, if
$$A = \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix} \quad\text{and}\quad B = \begin{bmatrix} 1 & 0 \\ 1 & 1 \end{bmatrix},$$
then
$$AB = \begin{bmatrix} 2 & 1 \\ 1 & 1 \end{bmatrix} \quad\text{and}\quad BA = \begin{bmatrix} 1 & 1 \\ 1 & 2 \end{bmatrix}.$$

Let us consider some matrices $A$, $B$ and illustrate these concepts.

Example 1
$$A = \begin{bmatrix} 2 & 3 \\ 1 & 2 \end{bmatrix}, \qquad B = \begin{bmatrix} 3 & -1 & 2 \\ 1 & 2 & 3 \end{bmatrix},$$
$$C = A \cdot B = \begin{bmatrix} 9 & 4 & 13 \\ 5 & 3 & 8 \end{bmatrix}, \qquad A^T = \begin{bmatrix} 2 & 1 \\ 3 & 2 \end{bmatrix}.$$
$B \cdot A$ is not defined, since $B$ has 3 columns and $A$ has 2 rows.

Example 2
$$A = \begin{bmatrix} 2 & -1 & 3 \\ 1 & 2 & 1 \\ 3 & -2 & 2 \end{bmatrix}, \qquad B = \begin{bmatrix} 1 \\ -3 \\ -2 \end{bmatrix}.$$

$$A^T = \begin{bmatrix} 2 & 1 & 3 \\ -1 & 2 & -2 \\ 3 & 1 & 2 \end{bmatrix}, \qquad C = A \cdot B = \begin{bmatrix} -1 \\ -7 \\ 5 \end{bmatrix},$$
$$B^T = \begin{bmatrix} 1 & -3 & -2 \end{bmatrix}, \qquad (A \cdot B)^T = \begin{bmatrix} -1 & -7 & 5 \end{bmatrix}.$$

Fact. $(A \cdot B)^T = B^T \cdot A^T$.

Let $e_i$ be the $n$-vector with zeroes everywhere except in the $i$-th position, where it has a 1. This is called the standard $i$-th unit $n$-vector.

The $n \times n$ matrix whose $i$-th row has a 1 in the $i$-th position and zeroes elsewhere (for every $i$) is called the $n \times n$ identity matrix, and is denoted $I_n$ (or simply $I$ if the context makes the size clear).

For any $m \times n$ matrix $A$ we have
$$I_m A = A I_n = A.$$

2 Multiplication of matrices by row and column vectors

Let $p$ and $n$ be positive integers. Let $u_1, u_2, \ldots, u_n$ be $n$ vectors in $\mathbb{R}^p$, and let $a_1, a_2, \ldots, a_n$ be $n$ real numbers. The expression
$$u = a_1 u_1 + a_2 u_2 + \cdots + a_n u_n$$
is called the linear combination of the vectors $\{u_1, u_2, \ldots, u_n\}$ with coefficients $\{a_1, a_2, \ldots, a_n\}$. Any expression of the above form is called a linear combination of vectors in $\mathbb{R}^p$.

It is useful to note the following properties of matrix multiplication.

Let $A = (a_{ij})$ be $m \times n$ and let $B = (b_{jk})$ be $n \times p$. Then, of course, $C = A \cdot B$ is $m \times p$.

Let $C^r_i$ be the $i$-th row of $C$ and $A^r_i$ the $i$-th row of $A$. Then
$$C^r_i = A^r_i \cdot B.$$
Similarly, if $C^c_j$ is the $j$-th column of $C$ and $B^c_j$ is the $j$-th column of $B$, then
$$C^c_j = A \cdot B^c_j.$$

We wish to write these matrix expressions as certain linear combinations. From the definitions, it follows that
$$C^r_i = a_{i1} B^r_1 + a_{i2} B^r_2 + \cdots + a_{in} B^r_n = \sum_{j=1}^{n} a_{ij} B^r_j \tag{1}$$
and
$$C^c_j = b_{1j} A^c_1 + b_{2j} A^c_2 + \cdots + b_{nj} A^c_n = \sum_{i=1}^{n} b_{ij} A^c_i. \tag{2}$$

Thus, we see that

The $i$-th row of $A \cdot B$ is the linear combination of the rows of $B$ with coefficients given by the $i$-th row of $A$,

and

The $j$-th column of $A \cdot B$ is the linear combination of the columns of $A$ with coefficients given by the $j$-th column of $B$.

3 Some properties of square matrices

An $n \times n$ matrix $A$ is invertible if there is another $n \times n$ matrix $B$ such that $AB = BA = I$. We also call $A$ non-singular. A singular matrix is one that is not invertible.

The matrix $B$ is unique and is called the inverse of $A$. It is usually written $A^{-1}$.

It is a fact that, if $A$ and $B$ are two $n \times n$ invertible matrices, then their product $A \cdot B$ is also invertible, and we have the formula
$$(A \cdot B)^{-1} = B^{-1} \cdot A^{-1}.$$
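The definitions of Sections 1 and 2 can be checked on the examples above with a few lines of code. The sketch below is only an illustration: it assumes matrices are stored as Python lists of rows, and the helper names `transpose`, `identity`, `matmul` and `row_combination` are our own. It reproduces the non-commutativity example, Examples 1 and 2, the fact $(A \cdot B)^T = B^T \cdot A^T$, and formula (1) for the rows of a product.

```python
def transpose(a):
    """Return A^T, whose (i, j) entry is the (j, i) entry of A."""
    return [list(col) for col in zip(*a)]


def identity(n):
    """Return the n x n identity matrix I_n."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Return C = A * B, where c_ik = sum_j a_ij * b_jk.

    Raises ValueError unless the number of columns of A equals the number of rows of B.
    """
    if len(a[0]) != len(b):
        raise ValueError("A * B is undefined: columns of A != rows of B")
    cols_of_b = transpose(b)
    # c_ik is the dot product of row i of A with column k of B
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_of_b] for row in a]


def row_combination(coeffs, b):
    """Formula (1): the linear combination sum_j coeffs[j] * (row j of B)."""
    result = [0] * len(b[0])
    for c, b_row in zip(coeffs, b):
        result = [r + c * x for r, x in zip(result, b_row)]
    return result


# Matrix multiplication is not commutative
A = [[1, 1], [0, 1]]
B = [[1, 0], [1, 1]]
print(matmul(A, B))  # [[2, 1], [1, 1]]
print(matmul(B, A))  # [[1, 1], [1, 2]]

# Example 1 (matmul(B1, A1) would raise ValueError: it is not defined)
A1 = [[2, 3], [1, 2]]
B1 = [[3, -1, 2], [1, 2, 3]]
print(matmul(A1, B1))  # [[9, 4, 13], [5, 3, 8]]

# Example 2 and the fact (A B)^T = B^T A^T
A2 = [[2, -1, 3], [1, 2, 1], [3, -2, 2]]
B2 = [[1], [-3], [-2]]
print(transpose(matmul(A2, B2)))  # [[-1, -7, 5]]
```

Formula (2) can be checked the same way by applying `row_combination` to transposes, since the columns of $A \cdot B$ are the rows of $(A \cdot B)^T = B^T A^T$.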

There is a useful number which we can associate to a square matrix, called its determinant. This is often written $\det(A)$.

For a $1 \times 1$ matrix $A = (a_{11})$, we set $\det(A) = a_{11}$. If
$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix},$$
we set
$$\det(A) = a_{11} a_{22} - a_{12} a_{21}.$$

We define $\det(A)$ inductively for matrices of higher dimension. Assuming that we know $\det(B)$ for all $n \times n$ matrices $B$, let $A$ be an $(n+1) \times (n+1)$ matrix. Define
$$\det(A) = \sum_{i=1}^{n+1} (-1)^{i+1} a_{i1} \det(A(i \mid 1)),$$
where $A(i \mid 1)$ is the $n \times n$ matrix obtained by deleting the $i$-th row and first column of $A$.

This is called expansion by minors along the first column of $A$. One gets the same answer by expanding by minors along any row or column. As an example, expansion along the second row (assuming that it exists) gives
$$\det(A) = \sum_{j=1}^{n+1} (-1)^{2+j} a_{2j} \det(A(2 \mid j)).$$

An easy way to remember the signs in the preceding summation is to make a matrix $S$ of the same size as $A$ whose entries are either "$+$" or "$-$", as follows. Let $S_{11} = $ "$+$". Make $S_{12} = $ "$-$", $S_{13} = $ "$+$", and continue alternating along the first row. For the second row, start with "$-$" and alternate along this row. Continue in this way to obtain the whole matrix $S$; its $(i, j)$ entry is the sign of $(-1)^{i+j}$.

Examples of $S$ for $2 \times 2$ and $3 \times 3$ matrices are
$$\begin{bmatrix} + & - \\ - & + \end{bmatrix}, \qquad \begin{bmatrix} + & - & + \\ - & + & - \\ + & - & + \end{bmatrix}.$$

Let $0$ denote the $n$-vector all of whose entries are 0.
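The inductive definition of $\det$ above translates directly into a recursive function. The sketch below is an illustration under our own naming (`minor`, `det`, `det_along_row` are not from the notes); it uses 0-based indices, so the sign $(-1)^{i+1}$ of the 1-based formula becomes `(-1) ** i`. It also expands along an arbitrary row to confirm that the answer does not depend on the choice, using the two matrices whose determinants are computed a little further on (7 and $-21$).

```python
def minor(a, i, j):
    """Return A(i | j): A with row i and column j deleted (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]


def det(a):
    """Determinant by expansion by minors along the first column.

    With 0-based row index i, the sign (-1)**(i+1) of the 1-based formula
    becomes (-1)**i.
    """
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** i * a[i][0] * det(minor(a, i, 0)) for i in range(len(a)))


def det_along_row(a, r):
    """Expansion by minors along row r (0-based); agrees with det(a)."""
    return sum((-1) ** (r + j) * a[r][j] * det(minor(a, r, j)) for j in range(len(a)))


print(det([[2, -1], [3, 2]]))  # 7
A = [[2, -1, 1], [2, -3, 4], [1, 2, 2]]
print(det(A))               # -21
print(det_along_row(A, 1))  # -21 (expansion along the second row)
```

Expansion by minors takes on the order of $n!$ operations, so it is practical only for small matrices; for larger ones the row reduction of Section 4.2 is the usual approach.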

A collection $u_1, u_2, \ldots, u_k$ of vectors in $\mathbb{R}^n$ is called a linearly independent set of vectors in $\mathbb{R}^n$ if, whenever we have a linear combination
$$\alpha_1 u_1 + \cdots + \alpha_k u_k = 0$$
with the $\alpha_i$ constants (scalars), we must have $\alpha_i = 0$ for every $i$.

Fact. For an $n \times n$ matrix $A$, each of the following conditions is equivalent to $A$ being invertible:

1. the rows of $A$ form a linearly independent set of vectors;
2. the columns of $A$ form a linearly independent set of vectors;
3. for every vector $b$, the system $Ax = b$ has a unique solution (here we are writing $b$ as a column vector, i.e., as an $n \times 1$ matrix);
4. $\det(A) \neq 0$.

The function $\det$ maps the set of square matrices with real entries to the real numbers. (If the matrix $A$ has complex entries, then $\det(A)$ is a complex number. In this case, the determinant function has similar properties. For simplicity, we emphasize real matrices.)

Additional properties of determinants:

1. For any square matrix $A$, $\det(A) = \det(A^T)$ (here $A^T$ is the transpose of $A$).
2. For any two square matrices $A$ and $B$ of the same dimension, $\det(A \cdot B) = \det(A) \cdot \det(B)$.
3. $\det(I_n) = 1$ for any $n$.
4. Let $A$ be an $n \times n$ matrix and, for each $1 \le i \le n$, let $A_i$ be its $i$-th row. We may consider the determinant function as a function of the rows. That is, we may write

$$\det(A) = \det(A_1, A_2, \ldots, A_n).$$
Now fix some $i$ with $1 \le i \le n$. Suppose that $A$ and $B$ are $n \times n$ matrices which differ only in their $i$-th rows; that is, $A_j = B_j$ for $j \neq i$. Let $C$ be the matrix whose $i$-th row is the sum $aA_i + bB_i$, where $a$ and $b$ are constants, and whose other rows equal the corresponding (common) rows of $A$ and $B$. Then
$$\det(C) = a \det(A) + b \det(B).$$
This property of determinants is called multi-linearity as a function of the rows. Replacing rows by columns, we also get that $\det(A)$ is multi-linear as a function of the columns.

5. If $B$ is the matrix obtained from $A$ by interchanging two rows, then $\det(B) = -\det(A)$. One refers to this property by saying that $\det(A)$ is skew-symmetric as a function of the rows of $A$. The function $A \mapsto \det(A)$ is also skew-symmetric as a function of the columns of $A$.

Let us compute some determinants.
$$A = \begin{bmatrix} 2 & -1 \\ 3 & 2 \end{bmatrix}, \qquad \det(A) = 2(2) - (-1)(3) = 7.$$
$$A = \begin{bmatrix} 2 & -1 & 1 \\ 2 & -3 & 4 \\ 1 & 2 & 2 \end{bmatrix}, \qquad \det(A) = 2(-6 - 8) - 2(-2 - 2) + 1(-4 + 3) = -28 + 8 - 1 = -21,$$
where we expanded by minors along the first column.

4 Systems of Linear Equations

We will write vectors $x = (x_1, \ldots, x_n)$ in $\mathbb{R}^n$ both as row vectors and as column vectors.

Matrices are useful for dealing with systems of linear equations. Suppose we are given a system of $m$ equations in $n$ unknowns

$$
\begin{aligned}
a_{11} x_1 + a_{12} x_2 + \cdots + a_{1n} x_n &= b_1 \\
a_{21} x_1 + a_{22} x_2 + \cdots + a_{2n} x_n &= b_2 \\
& \vdots \\
a_{m1} x_1 + a_{m2} x_2 + \cdots + a_{mn} x_n &= b_m
\end{aligned}
\tag{3}
$$
We can write the system as a single vector equation
$$Ax = b, \tag{4}$$
where $A$ is the $m \times n$ matrix $(a_{ij})$, $x$ is an unknown $n$-vector, and $b$ is a known $m$-vector.

Comment: Strictly speaking, the left and right sides of (4) are $m \times 1$ matrices. It is customary to ignore this and simply refer to them as $m$-vectors. Sometimes we also refer to $1 \times n$ matrices as $n$-vectors. The context will make it clear what is being done.

We wish to determine whether the system (3) has a solution and, if so, how many solutions it has. We also seek a convenient way to represent the solutions.

Before we consider this in detail, we note that there is a nice geometric description of the expression (4).

4.1 Linear Maps and Matrices

Consider the map $T(x) = A \cdot x$. This defines a map from $\mathbb{R}^n$ to $\mathbb{R}^m$.

To solve the equation (4), where $A$ and $b$ are given, we are looking for a vector $x \in \mathbb{R}^n$ such that $T(x) = b$. That is, we want $b$ to be in the set-theoretic image of $T$.

The map $T$ has very special properties. It is what is called a linear map, or a linear transformation, from $\mathbb{R}^n$ to $\mathbb{R}^m$. The definition is the following.

Definition 4.1 The map $T : \mathbb{R}^n \to \mathbb{R}^m$ is called linear if it preserves the operations of vector addition and scalar multiplication. That is, for any two vectors $x, y \in \mathbb{R}^n$ and any real number $\alpha$, we have
$$T(x + y) = T(x) + T(y),$$

and
$$T(\alpha x) = \alpha T(x).$$

One can easily see that maps from $\mathbb{R}^n$ to $\mathbb{R}^m$ defined by multiplying vectors $x$ by an $m \times n$ matrix $A$, as above, are linear maps.

Conversely, it is relatively easy to prove that every linear map $T : \mathbb{R}^n \to \mathbb{R}^m$ is defined by multiplication by some $m \times n$ matrix.

To state this precisely, it is easier to drop our convention of writing vectors as either row or column vectors, and to stick to row vectors. That is, we write $x = (x_1, x_2, \ldots, x_n)$ as a $1 \times n$ matrix. Then we have the following.

Proposition 4.2 If $T : \mathbb{R}^n \to \mathbb{R}^m$ is a linear map, then there is an $n \times m$ matrix $A$ such that $T(x) = x \cdot A$ for all $x \in \mathbb{R}^n$.

Proof. Let $\{e_1, e_2, \ldots, e_n\}$ denote the standard unit vectors in $\mathbb{R}^n$, and let $\{f_1, f_2, \ldots, f_m\}$ denote the standard unit vectors in $\mathbb{R}^m$.

Observe that writing a vector $x \in \mathbb{R}^n$ as $x = (x_1, x_2, \ldots, x_n)$ amounts to the same as
$$x = \sum_{i=1}^{n} x_i e_i.$$
Similarly, if $y \in \mathbb{R}^m$ is written as $y = (y_1, \ldots, y_m)$, then this is the same as
$$y = \sum_{j=1}^{m} y_j f_j.$$

Let $T : \mathbb{R}^n \to \mathbb{R}^m$ be a linear map. By linearity, we have
$$T(x) = T\Big(\sum_{i=1}^{n} x_i e_i\Big) = \sum_{i=1}^{n} x_i T(e_i). \tag{5}$$
Now, each $T(e_i)$ is a vector in $\mathbb{R}^m$, so there are real numbers $a_{ij}$ such that
$$T(e_i) = \sum_{j=1}^{m} a_{ij} f_j.$$

Inserting these expressions into (5), we get
$$T(x) = \sum_{i=1}^{n} x_i \Big(\sum_{j=1}^{m} a_{ij} f_j\Big) = \sum_{i=1}^{n} \sum_{j=1}^{m} x_i a_{ij} f_j = \sum_{j=1}^{m} \Big(\sum_{i=1}^{n} x_i a_{ij}\Big) f_j.$$
Let $A$ be the $n \times m$ matrix given by $A = (a_{ij})$. Thinking of $x = (x_1, x_2, \ldots, x_n)$ as a $1 \times n$ matrix, and of $y = T(x) = (y_1, y_2, \ldots, y_m)$ as a $1 \times m$ matrix, the coefficient of $f_j$ above is $y_j = \sum_{i} x_i a_{ij}$, so we have
$$x \cdot A = y. \tag{6}$$
QED.

Remark. To keep things in column vector format, we would only have to replace the matrix $A$ by its transpose $A^T$ and write $T(x) = A^T \cdot x$.

4.2 Matrix methods for systems of linear equations

Let us return to the system of linear equations (3). Our goal is to develop simple and effective ways of obtaining the solution set of the system.

We first observe that the set of solutions is unchanged if we do any combination of the following operations on the system (3) (applied to both the left and right sides of the equations):

1. interchange two equations (rows);
2. multiply an equation (row) by a non-zero constant;
3. add a multiple of one equation (row) to another.

These are called elementary row operations.

Because the solutions don't change under these operations, we can use them to try to simplify the equations (i.e., put them into a form from which we can read off the solutions).

First, we observe that it is not necessary to keep track of the variables when manipulating the equations. From the system (3), we write the following matrix

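$$
\left[\begin{array}{cccc|c}
a_{11} & a_{12} & \cdots & a_{1n} & b_1 \\
a_{21} & a_{22} & \cdots & a_{2n} & b_2 \\
\vdots & \vdots & & \vdots & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn} & b_m
\end{array}\right]
$$
This is called the augmented matrix of the system.

We manipulate this matrix with the operations above to put the part corresponding to $A$ into a simpler form, from which we can read off the solutions.

Example 1. Consider the system
$$
\begin{aligned}
2x_1 + 3x_2 &= 4 \\
x_1 - x_2 &= 6
\end{aligned}
$$
Of course, we can solve this by Cramer's rule. We first do this. Afterward, we use the method of row operations. This latter method provides a systematic way to handle general linear systems of equations.

Using Cramer's rule, we first write the system in terms of matrices:
$$\begin{bmatrix} 2 & 3 \\ 1 & -1 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 4 \\ 6 \end{bmatrix}. \tag{7}$$
Then we get
$$x_1 = \frac{\det\begin{bmatrix} 4 & 3 \\ 6 & -1 \end{bmatrix}}{\det\begin{bmatrix} 2 & 3 \\ 1 & -1 \end{bmatrix}} = \frac{-22}{-5} = \frac{22}{5}, \qquad x_2 = \frac{\det\begin{bmatrix} 2 & 4 \\ 1 & 6 \end{bmatrix}}{\det\begin{bmatrix} 2 & 3 \\ 1 & -1 \end{bmatrix}} = \frac{8}{-5} = -\frac{8}{5}.$$

Next, let's do this with row operations. We denote row $i$ by $R_i$. Write the augmented matrix and replace $R_1$ with $R_1 - 2R_2$:
$$
\left[\begin{array}{cc|c} 2 & 3 & 4 \\ 1 & -1 & 6 \end{array}\right]
\longrightarrow
\left[\begin{array}{cc|c} 0 & 5 & -8 \\ 1 & -1 & 6 \end{array}\right]
$$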

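We can now read off that $5x_2 = -8$ and $x_1 - x_2 = 6$, so the solution is $x_2 = -8/5$, $x_1 = 6 + x_2 = 22/5$.

Example 2. Use row operations to solve
$$
\begin{aligned}
2x_1 + 3x_2 + x_3 &= 2 \\
x_1 - x_2 - x_3 &= 1 \\
3x_1 - 2x_2 &= 3
\end{aligned}
$$
The augmented matrix is:
$$\left[\begin{array}{ccc|c} 2 & 3 & 1 & 2 \\ 1 & -1 & -1 & 1 \\ 3 & -2 & 0 & 3 \end{array}\right]$$
Replace $R_1$ with $-2R_2 + R_1$:
$$\left[\begin{array}{ccc|c} 0 & 5 & 3 & 0 \\ 1 & -1 & -1 & 1 \\ 3 & -2 & 0 & 3 \end{array}\right]$$
Replace $R_3$ with $-3R_2 + R_3$:
$$\left[\begin{array}{ccc|c} 0 & 5 & 3 & 0 \\ 1 & -1 & -1 & 1 \\ 0 & 1 & 3 & 0 \end{array}\right]$$
Replace $R_1$ with $R_1 - 5R_3$ and $R_2$ with $R_2 + R_3$:
$$\left[\begin{array}{ccc|c} 0 & 0 & -12 & 0 \\ 1 & 0 & 2 & 1 \\ 0 & 1 & 3 & 0 \end{array}\right]$$
Now read off the solutions as:
$$x_3 = 0, \qquad x_2 = -3x_3 = 0, \qquad x_1 = 1 - 2x_3 = 1,$$
or $x_1 = 1$, $x_2 = 0$, $x_3 = 0$.

Example 3. Use row operations to solve the system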

$$
\begin{aligned}
2x_1 + 3x_2 + x_3 &= 2 \\
x_1 - x_2 - x_3 &= 1 \\
3x_1 + 2x_2 &= 3
\end{aligned}
$$
Notice that this system differs from that in the preceding example only by a change of sign in the coefficient of $x_2$ in the third equation. The solutions, however, change considerably. In Example 2 there was a unique solution; in this example, we will see that there are infinitely many solutions.

We begin with the augmented matrix and proceed to use the row operations. Instead of writing each changed matrix, we combine several steps: $-2R_2 + R_1$ followed by $-3R_2 + R_3$ gives
$$
\left[\begin{array}{ccc|c} 2 & 3 & 1 & 2 \\ 1 & -1 & -1 & 1 \\ 3 & 2 & 0 & 3 \end{array}\right]
\longrightarrow
\left[\begin{array}{ccc|c} 0 & 5 & 3 & 0 \\ 1 & -1 & -1 & 1 \\ 0 & 5 & 3 & 0 \end{array}\right]
$$
Since the first and third rows are now identical, this reduces to the two equations
$$
\begin{aligned}
x_1 &= 1 + x_2 + x_3 \\
5x_2 + 3x_3 &= 0,
\end{aligned}
$$
so
$$x_2 = -\tfrac{3}{5}x_3, \qquad x_1 = 1 - \tfrac{3}{5}x_3 + x_3 = 1 + \tfrac{2}{5}x_3.$$
So we see that solutions have the form
$$
\begin{bmatrix} 1 + (2/5)x_3 \\ -(3/5)x_3 \\ x_3 \end{bmatrix} = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} + x_3 \begin{bmatrix} 2/5 \\ -3/5 \\ 1 \end{bmatrix},
$$
where $x_3$ is arbitrary.

This shows that the set of solutions is a line in $\mathbb{R}^3$ running through the point $(1, 0, 0)$ with direction $(2/5, -3/5, 1)$.

In general, the set of solutions of a system of 3 equations in 3 unknowns will be a subset of $\mathbb{R}^3$ of one of the following types:

1. empty (i.e., there are no solutions);
2. a point (there is a unique solution);
3. a line (there are infinitely many solutions);
4. a plane (there are infinitely many solutions);
5. all of $\mathbb{R}^3$ (the coefficients $a_{ij}$ and the right-hand sides $b_i$ are all 0).

Example 4. Solve the system
$$
\begin{aligned}
2x_1 - 2x_2 + x_3 &= 3 \\
x_1 - x_2 - x_3 &= 1 \\
3x_1 + 2x_2 + 2x_3 &= 2
\end{aligned}
$$
We write the augmented matrix and replace $R_1$ with $R_1 - 2R_2$ and $R_3$ with $R_3 - 3R_2$:
$$
\left[\begin{array}{ccc|c} 2 & -2 & 1 & 3 \\ 1 & -1 & -1 & 1 \\ 3 & 2 & 2 & 2 \end{array}\right]
\longrightarrow
\left[\begin{array}{ccc|c} 0 & 0 & 3 & 1 \\ 1 & -1 & -1 & 1 \\ 0 & 5 & 5 & -1 \end{array}\right]
$$
Hence
$$
\begin{aligned}
x_3 &= 1/3, \\
x_2 &= -1/5 - x_3 = -1/5 - 1/3 = -3/15 - 5/15 = -8/15, \\
x_1 &= 1 + x_2 + x_3 = 1 - 8/15 + 5/15 = 12/15 = 4/5.
\end{aligned}
$$
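The row-operation procedure of Examples 1 through 4 can be automated. The following is a minimal sketch, assuming a square coefficient matrix; the function name `solve` is our own. It applies the three elementary row operations to $[A \mid b]$ until the left block becomes the identity (Gauss-Jordan elimination), using exact `Fraction` arithmetic so the answers match the hand computations. When $A$ is singular (as in Example 3) it only reports that fact and does not describe the solution set.

```python
from fractions import Fraction


def solve(a, b):
    """Solve A x = b for square A by Gauss-Jordan elimination on [A | b].

    Returns the unique solution as a list of Fractions, or None when A is
    singular (then there is no solution or there are infinitely many).
    """
    n = len(a)
    # Build the augmented matrix [A | b] with exact rational entries.
    m = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Find a row at or below `col` with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return None  # no pivot available: A is singular
        m[col], m[pivot] = m[pivot], m[col]   # operation 1: interchange rows
        p = m[col][col]
        m[col] = [x / p for x in m[col]]      # operation 2: scale pivot row so the pivot is 1
        for r in range(n):
            if r != col and m[r][col] != 0:
                f = m[r][col]
                # operation 3: add -f times the pivot row to row r
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [row[-1] for row in m]


print(solve([[2, 3], [1, -1]], [4, 6]))  # [Fraction(22, 5), Fraction(-8, 5)]
```

The pivot chosen here is simply the first non-zero entry. With floating-point input one would usually pick the entry of largest absolute value (partial pivoting) to limit round-off; with exact fractions this does not matter.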

5 Finding the inverse of a matrix

The $2 \times 2$ matrix $A$ is invertible if and only if $\det(A) \neq 0$. Letting
$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix},$$
we can compute $A^{-1}$ from the formula
$$A^{-1} = \frac{1}{\det(A)} \begin{bmatrix} a_{22} & -a_{12} \\ -a_{21} & a_{11} \end{bmatrix}.$$

Example 5. Find the matrix $X$ such that
$$\begin{bmatrix} 2 & 1 \\ -1 & 2 \end{bmatrix} X = \begin{bmatrix} 3 & 0 \\ -2 & 1 \end{bmatrix}.$$
Here the matrix $X$ is itself $2 \times 2$. This has the form $A \cdot X = B$ for $2 \times 2$ matrices $A$ and $B$. If $A$ is non-singular, then we get the answer from
$$X = A^{-1} B.$$
We check non-singularity by computing $\det(A)$. We get $\det(A) = 2 \cdot 2 - 1 \cdot (-1) = 5$, so the matrix is non-singular and its inverse is
$$A^{-1} = \frac{1}{5} \begin{bmatrix} 2 & -1 \\ 1 & 2 \end{bmatrix},$$
so
$$X = A^{-1} B = \frac{1}{5} \begin{bmatrix} 2 & -1 \\ 1 & 2 \end{bmatrix} \begin{bmatrix} 3 & 0 \\ -2 & 1 \end{bmatrix} = \begin{bmatrix} 8/5 & -1/5 \\ -1/5 & 2/5 \end{bmatrix}.$$

For higher-dimensional matrices, the formula for the inverse is harder, so it is convenient to find another method.

Suppose that the dimension of $A$ is $n$. Then we are looking for an $n \times n$ matrix $B$ such that $A \cdot B = I$, where $I$ is the $n \times n$ identity matrix.

Thus, we can form a big augmented matrix of the form
$$[\,A \mid I\,].$$
This is an $n \times 2n$ matrix (with the vertical line in the middle).

Applying the row operation method for solving linear systems to this matrix, we do a sequence of successive row modifications. If $A$ is invertible, then at the end of these operations we will get an $n \times 2n$ matrix of the form
$$[\,I \mid B\,].$$
It turns out that the resulting matrix $B$ is $A^{-1}$.

The proof of this is not difficult. It amounts to the following observation. Let us use the notation op to denote any of the elementary row operations, and write $A \xrightarrow{\mathrm{op}} B$ to mean that $B$ is obtained from $A$ by applying the elementary row operation op. As above, let $I$ be the $n \times n$ identity matrix. Then the following is true.

Proposition. Let $I$ denote the $n \times n$ identity matrix. Let op be any elementary row operation, and assume that $A \xrightarrow{\mathrm{op}} B$ and $I \xrightarrow{\mathrm{op}} D$. Then
$$B = DA.$$

This means that we get $B$ from $A$ by a left multiplication by $D$, where $D$ is obtained from $I$ via the same elementary row operation used to get $B$ from $A$.

Let us describe this in more detail. It will be convenient to have specific notation for the matrices obtained by applying elementary row operations to $I$. There are three types. Let $i, j$ be any two integers with $1 \le i \le n$ and $1 \le j \le n$ (with $i \neq j$ for types 1 and 3).

1. Interchange of rows. Let $P_{ij}$ denote the matrix obtained by interchanging the $i$-th and $j$-th rows of $I$. (This is usually called a permutation matrix, since it permutes the rows of $I$.)

2. Multiplication of a row by a non-zero constant. Let $c$ be a non-zero constant, and let $E_{c,i}$ denote the matrix whose $j$-th row is the unit vector $e_j$ if $j \neq i$, and whose $i$-th row is $c\,e_i$.
3. Addition of a multiple of row $i$ to row $j$. Let $E_{ci+j}$ denote the matrix obtained from $I$ by adding $c$ times row $i$ to row $j$.

Let us consider some examples.

Example 6. Consider an interchange of the first two rows:
$$A = \begin{bmatrix} 2 & 3 & 1 \\ 1 & -1 & 1 \\ 0 & 4 & 3 \end{bmatrix}, \qquad B = \begin{bmatrix} 1 & -1 & 1 \\ 2 & 3 & 1 \\ 0 & 4 & 3 \end{bmatrix}.$$
The associated permutation matrix is
$$P_{12} = \begin{bmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}.$$
Then one sees that
$$B = P_{12} A.$$

Example 7. Consider replacing row 1 by row 1 plus 2 times row 3:
$$A = \begin{bmatrix} 2 & 3 & 1 \\ 1 & -1 & 1 \\ 0 & 4 & 3 \end{bmatrix}, \qquad B = \begin{bmatrix} 2 & 11 & 7 \\ 1 & -1 & 1 \\ 0 & 4 & 3 \end{bmatrix}.$$
The associated matrix is $E_{23+1}$ (here $c = 2$, $i = 3$, $j = 1$), with
$$E_{23+1} = \begin{bmatrix} 1 & 0 & 2 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix},$$
and
$$B = E_{23+1} A.$$
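The elementary row operations, the matrices $P_{ij}$, $E_{c,i}$, $E_{ci+j}$, and the procedure $[A \mid I] \to [I \mid A^{-1}]$ can be checked numerically. The sketch below uses exact `Fraction` arithmetic, and all function names (`swap_rows`, `scale_row`, `add_multiple`, `inverse`, and so on) are our own. It implements the three operations as functions returning new matrices, confirms on Examples 6 and 7 that applying an operation to $A$ equals left multiplication by the same operation applied to $I$, and then inverts a matrix by row-reducing $[A \mid I]$. Indices in the code are 0-based, so row 1 of the text is index 0.

```python
from fractions import Fraction


def identity(n):
    """The n x n identity matrix with Fraction entries."""
    return [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]


def matmul(a, b):
    """Matrix product: entry (i, k) is the dot product of row i of A and column k of B."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def swap_rows(m, i, j):
    """Operation 1: interchange rows i and j (returns a new matrix)."""
    m = [row[:] for row in m]
    m[i], m[j] = m[j], m[i]
    return m


def scale_row(m, c, i):
    """Operation 2: multiply row i by the non-zero constant c."""
    m = [row[:] for row in m]
    m[i] = [c * x for x in m[i]]
    return m


def add_multiple(m, c, i, j):
    """Operation 3: add c times row i to row j."""
    m = [row[:] for row in m]
    m[j] = [y + c * x for x, y in zip(m[i], m[j])]
    return m


def inverse(a):
    """Row-reduce [A | I] to [I | A^{-1}]; returns None if A is singular."""
    n = len(a)
    m = [[Fraction(x) for x in row] + e for row, e in zip(a, identity(n))]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return None
        m = swap_rows(m, col, pivot)
        m = scale_row(m, 1 / m[col][col], col)
        for r in range(n):
            if r != col and m[r][col] != 0:
                m = add_multiple(m, -m[r][col], col, r)
    return [row[n:] for row in m]  # the right-hand block


A = [[2, 3, 1], [1, -1, 1], [0, 4, 3]]
# Example 6: swapping rows 1 and 2 equals left multiplication by P_12
print(swap_rows(A, 0, 1) == matmul(swap_rows(identity(3), 0, 1), A))  # True
# Example 7: adding 2 * row 3 to row 1 equals left multiplication by E_{23+1}
print(add_multiple(A, 2, 2, 0) == matmul(add_multiple(identity(3), 2, 2, 0), A))  # True
# Example 5 again, now via [A | I] -> [I | A^{-1}]
print(inverse([[2, 1], [-1, 2]]))  # [[Fraction(2, 5), Fraction(-1, 5)], [Fraction(1, 5), Fraction(2, 5)]]
```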

Try a few more examples yourself to get comfortable with this.

Now, notice that each of the matrices $P_{ij}$, $E_{c,i}$, $E_{ci+j}$ is invertible. Indeed,
$$P_{ij}^{-1} = P_{ij}, \qquad E_{c,i}^{-1} = E_{1/c,i}, \qquad E_{ci+j}^{-1} = E_{-ci+j}.$$
It follows that doing a sequence of $k$ row modifications to a matrix $A$ amounts to successively multiplying it on the left by $k$ matrices, each of one of the three types $P_{ij}$, $E_{c,i}$, $E_{ci+j}$. This means that after $k$ such modifications, the matrix $A$ is replaced by
$$D_k \cdots D_2 D_1 A,$$
where $D_l$ is the matrix (one of the $P_{ij}$, $E_{c,i}$ or $E_{ci+j}$ we just discussed) corresponding to the $l$-th operation.

If we had an augmented matrix
$$[\,A \mid I\,]$$
and we do the same row modifications on it, then at the end we get
$$[\,D_k \cdots D_1 A \mid D_k \cdots D_1 I\,].$$
If we end up with $I$ on the left, then $D A = I$ with $D = D_k \cdots D_1$. Since $D$ is a product of invertible matrices, it is invertible, and multiplying on the left by $D^{-1}$ gives $A = D^{-1}$, so $D = A^{-1}$. On the right we end up with $D_k \cdots D_1 I = D = A^{-1}$. So we have written down $A^{-1}$.

5.1 A simple method to compute the inverse of a $3 \times 3$ matrix

Let $A = (a_{ij})$ be an invertible $n \times n$ matrix. We can use determinants and Cramer's rule to get a simple way to compute $A^{-1}$.

We first observe that Cramer's rule holds for every invertible square matrix: the $i$-th coordinate of the solution to $A \cdot x = b$ has the form
$$x_i = \frac{\det(A_i)}{\det(A)},$$
where $A_i$ is the matrix obtained by replacing the $i$-th column of $A$ by the column vector $b$.

Since $A^{-1}$ is the solution $X$ of the matrix equation $A \cdot X = I$, the $j$-th column of $A^{-1}$ is the solution to the equation $A \cdot x = e_j^T$ (the $j$-th unit vector, written as a column).

We apply Cramer's rule to the case of a $3 \times 3$ invertible matrix

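$$A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}.$$
Let $u_i$ denote the $i$-th column of $A^{-1}$. Then
$$
u_1 = \frac{1}{\det(A)} \begin{bmatrix}
\begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix} \\[6pt]
-\begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix} \\[6pt]
\begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix}
\end{bmatrix}, \qquad
u_2 = \frac{1}{\det(A)} \begin{bmatrix}
-\begin{vmatrix} a_{12} & a_{13} \\ a_{32} & a_{33} \end{vmatrix} \\[6pt]
\begin{vmatrix} a_{11} & a_{13} \\ a_{31} & a_{33} \end{vmatrix} \\[6pt]
-\begin{vmatrix} a_{11} & a_{12} \\ a_{31} & a_{32} \end{vmatrix}
\end{bmatrix},
$$
$$
u_3 = \frac{1}{\det(A)} \begin{bmatrix}
\begin{vmatrix} a_{12} & a_{13} \\ a_{22} & a_{23} \end{vmatrix} \\[6pt]
-\begin{vmatrix} a_{11} & a_{13} \\ a_{21} & a_{23} \end{vmatrix} \\[6pt]
\begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix}
\end{bmatrix}.
$$
In practice, this is easy to compute. After computing the determinant of $A$, it simply involves computing nine $2 \times 2$ determinants.

5.2 The inverse of an $n \times n$ matrix $A$

Let $A$ be an $n \times n$ matrix. For each pair $(i, j)$ of indices (i.e., $1 \le i \le n$, $1 \le j \le n$), define the $n \times n$ matrix $C = \operatorname{Adj}(A)$ by
$$C_{ij} = (-1)^{i+j} \det(A(j \mid i)),$$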

where $A(j \mid i)$ is the $(n-1) \times (n-1)$ matrix obtained by deleting the $j$-th row and the $i$-th column of $A$.

The matrix $C = \operatorname{Adj}(A)$ is called the classical adjoint of $A$.

The following theorem can be found in most books on Matrix Theory or Linear Algebra.

Theorem. For any square real or complex matrix $A$, we have
$$A \cdot \operatorname{Adj}(A) = \det(A) \cdot I,$$
where $I$ is the $n \times n$ identity matrix.

It follows that if $\det(A) \neq 0$, then
$$A^{-1} = \frac{1}{\det(A)} \cdot \operatorname{Adj}(A). \tag{8}$$

6 Eigenvalues and eigenvectors

Let $A$ be an $n \times n$ matrix (real or complex).

An eigenvector is a non-zero vector $\xi$ such that there is a scalar $r$ with $A\xi = r\xi$. Note that the scalar $r$ may be 0. When one can find such a $\xi$ and a scalar $r$, one calls $r$ an eigenvalue of the matrix $A$, and one calls $\xi$ an eigenvector of $A$ associated to $r$ (or for $r$).

If $\xi$ is an eigenvector, then the unit vector in the direction of $\xi$, namely $\xi / |\xi|$, is called a unit eigenvector.

Note that if $\xi$ is an eigenvector associated to the eigenvalue $r$, then so is any non-zero scalar multiple of $\xi$. It is sometimes useful to have a unique way to specify a particular vector in the set of non-zero scalar multiples of $\xi$. Accordingly, we define the scaled version of a non-zero vector $v$ to be the vector
$$\sharp v = \frac{1}{v_i} v,$$
where $v_i$ is the first non-zero entry in $v$. We use the sharp symbol from music to denote the scaled version of $v$.

For instance, we have
$$\sharp \begin{bmatrix} -2 \\ 3 \end{bmatrix} = \begin{bmatrix} 1 \\ -3/2 \end{bmatrix}, \qquad \sharp \begin{bmatrix} 0 \\ -3 \\ 4 \end{bmatrix} = \begin{bmatrix} 0 \\ 1 \\ -4/3 \end{bmatrix}. \tag{9}$$
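Formula (8) and the scaled version $\sharp v$ are both short computations. The sketch below uses exact fractions; the names `minor`, `det`, `adj`, `inverse_adj` and `sharp` are our own, and indices are 0-based. It builds $\operatorname{Adj}(A)$ entry by entry from the definition $C_{ij} = (-1)^{i+j}\det(A(j \mid i))$ (note the transposed indices), divides by $\det(A)$, and reproduces the two examples in (9).

```python
from fractions import Fraction


def minor(a, i, j):
    """A(i | j): delete row i and column j (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]


def det(a):
    """Determinant by expansion by minors along the first column."""
    if not a:
        return 1  # convention for the empty (0 x 0) matrix
    return sum((-1) ** i * a[i][0] * det(minor(a, i, 0)) for i in range(len(a)))


def adj(a):
    """Classical adjoint: entry (i, j) is (-1)^(i+j) det(A(j | i))."""
    n = len(a)
    return [[(-1) ** (i + j) * det(minor(a, j, i)) for j in range(n)] for i in range(n)]


def inverse_adj(a):
    """Formula (8): A^{-1} = Adj(A) / det(A), valid only when det(A) != 0."""
    d = det(a)
    if d == 0:
        raise ValueError("det(A) = 0, so A is not invertible")
    return [[Fraction(x) / d for x in row] for row in adj(a)]


def sharp(v):
    """Scaled version of a non-zero vector: divide by its first non-zero entry."""
    first = next((x for x in v if x != 0), None)
    if first is None:
        raise ValueError("the zero vector has no scaled version")
    return [Fraction(x) / first for x in v]


print(inverse_adj([[2, 1], [-1, 2]]))  # [[Fraction(2, 5), Fraction(-1, 5)], [Fraction(1, 5), Fraction(2, 5)]]
print(sharp([-2, 3]))     # [Fraction(1, 1), Fraction(-3, 2)]
print(sharp([0, -3, 4]))  # [Fraction(0, 1), Fraction(1, 1), Fraction(-4, 3)]
```

For a $3 \times 3$ matrix, `adj` evaluates exactly the nine $2 \times 2$ determinants of Section 5.1. For large $n$ the cofactor approach is much slower than row-reducing $[A \mid I]$.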

To understand the notion of eigenvector better, observe that the equation
$$A\xi = r\xi$$
is equivalent to either of the equations
$$(rI - A)\xi = 0 \quad\text{or}\quad (A - rI)\xi = 0,$$
where $I$ is the $n \times n$ identity matrix.

If either of these equations has a non-zero solution $\xi$, then the system $(rI - A)x = 0$ has two different solutions ($\xi$ and $0$), so $rI - A$ is not invertible, and it follows that
$$\det(rI - A) = 0. \tag{10}$$
Conversely, if (10) holds, then $rI - A$ is not invertible, so $(rI - A)\xi = 0$ has a solution $\xi \neq 0$.

The expression $\det(rI - A)$ is actually a polynomial in $r$ of degree $n$ of the form
$$z(r) = r^n + a_{n-1} r^{n-1} + \cdots + a_0,$$
and the above equation can be written as $z(r) = 0$.

The polynomial $z(r)$ is called the characteristic polynomial of the matrix $A$, and its roots are the eigenvalues of $A$. The existence of these roots is provided by the following theorem.

Theorem (Fundamental Theorem of Algebra). Let
$$p(r) = a_n r^n + a_{n-1} r^{n-1} + \cdots + a_0$$
be a polynomial of degree $n \ge 1$ (i.e., $a_n \neq 0$) with complex coefficients $a_0, a_1, \ldots, a_n$. Then there is a complex number $\alpha$ such that $p(\alpha) = 0$.

Remark. The Euclidean algorithm for positive integers states that, given two positive integers $p > q$, there are integers $k > 0$ and $0 \le s < q$ such that
$$p = kq + s.$$
There is an analogous result for polynomials. Let us denote by $\deg(z(r))$ the degree of a polynomial $z(r)$ (with real or complex coefficients).

Euclidean Algorithm for Polynomials: Let $p(r)$ and $q(r)$ be two polynomials, with $q(r)$ not the zero polynomial. Then there are polynomials $k(r)$ and $s(r)$ such that
$$p(r) = k(r) q(r) + s(r)$$
and either $s(r) = 0$ or $\deg(s(r)) < \deg(q(r))$.

From this we have, for any complex polynomial $z(r)$ of degree at least 1 and any complex number $\alpha$, that there exist a complex polynomial $q(r)$ with $\deg(z(r)) = \deg(q(r)) + 1$ and a complex number $c$ such that
$$z(r) = (r - \alpha) q(r) + c.$$
Setting $r = \alpha$ shows that $c = z(\alpha)$. In particular, if $\alpha$ is a root of $z(r)$ (i.e., $z(\alpha) = 0$), then $c = 0$. That is, the polynomial $r - \alpha$ is a factor of $z(r)$.

Let us make repeated use of the Fundamental Theorem of Algebra on $z(r)$. There exists a root $r_1$ of $z(r)$ and a polynomial $z_1(r)$ such that
$$z(r) = (r - r_1) z_1(r).$$
Next, there exists a root $r_2$ of $z_1(r)$ and a polynomial $z_2(r)$ such that
$$z(r) = (r - r_1)(r - r_2) z_2(r).$$
Continuing this way, we obtain all of the roots of $z(r)$ and can express it as
$$z(r) = a_n (r - r_1)(r - r_2) \cdots (r - r_n),$$
where $a_n$ is the leading coefficient of $z(r)$.

Note that the roots need not be distinct. So we refer to this expression for $z(r)$ as its factorization with multiplicities. We also say that the polynomial $z(r)$ of degree $n$ has $n$ roots counted with multiplicity.

Remarks.

1. If $z(r)$ is a polynomial of degree $n$ with real coefficients, then its roots may be real or complex. If $r_1 = a + bi$ is such a complex root (i.e., $b \neq 0$), then the complex conjugate $\bar r_1 = a - bi$ is also a root of $z(r)$.

2. The Fundamental Theorem of Algebra is an existence theorem. It gives no information about how to find the roots of a given polynomial $z(r)$. For degree two, one can explicitly find the roots via the quadratic formula (i.e., involving square roots of expressions in the coefficients). For degrees three and four, there are also explicit formulas for the roots, built from the coefficients using arithmetic operations and various roots (radicals). For degree greater than four, there are no such formulas that work in all cases. This surprising result (often called the unsolvability of the quintic) was proved by Abel in 1824. For more information, look up the Abel-Ruffini Theorem on Wikipedia.

Note that some texts call the polynomial $\tilde z(r) = \det(A - rI)$ the characteristic polynomial of $A$. Since $\tilde z(r) = (-1)^n z(r)$, these two polynomials have the same roots, so for problems concerning eigenvalues it really does not matter which definition is used.

Of course, even if $A$ is real, the characteristic polynomial $z(r)$ may have real or complex roots. For a real matrix $A$, a real eigenvalue has associated eigenvectors that can be chosen to be real, while a non-real eigenvalue has only non-real eigenvectors (i.e., vectors written as $u_1 + i u_2$ with $u_1$ and $u_2$ both real vectors and $u_2$ non-zero).

6.1 Simple formulas for the characteristic polynomials of $2 \times 2$ and $3 \times 3$ matrices

For any square matrix $A = (a_{ij})$, define the trace of $A$ to be
$$\operatorname{tr}(A) = \sum_{i=1}^{n} a_{ii}.$$
Thus, $\operatorname{tr}(A)$ is simply the sum of the diagonal entries.

If
$$A = \begin{pmatrix} a & b \\ c & d \end{pmatrix},$$
then

$$z(r) = \det(rI - A) = r^2 - \operatorname{tr}(A)\, r + \det(A).$$
In the $3 \times 3$ case, the formula for $z(r)$ is a bit more complicated. If
$$A = \begin{pmatrix} a & b & c \\ d & e & f \\ g & h & i \end{pmatrix},$$
then
$$z(r) = r^3 - \operatorname{tr}(A)\, r^2 + \left( \det\begin{pmatrix} e & f \\ h & i \end{pmatrix} + \det\begin{pmatrix} a & c \\ g & i \end{pmatrix} + \det\begin{pmatrix} a & b \\ d & e \end{pmatrix} \right) r - \det(A).$$

Remark. There are also simple formulas for eigenvalues and eigenvectors of $2 \times 2$ matrices. These are described in Section 17 of the Notes.

In order to find eigenvalues and eigenvectors for matrices $A$ of dimension higher than 2, it is necessary to do more work on solving the associated linear systems.

For instance, if
$$A = \begin{bmatrix} 1 & 2 & 2 \\ 2 & 1 & 3 \\ 1 & 1 & 0 \end{bmatrix},$$
then the characteristic polynomial is
$$z(r) = r^3 - 2r^2 - 8r - 5 = (r + 1)(r^2 - 3r - 5).$$
Its roots are
$$r_1 = \frac{3 + \sqrt{29}}{2}, \qquad r_2 = \frac{3 - \sqrt{29}}{2}, \qquad r_3 = -1.$$
The three associated eigenvectors are found by solving the three linear systems
$$(A - rI)\xi = 0$$
for each of the three values $r = r_1$, $r = r_2$, $r = r_3$.
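The trace formulas above are easy to turn into code. The sketch below is an illustration with our own helper names (`det2`, `char_poly_2x2`, `char_poly_3x3`, `horner`); it returns the coefficients of $z(r) = \det(rI - A)$, highest degree first, and checks the example: it recovers $r^3 - 2r^2 - 8r - 5$, confirms numerically that the three stated roots are zeros of $z$, and verifies that $\xi = (1, -1, 0)$ solves $(A - r_3 I)\xi = 0$ for $r_3 = -1$. That particular eigenvector is our own hand computation, not taken from the text.

```python
import math


def det2(p, q, r, s):
    """det [[p, q], [r, s]] = p*s - q*r."""
    return p * s - q * r


def char_poly_2x2(m):
    """Coefficients of z(r) = r^2 - tr(A) r + det(A)."""
    (a, b), (c, d) = m
    return [1, -(a + d), det2(a, b, c, d)]


def char_poly_3x3(m):
    """Coefficients of z(r) = r^3 - tr(A) r^2 + (sum of the three 2x2 minors) r - det(A)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    trace = a + e + i
    minors = det2(e, f, h, i) + det2(a, c, g, i) + det2(a, b, d, e)
    # det(A) by expansion along the first row
    det_a = a * det2(e, f, h, i) - b * det2(d, f, g, i) + c * det2(d, e, g, h)
    return [1, -trace, minors, -det_a]


def horner(coeffs, x):
    """Evaluate a polynomial given by its coefficients, highest degree first."""
    value = 0
    for coef in coeffs:
        value = value * x + coef
    return value


A = [[1, 2, 2], [2, 1, 3], [1, 1, 0]]
z = char_poly_3x3(A)
print(z)  # [1, -2, -8, -5]

# The three roots given in the text (r1, r2 checked in floating point)
roots = [(3 + math.sqrt(29)) / 2, (3 - math.sqrt(29)) / 2, -1]
print([abs(horner(z, r)) < 1e-9 for r in roots])  # [True, True, True]

# An eigenvector for r3 = -1, found by solving (A + I) xi = 0 by hand
xi = [1, -1, 0]
print([sum(a_ij * x for a_ij, x in zip(row, xi)) for row in A])  # [-1, 1, 0], i.e. -1 * xi
```

Because $r_1$ and $r_2$ are irrational, the check for them uses a tolerance rather than exact equality.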

6.2 Subspaces of $\mathbb{R}^n$, Null Space and Range of a Linear Map

It is convenient to have a name for subsets of $\mathbb{R}^n$ which behave well under vector addition and scalar multiplication.

Definition. A subset $W$ of $\mathbb{R}^n$ is called a linear subspace (or simply a subspace) if it satisfies the following two properties.
1. For any two vectors $v$ and $w$ in $W$, we have $v + w \in W$.
2. For any vector $v \in W$ and scalar $\alpha$, we have $\alpha v \in W$.
We often say that $W$ is closed under vector addition and scalar multiplication.

Let $W$ be a subspace of $\mathbb{R}^n$ which contains at least one non-zero vector. A basis for $W$ is a maximal linearly independent set $B = \{v_1, v_2, \ldots, v_k\}$ of vectors in $W$. This means that, for any vector $w$ in $W$ that is not already in $B$, the set
$$B_1 = \{w\} \cup B$$
is no longer linearly independent. That is, we cannot enlarge $B$ inside $W$ and keep it a linearly independent set.

It is a fact that any two bases of a subspace $W$ have the same number of elements. This common number is called the dimension of $W$. Note that the single-element subset $\{0\}$ is a subspace of $\mathbb{R}^n$; we define its dimension to be 0.

Let $B$ be any subset of $\mathbb{R}^n$. Then the subspace spanned by $B$, denoted $\operatorname{sp}(B)$, is defined to be the set of finite linear combinations $a_1 v_1 + a_2 v_2 + \cdots + a_j v_j$, where each $a_i$ is a scalar and each $v_i$ is a vector in $B$.

If $B = \{v_1, v_2, \ldots, v_k\}$ is a finite set, then we write $\operatorname{sp}(v_1, v_2, \ldots, v_k)$ for $\operatorname{sp}(B)$.

Note that if $B = \{v_1, \ldots, v_k\}$ with $\dim(\operatorname{sp}(B)) = d$ and $k > d$, then the set $B$ cannot be linearly independent.

Examples.
1. The subspaces of $\mathbb{R}^2$ consist of
(a) the set $\{0\}$ consisting of the zero vector (dimension 0)
(b) the lines through the origin (dimension 1)
(c) all of $\mathbb{R}^2$ (dimension 2)
2. The subspaces of $\mathbb{R}^3$ consist of
(a) the set $\{0\}$ consisting of the zero vector (dimension 0)
(b) the lines through the origin (dimension 1)
(c) the planes through the origin (dimension 2)
(d) all of $\mathbb{R}^3$ (dimension 3)
3. Let $0 < k < n$ and consider the set of vectors $(x_1, x_2, \ldots, x_k, 0, \ldots, 0)$ in $\mathbb{R}^n$, that is, the vectors whose coordinates $x_i$ are zero for $i > k$. This is a subspace of dimension $k$ spanned by the vectors $e_1, e_2, \ldots, e_k$, where $e_i$ is the standard unit vector whose only non-zero entry is a 1 in the $i$-th position.

Definition. Let $T : \mathbb{R}^n \to \mathbb{R}^p$ be a linear map with associated matrix $A$ whose $j$-th column $A^c_j$ is $T(e_j)$. The null space or kernel of $T$ is the set of vectors $v$ in $\mathbb{R}^n$ such that $T(v) = 0$. The range of $T$ is the set of vectors $w \in \mathbb{R}^p$ for which there is a $v \in \mathbb{R}^n$ with $T(v) = w$.

It is easy to show that the kernel of $T$ and the range of $T$ are subspaces of $\mathbb{R}^n$ and $\mathbb{R}^p$, respectively.

6.3 Some properties of eigenvalues and eigenvectors

First, we discuss some general facts about eigenvalues and eigenvectors which are valid in any dimension.

1. Let $A$ be an $n \times n$ matrix and let $r_1$ be an eigenvalue of $A$. Let $\xi$ and $\eta$ be eigenvectors associated to $r_1$. Then, for arbitrary scalars $\alpha, \beta$, the vector $\alpha\xi + \beta\eta$ is also an eigenvector associated to $r_1$, provided that it is not the zero vector.

Proof. Let $v = \alpha\xi + \beta\eta$ and assume this is not $0$. We have

$$\begin{aligned} Av &= A(\alpha\xi + \beta\eta) \\ &= \alpha A\xi + \beta A\eta \\ &= \alpha r_1 \xi + \beta r_1 \eta \\ &= r_1(\alpha\xi + \beta\eta) \\ &= r_1 v. \end{aligned}$$
Therefore $v$ is also an eigenvector, as required.

2. Let $r_1 \neq r_2$ be distinct eigenvalues of $A$ with associated eigenvectors $\xi$, $\eta$, respectively. Then $\xi$ is not a multiple of $\eta$.

Proof. Assume that $\xi = \alpha\eta$ for some scalar $\alpha$. Since $\xi \neq 0$, we must have $\alpha \neq 0$. Now,
$$A\xi = r_1 \xi = r_1 \alpha \eta \quad\text{and}\quad A\xi = A(\alpha\eta) = \alpha A\eta = \alpha r_2 \eta,$$
so $r_1 \alpha \eta = r_2 \alpha \eta$. Since $\alpha \neq 0$ and $\eta \neq 0$, we get $r_1 = r_2$, which is a contradiction.

3. A real matrix may not have any real eigenvalues, but it always has complex eigenvalues, since by the Fundamental Theorem of Algebra its characteristic polynomial has at least one complex root.

In the language of subspaces, if we take all of the eigenvectors for a real eigenvalue $r_1$ and add the zero vector, then by property 1 we get a subspace of $\mathbb{R}^n$, namely the null space of $A - r_1 I$. This is called the eigenspace of $r_1$.
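As a concrete check of properties 1 to 3, and of the eigenspace as the solution set of $(A - r_1 I)v = 0$, here is a small Python sketch (standard library only). The matrix `A` is an example chosen for illustration (its eigenvalues are 2, 2 and 5), and the helpers `shifted`, `null_space_basis`, `mat_vec` and `is_eigenvector` are hypothetical names. The null space is computed by exact Gauss-Jordan elimination with `fractions.Fraction`; this is one standard way to do it, not necessarily the method used elsewhere in the notes.

```python
from fractions import Fraction

def shifted(A, r):
    """The matrix A - r I."""
    n = len(A)
    return [[A[i][j] - (r if i == j else 0) for j in range(n)] for i in range(n)]

def null_space_basis(M):
    """Basis of {v : M v = 0}, via exact Gauss-Jordan elimination."""
    rows = [[Fraction(x) for x in row] for row in M]
    n_rows, n_cols = len(rows), len(rows[0])
    pivot_cols, rank = [], 0
    for col in range(n_cols):
        pivot = next((i for i in range(rank, n_rows) if rows[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column: it is a free variable
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        p = rows[rank][col]
        rows[rank] = [x / p for x in rows[rank]]
        for i in range(n_rows):
            if i != rank and rows[i][col] != 0:
                factor = rows[i][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[rank])]
        pivot_cols.append(col)
        rank += 1
        if rank == n_rows:
            break
    basis = []
    for free in (c for c in range(n_cols) if c not in pivot_cols):
        # Set this free variable to 1 and the others to 0, then solve for the pivots.
        v = [Fraction(0)] * n_cols
        v[free] = Fraction(1)
        for i, pc in enumerate(pivot_cols):
            v[pc] = -rows[i][free]
        basis.append(v)
    return basis

def mat_vec(A, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def is_eigenvector(A, v, r, tol=1e-12):
    """True if v is non-zero and A v = r v (up to tol)."""
    if all(abs(x) <= tol for x in v):
        return False
    return all(abs(y - r * x) <= tol for y, x in zip(mat_vec(A, v), v))

A = [[3, 1, 1], [1, 3, 1], [1, 1, 3]]           # illustrative matrix, eigenvalues 2, 2, 5
eigenspace_2 = null_space_basis(shifted(A, 2))  # basis of the null space of A - 2I
eigenspace_5 = null_space_basis(shifted(A, 5))  # basis of the null space of A - 5I
print(len(eigenspace_2), len(eigenspace_5))     # → 2 1

# Property 1: non-zero combinations of eigenvectors for 2 are eigenvectors for 2.
xi, eta = eigenspace_2
for alpha in range(-2, 3):
    for beta in range(-2, 3):
        v = [alpha * x + beta * y for x, y in zip(xi, eta)]
        if any(v):
            assert is_eigenvector(A, v, 2)

# Property 2: an eigenvector for 5 is not an eigenvector for 2.
assert not is_eigenvector(A, eigenspace_5[0], 2)

# Property 3: R has z(r) = r^2 + 1, so no real eigenvalues, but i is one.
R = [[0, -1], [1, 0]]
assert is_eigenvector(R, [1, -1j], 1j)
```

The dimensions printed (2 and 1) are the dimensions of the two eigenspaces in the sense of Section 6.2.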

A simple method to find eigenvectors for 2 × 2 matrices

Let
$$A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$$
be a $2 \times 2$ matrix with characteristic polynomial
$$z(r) = r^2 - (a + d)r + ad - bc,$$
and let $r_1$ be a root of $z(r)$. We wish to find a non-zero vector $v = \begin{pmatrix} v_1 \\ v_2 \end{pmatrix}$ such that
$$Av = r_1 v \quad\text{or}\quad (A - r_1 I)v = 0,$$
with $I$ equal to the $2 \times 2$ identity matrix. That is, we wish to solve the system of equations
$$\begin{aligned} (a - r_1)v_1 + b v_2 &= 0 \\ c v_1 + (d - r_1)v_2 &= 0. \end{aligned}$$
This is a homogeneous system of linear equations. Since $r_1$ is a root of $z(r)$, we have $\det(A - r_1 I) = 0$, so the system has a non-zero solution, and the two equations must be multiples of each other. Thus, we only need to solve one of them.

Case 1: $b \neq 0$

Since $b \neq 0$, we can let $v_1 = 1$ and get $v_2 = \dfrac{r_1 - a}{b}$ from the first equation. Hence, the vector
$$v = \begin{pmatrix} 1 \\ \dfrac{r_1 - a}{b} \end{pmatrix}$$
is an eigenvector for $r_1$.

Note that this works whether the root is real or complex. In the complex case (with $A$ real), we get an associated complex eigenvector; there is no associated real eigenvector.

If the two roots of $z(r)$ are $r_1, r_2$ with both real and distinct, then the same formula works for each of them.

That is, an eigenvector for $r_1$ is $v_1 = \begin{pmatrix} 1 \\ \frac{r_1 - a}{b} \end{pmatrix}$ and one for $r_2$ is $v_2 = \begin{pmatrix} 1 \\ \frac{r_2 - a}{b} \end{pmatrix}$.

If the roots are real and equal, then this gives one eigenvector $v_1$. There are two possibilities that can occur: either all eigenvectors are non-zero multiples of $v_1$, or there is a second eigenvector $v_2$ which is not a multiple of $v_1$. In the latter case all non-zero vectors in $\mathbb{R}^2$ are in fact eigenvectors, so $A = r_1 I$; this requires $b = c = 0$, so it cannot happen in Case 1.

Case 2: $b = 0$ but $c \neq 0$.

In this case, we use the second equation in a similar way (letting $v_2 = 1$) and get the eigenvector
$$v_1 = \begin{pmatrix} \dfrac{r_1 - d}{c} \\ 1 \end{pmatrix}.$$
The cases of real and equal or complex eigenvalues are similar as well.

Examples.

1. $A = \begin{pmatrix} 3 & 8 \\ -1 & -6 \end{pmatrix}$

Characteristic polynomial: $z(r) = r^2 + 3r - 10 = (r + 5)(r - 2)$
Roots: $r = -5, 2$
Eigenvalues and eigenvectors:
$$r = -5,\quad v = \begin{pmatrix} 1 \\ \frac{r - 3}{8} \end{pmatrix} = \begin{pmatrix} 1 \\ \frac{-5 - 3}{8} \end{pmatrix} = \begin{pmatrix} 1 \\ -1 \end{pmatrix}$$
$$r = 2,\quad v = \begin{pmatrix} 1 \\ \frac{r - 3}{8} \end{pmatrix} = \begin{pmatrix} 1 \\ \frac{2 - 3}{8} \end{pmatrix} = \begin{pmatrix} 1 \\ -1/8 \end{pmatrix}$$

2. $A = \begin{pmatrix} 4 & -1 \\ 1 & 4 \end{pmatrix}$

Characteristic polynomial: $z(r) = r^2 - 8r + 17$

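Roots: $r = \dfrac{8 \pm \sqrt{64 - 68}}{2} = 4 \pm i$
Eigenvalues and eigenvectors:
$$r = 4 + i,\quad v = \begin{pmatrix} 1 \\ \frac{4 + i - 4}{-1} \end{pmatrix} = \begin{pmatrix} 1 \\ -i \end{pmatrix}$$
$$r = 4 - i,\quad v = \begin{pmatrix} 1 \\ \frac{4 - i - 4}{-1} \end{pmatrix} = \begin{pmatrix} 1 \\ i \end{pmatrix}$$

3. $A = \begin{pmatrix} 6 & 0 \\ 1 & 6 \end{pmatrix}$

Characteristic polynomial: $z(r) = r^2 - 12r + 36 = (r - 6)^2$
Roots: $6, 6$
Eigenvalues and eigenvectors (Case 2, since $b = 0$ and $c = 1$):
$$r = 6,\quad v = \begin{pmatrix} \frac{6 - 6}{1} \\ 1 \end{pmatrix} = \begin{pmatrix} 0 \\ 1 \end{pmatrix}$$
Since $A \neq 6I$, every eigenvector is a non-zero multiple of this one.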
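The case analysis for $2 \times 2$ matrices translates directly into code. Below is a minimal Python sketch (standard library `math` and `cmath` only) with hypothetical helper names: `eigenvalues_2x2` finds the roots of $z(r)$ with the quadratic formula, `eigenvector_2x2` builds $v$ by Case 1 or Case 2, and `residual` reports $\max_k |(Av - rv)_k|$ as a sanity check on the three examples. The diagonal case $b = c = 0$ is not covered by the notes; the branch handling it is an added assumption.

```python
import cmath
import math

def eigenvalues_2x2(a, b, c, d):
    """Roots of z(r) = r^2 - (a + d) r + (ad - bc) by the quadratic formula."""
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4 * det
    # Real square root when the roots are real, complex square root otherwise.
    s = math.sqrt(disc) if disc >= 0 else cmath.sqrt(disc)
    return (tr + s) / 2, (tr - s) / 2

def eigenvector_2x2(a, b, c, d, r):
    """Eigenvector for a root r of z(r), following Case 1 / Case 2 of the notes."""
    if b != 0:
        # Case 1: (a - r) v1 + b v2 = 0 with v1 = 1.
        return (1, (r - a) / b)
    if c != 0:
        # Case 2: c v1 + (d - r) v2 = 0 with v2 = 1.
        return ((r - d) / c, 1)
    # b = c = 0 (diagonal A) is not treated in the notes; assumption:
    # e1 is an eigenvector for r = a and e2 for r = d.
    return (1, 0) if abs(r - a) <= abs(r - d) else (0, 1)

def residual(a, b, c, d, r, v):
    """Largest entry of |A v - r v|; close to 0 for a genuine eigenpair."""
    v1, v2 = v
    return max(abs(a * v1 + b * v2 - r * v1), abs(c * v1 + d * v2 - r * v2))

# The three examples from the notes, written as (a, b, c, d).
for a, b, c, d in [(3, 8, -1, -6), (4, -1, 1, 4), (6, 0, 1, 6)]:
    for r in eigenvalues_2x2(a, b, c, d):
        v = eigenvector_2x2(a, b, c, d, r)
        print((a, b, c, d), r, v, residual(a, b, c, d, r, v))
```

For a repeated root, as in Example 3, the loop prints the same eigenpair twice; whether there is a second, independent eigenvector has to be decided separately, as discussed above.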