Bayesian Learning Handouts.pdf - Richard J. Povinelli

Bayesian Learning Handouts.pdf - Richard J. Povinelli

Bayesian Learning Handouts.pdf - Richard J. Povinelli

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

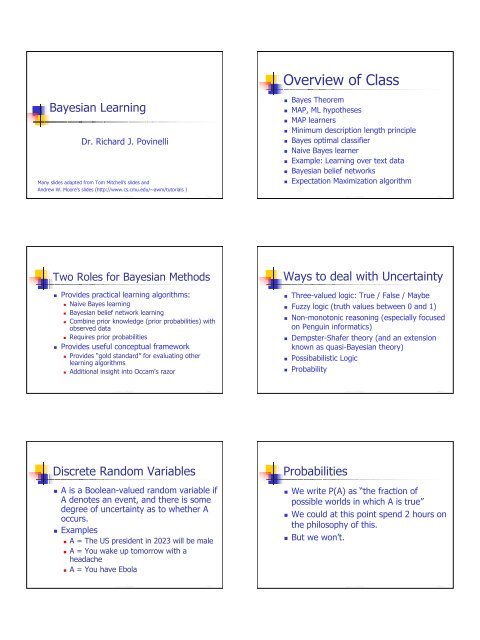

<strong>Bayesian</strong> <strong>Learning</strong><br />

Dr. <strong>Richard</strong> J. <strong>Povinelli</strong><br />

Many slides adapted from Tom Mitchell’s slides and<br />

Andrew W. Moore’s slides (http://www.cs.cmu.edu/~awm/tutorials )<br />

rev 1.0, 1/20/2003<br />

Two Roles for <strong>Bayesian</strong> Methods<br />

• Provides practical learning algorithms:<br />

• Naive Bayes learning<br />

• <strong>Bayesian</strong> belief network learning<br />

• Combine prior knowledge (prior probabilities) with observed data<br />

• Requires prior probabilities<br />

• Provides useful conceptual framework<br />

• Provides “gold standard” for evaluating other learning algorithms<br />

• Additional insight into Occam's razor<br />

Discrete Random Variables<br />

• A is a Boolean-valued random variable if A denotes an event, and there is some degree of uncertainty as to whether A occurs.<br />

• Examples<br />

• A = The US president in 2023 will be male<br />

• A = You wake up tomorrow with a headache<br />

• A = You have Ebola<br />

Overview of Class<br />

• Bayes Theorem<br />

• MAP, ML hypotheses<br />

• MAP learners<br />

• Minimum description length principle<br />

• Bayes optimal classifier<br />

• Naive Bayes learner<br />

• Example: <strong>Learning</strong> over text data<br />

• <strong>Bayesian</strong> belief networks<br />

• Expectation Maximization algorithm<br />

Ways to deal with Uncertainty<br />

• Three-valued logic: True / False / Maybe<br />

• Fuzzy logic (truth values between 0 and 1)<br />

• Non-monotonic reasoning (especially focused on Penguin informatics)<br />

• Dempster-Shafer theory (and an extension known as quasi-<strong>Bayesian</strong> theory)<br />

• Possibilistic Logic<br />

• Probability<br />

Probabilities<br />

• We write P(A) as “the fraction of possible worlds in which A is true”<br />

• We could at this point spend 2 hours on the philosophy of this.<br />

• But we won’t.<br />

Visualizing A<br />

[Figure: the event space of all possible worlds, with total area 1, divided into the worlds in which A is true and the worlds in which A is false.]<br />

Interpreting the axioms<br />

� 0

Theorems from the Axioms<br />

� Axioms<br />

� 0

Bayes Rule<br />

$$P(B \mid A) = \frac{P(A \wedge B)}{P(A)} = \frac{P(A \mid B)\,P(B)}{P(A)}$$

Bayes, Thomas (1763). An essay towards solving a problem in the doctrine of chances. Philosophical Transactions of the Royal Society of London, 53:370-418.<br />

Using Bayes Rule to Gamble<br />

The “Win” envelope has a dollar and four beads in it.<br />

The “Lose” envelope has three beads and no money.<br />

Interesting question: before deciding, you are allowed to see one bead drawn from the envelope.<br />

Suppose it’s black: How much should you pay?<br />

Suppose it’s red: How much should you pay?<br />

Choosing Hypotheses<br />

$$P(h \mid D) = \frac{P(D \mid h)\,P(h)}{P(D)}$$

Generally want the most probable hypothesis given the training data<br />

Maximum a posteriori hypothesis $h_{MAP}$:<br />

$$h_{MAP} = \operatorname*{argmax}_{h \in H} P(h \mid D) = \operatorname*{argmax}_{h \in H} \frac{P(D \mid h)\,P(h)}{P(D)} = \operatorname*{argmax}_{h \in H} P(D \mid h)\,P(h)$$

If we assume $P(h_i) = P(h_j)$, then we can simplify further and choose the Maximum likelihood (ML) hypothesis<br />

$$h_{ML} = \operatorname*{argmax}_{h_i \in H} P(D \mid h_i)$$

Using Bayes Rule to Gamble<br />

Beads shown: R R B B R B B<br />

The “Win” envelope has a dollar and four beads in it.<br />

The “Lose” envelope has three beads and no money.<br />

Trivial question: someone draws an envelope at random and offers to sell it to you. How much should you pay?<br />

Classroom Activity<br />

• Suppose it’s black: How much should you pay?<br />

• Suppose it’s red: How much should you pay?<br />

• With a partner figure out how you represent each of these. You have 5 minutes.<br />
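One way to represent the two questions is a direct application of Bayes rule. The sketch below is illustrative only: it assumes the bead row "R R B B R B B" splits as R R B B in the “Win” envelope and R B B in the “Lose” envelope, and that the envelope is picked with probability 1/2. The function name `posterior_win` is hypothetical.

```python
from fractions import Fraction

def posterior_win(bead, win_beads="RRBB", lose_beads="RBB", prior_win=Fraction(1, 2)):
    """Return P(Win | observed bead colour) using Bayes rule.

    The bead contents are an assumption about the slide's figure.
    """
    like_win = Fraction(win_beads.count(bead), len(win_beads))      # P(bead | Win)
    like_lose = Fraction(lose_beads.count(bead), len(lose_beads))   # P(bead | Lose)
    evidence = like_win * prior_win + like_lose * (1 - prior_win)   # P(bead)
    return like_win * prior_win / evidence

# The fair price is the expected payout: $1.00 * P(Win | bead).
price_black = posterior_win("B")
price_red = posterior_win("R")
print(price_black, price_red)
```

Under these assumptions a red bead makes the envelope worth more than the 50 cents of the trivial question, and a black bead makes it worth less.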

Bayes Theorem<br />

• Does patient have cancer or not?<br />

• A patient takes a lab test and the result comes back positive. The test returns a correct positive result in only 97% of the cases in which the disease is actually present, and a correct negative result in only 98% of the cases in which the disease is not present. Furthermore, 0.007 of the entire population have this cancer.<br />

• Probabilities<br />

• P(cancer) =<br />

• P(¬cancer) =<br />

• P(+ | cancer) =<br />

• P(- | cancer) =<br />

• P(+ | ¬cancer) =<br />

• P(- | ¬cancer) =<br />
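A minimal sketch of how the lab-test question can be worked with Bayes rule, using only the numbers stated on the slide (prior 0.007, correct positive rate 0.97, correct negative rate 0.98). The function and parameter names are illustrative, not part of the handout.

```python
def posterior_given_positive(prior, sensitivity, specificity):
    """Return P(cancer | +) from P(cancer), P(+ | cancer) and P(- | not cancer)."""
    false_positive_rate = 1.0 - specificity                  # P(+ | not cancer)
    evidence = sensitivity * prior + false_positive_rate * (1.0 - prior)  # P(+)
    return sensitivity * prior / evidence

p_cancer_given_pos = posterior_given_positive(prior=0.007, sensitivity=0.97, specificity=0.98)
print(round(p_cancer_given_pos, 3))
```

Even with a positive result, the posterior stays well below one half because the disease is rare, so the MAP hypothesis is still ¬cancer.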

Brute Force MAP Hypothesis Learner<br />

1. For each hypothesis h ∈ H, calculate the posterior probability<br />

$$P(h \mid D) = \frac{P(D \mid h)\,P(h)}{P(D)}$$

2. Output the hypothesis $h_{MAP}$ with the highest posterior probability<br />

$$h_{MAP} = \operatorname*{argmax}_{h \in H} P(h \mid D)$$

Relation to Concept <strong>Learning</strong> II<br />

• Assume fixed set of instances ⟨x1, …, xm⟩<br />

• Assume D is the set of classifications D = ⟨c(x1), …, c(xm)⟩<br />

• Choose P(D | h)<br />

• P(D | h) = 1 if h consistent with D<br />

• P(D | h) = 0 otherwise<br />

• Choose P(h) to have a uniform distribution<br />

• P(h) = 1/|H| for all h in H<br />

• Then,<br />

$$P(h \mid D) = \begin{cases} \dfrac{1}{|VS_{H,D}|} & \text{if } h \text{ is consistent with } D \\ 0 & \text{otherwise} \end{cases}$$
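The brute-force MAP learner with a uniform prior and a 0/1 likelihood can be sketched in a few lines. The hypothesis space here (integer threshold rules "x ≥ t") and the two training examples are hypothetical, chosen only so the version space is easy to see; the sketch also assumes at least one hypothesis is consistent with the data.

```python
from fractions import Fraction

def brute_force_map(hypotheses, data):
    """Compute P(h | D) for every h, then return the posterior and one MAP hypothesis."""
    prior = Fraction(1, len(hypotheses))                   # P(h) = 1/|H|
    unnormalised = {}
    for name, h in hypotheses.items():
        consistent = all(h(x) == label for x, label in data)
        likelihood = 1 if consistent else 0                # P(D | h) is 1 or 0
        unnormalised[name] = likelihood * prior
    evidence = sum(unnormalised.values())                  # P(D) = |VS| / |H|
    posterior = {name: p / evidence for name, p in unnormalised.items()}
    h_map = max(posterior, key=posterior.get)
    return posterior, h_map

# Hypothetical hypothesis space: h_t(x) is True when x >= t, for t = 0..5.
hypotheses = {t: (lambda x, t=t: x >= t) for t in range(6)}
data = [(1, False), (4, True)]
posterior, h_map = brute_force_map(hypotheses, data)
print(posterior, h_map)
```

Every consistent hypothesis receives posterior 1/|VS|, so every member of the version space is a MAP hypothesis and the `max` call simply returns one of them.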

Characterizing <strong>Learning</strong> Algorithms<br />

by Equivalent MAP Learners<br />


Relation to Concept <strong>Learning</strong> I<br />

• Consider our usual concept learning task<br />

• instance space X, hypothesis space H, training examples D<br />

• consider the FindS learning algorithm (outputs most specific hypothesis from the version space $VS_{H,D}$)<br />

• What would Bayes rule produce as the MAP hypothesis?<br />

• Does FindS output a MAP hypothesis?<br />

Evolution of Posterior Probabilities<br />


<strong>Learning</strong> A Real Valued Function<br />

• Consider any real-valued target function f<br />

• Training examples $\langle x_i, d_i \rangle$, where $d_i$ is a noisy training value<br />

• $d_i = f(x_i) + e_i$<br />

• $e_i$ is an independent random variable N(0, σ)<br />

• Then the maximum likelihood hypothesis $h_{ML}$ is the one that minimizes the sum of squared errors<br />

$$h_{ML} = \operatorname*{argmin}_{h \in H} \sum_{i=1}^{m} \left(d_i - h(x_i)\right)^2$$

<strong>Learning</strong> A Real Valued Function<br />

$$\begin{aligned}
h_{ML} &= \operatorname*{argmax}_{h \in H} p(D \mid h) \\
&= \operatorname*{argmax}_{h \in H} \prod_{i=1}^{m} p(d_i \mid h) \\
&= \operatorname*{argmax}_{h \in H} \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{1}{2}\left(\frac{d_i - h(x_i)}{\sigma}\right)^2}
\end{aligned}$$

<strong>Learning</strong> to Predict Probabilities<br />

• Consider predicting survival probability from patient data<br />

• Training examples $\langle x_i, d_i \rangle$, where $d_i$ is 1 or 0<br />

• Want to train neural network to output a probability given $x_i$ (not a 0 or 1)<br />

• In this case can show<br />

$$h_{ML} = \operatorname*{argmax}_{h \in H} \sum_{i=1}^{m} d_i \ln h(x_i) + (1 - d_i) \ln\left(1 - h(x_i)\right)$$

Weight update rule for a sigmoid unit:<br />

$$w_{jk} \leftarrow w_{jk} + \Delta w_{jk}$$

where<br />

$$\Delta w_{jk} = \eta \sum_{i=1}^{m} \left(d_i - h(x_i)\right) x_{ijk}$$
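The weight update rule can be tried on a single sigmoid unit. This is a sketch under simplifying assumptions: one unit instead of a network (so the weights carry a single index k), a hypothetical four-example training set with a constant bias input, and arbitrary values for the learning rate and number of passes.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x):
    """h(x): output of one sigmoid unit with weights w."""
    return sigmoid(sum(wk * xk for wk, xk in zip(w, x)))

def log_likelihood(w, xs, ds):
    """Sum of d_i ln h(x_i) + (1 - d_i) ln(1 - h(x_i))."""
    return sum(d * math.log(predict(w, x)) + (1 - d) * math.log(1 - predict(w, x))
               for x, d in zip(xs, ds))

def train_sigmoid_unit(xs, ds, eta=0.1, epochs=500):
    """Batch gradient ascent: w_k <- w_k + eta * sum_i (d_i - h(x_i)) x_ik."""
    w = [0.0] * len(xs[0])
    for _ in range(epochs):
        delta = [eta * sum((d - predict(w, x)) * x[k] for x, d in zip(xs, ds))
                 for k in range(len(w))]
        w = [wk + dk for wk, dk in zip(w, delta)]
    return w

# Hypothetical data: first input is a constant bias term, labels are 0 or 1.
xs = [(1.0, 0.0), (1.0, 1.0), (1.0, 2.0), (1.0, 3.0)]
ds = [0, 1, 0, 1]
w = train_sigmoid_unit(xs, ds)
print(w, log_likelihood(w, xs, ds))
```

Because the labels are not separable, the trained unit outputs intermediate probabilities rather than values pushed toward 0 or 1.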

Example<br />

• H = decision trees, D = training data labels<br />

• $L_{C_1}(h)$ is # bits to describe tree h<br />

• $L_{C_2}(D \mid h)$ is # bits to describe D given h<br />

• Note $L_{C_2}(D \mid h) = 0$ if examples classified perfectly by h. Need only describe exceptions<br />

• Hence $h_{MDL}$ trades off tree size for training errors<br />

Maximize Natural Log Instead<br />

$$\begin{aligned}
h_{ML} &= \operatorname*{argmax}_{h \in H} \ln \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{1}{2}\left(\frac{d_i - h(x_i)}{\sigma}\right)^2} \\
&= \operatorname*{argmax}_{h \in H} \sum_{i=1}^{m} \left( \ln \frac{1}{\sqrt{2\pi\sigma^2}} + \ln e^{-\frac{1}{2}\left(\frac{d_i - h(x_i)}{\sigma}\right)^2} \right) \\
&= \operatorname*{argmax}_{h \in H} \sum_{i=1}^{m} \left( \ln \frac{1}{\sqrt{2\pi\sigma^2}} - \frac{1}{2}\left(\frac{d_i - h(x_i)}{\sigma}\right)^2 \right) \\
&= \operatorname*{argmax}_{h \in H} \sum_{i=1}^{m} -\left(d_i - h(x_i)\right)^2 \\
&= \operatorname*{argmin}_{h \in H} \sum_{i=1}^{m} \left(d_i - h(x_i)\right)^2
\end{aligned}$$
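The equivalence between maximizing the Gaussian log-likelihood and minimizing squared error can be checked numerically. The sketch below assumes a hypothetical hypothesis space of lines through the origin, h(x) = s·x, with slopes on a grid, a made-up data set, and an arbitrary noise level σ = 0.5.

```python
import math

def log_likelihood(h, data, sigma):
    """ln of the product of Gaussian densities p(d_i | h)."""
    return sum(-0.5 * math.log(2 * math.pi * sigma ** 2)
               - 0.5 * ((d - h(x)) / sigma) ** 2
               for x, d in data)

def sum_squared_error(h, data):
    return sum((d - h(x)) ** 2 for x, d in data)

# Hypothetical noisy samples of a roughly linear target.
data = [(0.0, 0.1), (1.0, 1.9), (2.0, 4.2), (3.0, 5.8)]

# Hypothetical hypothesis space: slopes 0.0, 0.1, ..., 4.0.
hypotheses = {s / 10: (lambda x, s=s / 10: s * x) for s in range(41)}

h_ml = max(hypotheses, key=lambda s: log_likelihood(hypotheses[s], data, sigma=0.5))
h_lse = min(hypotheses, key=lambda s: sum_squared_error(hypotheses[s], data))
print(h_ml, h_lse)
```

Both searches select the same slope, since the log-likelihood is a constant minus the squared error scaled by 1/(2σ²).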

Minimum Description Length Principle<br />

• Occam's razor: prefer the shortest hypothesis<br />

• MDL: prefer the hypothesis h that minimizes<br />

$$h_{MDL} = \operatorname*{argmin}_{h \in H} L_{C_1}(h) + L_{C_2}(D \mid h)$$

where $L_C(x)$ is the description length of x under encoding C<br />

MAP MDL Principle I<br />

$$\begin{aligned}
h_{MAP} &= \operatorname*{argmax}_{h \in H} P(D \mid h)\,P(h) \\
&= \operatorname*{argmax}_{h \in H} \log_2 P(D \mid h) + \log_2 P(h) \\
&= \operatorname*{argmin}_{h \in H} -\log_2 P(D \mid h) - \log_2 P(h)
\end{aligned}$$

MAP MDL Principle II<br />

• Interesting fact from information theory:<br />

• The optimal (shortest expected coding length) code for an event with probability p is $-\log_2 p$ bits.<br />

• So interpret equation on last slide:<br />

• $-\log_2 P(h)$ is length of h under optimal code<br />

• $-\log_2 P(D \mid h)$ is length of D given h under optimal code<br />

• Prefer the hypothesis that minimizes<br />

• length(h) + length(misclassifications)<br />
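A small numeric illustration of why the MAP and MDL choices coincide under optimal codes. The three hypotheses and their probabilities are invented for the example; only the $-\log_2 p$ code length comes from the slide.

```python
import math

def code_length_bits(p):
    """Optimal code length, in bits, for an event of probability p."""
    return -math.log2(p)

# Hypothetical (P(h), P(D | h)) pairs for three decision trees.
candidates = {
    "small_tree": (0.5, 0.01),
    "medium_tree": (0.25, 0.08),
    "large_tree": (0.03125, 0.25),
}

# MAP: maximize P(D | h) P(h).
h_map = max(candidates, key=lambda h: candidates[h][1] * candidates[h][0])

# MDL: minimize length(h) + length(D given h).
h_mdl = min(candidates,
            key=lambda h: code_length_bits(candidates[h][0]) + code_length_bits(candidates[h][1]))
print(h_map, h_mdl)
```

In this made-up case the medium tree wins on both criteria: the small tree is cheap to describe but leaves many exceptions, and the large tree fits well but costs too many bits itself.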

Classroom Activity<br />

• Consider:<br />

• Three possible hypotheses:<br />

• $P(h_1 \mid D) = 0.4$, $P(h_2 \mid D) = 0.3$, $P(h_3 \mid D) = 0.3$<br />

• Given new instance x,<br />

• $h_1(x) = +$, $h_2(x) = -$, $h_3(x) = -$<br />

• What's most probable classification of x?<br />

• With a partner figure out how you represent each of these. You have 5 minutes.<br />

Gibbs Classifier<br />

• Bayes optimal classifier provides best result, but can be expensive if many hypotheses.<br />

• Gibbs algorithm:<br />

1. Choose one hypothesis at random, according to P(h | D)<br />

2. Use this to classify new instance<br />

• Surprising fact: Assume target concepts are drawn at random from H according to priors on H. Then:<br />

• $E[error_{Gibbs}] \le 2\,E[error_{BayesOptimal}]$<br />

• Suppose correct, uniform prior distribution over H, then<br />

• Pick any hypothesis from VS, with uniform probability<br />

• Its expected error no worse than twice Bayes optimal<br />

Most Probable Classification<br />

of New Instances<br />

• So far we've sought the most probable hypothesis given the data D (i.e., $h_{MAP}$)<br />

• Given new instance x, what is its most probable classification?<br />

• $h_{MAP}(x)$ is not the most probable classification!<br />

Bayes Optimal Classifier<br />

Bayes optimal classification:<br />

$$\operatorname*{argmax}_{v_j \in V} \sum_{h_i \in H} P(v_j \mid h_i)\,P(h_i \mid D)$$

Example:<br />

$P(h_1 \mid D) = .4,\quad P(- \mid h_1) = 0,\quad P(+ \mid h_1) = 1$<br />

$P(h_2 \mid D) = .3,\quad P(- \mid h_2) = 1,\quad P(+ \mid h_2) = 0$<br />

$P(h_3 \mid D) = .3,\quad P(- \mid h_3) = 1,\quad P(+ \mid h_3) = 0$<br />

therefore<br />

$$\sum_{h_i \in H} P(+ \mid h_i)\,P(h_i \mid D) = .4, \qquad \sum_{h_i \in H} P(- \mid h_i)\,P(h_i \mid D) = .6$$

and<br />

$$\operatorname*{argmax}_{v_j \in V} \sum_{h_i \in H} P(v_j \mid h_i)\,P(h_i \mid D) = -$$
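The three-hypothesis example can be reproduced directly. The sketch below uses the posteriors and predictions from the slide; the dictionary layout and the function name `bayes_optimal` are illustrative choices.

```python
def bayes_optimal(posteriors, predictions, values=("+", "-")):
    """Return the value v maximizing sum_h P(v | h) P(h | D), plus all the sums."""
    scores = {v: sum(predictions[h][v] * posteriors[h] for h in posteriors)
              for v in values}
    return max(scores, key=scores.get), scores

posteriors = {"h1": 0.4, "h2": 0.3, "h3": 0.3}               # P(h_i | D)
predictions = {                                              # P(v | h_i)
    "h1": {"+": 1.0, "-": 0.0},
    "h2": {"+": 0.0, "-": 1.0},
    "h3": {"+": 0.0, "-": 1.0},
}

label, scores = bayes_optimal(posteriors, predictions)
h_map = max(posteriors, key=posteriors.get)
print(label, scores, h_map)
```

The MAP hypothesis h1 predicts +, yet the weighted vote over all hypotheses favors the negative class, which is the point of the example.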

Naive Bayes Classifier I<br />

• Along with decision trees, neural networks, nearest neighbor, one of the most practical learning methods.<br />

• When to use<br />

• Moderate or large training set available<br />

• Attributes that describe instances are conditionally independent given classification<br />

• Successful applications:<br />

• Diagnosis<br />

• Classifying text documents<br />

Naive Bayes Classifier II<br />

• Assume target function f: X → V, where each instance x described by attributes ⟨a1, a2, …, an⟩.<br />

Most probable value of f(x) is:<br />

$$\begin{aligned}
v_{MAP} &= \operatorname*{argmax}_{v_j \in V} P(v_j \mid a_1, a_2, \ldots, a_n) \\
&= \operatorname*{argmax}_{v_j \in V} \frac{P(a_1, a_2, \ldots, a_n \mid v_j)\,P(v_j)}{P(a_1, a_2, \ldots, a_n)} \\
&= \operatorname*{argmax}_{v_j \in V} P(a_1, a_2, \ldots, a_n \mid v_j)\,P(v_j)
\end{aligned}$$

Naive Bayes assumption:<br />

$$P(a_1, a_2, \ldots, a_n \mid v_j) = \prod_{i} P(a_i \mid v_j)$$

which gives Naive Bayes classifier:<br />

$$v_{NB} = \operatorname*{argmax}_{v_j \in V} P(v_j) \prod_{i} P(a_i \mid v_j)$$

Naive Bayes: Example<br />

• Consider PlayTennis again, and new instance<br />

• ⟨Outlk = sun, Temp = cool, Humid = high, Wind = strong⟩<br />

• Want to compute:<br />

$$v_{NB} = \operatorname*{argmax}_{v_j \in V} P(v_j) \prod_{i} P(a_i \mid v_j)$$

$P(y)\,P(sun \mid y)\,P(cool \mid y)\,P(high \mid y)\,P(strong \mid y) = 0.005$<br />

$P(n)\,P(sun \mid n)\,P(cool \mid n)\,P(high \mid n)\,P(strong \mid n) = 0.021$<br />

$\rightarrow v_{NB} = n$<br />
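The two products can be recomputed from relative frequencies. The handout does not reproduce the PlayTennis table, so the counts below are an assumption: they are taken from the 14-example table in Mitchell's textbook (9 "yes" days, 5 "no" days).

```python
from fractions import Fraction as F

# Assumed estimates from Mitchell's 14-example PlayTennis table.
prior = {"yes": F(9, 14), "no": F(5, 14)}
conditional = {
    "yes": {"sun": F(2, 9), "cool": F(3, 9), "high": F(3, 9), "strong": F(3, 9)},
    "no": {"sun": F(3, 5), "cool": F(1, 5), "high": F(4, 5), "strong": F(3, 5)},
}

def naive_bayes_score(v, attributes):
    """P(v) times the product of P(a_i | v) over the observed attribute values."""
    score = prior[v]
    for a in attributes:
        score *= conditional[v][a]
    return score

instance = ["sun", "cool", "high", "strong"]
scores = {v: naive_bayes_score(v, instance) for v in prior}
v_nb = max(scores, key=scores.get)

# Normalizing the two scores gives the estimated posterior of the winner.
posterior_no = scores["no"] / sum(scores.values())
print({v: float(s) for v, s in scores.items()}, v_nb, float(posterior_no))
```

With these counts the unnormalized scores round to the 0.005 and 0.021 shown on the slide.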

Naive Bayes: Subtleties II<br />

2. What if none of the training instances with target value $v_j$ have attribute value $a_i$? Then<br />

• $\hat{P}(a_i \mid v_j) = 0$, and ...<br />

• $\hat{P}(v_j) \prod_i \hat{P}(a_i \mid v_j) = 0$<br />

• Typical solution is <strong>Bayesian</strong> estimate for $\hat{P}(a_i \mid v_j)$<br />

$$\hat{P}(a_i \mid v_j) \leftarrow \frac{n_c + mp}{n + m}$$

• where<br />

• n is number of training examples for which $v = v_j$<br />

• $n_c$ is number of examples for which $v = v_j$ and $a = a_i$<br />

• p is prior estimate for $\hat{P}(a_i \mid v_j)$<br />

• m is weight given to prior (i.e. number of “virtual” examples)<br />
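The estimate $(n_c + mp)/(n + m)$ is a one-line function. The counts in the usage lines are hypothetical, picked to show what happens when an attribute value never occurs with a class.

```python
from fractions import Fraction

def m_estimate(n_c, n, p, m):
    """Smoothed estimate of P(a_i | v_j): (n_c + m p) / (n + m)."""
    return (n_c + m * p) / (n + m)

# Hypothetical case: the value was never seen among 5 examples of the class,
# the attribute has 3 possible values (uniform prior p = 1/3), and m = 3.
smoothed = m_estimate(n_c=0, n=5, p=Fraction(1, 3), m=3)

# With m = 0 the estimate falls back to the raw relative frequency n_c / n.
unsmoothed = m_estimate(n_c=0, n=5, p=Fraction(1, 3), m=0)
print(smoothed, unsmoothed)
```

The smoothed value is small but nonzero, so a single unseen attribute value no longer forces the whole product to 0.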

Naive Bayes Algorithm<br />

NaiveBayesLearn(examples)<br />

For each target value $v_j$<br />

$\hat{P}(v_j)$ ← estimate $P(v_j)$<br />

For each attribute value $a_i$ of each attribute a<br />

$\hat{P}(a_i \mid v_j)$ ← estimate $P(a_i \mid v_j)$<br />

ClassifyNewInstance(x)<br />

$$v_{NB} = \operatorname*{argmax}_{v_j \in V} \hat{P}(v_j) \prod_{a_i \in x} \hat{P}(a_i \mid v_j)$$

Naive Bayes: Subtleties I<br />

1. Conditional independence assumption is often violated<br />

$$P(a_1, a_2, \ldots, a_n \mid v_j) = \prod_{i} P(a_i \mid v_j)$$

• ...but it works surprisingly well anyway. Note that the estimated posteriors $\hat{P}(v_j \mid x)$ do not need to be correct; need only that<br />

$$\operatorname*{argmax}_{v_j \in V} \hat{P}(v_j) \prod_{i} \hat{P}(a_i \mid v_j) = \operatorname*{argmax}_{v_j \in V} P(v_j)\,P(a_1, \ldots, a_n \mid v_j)$$

• see [Domingos & Pazzani, 1996] for analysis<br />

• Naive Bayes posteriors often unrealistically close to 1 or 0<br />

<strong>Learning</strong> to Classify Text I<br />

• Why?<br />

• Learn which news articles are of interest<br />

• Learn to classify web pages by topic<br />

• Naive Bayes is among most effective algorithms<br />

• What attributes shall we use to represent text documents?<br />

<strong>Learning</strong> to Classify Text II<br />

• Target concept Interesting? : Document → {+, -}<br />

1. Represent each document by vector of words<br />

• one attribute per word position in document<br />

2. <strong>Learning</strong>: Use training examples to estimate<br />

• P(+)<br />

• P(-)<br />

• P(doc | +)<br />

• P(doc | -)<br />

LearnNaiveBayesText (Examples, V)<br />

1. Collect all words and other tokens that occur in Examples<br />

• Vocabulary ← all distinct words and other tokens in Examples<br />

2. Calculate the required $P(v_j)$ and $P(w_k \mid v_j)$ probability terms<br />

3. For each target value $v_j$ in V do<br />

• $docs_j$ ← subset of Examples for which the target value is $v_j$<br />

• $P(v_j)$ ← $|docs_j|$ / |Examples|<br />

• $Text_j$ ← a single document created by concatenating all members of $docs_j$<br />

• n ← total number of words in $Text_j$ (counting duplicate words multiple times)<br />

• for each word $w_k$ in Vocabulary<br />

• $n_k$ ← number of times word $w_k$ occurs in $Text_j$<br />

• $P(w_k \mid v_j)$ ← $(n_k + 1) / (n + |Vocabulary|)$<br />

Twenty NewsGroups<br />

• Given 1000 training documents from each group<br />

• Learn to classify new documents according to which newsgroup it came from<br />

• comp.graphics, comp.os.ms-windows.misc, comp.sys.ibm.pc.hardware, comp.sys.mac.hardware, comp.windows.x<br />

• misc.forsale, rec.autos, rec.motorcycles, rec.sport.baseball, rec.sport.hockey<br />

• alt.atheism, soc.religion.christian, talk.religion.misc, talk.politics.mideast, talk.politics.misc, talk.politics.guns<br />

• sci.space, sci.crypt, sci.electronics, sci.med<br />

• Naive Bayes: 89% classification accuracy<br />

<strong>Learning</strong> to Classify Text III<br />

• Naive Bayes conditional independence assumption<br />

$$P(doc \mid v_j) = \prod_{i=1}^{length(doc)} P(a_i = w_k \mid v_j)$$

• where $P(a_i = w_k \mid v_j)$ is probability that word in position i is $w_k$, given $v_j$<br />

• one more assumption:<br />

• $P(a_i = w_k \mid v_j) = P(a_m = w_k \mid v_j)$, for all i, m<br />

ClassifyNaiveBayesText(Doc)<br />

• positions ← all word positions in Doc that contain tokens found in Vocabulary<br />

• Return $v_{NB}$, where<br />

$$v_{NB} = \operatorname*{argmax}_{v_j \in V} P(v_j) \prod_{i \in positions} P(a_i \mid v_j)$$
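The learning and classification procedures for text can be sketched compactly. Two departures from the slides are deliberate and should be read as assumptions: documents are passed in as pre-tokenized word lists, and the classifier sums log probabilities instead of multiplying probabilities (same argmax, but it avoids underflow on long documents). The four training documents are invented.

```python
import math
from collections import Counter

def learn_naive_bayes_text(examples):
    """examples: list of (tokens, label). Returns vocabulary, P(v_j), P(w_k | v_j)."""
    vocabulary = {w for tokens, _ in examples for w in tokens}
    priors, word_probs = {}, {}
    for v in {label for _, label in examples}:
        docs = [tokens for tokens, label in examples if label == v]
        priors[v] = len(docs) / len(examples)                 # |docs_j| / |Examples|
        counts = Counter(w for tokens in docs for w in tokens)
        n = sum(counts.values())                              # words in Text_j
        word_probs[v] = {w: (counts[w] + 1) / (n + len(vocabulary))
                         for w in vocabulary}
    return vocabulary, priors, word_probs

def classify_naive_bayes_text(doc, vocabulary, priors, word_probs):
    """Return the label with the highest log P(v_j) + sum of log P(a_i | v_j)."""
    positions = [w for w in doc if w in vocabulary]           # skip unknown tokens
    def log_score(v):
        return math.log(priors[v]) + sum(math.log(word_probs[v][w]) for w in positions)
    return max(priors, key=log_score)

# Hypothetical training set: "+" marks interesting documents.
examples = [
    ("great goal by the kings".split(), "+"),
    ("kings trade goaltender".split(), "+"),
    ("graphics card for sale".split(), "-"),
    ("new graphics driver".split(), "-"),
]
model = learn_naive_bayes_text(examples)
print(classify_naive_bayes_text("kings goal tonight".split(), *model))
```

The word "tonight" is not in the vocabulary, so it is ignored at classification time, matching the definition of positions.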

Article from rec.sport.hockey<br />

• Path: cantaloupe.srv.cs.cmu.edu!dasnews.harvard.edu!ogicse!uwm.edu<br />

• From: xxx@yyy.zzz.edu (John Doe)<br />

• Subject: Re: This year's biggest and worst (opinion)...<br />

• Date: 5 Apr 93 09:53:39 GMT<br />

• I can only comment on the Kings, but the most obvious candidate for pleasant surprise is Alex Zhitnik. He came highly touted as a defensive defenseman, but he's clearly much more than that. Great skater and hard shot (though wish he were more accurate). In fact, he pretty much allowed the Kings to trade away that huge defensive liability Paul Coffey. Kelly Hrudey is only the biggest disappointment if you thought he was any good to begin with. But, at best, he's only a mediocre goaltender. A better choice would be Tomas Sandstrom, though not through any fault of his own, but because some thugs in Toronto decided<br />

<strong>Learning</strong> Curve for 20 Newsgroups<br />

Accuracy vs. Training set size (1/3 withheld for test)<br />


Conditional Independence I<br />

• Definition: X is conditionally independent of Y given Z if the probability distribution governing X is independent of the value of Y given the value of Z; that is, if<br />

• $(\forall x_i, y_j, z_k)\; P(X = x_i \mid Y = y_j, Z = z_k) = P(X = x_i \mid Z = z_k)$<br />

• more compactly, we write<br />

• P(X | Y, Z) = P(X | Z)<br />

<strong>Bayesian</strong> Belief Network I<br />

• Network represents a set of conditional independence assertions:<br />

• Each node is asserted to be conditionally independent of its nondescendants, given its immediate predecessors.<br />

• Directed acyclic graph<br />

<strong>Bayesian</strong> Belief Networks<br />

• Interesting because<br />

• Naive Bayes assumption of conditional independence too restrictive<br />

• But it's intractable without some such assumptions<br />

• <strong>Bayesian</strong> belief networks describe conditional independence among subsets of variables<br />

• Allows combining prior knowledge about (in)dependencies among variables with observed training data<br />

• (Also called Bayes nets)<br />

Conditional Independence II<br />

• Example: Thunder is conditionally independent of Rain, given Lightning<br />

• P(Thunder | Rain, Lightning) = P(Thunder | Lightning)<br />

• Naive Bayes uses conditional independence to justify<br />

• P(X, Y | Z) = P(X | Y, Z) P(Y | Z)<br />

• P(X, Y | Z) = P(X | Z) P(Y | Z)<br />

<strong>Bayesian</strong> Belief Network II<br />

• Represents joint probability distribution over all variables<br />

• e.g., P(Storm, BusTourGroup, …, ForestFire)<br />

• in general, $P(y_1, \ldots, y_n) = \prod_{i=1}^{n} P(y_i \mid Parents(Y_i))$ where $Parents(Y_i)$ denotes immediate predecessors of $Y_i$ in graph<br />

• so, joint distribution is fully defined by graph, plus the $P(y_i \mid Parents(Y_i))$<br />
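The product formula for the joint distribution can be illustrated on a tiny network. Everything specific below is hypothetical: a three-node chain Storm → Lightning → Thunder, Boolean variables, and made-up conditional probability table entries.

```python
from itertools import product

# Hypothetical graph: each variable maps to the tuple of its parents.
parents = {"Storm": (), "Lightning": ("Storm",), "Thunder": ("Lightning",)}

# Hypothetical tables: P(variable = True | parent values).
cpt = {
    "Storm": {(): 0.2},
    "Lightning": {(True,): 0.6, (False,): 0.05},
    "Thunder": {(True,): 0.9, (False,): 0.01},
}

def joint_probability(assignment):
    """P(y_1, ..., y_n) as the product of P(y_i | Parents(Y_i))."""
    prob = 1.0
    for var, pars in parents.items():
        p_true = cpt[var][tuple(assignment[p] for p in pars)]
        prob *= p_true if assignment[var] else 1.0 - p_true
    return prob

all_true = joint_probability({"Storm": True, "Lightning": True, "Thunder": True})
total = sum(joint_probability(dict(zip(parents, values)))
            for values in product([True, False], repeat=len(parents)))
print(all_true, total)
```

Summing the product over all eight assignments gives 1, which is a quick sanity check that the graph plus the tables define a proper joint distribution.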

Inference in <strong>Bayesian</strong> Networks<br />

• How can one infer the (probabilities of) values of one or more network variables, given observed values of others?<br />

• Bayes net contains all information needed for this inference<br />

• If only one variable with unknown value, easy to infer it<br />

• In general case, problem is NP-hard<br />

• In practice, can succeed in many cases<br />

• Exact inference methods work well for some network structures<br />

• Monte Carlo methods “simulate” the network randomly to calculate approximate solutions<br />

<strong>Learning</strong> Bayes Nets<br />

• Suppose structure known, variables partially observable<br />

• e.g., observe ForestFire, Storm, BusTourGroup, Thunder, but not Lightning, Campfire ...<br />

• Similar to training neural network with hidden units<br />

• In fact, can learn network conditional probability tables using gradient ascent!<br />

• Converge to network h that (locally) maximizes P(D | h)<br />

More on <strong>Learning</strong> Bayes Nets<br />

• EM algorithm can also be used. Repeatedly:<br />

1. Calculate probabilities of unobserved variables, assuming h<br />

2. Calculate new $w_{ijk}$ to maximize E[ln P(D | h)] where D now includes both observed and (calculated probabilities of) unobserved variables<br />

• When structure unknown...<br />

• Algorithms use greedy search to add/subtract edges and nodes<br />

• Active research topic<br />

<strong>Learning</strong> of <strong>Bayesian</strong> Networks<br />

• Several variants of this learning task<br />

• Network structure might be known or unknown<br />

• Training examples might provide values of all network variables, or just some<br />

• If structure known and observe all variables<br />

• Then it's as easy as training a Naive Bayes classifier<br />

Gradient Ascent for Bayes Nets<br />

• Let $w_{ijk}$ denote one entry in the conditional probability table for variable $Y_i$ in the network<br />

• $w_{ijk} = P(Y_i = y_{ij} \mid Parents(Y_i) = \text{the list } u_{ik} \text{ of values})$<br />

• e.g., if $Y_i$ = Campfire, then $u_{ik}$ might be ⟨Storm = T, BusTourGroup = F⟩<br />

• Perform gradient ascent by repeatedly<br />

1. update all $w_{ijk}$ using training data D<br />

$$w_{ijk} \leftarrow w_{ijk} + \eta \sum_{d \in D} \frac{P_h(y_{ij}, u_{ik} \mid d)}{w_{ijk}}$$

2. then, renormalize the $w_{ijk}$ to assure<br />

• $\sum_j w_{ijk} = 1$<br />

• $0 \le w_{ijk} \le 1$<br />

Summary: <strong>Bayesian</strong> Belief Networks<br />

• Combine prior knowledge with observed data<br />

• Impact of prior knowledge (when correct!) is to lower the sample complexity<br />

• Active research area<br />

• Extend from boolean to real-valued variables<br />

• Parameterized distributions instead of tables<br />

• Extend to first-order instead of propositional systems<br />

• More effective inference methods<br />

Expectation Maximization (EM)<br />

• When to use:<br />

• Data is only partially observable<br />

• Unsupervised clustering (target value unobservable)<br />

• Supervised learning (some instance attributes unobservable)<br />

• Some uses:<br />

• Train <strong>Bayesian</strong> Belief Networks<br />

• Unsupervised clustering (AUTOCLASS)<br />

• <strong>Learning</strong> Hidden Markov Models<br />

EM for Estimating k Means I<br />

• Given:<br />

• Instances from X generated by mixture of k Gaussian distributions<br />

• Unknown means of the k Gaussians<br />

• Don't know which instance $x_i$ was generated by which Gaussian<br />

• Determine:<br />

• Maximum likelihood estimates of $\langle \mu_1, \ldots, \mu_k \rangle$<br />

• Think of full description of each instance as $y_i = \langle x_i, z_{i1}, z_{i2} \rangle$, where<br />

• $z_{ij}$ is 1 if $x_i$ generated by the j-th Gaussian<br />

• $x_i$ observable<br />

• $z_{ij}$ unobservable<br />

EM for Estimating k Means III<br />

M step:<br />

• Calculate a new maximum likelihood hypothesis $h' = \langle \mu'_1, \mu'_2 \rangle$, assuming the value taken on by each hidden variable $z_{ij}$ is its expected value $E[z_{ij}]$ calculated in the E step. Replace $h = \langle \mu_1, \mu_2 \rangle$ by $h' = \langle \mu'_1, \mu'_2 \rangle$.<br />

$$\mu_j \leftarrow \frac{\sum_{i=1}^{m} E[z_{ij}]\, x_i}{\sum_{i=1}^{m} E[z_{ij}]}$$

Generating Data from Mixture<br />

of k Gaussians<br />

• Each instance x generated by<br />

• Choosing one of the k Gaussians with uniform probability<br />

• Generating an instance at random according to that Gaussian<br />

EM for Estimating k Means II<br />

• EM Algorithm: Pick random initial $h = \langle \mu_1, \mu_2 \rangle$, then iterate<br />

E step:<br />

• Calculate the expected value $E[z_{ij}]$ of each hidden variable $z_{ij}$, assuming the current hypothesis $h = \langle \mu_1, \mu_2 \rangle$ holds<br />

$$E[z_{ij}] = \frac{p(x = x_i \mid \mu = \mu_j)}{\sum_{n=1}^{2} p(x = x_i \mid \mu = \mu_n)} = \frac{e^{-\frac{1}{2\sigma^2}(x_i - \mu_j)^2}}{\sum_{n=1}^{2} e^{-\frac{1}{2\sigma^2}(x_i - \mu_n)^2}}$$
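The E step, alternated with the M step update $\mu_j \leftarrow \sum_i E[z_{ij}]\,x_i / \sum_i E[z_{ij}]$, can be sketched for two Gaussians. Assumptions in this sketch: one-dimensional instances, a known shared σ = 1, a fixed number of iterations instead of a convergence test, a fixed (not random) starting point so the run is repeatable, and an invented data set.

```python
import math

def em_two_means(xs, mu, sigma=1.0, iterations=50):
    """Estimate the means of a two-Gaussian mixture with known, equal variance."""
    mu = list(mu)
    for _ in range(iterations):
        # E step: E[z_ij] = responsibility of Gaussian j for instance x_i.
        expectations = []
        for x in xs:
            weights = [math.exp(-(x - m) ** 2 / (2 * sigma ** 2)) for m in mu]
            total = sum(weights)
            expectations.append([w / total for w in weights])
        # M step: each mean becomes the E[z_ij]-weighted average of the instances.
        mu = [sum(e[j] * x for e, x in zip(expectations, xs)) /
              sum(e[j] for e in expectations)
              for j in range(len(mu))]
    return mu

# Hypothetical instances clustered around -2 and +2.
xs = [-2.1, -1.9, -2.0, 1.9, 2.0, 2.1]
mu = em_two_means(xs, mu=(-1.0, 1.0))
print(mu)
```

Starting from means that are only roughly right, the estimates move to the centers of the two clusters; a poor starting point can instead settle in a different local maximum.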

EM Algorithm<br />

• Converges to local maximum likelihood h and provides estimates of hidden variables $z_{ij}$<br />

• In fact, local maximum in E[ln P(Y | h)]<br />

• Y is complete (observable plus unobservable variables) data<br />

• Expected value is taken over possible values of unobserved variables in Y<br />

General EM Problem<br />

• Given:<br />

• Observed data $X = \{x_1, \ldots, x_m\}$<br />

• Unobserved data $Z = \{z_1, \ldots, z_m\}$<br />

• Parameterized probability distribution P(Y | h), where<br />

• $Y = \{y_1, \ldots, y_m\}$ is the full data, $y_i = x_i \cup z_i$<br />

• h are the parameters<br />

• Determine:<br />

• h that (locally) maximizes E[ln P(Y | h)]<br />

• Many uses:<br />

• Train <strong>Bayesian</strong> belief networks<br />

• Unsupervised clustering (e.g., k means)<br />

• Hidden Markov Models<br />

Summary Points<br />

• Bayes Theorem<br />

• MAP, ML hypotheses<br />

• MAP learners<br />

• Minimum description length principle<br />

• Bayes optimal classifier<br />

• Naive Bayes learner<br />

• Example: <strong>Learning</strong> over text data<br />

• <strong>Bayesian</strong> belief networks<br />

• Expectation Maximization algorithm<br />

General EM Method<br />

• Define likelihood function Q(h' | h) which calculates Y = X ∪ Z using observed X and current parameters h to estimate Z<br />

• Q(h' | h) ← E[ln P(Y | h') | h, X]<br />

EM Algorithm:<br />

Estimation (E) step: Calculate Q(h' | h) using the current hypothesis h and the observed data X to estimate the probability distribution over Y.<br />

• Q(h' | h) ← E[ln P(Y | h') | h, X]<br />

Maximization (M) step: Replace hypothesis h by the hypothesis h' that maximizes this Q function.<br />

• $h \leftarrow \operatorname*{argmax}_{h'} Q(h' \mid h)$<br />