Policy Gradient Algorithms

Policy Gradient Algorithms

Policy Gradient Algorithms

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

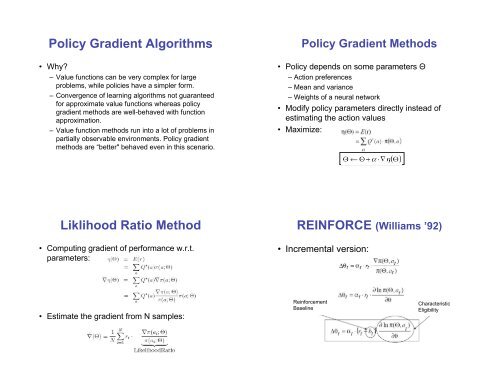

<strong>Policy</strong> <strong>Gradient</strong> <strong>Algorithms</strong>• Why?– Value functions can be very complex for largeproblems, while policies have a simpler form.– Convergence of learning algorithms not guaranteedfor approximate value functions whereas policygradient methods are well-behaved with functionapproximation.– Value function methods run into a lot of problems inpartially observable environments. <strong>Policy</strong> gradientmethods are “better" behaved even in this scenario.<strong>Policy</strong> <strong>Gradient</strong> Methods• <strong>Policy</strong> depends on some parameters !– Action preferences– Mean and variance– Weights of a neural network• Modify policy parameters directly instead ofestimating the action values• Maximize:Liklihood Ratio Method• Computing gradient of performance w.r.t.parameters:REINFORCE (Williams ’92)• Incremental version:• Estimate the gradient from N samples:ReinforcementBaselineCharacteristicEligibility

Special case – Generalized L R-I• Consider binary bandit problems witharbitrary rewardsReinforcement Comparison• Set baseline to average of observedrewards• Softmax action selectionReinforcement Comparison contd.Computation ofcharacteristic eligibility forsoftmax action selectionContinuous Actions• Use a Gaussian distribution to selectactions• For suitable choice of parameters:

MC <strong>Policy</strong> <strong>Gradient</strong>• Samples are entire trajectoriess 0 , a 0 , r 1 , s 1 , a 1 , . . . , s T• Evaluation criterion is the return along the path,instead of immediate rewards• The gradient estimation equation becomes:where, R i (s 0 ) is the return starting from state s 0and p i (s 0 ;!) is the probability of i th trajectory,starting from s 0 and using policy given by !.MC <strong>Policy</strong> <strong>Gradient</strong> contd.• The “likelihood ratio" in this case evaluatesto:• Estimate depends on starting state s 0 .One way to address this problem is toassume a fixed initial state.• More common assumption is to use theaverage reward formulation.(1)MC <strong>Policy</strong> <strong>Gradient</strong> contd.• Recall:– Maximize average reward per time step:– Unichain assumption: One set of “recurrent"class of states– ! " is then state independent– Recurrent class: Starting from any state in theclass, the probability of visiting all the states inthe class is 1.MC <strong>Policy</strong> <strong>Gradient</strong> contd.• Assumption 1: For every policy underconsideration, the Unichain assumption issatisfied, with the same set of recurrentstates.• Pick one recurrent state i*. Trajectories aredefined as starting and ending at thisrecurrent state.• Assumption 2: Bounded rewards.

Incremental UpdateSimple MC <strong>Policy</strong> <strong>Gradient</strong> Algorithm• We can incrementally compute the summation inEquation 1, over one trajectory as follows:• z T is known as an eligibility trace. Recall thecharacteristic eligibility term from REINFORCE:• z T keeps track of this eligibility over time, henceis called a trace.Adjust ! using a simple stochastic gradient ascent rule:where " is a positive step size parameter.Simple MC <strong>Policy</strong> <strong>Gradient</strong> Algorithm contd.• The algorithm computes an unbiasedestimate of the gradient.• Can be very slow due to high variance inthe estimates.• Variance is related to the “recurrence time”or the episode length.• For problems with large state spaces, thevariance becomes unacceptably high.Variance reduction techniques• Truncate summation (eligibility traces)• Decay eligibility traces. In this case, thedecay rate controls the bias-variance tradeoff.• Actor-Critic methods. These methods usevalue function estimates to reducevariance.• Employ a set of recurrent states to defineepisodes, instead of just one i*.