Name-Ethnicity Classification and Ethnicity ... - Dr. C. Lee Giles

Name-Ethnicity Classification and Ethnicity ... - Dr. C. Lee Giles

Name-Ethnicity Classification and Ethnicity ... - Dr. C. Lee Giles

- No tags were found...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

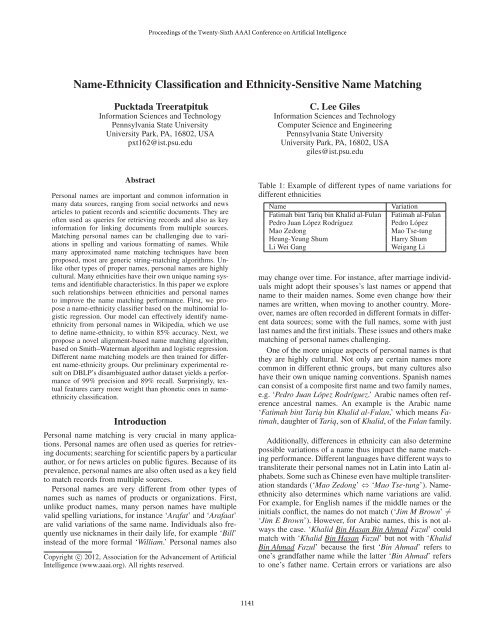

Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence

Name-Ethnicity Classification and Ethnicity-Sensitive Name Matching

Pucktada Treeratpituk
Information Sciences and Technology
Pennsylvania State University
University Park, PA, 16802, USA
pxt162@ist.psu.edu

C. Lee Giles
Information Sciences and Technology
Computer Science and Engineering
Pennsylvania State University
University Park, PA, 16802, USA
giles@ist.psu.edu

Abstract

Personal names are important and common information in many data sources, ranging from social networks and news articles to patient records and scientific documents. They are often used as queries for retrieving records and also as key information for linking documents from multiple sources. Matching personal names can be challenging due to variations in spelling and formatting. While many approximate name matching techniques have been proposed, most are generic string-matching algorithms. Unlike other types of proper names, personal names are highly cultural. Many ethnicities have their own unique naming systems and identifiable characteristics. In this paper we explore such relationships between ethnicities and personal names to improve name matching performance. First, we propose a name-ethnicity classifier based on multinomial logistic regression. Our model can effectively identify name-ethnicity from personal names in Wikipedia, which we use to define name-ethnicity, with about 85% accuracy. Next, we propose a novel alignment-based name matching algorithm, based on the Smith–Waterman algorithm and logistic regression. Different name matching models are then trained for different name-ethnicity groups.
Our preliminary experimental results on DBLP's disambiguated author dataset yield 99% precision and 89% recall. Surprisingly, textual features carry more weight than phonetic ones in name-ethnicity classification.

Introduction

Personal name matching is crucial in many applications. Personal names are often used as queries for retrieving documents, for example when searching for scientific papers by a particular author or for news articles on public figures. Because of their prevalence, personal names are also often used as a key field to match records from multiple sources.

Personal names are very different from other types of names such as names of products or organizations. First, unlike product names, many personal names have multiple valid spelling variations; for instance, ‘Arafat’ and ‘Arafaat’ are valid variations of the same name. Individuals also frequently use nicknames in their daily life, for example ‘Bill’ instead of the more formal ‘William.’ Personal names may also change over time. For instance, after marriage individuals might adopt their spouse's last name or append that name to their maiden name. Some even change how their names are written when moving to another country. Moreover, names are often recorded in different formats in different data sources; some with the full names, some with just last names and first initials. These issues and others make matching of personal names challenging.

Table 1: Examples of different types of name variations for different ethnicities

| Name | Variation |
|---|---|
| Fatimah bint Tariq bin Khalid al-Fulan | Fatimah al-Fulan |
| Pedro Juan López Rodríguez | Pedro López |
| Mao Zedong | Mao Tse-tung |
| Heung-Yeung Shum | Harry Shum |
| Li Wei Gang | Weigang Li |

Copyright © 2012, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

One of the more distinctive aspects of personal names is that they are highly cultural.
Not only are certain names more common in different ethnic groups, but many cultures also have their own unique naming conventions. Spanish names can consist of a composite first name and two family names, e.g. ‘Pedro Juan López Rodríguez.’ Arabic names often reference ancestral names. An example is the Arabic name ‘Fatimah bint Tariq bin Khalid al-Fulan,’ which means Fatimah, daughter of Tariq, son of Khalid, of the Fulan family.

Additionally, differences in ethnicity can also determine the possible variations of a name and thus affect name matching performance. Languages that are not written in the Latin alphabet transliterate personal names into it in different ways. Some, such as Chinese, even have multiple transliteration standards (‘Mao Zedong’ ⇔ ‘Mao Tse-tung’). Name-ethnicity also determines which name variations are valid. For example, for English names, if the middle names or the initials conflict, the names do not match (‘Jim M Brown’ ≠ ‘Jim E Brown’). However, for Arabic names, this is not always the case. ‘Khalid Bin Hasan Bin Ahmad Fazul’ could match ‘Khalid Bin Hasan Fazul’ but not ‘Khalid Bin Ahmad Fazul,’ because in the first name ‘Bin Ahmad’ refers to the grandfather's name, while in the latter it refers to the father's name. Certain errors or variations are also more common in some name ethnicities than others. Chinese names are often mistakenly reversed (‘Lee Wang’ ⇔ ‘Wang Lee’), for a variety of reasons. It is more common to drop one of the two last names in Spanish names than to drop the last name in English names (‘Pedro Juan López Rodríguez’ ⇔ ‘Pedro López’).

While various personal name matching methods have been proposed (Christen 2006), most are generic and independent of culture or ethnicity. Others are too specific and are specially designed to work with particular name ethnicities. In this paper we explore the relationships between ethnicities and personal names to improve name matching performance. For this work, we consider a name-ethnicity class to be a nationality category, or a collection of nationality categories, given to that name in Wikipedia. For more detail see the Name-Ethnicity Classification section.

This work has three main contributions. First, we present a novel name-ethnicity classifier based on multinomial logistic regression. Our classifier identifies the ethnicity of each name based on its sequences of letters and sequences of phonetic sounds. Second, we extend the Smith–Waterman alignment algorithm to take into account various characteristics found in personal name matching. Third, we propose an ethnicity-sensitive name matching method, combining our name-ethnicity classifier with our name alignment algorithm, where different costs are placed on different types of misalignments depending on the ethnicity of the names being compared.

Related Work

Name-Ethnicity Classification. The ethnicity of a person is an important demographic indicator used in many applications including targeted advertising, public policy, and scientific behavioral studies.
However, unlike names, ethnic information is often unavailable due to practical, political or legal reasons. Thus, especially in biomedical research, name-based ethnicity classification has gathered much interest (Coldman, Braun, and Gallagher 1988; Fiscella and Fremont 2006; Mateos 2007; Gill, Bhopal, and Kai 2005; Burchard et al. 2003). The primary method used in ethnicity classification is comparison against existing name lists. Coldman, Braun, and Gallagher (1988) use a simple probabilistic method based on full name lists to identify people with Chinese ethnicity. Gill, Bhopal, and Kai (2005) combine surname analysis with location information to better infer ethnicity from names. The drawback of such dictionary approaches is that they cannot classify names that do not appear in the training data, and constructing such a dictionary is often difficult. Furthermore, existing dictionaries often do not have the desired granularity of ethnic groups. For instance, US Census data only contains six broad ethnicity categories: Caucasian, African American, Asian/Pacific Islander, American Indian/Alaskan Native, Hispanic, and ‘Two or more races’. More recently, Chang et al. (2010) train a graphical model based on US Census names to infer the ethnicities of Facebook users from their names and study the interactions between ethnic groups. Ambekar et al. (2009) use a Hidden Markov Model and decision trees to classify names into 13 ethnic groups. This work is the most similar to our name-ethnicity classifier. Both their approach and ours break down each name into smaller units of character sequences, which allows the models to make ethnicity predictions on names that they have not seen before.
However, their approach only models sequences of characters in different name-ethnicities, while ours utilizes both phonetic and character sequences.

Name Matching. Much work has been done on name matching in the domains of information integration, record linkage and information retrieval (Bilenko et al. 2003; Christen 2006). Most of the previously proposed techniques fall into two categories: phonetic-based and edit distance-based. The phonetic-based approaches convert each name string into a code according to its pronunciation, which is then used for comparison (Raghavan and Allan 2004). The edit distance approaches define a small number of edit operations (e.g. insertion, deletion and substitution), each with an associated cost. The distance between two names is then defined to be the total cost of the edit operations required to change one name into the other. Jaro measures similarity based on the number and order of characters shared between names. Jaro-Winkler then improves on the Jaro distance by emphasizing matches of the first few characters. Other notable flavors of edit distance used in name matching include the Levenshtein distance and the Smith–Waterman distance (Freeman, Condon, and Ackerman 2006). An excellent review of commonly used name matching methods can be found in (Christen 2006). Recently, Gong, Wang, and Oard (2009) propose a transformation-based approach, where they compute the best transformation path between names based on three types of operation: abbreviation, omission and sequence changing. An SVM is then used to learn the final decision rule. Their approach is somewhat related to our name matching method.
Both attempt to find a mapping between two names; for them, it is the best transformation path found with a graph-based algorithm, and for us, it is the optimal alignment found with an alignment algorithm. However, their approach assumes a universal cost for each type of transformation, while our cost function depends on the ethnicity of the names.

Name-Ethnicity Classification

In this section, we describe the process we use to collect a list of names and the features used in our name-ethnicity classifier.

Extracting Names from Wikipedia

Inspired by (Ambekar et al. 2009), we take advantage of Wikipedia and its categories as the source for collecting personal names of different ethnicities. To compile a list of personal names for a given nationality, we use the following procedure. First, for each target nationality N, we pick the Wikipedia category ‘N people’ as the root node. We then employ breadth-first search (BFS) to traverse all subcategories and pages reachable from the root node up to a depth of 4. Simple heuristics are used to restrict the link traversals. For example, we only include subcategories whose titles contain the word ‘people’ or ‘s of ’ (e.g. ‘Members of the Institut de France’) or end with plural nouns (e.g. ‘entertainers’). An example of a valid path is shown below:

French people → French people by occupation → French entertainers → French magicians → Alexander Herrmann

The leaf nodes resulting from such traversal are then collected as personal names for that nationality. Note that neither our heuristics nor Wikipedia categories are perfect. For instance, names under ‘British people of Indian descent,’ which are of Indian ethnicity, will be filed under English names. Non-personal names could also be included. For instance, musical group names such as ‘Spice Girls’ are included because they are under ‘British musicians’. Thus, we also manually curate the resulting name lists, removing such obvious misassignments as best we can.

In the end, we gather a total of 215,672 personal names (after curation) from 19 nationalities, which are then grouped into 12 ethnic groups as shown in Table 2. Personal names of Egyptian, Iraqi, Iranian, Lebanese, Syrian and Tunisian people are grouped together as Middle Eastern names (MEA), and names of Spanish, Colombian and Venezuelan people are grouped together as Spanish names (SPA).

Table 2: Name-Ethnicity data gathered from Wikipedia

| Ethnicity | #Names | Wikipedia Categories |
|---|---|---|
| MEA | 10,559 | Egyptian, Iraqi, Iranian, Lebanese, Syrian, Tunisian |
| IND | 21,271 | Indian |
| ENG | 28,624 | British |
| FRN | 29,271 | French |
| GER | 35,101 | German |
| ITA | 23,328 | Italian |
| SPA | 15,154 | Colombian, Spanish, Venezuelan |
| RUS | 19,580 | Russian |
| CHI | 10,385 | Chinese |
| JAP | 17,790 | Japanese |
| KOR | 3,750 | Korean |
| VIE | 859 | Vietnamese |
| Total | 215,672 | |

Name-Ethnicity Classifier and Features

We then train a multinomial logistic regression classifier to identify the ethnicity of different names based on character and phonetic sequences.
The intuition is that names of different ethnicities have identifiable sequences of letters and phonetic sounds. Multinomial logistic regression is logistic regression generalized to more than two discrete outcomes. In a multinomial logistic regression, the conditional probabilities are calculated as follows:

$$\Pr(Y_i = y_K \mid X_i) = \frac{1}{1 + \sum_{l=1}^{K-1} \exp(\beta_{l,0} + \beta_l^T \cdot X_i)}$$

$$\Pr(Y_i = y_k \mid X_i) = \frac{\exp(\beta_{k,0} + \beta_k^T \cdot X_i)}{1 + \sum_{l=1}^{K-1} \exp(\beta_{l,0} + \beta_l^T \cdot X_i)}, \qquad k = 1, \dots, K-1$$

For the name-ethnicity classification, $Y_i$ is the random variable for the ethnicity of name $i$ and $X_i$ represents the corresponding feature vector. The set $\{y_1, \dots, y_K\}$ is the set of $K$ ethnicity classes. The set of coefficients $\{\beta_{l,0}, \beta_l\}_{l=1,\dots,K-1}$ is estimated by maximum a posteriori (MAP) estimation through an iterative process. A normal distribution is used as the prior on the coefficients.

Table 3: Example of features used in the Name-Ethnicity classifier

| Feat Type | String | Features |
|---|---|---|
| nonASCII | Pedro López | `ó` |
| charNgram | `$pedro$ +lopez+` | `$p`, `pe`, `ed`, ... (2gram); `$pe`, `ped`, ... (3gram) |
| dmpNgram | `$PTR$ +LPS+` | `$P_m`, `PT_m`, ... ; `$PT_m`, `PTR_m`, ... |
| soundex | `P360 L120` | `P360_s`, `L120_s` |

Our classifier utilizes four types of features: (1) nonASCII, (2) charNgram (character ngrams), (3) dmpNgram (Double Metaphone ngrams) and (4) soundex. The nonASCII features consist of the non-ASCII characters (such as ‘ä’, ‘é’) present in the name. The charNgram features are character bigrams, trigrams, and four-grams of the name after normalization, where all non-ASCII characters are mapped to their ASCII equivalents (‘ä’ → ‘a’). The phonetic characteristics of the name are represented by the dmpNgram and soundex features. To compute dmpNgram features, each name token is first encoded with the Double Metaphone algorithm. The bigrams, trigrams and four-grams are then generated from the resulting encoding.
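The nonASCII and charNgram features can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the `$` / `+` padding follows the convention shown in Table 3, and the use of Unicode NFKD decomposition to obtain ASCII equivalents is an assumption (characters without a decomposition, such as ‘ø’, would simply be dropped here).

```python
import unicodedata


def to_ascii(text):
    """Map accented characters to ASCII (assumption: NFKD decomposition)."""
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if ord(ch) < 128)


def non_ascii_chars(name):
    """nonASCII features: the distinct non-ASCII characters in the name."""
    return sorted({ch for ch in name if ord(ch) > 127})


def pad_tokens(name):
    """Pad each token with '$'; the last token is padded with '+' instead."""
    tokens = to_ascii(name).lower().split()
    padded = [f"${tok}$" for tok in tokens[:-1]]
    if tokens:
        padded.append(f"+{tokens[-1]}+")
    return padded


def char_ngrams(name, sizes=(2, 3, 4)):
    """charNgram features: bigrams, trigrams and four-grams of padded tokens."""
    grams = []
    for tok in pad_tokens(name):
        for n in sizes:
            grams.extend(tok[i:i + n] for i in range(len(tok) - n + 1))
    return grams


if __name__ == "__main__":
    print(pad_tokens("Pedro L\u00f3pez"))
    print(char_ngrams("Pedro L\u00f3pez")[:6])
```

The dmpNgram features would be produced the same way, with each token first replaced by its Double Metaphone code; that encoder is omitted here because it is considerably longer.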
Lastly, soundex features are simply the Soundex encodings of each token in the name. Soundex and Double Metaphone are popular phonetic algorithms for encoding a word according to its sound. The Soundex algorithm encodes each word as a code of four characters: the first character of the word and a three-digit code representing its phonetic sound (Knuth 1973). For instance, both ‘Steven’ and ‘Stephen’ are encoded as ‘S315’. Double Metaphone is a more advanced phonetic algorithm than Soundex, designed to better handle non-English words (Phillips 2000). It can also return multiple codes for words with phonetic ambiguity. Double Metaphone encodes each word into a code built from 16 consonant symbols, e.g. ‘Stephen’ is encoded as ‘STFN.’

Before the ngram generation, for both charNgram and dmpNgram, we pad the beginning and the end of each token with `$`. To differentiate last names from other tokens, the last token is padded with `+` instead of `$`. For example, ‘Pedro López’ would be padded to `$pedro$ +lopez+`, resulting in character ngrams `$p`, `pe`, `ed`, ... , `opez`, and `pez+`. The full example of features extracted for the name ‘Pedro López’ is shown in Table 3.

Ethnicity-Sensitive Name Matching

In this section, we present an overview of the ethnicity-sensitive name matching algorithm. To calculate the probability that a pair of names match, we first tokenize each name into name tokens. A dynamic programming procedure based on the Smith–Waterman algorithm is applied to find the optimal alignment between the two tokenized names. Features are then extracted from the optimal alignment. A logistic regression model is then applied to convert the features into the match probability. Using the name-ethnicity classifier to separate names into ethnic groups, we train separate logistic regression models for each name-ethnicity. We now discuss each step in more detail.

Computing Optimal Alignment

We use a modified version of the Smith–Waterman algorithm to find the optimal alignment between two names. The Smith–Waterman algorithm is a popular alignment algorithm for identifying common molecular subsequences (Smith and Waterman 1981). However, the standard Smith–Waterman algorithm operates on sequences of characters (e.g. aligning characters in ‘ACAT’ with ‘AGCA’) and only allows exact matches between characters. We, on the other hand, are interested in aligning sequences of name tokens, not characters. In addition, name tokens can align without being an exact match (such as ‘Kathy’ and ‘Katharine’). Therefore, we extend the Smith–Waterman algorithm to token alignment and replace its exact match comparison with an approximate matching function.

For a given name pair $p = (p_0, \dots, p_m)$ and $q = (q_0, \dots, q_n)$, where $p_i$, $q_j$ are word tokens in $p$ and $q$ respectively, we define the token similarity between the tokens $p_i$ and $q_j$, denoted by $S(p_i, q_j)$, as follows:

$$S(p_i, q_j) = \begin{cases} \text{Jaro-Winkler}(p_i, q_j) & \text{if } |p_i| \ge 2 \text{ and } |q_j| \ge 2 \\ 0.95 & \text{if } (|p_i| = 1 \text{ or } |q_j| = 1) \text{ and } \mathrm{init}(p_i) = \mathrm{init}(q_j) \\ 0 & \text{otherwise} \end{cases}$$

where $\mathrm{init}(a)$ is the first character of $a$. Basically, we measure the similarity between tokens with the Jaro-Winkler similarity, which works well for short strings.
But in the case where one of the tokens is just an initial, the similarity is 0.95 if the initials are compatible, such as between $p_i$ = ‘E’ and $q_j$ = ‘Edward,’ and 0 otherwise. The value 0.95 is chosen arbitrarily: we want the similarity of the initial-match cases to be sufficiently high, but still lower than that of exact matches.

We then define the scoring matrix $H$, where $H(i, j)$ represents the maximum similarity score between $(p_0, \dots, p_i)$ and $(q_0, \dots, q_j)$, in terms of the similarity function $S$, as follows:

$$H(i, 0) = 0, \quad 0 \le i \le m \qquad\qquad H(0, j) = 0, \quad 0 \le j \le n$$

$$H(i+1, j+1) = \max \left\{ \begin{array}{lll} H(i, j) + S(p_i, q_j) & \text{if } S(p_i, q_j) > 0.8 & \text{// } p_i \text{ matches } q_j \\ H(i, j-1) + 1 & \text{if } p_i = q_{j-1} q_j & \text{// } p_i \text{ spans 2 tokens} \\ H(i-1, j) + 1 & \text{if } p_{i-1} p_i = q_j & \text{// } q_j \text{ spans 2 tokens} \\ H(i+1, j) & & \text{// skip } q_j \\ H(i, j+1) & & \text{// skip } p_i \end{array} \right.$$

for $0 \le i \le m-1$, $0 \le j \le n-1$.

The matrix $H$ can be computed using dynamic programming. Unlike in the standard Smith–Waterman algorithm, where each cell can align with at most one other cell, our name token can align with up to two tokens in the corresponding name. This allows our alignment algorithm to account for a common problem in name matching, where multiple name segmentations exist, for instance (‘DeFélice’ ⇔ ‘De Félice’) and (‘Min Seo Kim’ ⇔ ‘Minseo Kim’), without any special preprocessing steps.

| | − | Marcelo | Bicho | Dos | Santos |
|---|---|---|---|---|---|
| − | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Marcelino | 0.0 | 0.96 | 0.96 | 0.96 | 0.96 |
| B | 0.0 | 0.96 | 1.91 | 1.91 | 1.91 |
| Santos | 0.0 | 0.96 | 1.91 | 1.91 | 2.91 |

Figure 1: Scoring matrix $H$ for aligning ‘Marcelo Bicho Dos Santos’ and ‘Marcelino B Santos.’ The arrows show the path of the optimal alignment generated by the traceback procedure.

Table 4: Examples of the optimal alignments between (a) ‘Marcelo Bicho Dos Santos’ and ‘Marcelino B Santos,’ and (b) ‘Juan Ginés Sánchez Moreno’ and ‘G Lopez Moreno.’

(a)

| p | Marcelo | Bicho | Dos | Santos |
|---|---|---|---|---|
| q | Marcelino | B | - | Santos |
| $S(p_i, q_j)$ | 0.96 | 0.95 | | 1.0 |

(b)

| p | Juan | Ginés | Sánchez | Moreno |
|---|---|---|---|---|
| q | - | G | Lopez | Moreno |
| $S(p_i, q_j)$ | | 0.95 | | 1.0 |
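The token similarity $S$ and the recurrence for $H$ can be sketched as below. This is an illustrative reading of the definitions above rather than the authors' code: the Jaro-Winkler routine is a plain textbook version (prefix scale 0.1, prefix length capped at 4), lower-casing the tokens is an assumption, and the function names are hypothetical. The traceback step is not included.

```python
def jaro(s, t):
    """Jaro similarity between two strings (standard definition)."""
    if s == t:
        return 1.0
    if not s or not t:
        return 0.0
    window = max(max(len(s), len(t)) // 2 - 1, 0)
    s_hit, t_hit = [False] * len(s), [False] * len(t)
    for i, ch in enumerate(s):
        for j in range(max(0, i - window), min(len(t), i + window + 1)):
            if not t_hit[j] and t[j] == ch:
                s_hit[i] = t_hit[j] = True
                break
    s_seq = [c for c, hit in zip(s, s_hit) if hit]
    t_seq = [c for c, hit in zip(t, t_hit) if hit]
    m = len(s_seq)
    if m == 0:
        return 0.0
    transpositions = sum(a != b for a, b in zip(s_seq, t_seq)) / 2
    return (m / len(s) + m / len(t) + (m - transpositions) / m) / 3


def jaro_winkler(s, t, scale=0.1):
    """Boost the Jaro score for a shared prefix of up to 4 characters."""
    base = jaro(s, t)
    prefix = 0
    for a, b in zip(s[:4], t[:4]):
        if a != b:
            break
        prefix += 1
    return base + prefix * scale * (1.0 - base)


def token_similarity(a, b):
    """S(p_i, q_j); lower-casing is an assumption of this sketch."""
    a, b = a.lower(), b.lower()
    if len(a) >= 2 and len(b) >= 2:
        return jaro_winkler(a, b)
    if a and b and a[0] == b[0]:  # at least one token is a single initial
        return 0.95
    return 0.0


def scoring_matrix(p, q, threshold=0.8):
    """Fill H so that H[i+1][j+1] covers tokens p[0..i] and q[0..j]."""
    p = [tok.lower() for tok in p]
    q = [tok.lower() for tok in q]
    H = [[0.0] * (len(q) + 1) for _ in range(len(p) + 1)]
    for i in range(len(p)):
        for j in range(len(q)):
            options = [H[i + 1][j], H[i][j + 1]]  # skip q_j, skip p_i
            s = token_similarity(p[i], q[j])
            if s > threshold:  # p_i matches q_j
                options.append(H[i][j] + s)
            if j >= 1 and p[i] == q[j - 1] + q[j]:  # p_i spans two tokens of q
                options.append(H[i][j - 1] + 1.0)
            if i >= 1 and p[i - 1] + p[i] == q[j]:  # q_j spans two tokens of p
                options.append(H[i - 1][j] + 1.0)
            H[i + 1][j + 1] = max(options)
    return H


if __name__ == "__main__":
    H = scoring_matrix("Marcelo Bicho Dos Santos".split(),
                       "Marcelino B Santos".split())
    print(round(H[-1][-1], 2))
```

With these definitions, the final cell for the Figure 1 example rounds to 2.91, and ‘Min Seo Kim’ against ‘Minseo Kim’ scores 2.0 because the two-token span rule fires.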
After the matrix $H$ is computed, a traceback procedure similar to the one in the Smith–Waterman algorithm can be used to find the optimal alignment. Figure 1 shows an example of the matrix $H$ for aligning ‘Marcelino B Santos’ and ‘Marcelo Bicho Dos Santos,’ and the resulting optimal alignment is shown in Table 4a.

Since name field reversal such as (‘Min Seo Kim’ ⇒ ‘Kim Min Seo’) is very common, especially with East Asian names, we introduce a shift operator $\alpha$ such that $\alpha_t(p)$ shifts each token in $p$ by $t$ positions, to the right for $t > 0$ and to the left for $t < 0$. So $\alpha_1(p) = (p_m, p_0, \dots, p_{m-1})$ and $\alpha_{-1}(p) = (p_1, \dots, p_m, p_0)$. Let $\Omega(p, q)$ denote the optimal alignment between $p$ and $q$, and $\Omega_s(p, q)$ denote the optimal alignment with the shift operation. Then

$$\Omega_s(p, q) = \operatorname*{arg\,max}_{\Omega(\alpha_t(p),\, q),\; t \in \{-1, 0, 1\}} M(\Omega(\alpha_t(p), q))$$

where $M(\Omega(p, q))$ is the similarity score for the alignment $\Omega(p, q)$ in the matrix $H$. So $\Omega_s(p, q)$ is the best alignment out of all alignments with the various shifts.

Name Matching Probability

From the resulting optimal alignment $\Omega_s(p, q)$, we then categorize each match/mismatch based on its location. For example, we differentiate unmatched tokens at the end of a name from unmatched tokens at the beginning of a name. The probability $P$ that a name $p$ matches a name $q$ is then computed based on the number of each type of match/mismatch in their optimal alignment $\Omega_s(p, q)$. More specifically, the probability $P$ depends on the feature vector $f = (x_1, \dots, x_7)$ where

$$\begin{aligned} x_1 &= \text{\# of skip tokens before the first aligned token pair} \\ x_2 &= \text{\# of skip tokens after the last aligned token pair} \\ x_3 &= \text{\# of skip tokens in the middle that are initials} \\ x_4 &= \text{\# of skip tokens in the middle that are non-initials} \\ x_5 &= \text{\# of conflicting tokens} \\ x_6 &= \text{\# of shift operations in the optimal alignment} = |t| \\ x_7 &= \text{product of similarity scores of aligned token pairs} = \prod_{\substack{u, v \\ p_u \text{ aligns with } q_v \text{ in } \Omega_s(p, q)}} S(p_u, q_v) \end{aligned}$$

Two tokens $p_i$, $q_j$ are considered to be conflicting if they are both unaligned and lie between the same aligned token pairs, for example ‘Sánchez’ and ‘Lopez’ in Table 4b. Unaligned tokens that do not conflict with any token are considered skip tokens, for example ‘Dos’ in Table 4a and ‘Juan’ in Table 4b.

To illustrate, consider the two optimal alignments shown in Table 4. In (a), there are one skip token in the middle and three aligned token pairs. So $x_3 = 1$ and $x_7 = 0.96 \times 0.95 \times 1$, thus $f = (0, 0, 1, 0, 0, 0, .91)$. In (b), there are two aligned token pairs, two conflicting tokens and one skip token. So $x_1 = 1$, $x_5 = 2$ and $x_7 = 0.95 \times 1.0$.

To compute $P$, we assume that the odds of $P$ are directly proportional to

$$\frac{P}{1 - P} \propto D_1^{x_1} D_2^{x_2} \cdots D_6^{x_6} D_7^{\log(x_7)}$$

where $D_1, \dots, D_6, D_7$ are discounting factors for the different types of alignment/misalignment $(x_1, \dots, x_6, x_7)$. The odds of $P$ can be rewritten, with $\beta_i = \log D_i$, as:

$$\frac{P}{1 - P} = D_0 D_1^{x_1} \cdots D_6^{x_6} D_7^{\log(x_7)} \tag{1}$$

$$\log\left(\frac{P}{1 - P}\right) = \beta_0 + \beta_1 x_1 + \dots + \beta_7 \log(x_7) \tag{2}$$

$$\mathrm{logit}(P) = \beta_0 + \beta_1 x_1 + \dots + \beta_7 \log(x_7) \tag{3}$$

Equation (3) is simply a logistic regression model. Thus the optimal values for the coefficients $\beta_1, \dots, \beta_7$ with respect to a dataset can be easily estimated.

In the training phase, we use the name-ethnicity classifier to classify names in the training data according to their ethnicities. Separate logistic regression models are then built for each name-ethnicity, e.g. one for Spanish names, one for Middle Eastern names and so on. In addition, a backoff model is trained over all the training data. In the evaluation phase, if both names to be compared are classified as being of the same ethnicity, the ethnicity-specific regression model is used; otherwise the default model is used.

Table 5: The precision, recall and F1 measure of the name-ethnicity classifier for each ethnicity.

| Ethnicity | Precision | Recall | F1 |
|---|---|---|---|
| MEA | 0.79 | 0.78 | 0.79 |
| IND | 0.89 | 0.86 | 0.87 |
| ENG | 0.79 | 0.85 | 0.82 |
| FRN | 0.80 | 0.80 | 0.80 |
| GER | 0.84 | 0.85 | 0.85 |
| ITA | 0.85 | 0.86 | 0.85 |
| SPA | 0.82 | 0.79 | 0.81 |
| RUS | 0.90 | 0.85 | 0.81 |
| CHI | 0.92 | 0.90 | 0.91 |
| JAP | 0.97 | 0.95 | 0.96 |
| KOR | 0.93 | 0.92 | 0.92 |
| VIE | 0.93 | 0.83 | 0.88 |
| Accuracy | 0.85 | | |

Currently, the token similarity function $S(p_i, q_j)$ is ethnicity independent. However, in the future, different token similarity functions can be used for names of different ethnicities. For instance, if the system detects that the names being compared are Chinese, a special similarity function that includes the mapping between Hanyu-Pinyin and Wade-Giles (two different transliteration systems for Mandarin Chinese) can be used instead.
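The shift operator, the logistic model of equation (3), and the backoff rule can be sketched together as follows. The coefficient values in `BETA` are invented purely for illustration and are not taken from the paper; the function names and the dictionary-based model lookup are likewise assumptions of this sketch. The two feature vectors are the ones worked out for Table 4 in the text.

```python
import math


def shift(tokens, t):
    """alpha_t: rotate tokens t positions to the right (t > 0) or left (t < 0)."""
    tokens = list(tokens)
    if not tokens:
        return tokens
    k = t % len(tokens)
    return tokens[-k:] + tokens[:-k] if k else tokens


def match_probability(features, beta):
    """logit(P) = beta_0 + beta_1*x_1 + ... + beta_6*x_6 + beta_7*log(x_7)."""
    x = list(features)
    # x_7 is a product of similarities above 0.8, so it is positive here.
    terms = x[:6] + [math.log(x[6])]
    z = beta[0] + sum(b * v for b, v in zip(beta[1:], terms))
    return 1.0 / (1.0 + math.exp(-z))


def pick_model(eth_p, eth_q, models, default):
    """Use the ethnicity-specific model only when both names share a class."""
    if eth_p == eth_q:
        return models.get(eth_p, default)  # assumption: fall back if no model
    return default


# Hypothetical coefficients (beta_0 .. beta_7), NOT values from the paper.
BETA = [4.0, -0.5, -0.5, -0.3, -1.0, -3.0, -1.0, 2.0]

features_a = (0, 0, 1, 0, 0, 0, 0.96 * 0.95 * 1.0)  # Table 4a, as in the text
features_b = (1, 0, 0, 0, 2, 0, 0.95 * 1.0)         # Table 4b, as in the text

if __name__ == "__main__":
    print(shift(["Min", "Seo", "Kim"], 1))
    print(match_probability(features_a, BETA))
    print(match_probability(features_b, BETA))
```

Under these made-up coefficients the alignment in (a) receives a high match probability and the one in (b), with two conflicting tokens, a low one; real coefficients would come from fitting the regression on labeled name pairs.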
Additionally, in a real system, one can also improve the similarity function $S(p_i, q_j)$ by incorporating nickname dictionaries for different ethnic groups; for example, mapping ‘Bartholomew’ to ‘Bart’ or ‘Meus’ for English names, and mapping the Chinese first name ‘Jian’ to its Western nickname ‘Jerry.’

Experiments

Name-Ethnicity Classification on Wikipedia

To assess the performance of our name-ethnicity classifier, we randomly split the name list collected from Wikipedia into 70% training data and 30% test data. Table 5 shows the precision, recall and F1 measure of the classifier for each ethnicity. The overall classification accuracy is 0.85, with Japanese as the most identifiable name-ethnicity (F1=0.96), followed by Korean (F1=0.92). In general, the classifier does well at identifying East Asian names (CHI, JAP, KOR, and VIE), with over 90% precision and recall with just one exception, VIE's recall. The most problematic class is Middle Eastern names (MEA) with 0.79 F1, followed by French with 0.80 F1. We also ran another experiment without any diacritic features, in which every name is normalized to its ASCII equivalent. With just ASCII features, the classification accuracy drops slightly to 0.83. This suggests that even without diacritics, each name-ethnicity still has identifiable characteristics that can be used for classification.

The confusion matrix between different name-ethnicities is shown in Figure 2. We observe that Middle Eastern names are mostly confused with Indian names. This is not unexpected, since India has a large Muslim population. Most of the confusion for European names is with other European names, especially between English, French, and German. The majority of the confusion for Russian names is with German names.

We also examine the coefficient of each feature learned by the classifier.
The most predictive features for each non-English ethnic group are listed in Table 6 according to their coefficients in the logistic regression. For instance, the ‘bh’ character sequence (as in ‘Bhairavi Desai’) is highly predictive of Indian names. The ‘sch’ character sequence is more distinctive of German names, while `v+` (a last name ending with ‘v’) is a good indicator for names of Russian ethnicity. An example of a notable phonetic feature picked up by our classifier is Soundex S400 for Middle Eastern names (corresponding to ‘EL’ as in ‘Hatem El Mekki’). It is interesting to note that the majority of the top features are character features. This suggests that the written form of a name is a better indicator of its ethnicity than its phonetic form. In general, the top features learned by our classifier comply with our expectations.

Table 6: Most predictive features, excluding nonASCII, for each name-ethnicity according to the ethnicity classifier. `$` represents the word boundary and `+` indicates the last name boundary.

| Ethnicity | Feature | Example |
|---|---|---|
| MEA | `ou` | Jassem Khalloufi |
| IND | `bh` | Bhairavi Desai |
| FRN | `ien$` | Henri Chretien |
| GER | `sch` | Gregor Schneider |
| ITA | `one+` | Giovanni Falcone |
| SPA | `$de$` | Jesus de Polanco |
| RUS | `v+` | Viktor Yakushev |
| CHI | `ng$` | Jing Huang |
| JAP | `tsu` | Yutakayama Hiromitsu |
| KOR | `ae` | Song Tae Kon, Nam Yun Bae |
| VIE | `nh` | Nguyen Dinh, Linh Chi |

Overall, our name-ethnicity classifier can infer ethnicity from names with fairly high accuracy. Our result is overall comparable to the previously reported attempt in (Ambekar et al. 2009), and is significantly better at identifying some ethnic groups such as German and East Asian names. Using smaller units of names, instead of the full names, as features not only makes the classifier generalize better to unseen names, but should also help reduce the amount of training data needed in order to include a new ethnic class.
One interesting direction for future work would be to compare the effect of the size of the training data on performance between our model and theirs.

Figure 2: The confusion matrix of name-ethnicity classification on Wikipedia data. The rows are the true ethnicities, and the columns are the predictions. Darker tiles indicate less confusion between ethnic pairs, while lighter tiles represent more confusion.

Name Matching Evaluation

We evaluate our name matching algorithm on the DBLP author dataset (Dataset 2) (Lange and Naumann 2011). The dataset contains 10,000 pairs of ambiguous author records from DBLP that were manually cleaned due to author requests or by fine-tuning heuristics. The dataset was created to be a challenging testbed for author record disambiguation. We use the subset of the data (2500 record pairs) that consists only of author records from authors with multiple name aliases.

As the baselines, we implement four standard string matching techniques, Jaro-Winkler and Levenshtein edit distance together with their recursive versions (L2 Jaro-Winkler and L2 Levenshtein), using the SecondString library (http://secondstring.sourceforge.net/). We evaluate the performance of all baselines with various thresholds, and plot their precision and recall in Figure 3. The maximal F1 over all possible threshold values is 0.75 for Jaro-Winkler, 0.70 for Levenshtein distance, 0.80 for L2 Jaro-Winkler, and 0.77 for L2 Levenshtein.

We create three ethnicity-dependent name matching models: one for Middle Eastern names (MEA), one for Spanish names (SPA), and one for East Asian names (CHI, JAP, KOR, VIE), plus one default model, which is trained on names of all ethnicities. The three ethnic groups are chosen since they have quite distinct naming conventions compared to typical Western names.
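The threshold sweep used to report the maximal F1 of each baseline can be sketched as below. This is only an illustration of the evaluation idea: the function names are hypothetical, the scores and labels in the demo are toy values rather than DBLP data, and treating every observed score as a candidate threshold is an assumption.

```python
def precision_recall_f1(scores, labels, threshold):
    """Predict 'match' when score >= threshold, then compare to the labels."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y)
    fp = sum(1 for s, y in pairs if s >= threshold and not y)
    fn = sum(1 for s, y in pairs if s < threshold and y)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def best_threshold(scores, labels):
    """Return the observed score that maximizes F1 when used as threshold."""
    candidates = sorted(set(scores))
    return max(candidates,
               key=lambda th: precision_recall_f1(scores, labels, th)[2])


if __name__ == "__main__":
    # Toy similarity scores and ground-truth match labels (illustrative only).
    scores = [0.95, 0.90, 0.70, 0.60, 0.40]
    labels = [True, True, False, True, False]
    th = best_threshold(scores, labels)
    print(th, precision_recall_f1(scores, labels, th))
```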
We train and evaluate the ethnicity-sensitive name matching models using 10-fold cross-validation. Our model achieves 0.99 precision, 0.89 recall, and 0.94 F1. This is a 14-point (0.14 absolute) improvement in F1 over the best standard similarity measure (L2 Jaro-Winkler, at 0.80). Precision is extremely high; recall, at 0.89, is lower but still high. When examining the matches that were missed, we observe that some of them would be difficult even for a human without other information. For instance, ‘Hedvig Sidenbladh’ and ‘Hedvig Kjellström’ are marked as the same author in the DBLP dataset, as are ‘Maria-Florina Balcan’ and ‘Maria-Florina Popa.’ These are examples of authors who changed their last names. One drawback of training separate models for each ethnicity is that we effectively make the training data for each model smaller. Thus we would like to test our model on larger datasets in the future and investigate the impact on performance.

Figure 3: The recall-precision curves of the baseline edit-distance measures on DBLP data. The optimal F1 is 0.75 for Jaro-Winkler (R=0.7, P=0.81), 0.70 for Levenshtein distance (R=0.6, P=0.81), 0.80 for L2 Jaro-Winkler (R=0.7, P=0.93), and 0.77 for L2 Levenshtein (R=0.8, P=0.74).

Conclusion

We propose a novel name-ethnicity classifier based on multinomial logistic regression. Our name-ethnicity classifier is trained and evaluated on Wikipedia data, achieving around 85% accuracy. We observe that name strings were usually more important than phonetic sequences for classification. In addition, we propose a novel name-matching method that is ethnicity-sensitive. The name-matching algorithm is trained and evaluated on the standard DBLP author dataset, achieving a 14-point (0.14 absolute) improvement in F1 over the best of the standard name similarity measures. Both our ethnicity classifier and our ethnicity-sensitive name matching can also be easily extended to include new ethnicities. Future work would be to extend this to other, possibly finer, definitions of ethnicity and to larger datasets; for instance, to differentiate between French names in France and French names in Quebec, Canada.

Acknowledgment

This project has been funded, in part, by the DTRA grant (HDTRA1-09-1-0054) and the National Science Foundation. We gratefully thank Prasenjit Mitra, Madian Khabsa, Pradeep Teregowda, Sujatha Das, and Jose San Pedro for their useful discussions.

References

Ambekar, A.; Ward, C.; Mohammed, J.; Male, S.; and Skiena, S. 2009. Name-ethnicity classification from open sources. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 49–58.

Bilenko, M.; Mooney, R.; Cohen, W.; Ravikumar, P.; and Fienberg, S. 2003. Adaptive name matching in information integration. Intelligent Systems 18(5):16–23.

Burchard, E. G.; Ziv, E.; Coyle, N.; Gomez, S. L.; Tang, H.; Karter, A. J.; Mountain, J. L.; Pérez-Stable, E. J.; Sheppard, D.; and Risch, N. 2003.
The Importance of Race and Ethnic Background in Biomedical Research and Clinical Practice. The New England Journal of Medicine 348:1170–1175.

Chang, J.; Rosenn, I.; Backstrom, L.; and Marlow, C. 2010. epluribus: Ethnicity on social networks. In Proceedings of the International Conference in Weblogs and Social Media (ICWSM), 18–25.

Christen, P. 2006. A comparison of personal name matching: Techniques and practical issues. Workshop on Mining Complex Data (MCD ’06).

Coldman, A. J.; Braun, T.; and Gallagher, R. P. 1988. The classification of ethnic status using name information. Journal of Epidemiology and Community Health 42:390–395.

Fiscella, K., and Fremont, A. M. 2006. Use of geocoding and surname analysis to estimate race and ethnicity. Health Service Research 41:1482–1500.

Freeman, A. T.; Condon, D. S. L.; and Ackerman, C. M. 2006. Cross linguistic name matching in English and Arabic: a one to many mapping extension of the Levenshtein edit distance algorithm. Proceedings of the Human Language Technology Conference of the North American Chapter of the Association of Computational Linguistics.

Gill, P. S.; Bhopal, R.; and Kai, J. 2005. Limitations and potential of country of birth as proxy for ethnic group. British Medical Journal 330:196.

Gong, J.; Wang, L.; and Oard, D. 2009. Matching person names through name transformation. In Proceeding of the 18th ACM Conference on Information and Knowledge Management, 1875–1878.

Knuth, D. 1973. Fundamental Algorithms: The Art of Computer Programming.

Lange, D., and Naumann, F. 2011. Frequency-aware Similarity Measures: Why Arnold Schwarzenegger is Always a Duplicate. In Proceedings of the 20th ACM CIKM Conference on Information and Knowledge Management 24–28.

Mateos, P. 2007. A review of name-based ethnicity classification methods and their potential in population studies. Population Space and Place.

Phillips, L. 2000. The double metaphone search algorithm. C/C++ Users Journal 18(6).

Raghavan, H., and Allan, J. 2004. Using Soundex Codes for Indexing Names in ASR documents. In Proceedings of the HLT-NAACL 2004 Workshop on Interdisciplinary Approaches to Speech Indexing and Retrieval.

Smith, T., and Waterman, M. 1981. Identification of common molecular subsequences. The Journal of Molecular Biology.