LPC Prediction Error-Analysis of Its Variation with the Position of the ...


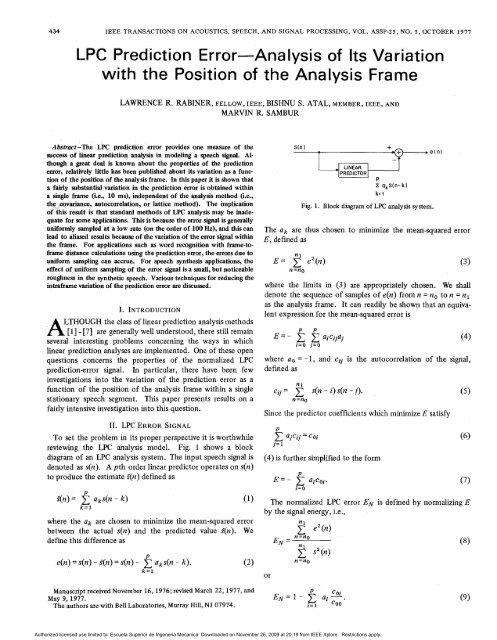

434 IEEE TRANSACTIONS ON ACOUSTICS, SPEECH, AND SIGNAL PROCESSING, VOL. ASSP-25, NO. 5, OCTOBER 1977

LPC Prediction Error-Analysis of Its Variation with the Position of the Analysis Frame

Abstract-The LPC prediction error provides one measure of the success of linear prediction analysis in modeling a speech signal. Although a great deal is known about the properties of the prediction error, relatively little has been published about its variation as a function of the position of the analysis frame. In this paper it is shown that a fairly substantial variation in the prediction error is obtained within a single frame (i.e., 10 ms), independent of the analysis method (i.e., the covariance, autocorrelation, or lattice method). The implication of this result is that standard methods of LPC analysis may be inadequate for some applications. This is because the error signal is generally uniformly sampled at a low rate (on the order of 100 Hz), and this can lead to aliased results because of the variation of the error signal within the frame. For applications such as word recognition with frame-to-frame distance calculations using the prediction error, the errors due to uniform sampling can accrue.
For speech synthesis applications, the effect of uniform sampling of the error signal is a small but noticeable roughness in the synthetic speech. Various techniques for reducing the intraframe variation of the prediction error are discussed.

I. INTRODUCTION

ALTHOUGH the class of linear prediction analysis methods [1]-[7] is generally well understood, there still remain several interesting problems concerning the ways in which linear prediction analyses are implemented. One of these open questions concerns the properties of the normalized LPC prediction-error signal. In particular, there have been few investigations into the variation of the prediction error as a function of the position of the analysis frame within a single stationary speech segment. This paper presents results of a fairly intensive investigation into this question.

II. LPC ERROR SIGNAL

To set the problem in its proper perspective it is worthwhile reviewing the LPC analysis model. Fig. 1 shows a block diagram of an LPC analysis system. The input speech signal is denoted as $s(n)$. A $p$th order linear predictor operates on $s(n)$ to produce the estimate $\hat{s}(n)$ defined as

$$\hat{s}(n) = \sum_{k=1}^{p} a_k s(n-k) \tag{1}$$

where the $a_k$ are chosen to minimize the mean-squared error between the actual $s(n)$ and the predicted value $\hat{s}(n)$.
We define this difference as

$$e(n) = s(n) - \hat{s}(n) = s(n) - \sum_{k=1}^{p} a_k s(n-k). \tag{2}$$

[Fig. 1. Block diagram of LPC analysis system.]

The $a_k$ are thus chosen to minimize the mean-squared error $E$, defined as

$$E = \sum_{n=n_0}^{n_1} e^2(n) \tag{3}$$

where the limits in (3) are appropriately chosen. We shall denote the sequence of samples of $e(n)$ from $n = n_0$ to $n = n_1$ as the analysis frame. It can readily be shown that an equivalent expression for the mean-squared error is

$$E = \sum_{i=0}^{p} \sum_{j=0}^{p} a_i c_{ij} a_j \tag{4}$$

where $a_0 = -1$, and $c_{ij}$ is the autocorrelation of the signal, defined as

$$c_{ij} = \sum_{n=n_0}^{n_1} s(n-i)\, s(n-j).$$

Since the predictor coefficients which minimize $E$ satisfy

$$\sum_{j=1}^{p} a_j c_{ij} = c_{0i}, \qquad i = 1, \ldots, p$$

(4) is further simplified to the form

$$E = -\sum_{j=0}^{p} a_j c_{0j}.$$

The normalized LPC error $E_N$ is defined by normalizing $E$ by the signal energy, i.e.,

$$E_N = \frac{E}{c_{00}}$$

or

$$E_N = -\sum_{j=0}^{p} \frac{a_j c_{0j}}{c_{00}}.$$

Manuscript received November 16, 1976; revised March 22, 1977, and May 9, 1977.
The authors are with Bell Laboratories, Murray Hill, NJ 07974.

Authorized licensed use limited to: Escuela Superior de Ingeneria Mecanica. Downloaded on November 26, 2009 at 20:19 from IEEE Xplore. Restrictions apply.
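The definitions of Section II can be sketched numerically. The following is a minimal illustration, not the authors' implementation: it builds the matrix $c_{ij}$ for the covariance method (skipping the first $p$ samples of the frame) and for the autocorrelation method (Hamming-windowed frame), solves the normal equations, and evaluates the normalized error $E_N$ as the frame is moved one sample at a time. The test signal is a synthetic two-pole resonator driven by a pulse train plus weak noise; the pole positions, the 84-sample pitch period, the order `p = 4`, and all function names are assumptions made for the example.

```python
import numpy as np

def covariance_matrix(frame, p):
    """c[i, j] = sum over n = p..N-1 of s(n-i) s(n-j) (covariance method)."""
    N = len(frame)
    delayed = np.stack([frame[p - i : N - i] for i in range(p + 1)])
    return delayed @ delayed.T

def autocorrelation_matrix(frame, p, window):
    """Toeplitz c[i, j] = r(|i-j|) of the windowed frame (autocorrelation method)."""
    x = frame * window
    r = np.array([x[: len(x) - k] @ x[k:] for k in range(p + 1)])
    lags = np.abs(np.subtract.outer(np.arange(p + 1), np.arange(p + 1)))
    return r[lags]

def normalized_error(c):
    """Solve sum_j a_j c_ij = c_0i, then E_N = (c_00 - sum_j a_j c_0j) / c_00."""
    a = np.linalg.lstsq(c[1:, 1:], c[1:, 0], rcond=None)[0]
    return (c[0, 0] - a @ c[0, 1:]) / c[0, 0]

# Synthetic "voiced" signal (hypothetical stand-in for the paper's speech data).
rng = np.random.default_rng(0)
n_samples, period, p, N = 1000, 84, 4, 200
excitation = 0.01 * rng.standard_normal(n_samples)
excitation[::period] += 1.0              # one pulse per assumed pitch period
s = np.zeros(n_samples)
for n in range(n_samples):               # stable two-pole resonator
    s[n] = excitation[n]
    if n >= 1:
        s[n] += 1.3 * s[n - 1]
    if n >= 2:
        s[n] -= 0.8 * s[n - 2]

# Normalized error as a function of the frame position, one sample at a time.
positions = range(400)
en_cov = np.array(
    [normalized_error(covariance_matrix(s[k : k + N], p)) for k in positions]
)
en_auto = np.array(
    [normalized_error(autocorrelation_matrix(s[k : k + N], p, np.hamming(N)))
     for k in positions]
)
print("covariance      E_N range:", en_cov.min(), en_cov.max())
print("autocorrelation E_N range:", en_auto.min(), en_auto.max())
```

Plotting `en_cov` and `en_auto` against `positions` gives curves of the kind the paper calls prediction-error sequences; the numerical values depend entirely on the synthetic signal chosen here.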

RABINER et al.: LPC PREDICTION ERROR-ITS VARIATION 435

[Figs. 2-5. Typical signals and spectra for the LRR IY vowel and the SAW AH vowel (M = 14, N = 200), using the covariance method and the autocorrelation method with a Hamming window, for a male speaker and a female speaker. Panels: signal and error signal versus time (samples); signal spectrum and error spectrum, log magnitude (dB) versus frequency (0-5 kHz).]

Figs. 2-5 illustrate some typical analysis frames for two of the well-known methods of LPC analysis; i.e., the covariance method and the autocorrelation method.1 Part (a) of each figure shows the signal samples used in the analysis; part (b) shows the resulting error signal $e(n)$; part (c) shows the signal spectrum (as obtained using an FFT analysis) as well as

1 Results are not presented for the lattice method [3]-[7] since they are almost identical to those obtained for the covariance method.

2 These figures show all samples used in the analysis. For the covariance method the first $p$ samples are not used in computing the prediction residual.

[Fig. 6. Prediction-error sequences for 200 samples of speech for three LPC systems. Recoverable panel labels: LRR IY vowel, M = 14, N = 200; signal energy and normalized error versus time (samples, 0-400); autocorrelation method with Hamming window; autocorrelation method with rectangular window.]

not linearly predictable. The magnitude of this variation is considerably smaller for the analysis using the Hamming window (30-percent variation) than for the analysis with the rectangular window (about 500-percent variation) due to the tapering of the Hamming window at the ends of the analysis window. Another component of the high-frequency variation of the prediction error is related to the position of the analysis frame with respect to pitch pulses, as discussed previously for the covariance method.
However, this component of the error is much less a factor for the autocorrelation analysis than for the covariance method, especially in the case when a Hamming window is used, since new pitch pulses that enter the analysis frame are tapered by the window.

[Fig. 7. Prediction-error sequences for N = 400 sample frames.]

Fig. 7 illustrates the effect of using a longer analysis frame (40 ms) on the prediction error for both the covariance method [Fig. 7(b)] and the autocorrelation method using a Hamming window [Fig. 7(c)]. Similar effects to those obtained in Fig. 6 are obtained. However, the magnitude of the variation in the prediction error is smaller. For the covariance method [Fig. 7(b)] this is true since the frame always contains at least four complete pitch periods, and most of the time five pitch periods are contained within the frame. Thus the prediction error is smaller only when the analysis frame is positioned so that only four error peaks are included within the frame. For the autocorrelation method [Fig. 7(c)] the contribution of the first $p$ (14) error terms to the prediction error is a smaller total percentage of the overall error for a 40-ms frame than for a 20-ms frame. Thus again the variation in the high-frequency component of the error is reduced.

Fig. 8 illustrates the results obtained for two cases of a pitch-synchronous analysis using the covariance method. Fig. 8(a) and (b) show the signal energy and prediction error using a single period (8.4 ms), whereas Fig. 8(c) and (d) show the results for an analysis frame which is two periods (16.9 ms) long. It is seen in these figures that even though the prediction error shows long flat regions (i.e., little or no variation with the analysis-frame position) for both pitch-synchronous analyses, even slight changes in the pitch period lead to sharp discontinuities in the prediction error. These results strongly suggest that the sensitivity of the prediction error to small errors in choosing the pitch period for pitch-synchronous

prediction error is the number of whole pitch periods contained within the analysis frame.

It is important to be able to place some perspective on the importance and implications of these results. The prediction error is a measure of the success with which a frame of speech can be linearly predicted. Thus variations of 30 to 500 percent in the prediction error due to slight shifts of the analysis-frame position mean that the prediction error must be carefully interpreted and carefully used in any application that is based on it. One such application is the computation of distance proposed by Itakura [10] based on a ratio of prediction errors. In this application the quantity $D(a, \hat{a})$, defined as

$$D(a, \hat{a}) = \log\left[\frac{a V a^t}{\hat{a} V \hat{a}^t}\right] \tag{13}$$

is computed, in which $V$ is a correlation matrix and the terms $E_2 = \hat{a} V \hat{a}^t$ and $E_1 = a V a^t$ are prediction residuals or normalized LPC errors based on using the LPC sets $\hat{a}$ and $a$. What we have shown is that, depending on the analysis method and the frame position, variations of $E_2$ on the order of 30-500 percent can be obtained. The implications of this variation in defining LPC distance are discussed in Section V.

A second case in which variation of the prediction error with analysis-frame position may be important is the LPC vocoder.
It can be argued that analysis frames with the smallest prediction error may lead to the best estimates of pole center frequencies and bandwidths since the peaks in the error signal due to the pitch pulses essentially make the analysis more noisy and subject to numerical errors. To test this conjecture LPC synthesis results were obtained using frames with both the highest and lowest prediction errors and the resultant synthetic utterances were compared. We discuss these comparisons in Section VI.

IV. METHODS OF REDUCING THE VARIABILITY OF THE PREDICTION ERROR

In order to reduce the variability in the LPC prediction error with the position of the analysis frame, two distinct preprocessing methods were investigated. These were as follows.

1) Allpass filtering of $s(n)$ to reduce the peakedness of $e(n)$ at the beginning of each pitch period; i.e., to spread out the error pulse in $e(n)$.

2) Preemphasizing $s(n)$ by a first-order network to reduce the effects of the high-frequency error in $e(n)$ at the beginning of the frame by making the magnitude of $e(n)$ larger throughout the frame.

Results of using these two techniques are presented in Figs. 10 and 11. Fig. 10 shows the effect on the prediction error of using a 24th-order allpass filter6 [12] to spread out (i.e., phase disperse) the signal for the covariance method [Fig. 10(b)] and for the autocorrelation method using a Hamming window [Fig. 10(c)]. By contrasting these results with those previously shown in Fig. 6, the following effects are noted.

6 For this implementation the signal was processed by a cascade of three of the eighth-order allpass filters of [9]. The detailed nature of the allpass filter is not critical. It is important that the effective duration of the impulse response of the allpass filter be large enough to spread out the excitation over the pitch period, but not so large as to smear the temporal variations of the predictor coefficients.

1) For the covariance method the allpass filter effectively spreads out the sharp changes in the prediction error signal. A pitch-synchronous variation in the prediction error representing low-frequency (gradual) changes is now the dominant effect of varying the position of the analysis frame. In addition, a small noiselike component rides on this low-frequency signal.
The noise is due primarily to the detailed shape of the error signal from the linear prediction analysis.

2) For the autocorrelation method the effect of the allpass filtering is essentially negligible because the dominant error terms were shown to be related to the analysis method rather than to details of the error signal itself. Thus a substantial high-frequency variation of the prediction error remains after the allpass filter is applied.

Fig. 11 shows the results obtained for the autocorrelation method when a first-order preemphasis network of the form

$$H(z) = 1 - a z^{-1} \tag{14}$$

with $a = 0.95$ is applied to the speech signal prior to the LPC analysis. Since the major effect of the preemphasis network is to reduce the spectral variations in the input speech signal, the prediction error increases in value quite significantly over the values obtained without the preemphasizer. The effect of this increased normalized error is to essentially swamp out the high-frequency variation in the prediction error due to the first $p$ samples since the error signal is uniformly higher throughout the analysis interval. Thus, as seen in Fig. 11(b), the prediction error shows considerably smaller variation with respect to the position of the analysis frame when the equalizer is used than when it is not used.

We did not study the effect of preemphasis for the covariance method. The results for the covariance method cannot be significantly different from those of the autocorrelation method. Therefore the reasons previously stated for the reduction in variability of the prediction error for the autocorrelation method apply equally well to the covariance method; in both cases, the large prediction error resulting from the preemphasis of the speech signal will effectively swamp the smaller frame-dependent variations of the prediction error.

The conclusion of this section is that signal conditioning techniques provide effective methods of reducing the variation in the LPC prediction error with the position of the analysis frame. It should be noted that the signal conditioning techniques discussed in this section were independent of the signal.
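The first-order preemphasis network of (14) corresponds to the difference equation $y(n) = s(n) - a\,s(n-1)$. A minimal sketch follows; the function names are hypothetical, and taking $s(-1) = 0$ at the start of the signal is an assumption of the example rather than something stated in the paper.

```python
import numpy as np

def preemphasize(s, a=0.95):
    """Apply H(z) = 1 - a z^-1, i.e. y(n) = s(n) - a*s(n-1), with s(-1) assumed 0."""
    s = np.asarray(s, dtype=float)
    y = s.copy()
    y[1:] -= a * s[:-1]
    return y

def magnitude_response(w, a=0.95):
    """|H(e^{jw})| for H(z) = 1 - a z^-1, with w in radians per sample."""
    return np.abs(1.0 - a * np.exp(-1j * w))

print(preemphasize([1.0, 1.0, 1.0]))   # a constant input is almost cancelled
print(magnitude_response(0.0))         # gain at zero frequency: 1 - a
print(magnitude_response(np.pi))       # gain at half the sampling rate: 1 + a
```

With $a = 0.95$ the gain rises from $1 - a = 0.05$ at zero frequency to $1 + a = 1.95$ at half the sampling rate, which is the spectral flattening the text refers to.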
Signal-dependent methods for reducing the variability of the prediction error, such as adjusting the position and size of the frame based on the pitch period, can also be applied, but are much more sensitive to accurate determination of pitch.

[Fig. 10. The effects of allpass filtering on the prediction-error sequence. Panels for the LRR IY vowel, allpass filtered, M = 14, N = 200: covariance method; autocorrelation method with Hamming window; normalized error versus time (samples).]

[Fig. 11. The effects of preemphasis on the prediction-error sequence. Panels for the LRR IY vowel, preemphasized, M = 14, N = 200: autocorrelation method with Hamming window; normalized error versus time (samples).]

V. EFFECTS OF PREDICTION-ERROR VARIATION ON AN LPC DISTANCE METRIC

Itakura [10] has proposed a measure of similarity between speech frames with measured LPC sets of $a$ and $\hat{a}$ as

$$D(a, \hat{a}) = \log\left[\frac{a V a^t}{\hat{a} V \hat{a}^t}\right]$$

i.e., the distance between frames is related to the ratio of prediction residuals or normalized LPC errors. (Other LPC distance metrics have been proposed by Gray and Markel [13].) A fundamental implication of the previously given distance measure is that if $a$ and $\hat{a}$ are basically from the same frame, then $D(a, \hat{a})$ should be essentially 0, or $E_1 \approx E_2$. Conversely, if $a$ and $\hat{a}$ are from dissimilar frames, then $D(a, \hat{a})$ should be large. We have already shown that if $a$ and $\hat{a}$ represent LPC sets obtained from positioning the analysis frame in slightly different positions, then the prediction error can vary by 30 percent or more by itself.
This result, by itself, does not mean that $D(a, \hat{a})$ will vary by 30 percent or more, since $E_1$ and $E_2$ are not both minimum prediction errors, but instead $E_1$ is the error obtained when parameter set $a$ is used with the correlation matrix $V$ obtained from parameter set $\hat{a}$. However, this result suggests that significant variations in $D(a, \hat{a})$ might also exist because of variations in the prediction-error term $E_2$ due to the position of the analysis frame.

To test this hypothesis an LPC analysis was carried out on a sample-by-sample basis for a 1.75-s utterance. The autocorrelation method was used with a 20-ms frame size using a Hamming window. The analysis rate was nominally set at 100 frames/s. Thus, within each 10-ms frame, 100 LPC analyses were performed, thereby giving the prediction error at a 10 000-Hz rate. The positions at which the peak prediction error and the minimum prediction error occurred were obtained. From the LPC sets corresponding to these two positions ($a_{\max}$ and $a_{\min}$) the following quantities were computed:

$$D_1(a_{\min}, a_{\max}) = \frac{a_{\min} V_{\max} a_{\min}^t}{a_{\max} V_{\max} a_{\max}^t} \tag{15}$$

$$D_2(a_{\max}, a_{\min}) = \frac{a_{\max} V_{\min} a_{\max}^t}{a_{\min} V_{\min} a_{\min}^t}. \tag{16}$$

Since both sets of $a$'s were from ostensibly the same analysis frame, one would theoretically expect $D_1$ and $D_2$ to be close to 1.0 for all frames. A total of 175 calculations of $D_1$ and $D_2$ were made. Fig. 12(a) shows the distribution of the occurrences of values of $D_1$, $D_2$, and the combined set of $D_1$ and $D_2$. Of these 175 calculations, $D_1$ exceeded a threshold of 1.2 a total of 97 times, and $D_2$ exceeded this threshold a total of 85 times; i.e., more than 50 percent of the frames were classified as dissimilar to shifted versions of the frames using a 20-percent variation threshold. Additionally, in many cases, the distances exceeded the 20-percent threshold by a considerable margin.

The previous experiment was repeated on the same sentence after it had been preemphasized using the network discussed in the previous section. The results for this case are shown in Fig. 12(b). For the 175 frames tested, $D_1$ exceeded the threshold of 1.2 a total of 34 times, and $D_2$ exceeded this threshold a total of 31 times. Of these 67 cases, the majority occurred in regions where large signal changes were occurring; i.e., in speech transitions, etc., where such behavior is entirely anticipated. These results indicate that signal preconditioning is a useful technique when a distance measure of the type previously described is to be used to compare LPC parameter sets.7

The implication of the results presented in this section is that in the worst case, when one compares LPC sets obtained from positioning the analysis frame so as to give the maximum and minimum prediction errors, fairly large distances between such frames can be obtained. Since such frames are physically the same this result suggests that spuriously large

7 Results are not given for the covariance method, since the LPC distance of (13) is almost always used with the autocorrelation method.
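The worst-case comparison of Section V can be sketched as follows. This is a hedged illustration on a synthetic signal, not a reproduction of the paper's experiment: within one 100-sample interval it finds the frame positions of maximum and minimum normalized error and evaluates the ratios of (15) and (16). The signal model, the order `p = 4`, the chosen interval, and the helper names are all assumptions. Each LPC set is stored as the vector $(a_0, \ldots, a_p)$ with $a_0 = -1$, so that $a V a^t$ equals the prediction error of (4).

```python
import numpy as np

def autocorrelation_matrix(frame, p):
    """Toeplitz correlation matrix V of the Hamming-windowed frame."""
    x = frame * np.hamming(len(frame))
    r = np.array([x[: len(x) - k] @ x[k:] for k in range(p + 1)])
    lags = np.abs(np.subtract.outer(np.arange(p + 1), np.arange(p + 1)))
    return r[lags]

def lpc_vector(V):
    """Return (a_0, ..., a_p) with a_0 = -1 minimizing a V a^t."""
    a = np.linalg.solve(V[1:, 1:], V[1:, 0])
    return np.concatenate(([-1.0], a))

def error_ratio(a, V_ref, a_ref):
    """(a V_ref a^t) / (a_ref V_ref a_ref^t); at least 1 when a_ref is optimal for V_ref."""
    return (a @ V_ref @ a) / (a_ref @ V_ref @ a_ref)

# Synthetic "voiced" signal (hypothetical; same construction idea as a pulse-driven resonator).
rng = np.random.default_rng(0)
n_samples, period, p, N = 1000, 84, 4, 200
excitation = 0.01 * rng.standard_normal(n_samples)
excitation[::period] += 1.0
s = np.zeros(n_samples)
for n in range(n_samples):
    s[n] = excitation[n]
    if n >= 1:
        s[n] += 1.3 * s[n - 1]
    if n >= 2:
        s[n] -= 0.8 * s[n - 2]

# 100 analyses, one per sample, within a single nominal frame interval.
Vs = [autocorrelation_matrix(s[k : k + N], p) for k in range(300, 400)]
As = [lpc_vector(V) for V in Vs]
EN = np.array([(a @ V @ a) / V[0, 0] for a, V in zip(As, Vs)])
i_max, i_min = int(EN.argmax()), int(EN.argmin())

D1 = error_ratio(As[i_min], Vs[i_max], As[i_max])   # form of (15)
D2 = error_ratio(As[i_max], Vs[i_min], As[i_min])   # form of (16)
print("D1 =", D1, " D2 =", D2)
```

Because each denominator uses the LPC set that is optimal for its own correlation matrix, both ratios are at least 1; how far they exceed 1 for this synthetic signal says nothing about the speech results reported in the paper.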

REFERENCES

[1] B. S. Atal and S. L. Hanauer, "Speech analysis and synthesis by linear prediction of the speech wave," J. Acoust. Soc. Amer., vol. 50, pp. 637-655, 1971.
[2] F. Itakura and S. Saito, "A statistical method for estimation of speech spectral density and formant frequencies," Electron. Commun. Japan, vol. 53-A, pp. 36-43, 1970.
[3] F. Itakura and S. Saito, "Digital filtering techniques for speech analysis and synthesis," in Proc. 7th Int. Congr. Acoustics (Budapest, 1971), Paper 25C1.
[4] J. D. Markel and A. H. Gray, Linear Prediction of Speech. Berlin: Springer, 1976.
[5] J. Makhoul, "Linear prediction: A tutorial review," Proc. IEEE, vol. 63, pp. 561-580, 1975.
[6] J. Burg, "A new analysis technique for time series data," NATO Advanced Study Institute on Signal Processing, Enschede, The Netherlands, 1968.
[7] J. Makhoul, "New lattice methods for linear prediction," in Proc. 1976 IEEE Int. Conf. Acoustics, Speech, and Signal Processing, Apr. 1976, pp. 462-465.
[8] G. S. Kang, "Application of linear prediction encoding to a narrowband voice digitizer," Naval Res. Lab. Rep. 7774, Oct. 1974.
[9] G. S. Kang and D. C. Coulter, "600-bit-per-second voice digitizer (linear predictive formant vocoder)," Naval Res. Lab. Rep. 8043, Nov. 1976.
[10] F. Itakura, "Minimum prediction residual principle applied to speech recognition," IEEE Trans. Acoust., Speech, Signal Processing, vol. ASSP-23, pp. 67-72, 1975.
[11] S. Chandra and W. C. Lin, "Experimental comparison between stationary and nonstationary formulations of linear prediction applied to speech," IEEE Trans. Acoust., Speech, Signal Processing, vol. ASSP-22, pp. 403-415, 1974.
[12] L. R. Rabiner and R. E. Crochiere, "On the design of all-pass signals with peak amplitude constraints," Bell Syst. Tech. J., vol. 55, pp. 395-407, Apr. 1976.
[13] A. H. Gray and J. D. Markel, "Distance measures for speech processing," IEEE Trans. Acoust., Speech, Signal Processing, vol. ASSP-24, pp. 380-391, Oct. 1976.
[14] C. A. McGonegal, L. R. Rabiner, and A. E. Rosenberg, "A semiautomatic pitch detector (SAPD)," IEEE Trans. Acoust., Speech, Signal Processing, vol. ASSP-23, pp. 570-574, Dec. 1975.

A Necessary and Sufficient Condition for Quantization Errors to be Uniform and White

Abstract-In this paper, a necessary and sufficient condition is given to model the output of a quantizer as an infinite-precision input and an additive, uniform, white noise. The statistical properties of the quantization error are studied, and a detailed analysis for Gaussian distributed inputs is given.

I. INTRODUCTION

THE IMPLEMENTATION of filters with digital devices having finite word-length introduces unavoidable quantization errors. These effects have been widely studied [6]-[8]. There are three main sources of quantization error that can arise: input quantization, coefficient quantization, and quantization in arithmetic operations. Once a model to represent input quantization error has been developed, models to represent the other two types of error can easily be obtained [6]-[8].
Hence, our attention in this paper will be on input quantization, which occurs in the analog-to-digital conversion process.

Manuscript received September 17, 1976; revised February 14, 1977. This work was supported in part by the National Science Foundation under Grant ENG74-07800 and in part by the National Institutes of Health, Division of Research Resources, under Grant RR00396.
A. B. Sripad is with the Department of Systems Science and Mathematics, Washington University, St. Louis, MO 63130.
D. L. Snyder is with the Department of Electrical Engineering and the Biomedical Computer Laboratory, Washington University, St. Louis, MO 63130.

A quantizer can be viewed as a nonlinear mapping from the domain of continuous-amplitude inputs onto one of a countable number of possible output levels. The analysis of errors introduced with this mapping can be approached either using nonlinear deterministic methods [9] or using stochastic methods [1]-[8]. The latter approach is the one we adopt in this paper.

With the stochastic method, the output of the quantizer is modeled as an infinite-precision input and an additive noise. The additive noise is a random variable whose distribution is nonzero only over an interval equal to the quantization step size.
Widrow [1] showed that under the condition that the input random variable has a certain band-limited characteristic function, the quantization noise is uniformly distributed; this is frequently referred to as the "Quantization Theorem" [1]-[5].1 The band-limitedness assumption on the input random variable is a sufficient condition that is not satisfied universally. In this paper, a weaker sufficient condition which is also necessary for the Quantization Theorem is given. This expands the class of input distributions for which the uniform noise model to represent quantization errors can be used with confidence and is discussed in Section III.

1 More precisely, Widrow established that if the input random variable has a band-limited characteristic function, then the input distribution can be recovered from the quantized-output distribution, and the quantization noise density is uniform [1], [2]. Because of its dominant importance in applications, we are interested in the latter part of this result, and refer to it as the "Quantization Theorem" for brevity.
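The additive-noise model described above can be checked empirically. The sketch below is only an illustration under stated assumptions: a rounding quantizer with step size `step`, and a Gaussian input whose standard deviation equals the step size (a hypothetical choice for which the uniform model is expected to hold closely). It verifies that the error stays within half a step and compares its sample mean and variance with the values 0 and $\Delta^2/12$ of a uniform density of width $\Delta$. It does not test whiteness.

```python
import numpy as np

def quantize(x, step):
    """Uniform rounding quantizer: map x to the nearest multiple of step."""
    return step * np.round(x / step)

rng = np.random.default_rng(1)
step = 1.0                                   # quantization step size (Delta)
x = rng.normal(0.0, 1.0 * step, size=200_000)
err = quantize(x, step) - x                  # quantization error

# Fraction of errors in each of 10 equal bins across one step.
counts, _ = np.histogram(err, bins=10, range=(-step / 2, step / 2))
print("max |error|       :", np.abs(err).max())
print("sample mean       :", err.mean())
print("sample variance   :", err.var(), "vs step^2/12 =", step**2 / 12)
print("histogram fraction:", counts / err.size)
```

For much coarser quantization (an input standard deviation well below the step size) the error density would no longer be expected to look uniform, which is the regime where a precise condition of the kind the paper gives becomes relevant.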