Hacker Bits, August 2016

HACKER BITS is the monthly magazine that gives you the hottest technology stories crowdsourced by the readers of Hacker News. We select from the top voted stories and publish them in an easy-to-read magazine format. Get HACKER BITS delivered to your inbox every month! For more, visit https://hackerbits.com/2016-08.



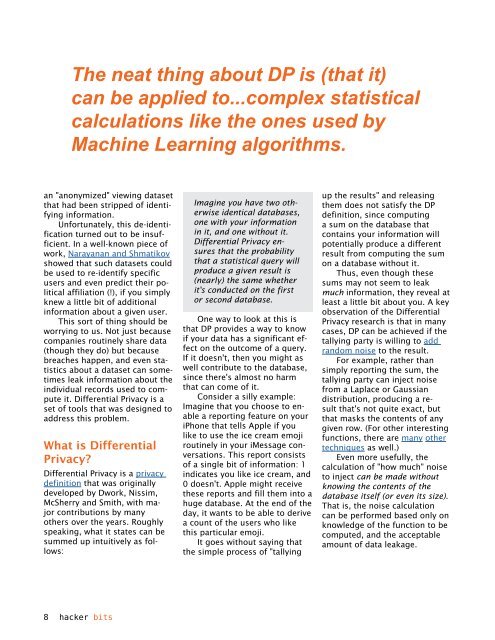

The neat thing about DP is (that it) can be applied to...complex statistical calculations like the ones used by Machine Learning algorithms.

an "anonymized" viewing dataset that had been stripped of identifying information. Unfortunately, this de-identification turned out to be insufficient. In a well-known piece of work, Narayanan and Shmatikov showed that such datasets could be used to re-identify specific users and even predict their political affiliation (!), if you simply knew a little bit of additional information about a given user.

This sort of thing should be worrying to us. Not just because companies routinely share data (though they do) but because breaches happen, and even statistics about a dataset can sometimes leak information about the individual records used to compute them. Differential Privacy is a set of tools that was designed to address this problem.

What is Differential Privacy?

Differential Privacy is a privacy definition that was originally developed by Dwork, Nissim, McSherry and Smith, with major contributions by many others over the years. Roughly speaking, what it states can be summed up intuitively as follows:

Imagine you have two otherwise identical databases, one with your information in it, and one without it. Differential Privacy ensures that the probability that a statistical query will produce a given result is (nearly) the same whether it's conducted on the first or second database.
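For readers who prefer notation, the intuition above is usually formalized along the following lines. The symbols are not from the article and are introduced here only for illustration: $\mathcal{M}$ is the randomized procedure that answers the query, $D_1$ and $D_2$ are the two databases (with and without your record), $S$ is any set of possible results, and $\varepsilon \ge 0$ is the privacy parameter.

```latex
\Pr[\mathcal{M}(D_1) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[\mathcal{M}(D_2) \in S]
\quad \text{for all sets of outputs } S \text{ and all pairs } D_1, D_2 \text{ differing in one record.}
```

Because the condition must hold with the two databases swapped as well, "(nearly) the same" corresponds to the ratio of the two probabilities staying between $e^{-\varepsilon}$ and $e^{\varepsilon}$. A smaller $\varepsilon$ means the two cases are harder to tell apart.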

One way to look at this is that DP provides a way to know if your data has a significant effect on the outcome of a query. If it doesn't, then you might as well contribute to the database, since there's almost no harm that can come of it.

Consider a silly example: imagine that you choose to enable a reporting feature on your iPhone that tells Apple if you like to use the ice cream emoji routinely in your iMessage conversations. This report consists of a single bit of information: 1 indicates you like ice cream, and 0 indicates you don't. Apple might receive these reports and collect them in a huge database. At the end of the day, it wants to be able to derive a count of the users who like this particular emoji.

It goes without saying that the simple process of "tallying up the results" and releasing them does not satisfy the DP definition, since computing a sum on the database that contains your information will potentially produce a different result from computing the sum on a database without it.
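To make the point concrete, here is a minimal sketch of the exact tally run on two otherwise identical databases. The report values and the names `tally`, `others` and `your_bit` are made up for illustration.

```python
def tally(reports):
    """Exact count of users whose one-bit report is 1."""
    return sum(reports)

# Hypothetical reports from other users (1 = likes the ice cream emoji).
others = [1, 0, 1, 1, 0, 0, 1]
your_bit = 1

without_you = tally(others)
with_you = tally(others + [your_bit])

# Anyone who sees both exact tallies can subtract them and recover your bit.
leaked_bit = with_you - without_you
print(without_you, with_you, leaked_bit)  # 4 5 1
```

An observer who knows everyone else's reports, or who sees the tally before and after you join, learns your bit exactly.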

Thus, even though these sums may not seem to leak much information, they reveal at least a little bit about you. A key observation of the Differential Privacy research is that in many cases, DP can be achieved if the tallying party is willing to add random noise to the result.

For example, rather than simply reporting the sum, the tallying party can inject noise from a Laplace or Gaussian distribution, producing a result that's not quite exact, but that masks the contents of any given row. (For other interesting functions, there are many other techniques as well.)
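The noisy tally described above might be sketched as follows for the Laplace case. This is an illustration only, not a hardened implementation: the function names and the `scale` parameter are hypothetical, and the noise is sampled as the difference of two exponential draws, which follows a Laplace distribution centered at zero.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale  # expovariate takes a rate, i.e. 1 / mean
    return rng.expovariate(rate) - rng.expovariate(rate)

def noisy_tally(reports, scale, rng=None):
    """Return the exact sum of one-bit reports plus Laplace noise."""
    if rng is None:
        rng = random.Random()
    return sum(reports) + laplace_noise(scale, rng)

# Hypothetical database: 1000 one-bit reports, 400 of them set to 1.
reports = [1] * 400 + [0] * 600
print(noisy_tally(reports, scale=2.0))
```

Each released value is off by a few counts, which barely matters for a tally over many users but is enough to blur the contribution of any single row. A larger `scale` gives more masking and a less accurate result.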

Even more usefully, the calculation of "how much" noise to inject can be made without knowing the contents of the database itself (or even its size). That is, the noise calculation can be performed based only on knowledge of the function to be computed, and the acceptable amount of data leakage.
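For Laplace noise, the standard calibration illustrates this. The article does not give a formula, so the notation below is an assumption: $f$ is the function being computed, $\Delta f$ is its sensitivity (the most its output can change when one record is added or removed), $\varepsilon$ is the acceptable leakage, and $b$ is the scale of the Laplace noise.

```latex
\Delta f = \max_{D_1, D_2 \text{ differing in one record}} \lvert f(D_1) - f(D_2) \rvert,
\qquad
b = \frac{\Delta f}{\varepsilon}
```

For the emoji count, one user can change the sum by at most 1, so $\Delta f = 1$ and $b = 1/\varepsilon$. Neither quantity depends on which rows are in the database or how many there are.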
