Intra-note Features Prediction Model for Jazz Saxophone Performance

Intra-note Features Prediction Model for Jazz Saxophone Performance

Intra-note Features Prediction Model for Jazz Saxophone Performance

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

INTRA-NOTE FEATURES PREDICTION MODEL FOR JAZZ<br />

SAXOPHONE PERFORMANCE<br />

Rafael Ramirez Amaury Hazan Esteban Maestre<br />

Pompeu Fabra University<br />

Music Technology Group - IUA<br />

Ocata 1, 08003 Barcelona, Spain<br />

{rafael, ahazan, emaestre}@iua.upf.es<br />

ABSTRACT<br />

Expressive per<strong>for</strong>mance is an important issue in music<br />

which has been studied from different perspectives. In<br />

this paper we describe an approach to investigate musical<br />

expressive per<strong>for</strong>mance based on inductive machine<br />

learning. In particular, we focus on the study of variations<br />

on intra-<strong>note</strong> features (e.g. attack) that a saxophone<br />

interpreter introduces in order to expressively per<strong>for</strong>m a<br />

<strong>Jazz</strong> standard. The study of these features is intended to<br />

build on our current system which predicts expressive deviations<br />

on <strong>note</strong> duration, <strong>note</strong> onset and <strong>note</strong> energy.<br />

1. INTRODUCTION<br />

<strong>Model</strong>ing expressive music per<strong>for</strong>mance is one of the most<br />

challenging aspects of computer music. The focus of this<br />

paper is the study of how skilled musicians (saxophone<br />

<strong>Jazz</strong> players in particular) express and communicate their<br />

view of the musical and emotional content of musical pieces<br />

by introducing deviations and changes of various parameters.<br />

In the past, we have studied expressive deviations<br />

on <strong>note</strong> duration, <strong>note</strong> onset and <strong>note</strong> energy [11,<br />

10]. We have used this study as the basis of an inductive<br />

content-based trans<strong>for</strong>mation system <strong>for</strong> per<strong>for</strong>ming<br />

expressive trans<strong>for</strong>mation on musical phrases.<br />

In this paper we focus on the study of the intra-<strong>note</strong><br />

features (i.e. attack, sustain, release) that the interpreter<br />

introduces in order to expressively per<strong>for</strong>m a piece. In<br />

particular, we apply machine learning techniques to induce<br />

a predictive model <strong>for</strong> the type of attack, sustain and<br />

release of a <strong>note</strong> according to the context in which the <strong>note</strong><br />

appears.<br />

The rest of the paper is organized as follows: Section<br />

2 briefly describes how we extract a melodic description<br />

from audio recordings. Section 3 describes the approach<br />

we have followed <strong>for</strong> the induction of the predictive model<br />

of the mentioned intra-<strong>note</strong> features. Section 4 reports on<br />

some related work, and finally Section 5 presents some<br />

conclusions and indicates some areas of future research.<br />

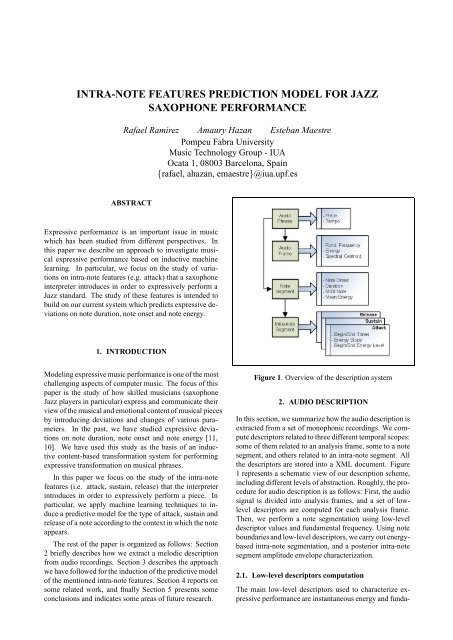

Figure 1. Overview of the description system<br />

2. AUDIO DESCRIPTION<br />

In this section, we summarize how the audio description is<br />

extracted from a set of monophonic recordings. We compute<br />

descriptors related to three different temporal scopes:<br />

some of them related to an analysis frame, some to a <strong>note</strong><br />

segment, and others related to an intra-<strong>note</strong> segment. All<br />

the descriptors are stored into a XML document. Figure<br />

1 represents a schematic view of our description scheme,<br />

including different levels of abstraction. Roughly, the procedure<br />

<strong>for</strong> audio description is as follows: First, the audio<br />

signal is divided into analysis frames, and a set of lowlevel<br />

descriptors are computed <strong>for</strong> each analysis frame.<br />

Then, we per<strong>for</strong>m a <strong>note</strong> segmentation using low-level<br />

descriptor values and fundamental frequency. Using <strong>note</strong><br />

boundaries and low-level descriptors, we carry out energybased<br />

intra-<strong>note</strong> segmentation, and a posterior intra-<strong>note</strong><br />

segment amplitude envelope characterization.<br />

2.1. Low-level descriptors computation<br />

The main low-level descriptors used to characterize expressive<br />

per<strong>for</strong>mance are instantaneous energy and funda-

mental frequency. Energy is computed on the spectral domain,<br />

using the values of the amplitude spectrum. For the<br />

estimation of the instantaneous fundamental frequency we<br />

use a harmonic matching model, the Two-Way Mismatch<br />

procedure (TWM) [8]. After a first test of this implementation,<br />

some improvements to the original algorithm<br />

where implemented and reported in [6].<br />

2.2. Note segmentation<br />

Note segmentation is per<strong>for</strong>med using a set of frame descriptors,<br />

which are energy computation in different frequency<br />

bands and fundamental frequency. Energy onsets<br />

are first detected following a band-wise algorithm that<br />

uses some psycho-acoustical knowledge [7]. In a second<br />

step, fundamental frequency transitions are also detected.<br />

Finally, both results are merged to find the <strong>note</strong> boundaries.<br />

2.3. Note descriptor computation<br />

We compute <strong>note</strong> descriptors using the <strong>note</strong> boundaries<br />

and the low-level descriptors values. The low-level descriptors<br />

associated to a <strong>note</strong> segment are computed by<br />

averaging the frame values within this <strong>note</strong> segment. Pitch<br />

histograms have been used to compute the pitch <strong>note</strong> and<br />

the fundamental frequency that represents each <strong>note</strong> segment,<br />

as found in [9].<br />

2.4. <strong>Intra</strong>-<strong>note</strong> description<br />

We per<strong>for</strong>m intra-<strong>note</strong> segmentation based on energy envelope<br />

contour. Once <strong>note</strong> boundaries are found, energy<br />

envelopes of <strong>note</strong>s extracted from the recordings and divided<br />

into three segments, namely attack, sustain, release.<br />

Figure 2 shows the representation of these segments <strong>for</strong> a<br />

melody fragment.<br />

The procedure <strong>for</strong> intra-<strong>note</strong> energy-based description<br />

is outlined as follows: We consider the audio <strong>note</strong> segments<br />

as differentiable function over time. We compute<br />

the zero-crossings of third derivative [Jenssen] in order<br />

to select the segment characteristic points, i.e. maximum<br />

curvature points. Then, we join these points by straight<br />

lines, con<strong>for</strong>ming a set of consecutive linear segments.<br />

We compute the slopes and durations (i.e. the projection<br />

on the time axis) of the linear fragments, and finally define<br />

an attack, a sustain and a release section. We characterize<br />

a the complete envelope of a <strong>note</strong> by the following<br />

(self explanatory) six descriptors: Attack Relative Duration<br />

(ARD), Attack Regression Slope (ARS), Attack Relative<br />

End Value (AREV), Sustain Regression Slope (SS),<br />

release Relative Duration (RRD), and Release Regression<br />

Slope (RS). We obtained a correlation coefficient of 0.83<br />

<strong>for</strong> our data set of 4360 <strong>note</strong>s.<br />

3. INTRA-NOTE PREDICTIVE MODEL<br />

In this section, we describe our inductive approach <strong>for</strong><br />

learning the intra-<strong>note</strong> predictive model from per<strong>for</strong>mances<br />

Figure 2. Example of linear envelope approximation of a<br />

sequence of saxophone <strong>note</strong>s<br />

of <strong>Jazz</strong> standards by a skilled saxophone player. Our aim<br />

is to find an intra-<strong>note</strong>-level model which predict how a<br />

particular <strong>note</strong> in a particular context should be played<br />

(i.e. predict its envelope). This, we believe, will improve<br />

our current model which predicts <strong>note</strong>-level deviations on<br />

<strong>note</strong> duration, <strong>note</strong> onset and <strong>note</strong> energy. As we have<br />

pointed out in the past, We are aware of the fact that not<br />

all the expressive trans<strong>for</strong>mations per<strong>for</strong>med by a musician<br />

can be predicted at a local <strong>note</strong> level. Musicians per<strong>for</strong>m<br />

music considering a number of abstract structures<br />

(e.g. musical phrases) which makes of expressive per<strong>for</strong>mance<br />

a multi-level phenomenon. In this context, our ultimate<br />

aim is to obtain an integrated model of expressive<br />

per<strong>for</strong>mance which combines <strong>note</strong>-level knowledge with<br />

structure-level knowledge. Thus, the work presented in<br />

this paper may be seen as part of a starting step towards<br />

this ultimate aim.<br />

Training data. The training data used in our experimental<br />

investigations are monophonic recordings of four <strong>Jazz</strong><br />

standards (Body and Soul, Once I loved, Like Someone in<br />

Love and Up Jumped Spring) per<strong>for</strong>med by a professional<br />

musician at 11 different tempos around the nominal tempo<br />

(apart from the tempo requirements, the musician was not<br />

given any particular instructions on how to per<strong>for</strong>m the<br />

pieces). A set of melodic features is extracted from the<br />

recordings which is stored in a structured <strong>for</strong>mat. The<br />

per<strong>for</strong>mances are then compared with their corresponding<br />

scores in order to automatically compute the trans<strong>for</strong>mations<br />

per<strong>for</strong>med.<br />

Descriptors. In this paper, we are concerned with intra<strong>note</strong><br />

(in particular the <strong>note</strong>’s envelope) expressive trans<strong>for</strong>mations.<br />

Given a <strong>note</strong>’s musical context in the score,<br />

we are interested in inducing a model <strong>for</strong> predicting aspects<br />

of the <strong>note</strong>’s envelope. The musical context of each<br />

<strong>note</strong> is defined in a structured way using first order logic<br />

predicates. The predicate melo/10 specifies in<strong>for</strong>mation<br />

both about the <strong>note</strong> itself and the local context in which it<br />

appears. In<strong>for</strong>mation about intrinsic properties of the <strong>note</strong><br />

includes the <strong>note</strong> duration and the <strong>note</strong>’s metrical position,<br />

while in<strong>for</strong>mation about its context includes the duration<br />

of previous and following <strong>note</strong>s, and extension and direction<br />

of the intervals between the <strong>note</strong> and both the previous<br />

and the subsequent <strong>note</strong>. contextnarmour/4

specifies in<strong>for</strong>mation about the Narmour groups a particular<br />

<strong>note</strong> belongs to. Temporal aspect of music is encoded<br />

via predicate succ/4. For instance, succ(A,B,C,D)<br />

indicates that <strong>note</strong> in position D in the excerpt indexed by<br />

the tuple (A,B) follows <strong>note</strong> C.<br />

The use of first order logic <strong>for</strong> specifying the musical<br />

context of each <strong>note</strong> is much more convenient than using<br />

traditional attribute-value (propositional) representations.<br />

Encoding both the notion of successor <strong>note</strong>s and<br />

Narmour group membership would be very difficult using<br />

a propositional representation. In order to mine the<br />

structured data we used Tilde’s top-down decision tree induction<br />

algorithm ([2]). Tilde can be considered as a first<br />

order logic extension of the C4.5 decision tree algorithm:<br />

instead of testing attribute values at the nodes of the tree,<br />

Tilde tests logical predicates. This provides the advantages<br />

of both propositional decision trees (i.e. efficiency<br />

and pruning techniques) and the use of first order logic<br />

(i.e. increased expressiveness). The increased expressiveness<br />

of first order logic not only provides a more elegant<br />

and efficient specification of the musical context of a <strong>note</strong>,<br />

but it provides a more accurate predictive model (more on<br />

this later). Tilde can also be used to build multivariate regression<br />

trees, i.e. trees able to predict vectors. In our<br />

case the predicted vectors are the amplitude envelope linear<br />

approximation descriptors, which can be seen as a first<br />

step towards a more complete (e.g. pitch and centroid envelope),<br />

and more refined (e.g. using spline regression)<br />

intra-<strong>note</strong> description.<br />

Table 1 compares the correlation coefficients obtained<br />

by 10-fold cross validation of a propositional regression<br />

tree model and our first order logic regression tree model<br />

<strong>for</strong> each descriptor in the amplitude envelope prediction<br />

task. On the one hand, the first order logic model takes<br />

into account a wider <strong>note</strong>’s musical context by considering<br />

an arbitrary temporal window around the <strong>note</strong> (via<br />

succ/4 predicate). On the other hand, the propositional<br />

model only consider the <strong>note</strong>’s local context, i.e. the temporal<br />

in<strong>for</strong>mation is restricted to the duration and pitch of<br />

the previous and following <strong>note</strong>s. In Table 1 ARD refers<br />

to Attack Relative Duration, ARS to Attack Regression<br />

Slope, AREV to Attack Relative End Value, SS to Sustain<br />

Regression Slope, RRD to release Relative Duration, and<br />

RS to Release Regression Slope. The pruned tree models<br />

have an average size of 69 nodes <strong>for</strong> the propositional<br />

model, and 123 nodes <strong>for</strong> the first order logic model. We<br />

also obtained correlation coefficients of 0.71 and 0.58 <strong>for</strong><br />

the complete amplitude envelope prediction task by per<strong>for</strong>ming<br />

a 10-fold cross-validation <strong>for</strong> the first order logic<br />

model and the propositional model, respectively. Table 2<br />

shows the average absolute error (AAE) and the root mean<br />

squared error (RMSE) <strong>for</strong> each descriptor in the amplitude<br />

envelope prediction task.<br />

4. RELATED WORK<br />

Previous research in building per<strong>for</strong>mance models in a<br />

somehow structured musical context has included a broad<br />

Morphological attribute C.C (Prop) C.C(FOL)<br />

ARD 0.19 0.27<br />

ARS 0.39 0.51<br />

AREV 0.51 0.65<br />

SS 0.22 0.24<br />

RRD 0.24 0.31<br />

RRS 0.31 0.40<br />

Table 1. Comparision of Pearson Correlation coefficients<br />

obtained by 10-fold cross-validation <strong>for</strong> propositional and<br />

first order logic models.<br />

Morphological attribute AAE RMSE<br />

ARD 0.11 0.19<br />

ARS 0.05 0.08<br />

AREV 0.65 0.91<br />

SS 0.01 0.01<br />

RRD 0.20 0.26<br />

RRS 0.05 0.11<br />

Table 2. Errors <strong>for</strong> each descriptor in the amplitude envelope<br />

prediction task by the first order logic model obtained<br />

by 10-fold cross validation<br />

spectrum of music domains.<br />

Widmer et al [12] have focused on the task of discovering<br />

general rules of expressive classical piano per<strong>for</strong>mance<br />

as well as generating them from real per<strong>for</strong>mance<br />

data via inductive machine learning. The per<strong>for</strong>mance<br />

data used <strong>for</strong> the study are MIDI and audio recordings of<br />

piano sonatas by W.A. Mozart per<strong>for</strong>med by a skilled pianist.<br />

In addition to these data, the music score along with<br />

hierarchical phrase structure description done by hand was<br />

also coded. The resulting substantial data consists of in<strong>for</strong>mation<br />

about the nominal <strong>note</strong> onsets, duration, metrical<br />

in<strong>for</strong>mation and annotations. However, given that<br />

they are interested in classical piano per<strong>for</strong>mances, they<br />

do not study intra-<strong>note</strong> expressive variations. Here, we<br />

are interested in saxophone recordings of <strong>Jazz</strong> standards,<br />

thus we have studied deviations on local duration, onset<br />

and energy, as well as ornamentations [11]. In [10], we<br />

generate saxophone expressive per<strong>for</strong>mances by predicting<br />

local <strong>note</strong> deviations.<br />

In [4], Dannenberg et al study trumpet envelopes by<br />

computing amplitude envelopes descriptors in a total of<br />

125 contours (i.e. 3 <strong>note</strong>s sequences varying in interval<br />

size, direction, and articulation). Statistical analysis techniques<br />

led to find significant groupings of the envelopes<br />

by interval or direction types, or more specific intentions<br />

(e.g. staccato, legato). This work is extended to a system<br />

that combines instrument and per<strong>for</strong>mance models ([3]).<br />

The authors do not take into account duration, onset deviations<br />

or ornamentations.<br />

Dubnov et al [5] have followed a similar line. Analysis<br />

of sound behavior as it occurs in course of an actual per<strong>for</strong>mances<br />

in several solo works is per<strong>for</strong>med, in order to<br />

build a model able to reproduce aspects of sound texture

originated by expressive inflexions of the per<strong>for</strong>mer. Correlations<br />

between pitch, energy, and spectral envelopes<br />

variations are studied, phase coherence between pitch and<br />

energy, and decomposition between periodic component<br />

and noise component are investigated. To our knowledge,<br />

these models are devised after a preliminary statistical analysis<br />

rather than being induced from the training data. A<br />

possible reason may be the difficulties to parameterize continuos<br />

data <strong>for</strong>m real world acoustic recordings and feed a<br />

machine learning component with the parameterized data.<br />

A first step towards continuous signal parameterization<br />

is done in [1], where voice pitch-continuous signals,<br />

namely indian gamakas, are parameterized with Bezier<br />

splines. An approximation of amplitude, pitch, and centroid<br />

curves is obtained, and the reduced data can be used<br />

to render similar signals. Nevertheless, the proposed representation<br />

lacks of a higher level context (e.g. attack,<br />

sustain) that can be used to analyze and synthesize in a<br />

more accurate way specific parts of the audio signal.<br />

5. CONCLUSION<br />

This paper describes an inductive logic programming approach<br />

<strong>for</strong> learning expressive per<strong>for</strong>mance trans<strong>for</strong>mations<br />

at the intra-<strong>note</strong> level. In particular, we have focused<br />

on the study of variations on intra-<strong>note</strong> features that<br />

a saxophone interpreter introduces in order to expressively<br />

per<strong>for</strong>m <strong>Jazz</strong> standards. We have compared the induced<br />

first order logic model and a propositional model and concluded<br />

that the increased expressiveness of first order logic<br />

not only provides a more elegant and efficient specification<br />

of the musical context of a <strong>note</strong>, but it provides a<br />

more accurate predictive model than the one obtained with<br />

propositional machine learning techniques.<br />

Future work: This paper presents work in progress so<br />

there is future work in different directions. We plan to<br />

explore different envelope approximations, e.g. pitch and<br />

centroid envelope or splines, <strong>for</strong> characterizing the <strong>note</strong>s’<br />

envelopes. Another short term goal is to incorporate the<br />

intra-<strong>note</strong> model described in this paper into our current<br />

system which predicts expressive deviations on <strong>note</strong> duration,<br />

<strong>note</strong> onset and <strong>note</strong> energy. This will give us a<br />

better validation of the intra-<strong>note</strong> model. We also plan to<br />

increase the amount of training data as well as experiment<br />

with different in<strong>for</strong>mation encoded in it. Increasing the<br />

training data, extending the in<strong>for</strong>mation in it and combining<br />

it with background musical knowledge will certainly<br />

generate a more complete model.<br />

Acknowledgments: This work is supported by the Spanish<br />

TIC project ProMusic (TIC 2003-07776-C02-01). We<br />

would like to thank Emilia Gomez and Maarten Grachten<br />

<strong>for</strong> pre-processing and providing the data.<br />

6. REFERENCES<br />

[1] B. Battey. Bezier spline modeling of pitchcontinuous<br />

melodic expression and ornamentation.<br />

Computer Music Journal, 28:4, 2004.<br />

[2] H. Blockeel, L. De Raedt, and J. Ramon. Top-down<br />

induction of clustering trees. In ed. J. Shavlik, editor,<br />

Proceedings of the 15th International Conference on<br />

Machine Learning, pages 53–63, Madison, Wisconsin,<br />

USA, 1998. Morgan Kaufmann.<br />

[3] R.B. Danneberg and I. Derenyi. Combining instrument<br />

and per<strong>for</strong>mance models <strong>for</strong> high quality music<br />

synthesis. Journal of New Music Research, 27:3,<br />

1998.<br />

[4] R.B. Danneberg, H. Pellerin, and I. Derenyi. A study<br />

of trumpet envelopes. In Proceedings of the International<br />

Computer Music Conference., San Fransisco:<br />

International Computer Music Association., 1998.<br />

[5] S. Dubnov and X. Rodet. Study of spectro-temporal<br />

parameters in musical per<strong>for</strong>mance, with applications<br />

<strong>for</strong> expressive instrument synthesis. In 1998<br />

IEEE International Conference on Systems Man and<br />

Cybernetics, San Diego, USA, November 1998.<br />

[6] E. Gómez. Melodic Description of Audio Signals<br />

<strong>for</strong> Music Content Processing. PhD thesis, Pompeu<br />

Fabra University, 2002.<br />

[7] A. Klapuri. Sound onset detection by applying psychoacoustic<br />

knowledge. In Proceedings of the IEEE<br />

International Conference on Acoustics, Speech and<br />

Signal Processing,ICASSP, 1999.<br />

[8] R.C. Maher and J.W. Beauchamp. Fundamental frequency<br />

estimation of musical signals using a twoway<br />

mismatch procedure. Journal of the Acoustic<br />

Society of America, 95, 1994.<br />

[9] R.J. McNab, Smith Ll. A., and Witten I.H. Signal<br />

processing <strong>for</strong> melody transcription. In SIG working<br />

paper, volume 95-22. 1996.<br />

[10] R. Ramirez and A. Hazan. <strong>Model</strong>ing expressive<br />

music per<strong>for</strong>mance in jazz. In Proceedings of the<br />

18th Florida Artificial Intelligence Research Society<br />

Conference (FLAIRS 2005), Clearwater Beach, FL,<br />

2005.<br />

[11] R. Ramirez, A. Hazan, E. Gmez, and E. Maestre.<br />

Understanding expressive trans<strong>for</strong>mations in saxophone<br />

jazz per<strong>for</strong>mances using inductive machine<br />

learning. In Proceedings of Sound and Music Computing<br />

’04, Paris, France, 2004.<br />

[12] G. Widmer and A. Tobudic. Playing mozart by<br />

analogy: Learning multi-level timing and dynamics<br />

strategies. Journal of New Music Research, 2003.