Acquisition


<strong>Acquisition</strong><br />

Dr. Yossi Rubner<br />

Some slides from: Yung-Yu Chuang (DigiVfx), Jan Neumann, Pat Hanrahan, Alexei Efros<br />

Outline<br />

• Pinhole camera<br />

• Lens<br />

• Lens Aberrations<br />

• Exposure<br />

• Sensors<br />

• Sensor Noise<br />

• Camera Pipeline<br />

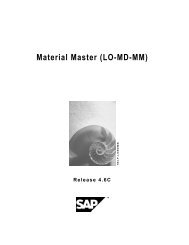

Image/Video <strong>Acquisition</strong><br />

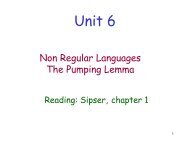

Camera trial #1<br />

source: Yung-Yu Chuang<br />

Film<br />

Digital Camera<br />

scene film<br />

Put a piece of film in front of an object.<br />


Pinhole camera<br />

(Figure: scene, barrier, film)<br />

Add a barrier to block off most of the rays<br />

• It reduces blurring<br />

• The pinhole is known as the aperture<br />

• The image is inverted<br />

The Pinhole Camera Model (where)<br />

(X,Y,Z): scene point, (x,y): image point, d – focal length<br />

$$\begin{pmatrix} x \\ y \\ w \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & -\frac{1}{d} & 0 \end{pmatrix} \begin{pmatrix} X \\ Y \\ Z \\ 1 \end{pmatrix}$$<br />

Image coordinates: $x_p = x / w$, $y_p = y / w$<br />

(Figure: axes X, Y, Z; center of projection (pinhole))<br />
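A minimal sketch of the pinhole projection, assuming the matrix rows (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, −1/d, 0) and that image coordinates are obtained by dividing by w. The function name `project_pinhole` and the sample point are hypothetical.

```python
def project_pinhole(X, Y, Z, d):
    """Project scene point (X, Y, Z) through a pinhole with focal length d."""
    # 3x4 projection matrix of the pinhole model (assumed form, see lead-in)
    P = [
        [1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, -1.0 / d, 0.0],
    ]
    point = [X, Y, Z, 1.0]  # homogeneous scene point
    x, y, w = (sum(p * c for p, c in zip(row, point)) for row in P)
    if w == 0:
        raise ValueError("point lies in the plane of the pinhole (Z = 0)")
    # Dividing by w gives image coordinates; the sign flip is the image inversion
    return x / w, y / w


# Hypothetical example: a point 10 m away, focal length 50 mm (units: meters)
x_p, y_p = project_pinhole(1.0, 2.0, 10.0, d=0.05)
print(x_p, y_p)
```

The negative image coordinates for a point with positive X and Y reflect that the image is inverted.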

Shrinking the aperture<br />

Why not make the aperture as small as possible?<br />

• Less light gets through<br />

• Diffraction effect<br />


Shrinking the aperture<br />

Sharpest image is obtained when the pinhole diameter is<br />

$$d = 2\sqrt{f'\lambda}$$<br />

Example: If f’ = 50mm, λ = 600nm (red), d ≈ 0.35mm<br />
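A short sketch that evaluates the sharpest-image pinhole diameter, assuming the rule reads d = 2·sqrt(f'·λ) with f' the pinhole-to-film distance and λ the wavelength, both in meters. The function name is hypothetical.

```python
import math


def optimal_pinhole_diameter(f_prime, wavelength):
    """Pinhole diameter (m) giving the sharpest image, assuming d = 2*sqrt(f' * lambda)."""
    return 2.0 * math.sqrt(f_prime * wavelength)


# Values from the example: f' = 50 mm, lambda = 600 nm (red)
d = optimal_pinhole_diameter(50e-3, 600e-9)
print(f"{d * 1e3:.3f} mm")  # prints "0.346 mm"
```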

Pinhole Images<br />

Exposure 4 seconds; Exposure 96 minutes<br />

Images copyright © 2000 Zero Image Co.<br />

High-end commercial pinhole cameras<br />

~$200<br />

Adding a lens<br />

(Figure: scene, lens, film, “circle of confusion”)<br />

A lens focuses light onto the film<br />

• There is a specific distance at which objects are “in focus”<br />

• Other points project to a “circle of confusion” in the image<br />

(Thin) Lens<br />

Thin lens equation: $\frac{1}{d_o} + \frac{1}{d_i} = \frac{1}{f}$, where $d_o$ is the object distance, $d_i$ the image distance and $f$ the focal length<br />

• Any object point satisfying this equation is in focus<br />

Circle of Confusion<br />

The circle of confusion is proportional to the size of the aperture<br />
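A minimal sketch of the thin lens relation and the resulting blur circle, assuming the standard form 1/d_o + 1/d_i = 1/f and similar-triangle geometry for the cone of light behind the lens. The symbols d_o, d_i, the function names and the numeric values are assumptions made for illustration, and objects are assumed to lie beyond the focal distance.

```python
def image_distance(f, d_o):
    """Solve the thin lens equation 1/d_o + 1/d_i = 1/f for the image distance d_i."""
    if d_o == f:
        raise ValueError("an object at the focal distance is imaged at infinity")
    return 1.0 / (1.0 / f - 1.0 / d_o)


def blur_circle_diameter(f, aperture, d_focus, d_o):
    """Diameter of the circle of confusion on the film.

    The film sits where objects at distance d_focus are sharp; a point at
    distance d_o converges at d_i, so the cone of light from the aperture
    is cut by the film at a circle of diameter aperture * |d_i - film| / d_i.
    """
    film = image_distance(f, d_focus)
    d_i = image_distance(f, d_o)
    return aperture * abs(d_i - film) / d_i


# Hypothetical setup: 50 mm lens focused at 2 m, object at 4 m (units: meters)
wide = blur_circle_diameter(0.05, 0.025, 2.0, 4.0)      # 25 mm aperture
narrow = blur_circle_diameter(0.05, 0.0125, 2.0, 4.0)   # 12.5 mm aperture
print(wide, narrow)
```

Halving the aperture halves the blur circle, which is the proportionality stated on the slide.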


Aperture controls Depth of Field<br />

• Changing the aperture affects depth of field<br />

– Smaller aperture:<br />

• larger depth of field (DOF)<br />

• less light, so a longer exposure is needed<br />

Thick Lens<br />

• Corrects aberrations<br />

• Change zoom<br />

Depth of Field<br />

Field of View (Zoom)<br />

http://www.cambridgeincolour.com/tutorials/depth-of-field.htm<br />


Field of View (Zoom)<br />

FOV depends on focal length f<br />

Smaller FOV = larger focal length<br />

Simplified Zoom Lens in Operation<br />
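To make the dependence of FOV on focal length concrete, here is a small sketch using the usual pinhole-geometry relation FOV = 2·atan(w / 2f). The sensor width w does not appear on the slide and is an assumption, as are the function name and sample values.

```python
import math


def field_of_view_deg(sensor_width, focal_length):
    """Horizontal field of view in degrees, assuming FOV = 2 * atan(w / (2 f))."""
    return math.degrees(2.0 * math.atan(sensor_width / (2.0 * focal_length)))


# Hypothetical 36 mm wide sensor with three focal lengths (mm)
for f in (18.0, 50.0, 200.0):
    print(f, field_of_view_deg(36.0, f))
```

The printed angles shrink as f grows, matching "Smaller FOV = larger focal length".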


From wikipedia<br />


Radial Distortion<br />

No distortion Pin cushion Barrel<br />

• Radial distortion of the image<br />

– Caused by imperfect lenses<br />

– Deviations are most noticeable for rays that pass through the edge of the lens<br />

Radial Distortions<br />

No Distortion Barrel Distortion Pincushion Distortion<br />

• Radial distance from Image Center:<br />

$$r_u = r_d + k_1 r_d^3$$<br />

$r_u$ = undistorted radius<br />

$r_d$ = distorted radius<br />
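A minimal sketch of applying the one-coefficient radial model r_u = r_d + k_1·r_d³ to image points, assuming coordinates are measured relative to the image center. The function names and the value of k_1 are hypothetical.

```python
def undistort_radius(r_d, k1):
    """Undistorted radius from distorted radius: r_u = r_d + k1 * r_d**3."""
    return r_d + k1 * r_d ** 3


def undistort_point(x_d, y_d, k1, center=(0.0, 0.0)):
    """Move a distorted point along its ray from the image center to radius r_u."""
    cx, cy = center
    dx, dy = x_d - cx, y_d - cy
    r_d = (dx * dx + dy * dy) ** 0.5
    if r_d == 0.0:
        return x_d, y_d  # the image center is not displaced
    scale = undistort_radius(r_d, k1) / r_d
    return cx + dx * scale, cy + dy * scale


# Hypothetical coefficient; a point near the center moves less than one near the edge
print(undistort_point(0.1, 0.0, k1=0.1))
print(undistort_point(1.0, 0.0, k1=0.1))
```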

Correcting radial distortion<br />

from Helmut Dersch<br />

Correcting Radial Distortions<br />

Before After<br />

http://www.grasshopperonline.com/barrel_distortion_correction_software.html<br />


Correcting Radial Distortions - Video<br />

Video courtesy of Vigilant Ltd.<br />

Vignetting<br />

(Figure: lens elements L3, L2, L1; scene points A and B)<br />

• More light passes through lens L3 for scene point A than scene point B<br />

• Results in spatially non-uniform brightness (in the periphery of the image)<br />

photo by Robert Johnes<br />

Chromatic Aberration<br />

(Figure panels: longitudinal chromatic aberration (axial); transverse chromatic aberration (lateral))<br />

Chromatic Aberration<br />

longitudinal chromatic aberration (axial): Canon EF 85/1.2 L USM<br />

transverse chromatic aberration (lateral): Cosina 3.5-4.5/19-35 @ 20 mm<br />

Good lens: Carl Zeiss Distagon 2.8/21<br />

http://www.vanwalree.com/optics/chromatic.html<br />

Chromatic Aberration<br />

Spherical aberration<br />

• Rays parallel to the axis do not converge to a single point<br />

• Outer portions of the lens yield smaller focal lengths<br />

(Figure panels: Near Lens Center; Near Lens Outer Edge)<br />

Spherical lenses do not focus well, even when free of chromatic aberration. A parabolic lens does.<br />

Astigmatism<br />

Different focal length for inclined rays<br />

Lens Glare<br />

• Stray inter-reflections of light within the optical lens system<br />

• Happens when very bright sources are present in the scene<br />

Reading: http://www.dpreview.com<br />

Coma<br />

A point off the axis is depicted as a comet-shaped blob<br />


Exposure<br />

• Two main parameters:<br />

– Aperture (in f stop)<br />

– Shutter speed (in fraction of a second)<br />

Aperture<br />

• Aperture is the diameter D of the lens opening, usually specified by the f-stop N = f/D, so that D = f/N is a fraction of the focal length f.<br />

– f/2.0 on a 50mm lens means that the aperture is 25mm<br />

– f/2.0 on a 100mm lens means that the aperture is 50mm<br />

• Each change of one full f-stop either doubles the light or cuts it in half.<br />

• Lower f-stop, more light (larger lens opening)<br />

• Higher f-stop, less light (smaller lens opening)<br />
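The f-stop arithmetic can be sketched as follows, assuming the f-number is N = f/D and that the light gathered scales with the aperture area, i.e. with 1/N². The function names are hypothetical.

```python
def aperture_diameter(focal_length, f_number):
    """Diameter of the lens opening: D = f / N."""
    return focal_length / f_number


def relative_light(f_number, reference_f_number):
    """Light admitted at f_number relative to reference_f_number (area ratio)."""
    return (reference_f_number / f_number) ** 2


print(aperture_diameter(50.0, 2.0))   # 25.0 (mm), as in the slide example
print(aperture_diameter(100.0, 2.0))  # 50.0 (mm)
# One full stop multiplies N by sqrt(2), which halves the light
print(relative_light(2.0 * 2 ** 0.5, 2.0))
```

The familiar stop markings (1.4, 2, 2.8, 4, ...) are rounded powers of sqrt(2), which is why each step halves or doubles the light.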

Types of Shutters<br />

Leaf Shutter<br />

• Advantages<br />

– Uniform illumination<br />

– Entire frame illuminated at once<br />

• Disadvantages<br />

– Illumination not constant over time<br />

– Limitations on shutter speed<br />


Focal Plane Shutter<br />

• Advantages<br />

– Cost effective (one shutter needed for all lenses)<br />

– Can achieve very fast shutter speeds (~1/10000 sec)<br />

• Disadvantages<br />

– May cause time distortion<br />

Aperture vs. Shutter<br />

Depth of Field<br />

(Figure panels: f/22, Small Aperture (Low speed); f/4, Large Aperture (High speed))<br />

Shutter speed – Rule of Thumb<br />

• Handheld camera: shutter speed = 1 / f sec, where f is the focal length in mm<br />

• Stabilized gear: 2-3 stops slower<br />

– Optical image stabilization<br />

• Canon - IS (Image Stabilizer) lenses<br />

• Nikon - VR (Vibration Reduction) lenses<br />

• Sigma - OS (Optical Stabilizer) lenses<br />

– CCD-shift stabilization<br />

• Konica Minolta’s Anti-Shake cameras<br />

• Pentax’s Shake-Reduction cameras<br />

Aperture vs. Shutter<br />

Motion Blur<br />

(Figure panels: 1/30 sec. @ f/22, Small Aperture (Low speed); 1/6400 sec. @ f/2.5, Large Aperture (High speed))<br />

High Dynamic Range Image<br />

Short exposure<br />

(Figure: real world radiance, dynamic range $10^{-6}$ to $10^{6}$, mapped to picture intensity, pixel value 0 to 255)<br />

Long exposure<br />

(Figure: real world radiance, dynamic range $10^{-6}$ to $10^{6}$, mapped to picture intensity, pixel value 0 to 255)<br />

Varying shutter speeds<br />

HDR – High Dynamic Range<br />

Spatial Sampling<br />

• When a continuous scene is imaged on the sensor, the continuous image is divided into discrete elements - picture elements (pixels)<br />

Sampling<br />

• The density of the sampling determines the separation capability of the resulting image<br />

• Image resolution defines the finest details that are still visible in the image<br />

• We use a cyclic pattern to test the separation capability of an image<br />

Sampling Frequency<br />

Nyquist Frequency<br />

• Nyquist Rule: To observe details at frequency f (wavelength d) one must sample at frequency > 2f (sampling intervals < d/2)<br />

• The frequency 2f is the Nyquist rate.<br />

• Aliasing: If the pattern wavelength is less than twice the sampling interval, erroneous patterns may be produced.<br />

1D Example: (figure)<br />
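A small 1D sketch of the Nyquist rule and aliasing, assuming a pure sinusoidal pattern sampled at a uniform rate. The function names and the frequencies are hypothetical.

```python
import math


def sample_sine(freq, sample_rate, n_samples):
    """Samples of sin(2*pi*freq*t) taken at t = k / sample_rate."""
    return [math.sin(2.0 * math.pi * freq * k / sample_rate) for k in range(n_samples)]


def apparent_frequency(freq, sample_rate):
    """Frequency the samples appear to have, folded into [0, sample_rate / 2]."""
    folded = freq % sample_rate
    return min(folded, sample_rate - folded)


# A 3 Hz pattern sampled at 10 Hz (> 2f) is observed at 3 Hz
print(apparent_frequency(3.0, 10.0))
# A 9 Hz pattern sampled at 10 Hz (< 2f) shows up as a 1 Hz pattern
print(apparent_frequency(9.0, 10.0))

# The 9 Hz samples are the 1 Hz samples with the sign flipped
high = sample_sine(9.0, 10.0, 20)
low = sample_sine(1.0, 10.0, 20)
print(max(abs(a + b) for a, b in zip(high, low)))  # numerically close to zero
```

Because the two sample sequences differ only in sign, nothing in the sampled data distinguishes the 9 Hz pattern from a 1 Hz one.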

Aliasing - Moiré Patterns<br />

Quantization<br />

Digitizers (Quantization)<br />

Image Sensors<br />

• Considerations<br />

– Speed<br />

– Resolution<br />

– Signal / Noise Ratio<br />

– Cost<br />

MOS (Metal Oxide Semiconductor)<br />

• Photosensitive element<br />

• Charge acquired depends on the number of photons which reach the element<br />

• CCD devices are arrays of this basic element<br />

CCD (Charge Coupled Device)<br />

• Boyle and Smith, 1969, Bell Labs<br />

• Converts light into electrical signal (pixels)<br />

Photoelectric Effect<br />

(Figure: energy band diagram with increasing energy from Valence Band to Conduction Band; 1.26eV; Hole, Electron)<br />

• Thermally generated electrons are indistinguishable from photo-generated electrons → ‘Dark Current’.<br />

CCD Readout<br />

Bucket Brigade<br />

• Integration<br />

• Charge Shift and Read-out<br />

• Charge Amplifier<br />

CMOS (Complementary Metal-Oxide Semiconductor)<br />

• Each pixel has its own charge-to-voltage conversion<br />

• No need for external shutter (electronic shutter)<br />

• The chip outputs digital bits<br />

• Much faster than CCD devices<br />

CCD vs. CMOS<br />

CCD:<br />

• Mature technology<br />

• Specific technology<br />

• High production cost<br />

• High power consumption<br />

• Higher fill factor<br />

• Blooming<br />

• Sequential readout<br />

CMOS:<br />

• Recent technology<br />

• Standard IC technology<br />

• Cheap<br />

• Low power<br />

• Less sensitive<br />

• Per pixel amplification<br />

• Random pixel access<br />

• Smart pixels<br />

• On chip integration with other components<br />

Spectral Response of a Typical CCD<br />

Sensitivity<br />

• Sensitivity is the ratio of the voltage response to the incident light energy.<br />

(Figure: output voltage after reset, dropping by ∆V over the integration time T int; voltage drop (V/s) vs. light intensity (lx), slope = sensitivity (V / lx-s))<br />

Sensor Size Comparison<br />

Fill Factor<br />

• The ratio between the light sensitive pixel area and the total pixel area.<br />

(Figure: total pixel area 5µm x 5µm containing the photo sensing area; fill factor ≈ 40%)<br />

Sensor Parameters<br />

• Fill factor<br />

– The area in the sensor that is truly sensitive to light<br />

– Shift registers and other circuitry can reduce it up to 30%<br />

• Well capacity<br />

– The quantity of charge that can be stored in each pixel<br />

– Close relation with pixel dimensions<br />

• Integration time:<br />

– Exposure time that is required to excite the CCD elements<br />

– Depends on the scene brightness<br />

• <strong>Acquisition</strong> time:<br />

– Time needed to transfer the information gathered by the CCD<br />

– Depends on the number of pixels in the sensor<br />


Sensor noise<br />

Noise Sources<br />

• Photon noise / Shot Noise (Poisson)<br />

• Dark Noise (Constant)<br />

• Thermal noise (Poisson)<br />

• Resetting (fixed)<br />

• Read-out noise (white & 1/f)<br />

• Blooming<br />

(Figure: Poisson Distribution, FT = 5; horizontal axis N from 0 to 100)<br />

(After T. Lomheim, The Aerospace Corporation)<br />

http://www.stw.tu-ilmenau.de/~ff/beruf_cc/cmos/cmos_noise.pdf<br />

Poisson distribution: the standard deviation equals the square root of the mean.<br />

$$\sigma_{shot}^2 = FT = N_{photons}$$<br />

Photon Shot Noise<br />

• Light is quantum in nature<br />

• Noise due to statistics of the detected photons themselves<br />

• The probability distribution for N photons to be counted in an observation time T is Poisson<br />

$$P(N \mid F, T) = \frac{(FT)^N e^{-FT}}{N!}$$<br />

• F = fixed average flux (photons/sec)<br />
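A minimal numerical sketch of the photon-count distribution P(N | F, T) = (FT)^N e^(−FT) / N!, checking that its mean and variance both equal FT. The function names and the truncation of the sums at `n_max` are assumptions for illustration.

```python
import math


def poisson_pmf(n, flux, t):
    """P(N = n | F, T) for average flux F (photons/sec) and observation time T."""
    mean = flux * t
    # Evaluated in log space so that large n does not overflow
    return math.exp(n * math.log(mean) - mean - math.lgamma(n + 1))


def mean_and_variance(flux, t, n_max=200):
    """Mean and variance of the photon count, summing the pmf up to n_max."""
    probs = [poisson_pmf(n, flux, t) for n in range(n_max)]
    mean = sum(n * p for n, p in enumerate(probs))
    var = sum((n - mean) ** 2 * p for n, p in enumerate(probs))
    return mean, var


# FT values used in the plots: 5, 10, 20, 50 (flux in photons/sec, T = 1 sec)
for ft in (5.0, 10.0, 20.0, 50.0):
    print(ft, mean_and_variance(ft, 1.0))
```

For each FT the two printed numbers agree with FT up to truncation and rounding error, so the standard deviation is sqrt(FT).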

(Figure: Poisson Distribution, FT = 10; horizontal axis N from 0 to 100)<br />

(Figure: Poisson Distribution, FT = 20; horizontal axis N from 0 to 100)<br />

Photon Noise<br />

• More noise in bright parts of the image<br />

• You can identify the white and black regions from the noise image<br />

(Figure: Poisson Distribution, FT = 50; horizontal axis N from 0 to 100)<br />

• As FT grows, Poisson distribution approaches Gaussian distribution<br />

• Signal To Noise (SNR) Increases with Mean.<br />

$$SNR = \frac{N^2}{\sigma^2} = \frac{N^2}{N} = N$$<br />

Photon Noise<br />

Photon Noise more noticeable in dark images.<br />

Dark Current Noise<br />

• Electrons are emitted even when there is no light<br />

• Dark current noise is high for long exposures<br />

• To remove (some of) it<br />

– Calibrate the camera (make response linear)<br />

– Capture the image of the scene as usual<br />

– Cover the lens with the lens cap and take another picture<br />

– Subtract the second image from the first image<br />
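The subtraction step can be sketched as below, assuming both frames are linear, taken with the same exposure time, and stored as nested lists of pixel values. Clipping negative results to zero is an added assumption, and the function name and pixel values are hypothetical.

```python
def subtract_dark_frame(image, dark_frame):
    """Subtract a lens-cap (dark) frame from a scene frame, pixel by pixel."""
    if len(image) != len(dark_frame) or any(
        len(row) != len(dark_row) for row, dark_row in zip(image, dark_frame)
    ):
        raise ValueError("image and dark frame must have the same size")
    # Clip at zero so noise in the dark frame cannot produce negative pixels
    return [
        [max(p - d, 0) for p, d in zip(row, dark_row)]
        for row, dark_row in zip(image, dark_frame)
    ]


# Hypothetical 2x2 frames
scene = [[12, 30], [7, 200]]
dark = [[2, 5], [9, 1]]
corrected = subtract_dark_frame(scene, dark)
print(corrected)  # [[10, 25], [0, 199]]
```

This removes only the average dark signal captured in the second frame; the random part of the dark current noise differs between the two exposures and remains.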

Quantum Efficiency<br />

• Not every photon hitting a pixel creates a free electron<br />

• Quantum Efficiency (QE) = electrons collected / photons hitting the pixel<br />

• QE heavily depends on the wavelength<br />

• QE < 100% degrades the SNR of a camera<br />

$$SNR_e = QE \cdot SNR_p$$<br />

• Typical max QE values : 25% (CMOS) … 60% (CCD)<br />

(Figure: QE [%] vs. lambda [nm], from blue through green to red)<br />
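A short sketch of how QE scales the shot-noise SNR, assuming the power-ratio definition SNR = N²/σ² = N used for photon noise in these slides, and that the collected electrons are again Poisson distributed with mean QE·N. The function names and the photon count are hypothetical.

```python
def shot_noise_snr(n):
    """SNR = N^2 / sigma^2 for a Poisson count with mean N (so sigma^2 = N)."""
    if n <= 0:
        raise ValueError("mean count must be positive")
    variance = n
    return n ** 2 / variance


def electron_snr(n_photons, qe):
    """SNR of the collected electrons when only a fraction QE of photons is converted."""
    return shot_noise_snr(qe * n_photons)


# Hypothetical pixel receiving 1000 photons on average
snr_p = shot_noise_snr(1000.0)
snr_e = electron_snr(1000.0, qe=0.5)
print(snr_p, snr_e)
```

With this definition the electron SNR is QE times the photon SNR. If SNR is instead defined as the amplitude ratio N/σ, the same reasoning gives a factor of sqrt(QE).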

Dark Current Noise<br />

(Figure panels: Original image + Dark Current Noise; Image with lens cap on; Result of subtraction)<br />

Sensor noise<br />

(Figure: ideal relationship between electrons and impinging photons; Light Signal (QE = 50); Photon Noise effects (QE = 50); CCD capacity limit)<br />

Copyright Timo Autiokari<br />


Sensor Response<br />

• The response of a sensor is proportional to the radiance and the throughput<br />

Camera pipeline<br />

Measurement Equation<br />

• Scene Radiance L(x,ω,t,λ)<br />

• Optics (x’,ω’) = T(x,ω,λ)<br />

• Pixel Response P(x,λ)<br />

• Shutter S(x,ω,t)<br />


SLR (Single-Lens Reflex)<br />

• Reflex (R in SLR) means that we see through the same lens used to take the image.<br />

• Not the case for compact cameras<br />

Camera calibration<br />

• Geometric<br />

– How pixel coordinates relate to directions in the world<br />

• Photometric<br />

– How pixel values relate to radiance amounts in the world<br />

SLR view finder<br />

(Figure: light from scene, lens, mirror (when viewing), mirror (flipped for exposure), prism, your eye, film/sensor)<br />

Color Chart Calibration<br />

• Important preprocessing step for many vision and graphics algorithms<br />

• Use a color chart with precisely known reflectance<br />

Patch reflectances: 90%, 59.1%, 36.2%, 19.8%, 9.0%, 3.1%<br />

(Figure: pixel values (0 to 255) vs. irradiance = const * reflectance (0 to 1), with the response curve g and its inverse $g^{-1}$)<br />

• Use more camera exposures to fill up the curve<br />

• Method assumes constant lighting on all patches and works best when source is far away<br />

• Unique inverse exists because g is monotonic and smooth<br />
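One possible sketch of recovering the inverse response g⁻¹ from chart measurements, assuming piecewise-linear interpolation between the patches. The interpolation scheme, the function names and the measured pixel values are hypothetical; only the patch reflectances come from the slide.

```python
def make_inverse_response(pixel_values, irradiances):
    """Build g^-1 as a piecewise-linear map from pixel value to relative irradiance."""
    pairs = sorted(zip(pixel_values, irradiances))
    for (p0, e0), (p1, e1) in zip(pairs, pairs[1:]):
        if p1 <= p0 or e1 <= e0:
            raise ValueError("response must be strictly monotonic to be invertible")

    def g_inverse(pixel):
        # Outside the measured range, clamp to the nearest measured patch
        if pixel <= pairs[0][0]:
            return pairs[0][1]
        if pixel >= pairs[-1][0]:
            return pairs[-1][1]
        for (p0, e0), (p1, e1) in zip(pairs, pairs[1:]):
            if p0 <= pixel <= p1:
                return e0 + (e1 - e0) * (pixel - p0) / (p1 - p0)

    return g_inverse


# Chart reflectances from the slide; irradiance = const * reflectance (const taken as 1)
reflectances = [0.031, 0.090, 0.198, 0.362, 0.591, 0.90]
# Hypothetical pixel values measured for those patches
measured_pixels = [40, 75, 115, 160, 205, 245]

g_inv = make_inverse_response(measured_pixels, reflectances)
print(g_inv(40), g_inv(57.5), g_inv(245))
```

The monotonicity check mirrors the slide's condition for a unique inverse. Additional exposures would add more (pixel, irradiance) pairs and fill in the curve between patches.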

Space of response curves<br />

Sensing Color<br />

Multi-Chip<br />

(Figure: light, wavelength dependent beam splitter, 3 CCD)<br />

Field Sequential<br />

Foveon X3 TM<br />

Bayer pattern<br />


Field Sequential<br />

Embedded color filters<br />

Color filters can be manufactured directly onto the photodetectors.<br />

Color filter array<br />

Bayer pattern<br />

Color filter arrays (CFAs)/color filter mosaics<br />


Color filter array<br />

Kodak DCS620x<br />

Color filter arrays (CFAs)/color filter mosaics<br />

Bayer’s pattern<br />

Demosaicking CFA’s<br />

Color filter array<br />

red green blue output<br />


X3 technology<br />

red green blue output<br />

Cameras with X3<br />

Sigma SD10, SD9 Polaroid X530<br />

Hanvision HVDUO 5M/10M<br />

Foveon X3 sensor<br />

(Figure panels: Bayer CFA; X3 sensor)<br />

Color processing<br />

• After color values are recorded, more color processing usually happens:<br />

– White balance<br />

– Non-linearity to approximate film response or match TV monitor gamma<br />

White Balance<br />

(Figure panels: automatic white balance; warmer +3)<br />

Manual white balance<br />

(Figure panels: white balance with the white book; white balance with the red book)<br />

Gamma Correction<br />

• Gamma correction applied by the converter redistributes the pixel luminance values so that the limited brightness range captured by the sensor is “mapped” to match our eye’s sensitivity. Gamma = 2.2 is a good match to distribute relative brightness in a print or in a video display.<br />

(Figure: Gamma = 1 vs. Gamma = 2.2)<br />
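A minimal sketch of gamma correction, assuming linear sensor values normalized to [0, 1] and encoding with the exponent 1/gamma (gamma = 2.2 as on the slide). The function names and the 8-bit quantization step are assumptions for illustration.

```python
def gamma_encode(linear, gamma=2.2):
    """Map a linear value in [0, 1] to a gamma-corrected value in [0, 1]."""
    return linear ** (1.0 / gamma)


def gamma_decode(encoded, gamma=2.2):
    """Inverse mapping: gamma-corrected value back to linear."""
    return encoded ** gamma


def encode_8bit(linear, gamma=2.2):
    """Clip to [0, 1], gamma-encode and quantize to a pixel value 0 to 255."""
    clipped = min(max(linear, 0.0), 1.0)
    return round(255 * gamma_encode(clipped, gamma))


# Mid-gray in linear light ends up well above the middle of the 8-bit range
print(encode_8bit(0.5))  # 186
print(gamma_decode(gamma_encode(0.25)))
```

Encoding spends more of the 0 to 255 codes on dark values, where the eye is more sensitive to relative differences.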

Many Sources for Degradation<br />

• Sampling in space: Pixels<br />

• Sampling in intensity: Quantization<br />

• Sampling in color: Color Filter Array (CFA)<br />

• Sampling in time: Exposure, Interlacing<br />

• Sampling in frequency: Lens and pixel PSF (point-spread-function)<br />