Pietruch R., Grzanka A., Konopka W.: Vowels recognition



16th International Congress on Sound and Vibration, 5–9 July 2009, Kraków, Poland

was made using analysis of acoustical and facial expression parameters.

3. Methods

3.1 Computer system

The presented system runs on Windows XP and is built on DirectShow filter technology. It extracts speech parameters from digital movie files (mostly in MPEG format), visualizes them, and provides methods for archiving. We implemented an automatic face detection algorithm for frontal-view image sequences. The system automatically tracks facial elements in real time, extracts visual parameters including lip shape, compares them with acoustical descriptors, and updates the vocal tract model parameters. Neural network simulation and training were performed on Linux (Ubuntu 8.04) using GNU Octave 3.0.0 with the nnet-0.1.9 package.

3.2 Acoustical model

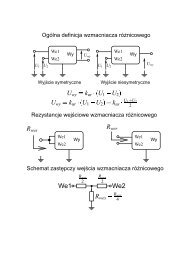

In this work we used a model of the vocal tract divided into 10 sections of resonance cavities. We assumed that the palate is closed and that the waves do not pass through the nasal cavity. There is no energy loss, and only the reflection effect is taken into consideration. Under these assumptions the vocal tract model is equivalent to a lattice filter whose PARCOR parameters are equal to the negative reflection coefficients [7]. The reversed lattice filter can then be transformed into a transversal filter [3], whose parameters can be estimated from the speech signal by linear prediction. An adaptive recursive least squares algorithm was used to estimate the LPC coefficients of the transversal filter [3]. A reverse transformation derives the cross-sectional diameters of the vocal tract sections from given LPC parameters. The transversal filter coefficients were used to track the formant frequencies of six Polish vowels [6]. The first two formants, F1 and F2, were chosen as the acoustical descriptors in the audio recognition system.
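The link between the transversal (LPC) coefficients, the lattice reflection coefficients and the tube cross-sections can be illustrated with a minimal Python sketch. It is not the system's implementation: the function names `step_up`, `step_down` and `tube_areas` are hypothetical, the sign convention of the area ratio is an assumption (conventions differ between texts), and the conversion to diameters assumes circular cross-sections.

```python
import numpy as np

def step_up(k):
    """Reflection coefficients -> prediction-error filter [1, a_1, ..., a_p]."""
    a = np.array([1.0])
    for km in k:
        a_ext = np.append(a, 0.0)
        # Order update: a_i(m) = a_i(m-1) + k_m * a_{m-i}(m-1)
        a = a_ext + km * a_ext[::-1]
    return a

def step_down(a):
    """Prediction-error filter [1, a_1, ..., a_p] -> reflection coefficients."""
    a = np.asarray(a, dtype=float)
    p = len(a) - 1
    k = np.zeros(p)
    for m in range(p, 0, -1):
        km = a[m]              # last coefficient of the order-m filter
        k[m - 1] = km
        # Undo one lattice stage (requires |k_m| < 1)
        a = ((a - km * a[::-1]) / (1.0 - km ** 2))[:m]
    return k

def tube_areas(k, first_area=1.0):
    """Relative section areas; the ratio (1 - k)/(1 + k) is an assumed convention."""
    areas = [first_area]
    for km in k:
        areas.append(areas[-1] * (1.0 - km) / (1.0 + km))
    return np.array(areas)

# Illustrative values only, not data from the paper.
k_true = np.array([0.5, -0.3, 0.2])
lpc = step_up(k_true)
k_est = step_down(lpc)
areas = tube_areas(k_est)
diameters = 2.0 * np.sqrt(areas / np.pi)   # circular sections assumed
```

The round trip through `step_up` and `step_down` recovers the original reflection coefficients, which is the property that lets the section geometry be updated from coefficients estimated on the speech signal.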

3.3 Formant tracking

A formant tracking algorithm was implemented in the system to support vowel recognition. The Christensen algorithm was used to find formant candidates in the spectrum [2]: all minima of the second derivative of the spectrum are selected. For the 4 kHz bandwidth assumed in our study, up to 5 candidates $f^K = \{f^K_1, f^K_2, \dots, f^K_{n_K}\}$ can be found, and they must be assigned to 4 formant numbers $F = \{F_1, F_2, \dots, F_{n_F}\}$. Three situations can occur:

1. $n_K = n_F$

The candidates are assigned in order to the formant numbers by the function $f_{K \to F}$ according to equation 1.

$$f_{K \to F} : f^K_j \to F_i \iff i = j \qquad (1)$$

where $i, j \in \{1, 2, \dots, n_F\}$.

2. $n_K < n_F$

In this situation $n_F - n_K$ formants are left unassigned. Function 2 specifies which formants these are. The function can be expressed with a matrix $BF$ in which each row represents a candidate and each column represents a formant (equation 3). This mapping is found using the best-fit, minimum-cost algorithm 7.

$$f_{K \to F} : f^K_j \to F_i \qquad (2)$$

$$BF(j, i) = 0 \iff f_{K \to F}(j) = i \qquad (3)$$
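As an illustration of case 2, the following Python sketch assigns fewer candidates than formant numbers by exhaustive search over order-preserving assignments. Several parts are assumptions rather than the authors' method: the cost (absolute distance to a reference frequency per formant, for example the previous frame's estimate), the helper names `assign_candidates` and `bf_matrix`, and the value 1 used for the non-zero entries of the matrix.

```python
from itertools import combinations

def assign_candidates(candidates, reference):
    """Map n_K ordered candidates to n_K of the n_F formant slots (0-based).

    The cost is an assumed one: sum of |candidate - reference frequency|.
    """
    n_k, n_f = len(candidates), len(reference)
    if n_k > n_f:
        raise ValueError("this sketch only covers n_K <= n_F")
    best_cost, best_slots = float("inf"), None
    # Each combination is an increasing tuple of slot indices,
    # so the frequency order of the candidates is preserved.
    for slots in combinations(range(n_f), n_k):
        cost = sum(abs(c - reference[i]) for c, i in zip(candidates, slots))
        if cost < best_cost:
            best_cost, best_slots = cost, slots
    return list(best_slots)

def bf_matrix(slots, n_f):
    """Rows are candidates, columns are formants; 0 marks an assignment."""
    return [[0 if i == s else 1 for i in range(n_f)] for s in slots]

# Illustrative frequencies in Hz, not data from the paper.
candidates = [300.0, 2300.0, 3400.0]          # n_K = 3
reference = [350.0, 1200.0, 2400.0, 3500.0]   # n_F = 4
slots = assign_candidates(candidates, reference)
bf = bf_matrix(slots, len(reference))
```

With these values the second formant slot stays unassigned, and when the two sets have equal size the search reduces to the identity assignment of equation 1. Exhaustive search is practical here because at most four formant numbers are involved.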