Optimal transport, Euler equations, Mather and DiPerna-Lions theories

Optimal transport, Euler equations, Mather and DiPerna-Lions theories

Optimal transport, Euler equations, Mather and DiPerna-Lions theories

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

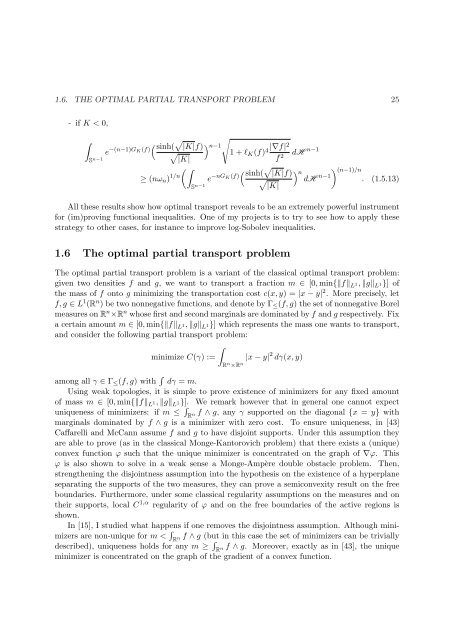

- if K < 0,

$$
\int_{S^{n-1}} e^{-(n-1)G_K(f)} \left(\frac{\sinh(\sqrt{|K|}\,f)}{\sqrt{|K|}}\right)^{n-1} \sqrt{1+\ell_K(f)\,\frac{|\nabla f|^2}{4f^2}}\; d\mathcal{H}^{n-1}
\geq (n\omega_n)^{1/n} \left(\int_{S^{n-1}} e^{-nG_K(f)} \left(\frac{\sinh(\sqrt{|K|}\,f)}{\sqrt{|K|}}\right)^{n} d\mathcal{H}^{n-1}\right)^{(n-1)/n}. \tag{1.5.13}
$$

All these results show that optimal transport is an extremely powerful tool for proving (and improving) functional inequalities. One of my projects is to understand how to apply this strategy to other cases, for instance to improve log-Sobolev inequalities.

1.6 The optimal partial transport problem

The optimal partial transport problem is a variant of the classical optimal transport problem: given two densities $f$ and $g$, we want to transport an amount $m \in [0, \min\{\|f\|_{L^1}, \|g\|_{L^1}\}]$ of the mass of $f$ onto $g$, minimizing the transportation cost $c(x,y) = |x-y|^2$. More precisely, let $f, g \in L^1(\mathbb{R}^n)$ be two nonnegative functions, and denote by $\Gamma_{\le}(f,g)$ the set of nonnegative Borel measures on $\mathbb{R}^n \times \mathbb{R}^n$ whose first and second marginals are dominated by $f$ and $g$ respectively. Fix an amount $m \in [0, \min\{\|f\|_{L^1}, \|g\|_{L^1}\}]$, which represents the mass one wants to transport, and consider the following partial transport problem:

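$$
\text{minimize} \quad C(\gamma) := \int_{\mathbb{R}^n \times \mathbb{R}^n} |x-y|^2 \, d\gamma(x,y)
$$

among all $\gamma \in \Gamma_{\le}(f,g)$ with $\int_{\mathbb{R}^n \times \mathbb{R}^n} d\gamma = m$.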
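To make the definition concrete, here is a minimal sketch, assuming that $f$ and $g$ are replaced by finitely supported measures $\sum_i f_i\delta_{x_i}$ and $\sum_j g_j\delta_{y_j}$ (a discretization that is not part of the text). In that setting the partial problem is a min-cost flow problem of value $m$: each source point can send at most $f_i$, each target point can receive at most $g_j$, and moving mass from $x_i$ to $y_j$ costs $|x_i-y_j|^2$ per unit. The sketch solves it with the successive shortest path method, a standard network-flow technique swapped in here purely for illustration; the function name `partial_transport_cost` is hypothetical.

```python
import math


def partial_transport_cost(xs, f, ys, g, m, tol=1e-12):
    """Hypothetical helper: minimal quadratic cost of moving total mass m from
    sum_i f[i] delta_{xs[i]} to sum_j g[j] delta_{ys[j]}, with the plan's
    marginals dominated by f and g (a discrete analogue of Gamma_<=(f, g)).
    Points are tuples of coordinates. Solved as a min-cost flow of value m
    via successive shortest paths (an illustrative choice of algorithm)."""
    if m > min(sum(f), sum(g)) + tol:
        raise ValueError("m exceeds min(total mass of f, total mass of g)")
    n1, n2 = len(xs), len(ys)
    source, sink = 0, n1 + n2 + 1
    num_nodes = n1 + n2 + 2
    # Residual graph: each edge is [target, capacity, cost, index of reverse edge].
    graph = [[] for _ in range(num_nodes)]

    def add_edge(u, v, cap, cost):
        graph[u].append([v, cap, cost, len(graph[v])])
        graph[v].append([u, 0.0, -cost, len(graph[u]) - 1])

    for i in range(n1):
        add_edge(source, 1 + i, f[i], 0.0)  # source point i can send at most f[i]
    for j in range(n2):
        add_edge(1 + n1 + j, sink, g[j], 0.0)  # target point j can receive at most g[j]
    for i, x in enumerate(xs):
        for j, y in enumerate(ys):
            c = sum((a - b) ** 2 for a, b in zip(x, y))  # |x - y|^2
            add_edge(1 + i, 1 + n1 + j, math.inf, c)

    sent, total_cost = 0.0, 0.0
    while m - sent > tol:
        # Bellman-Ford, since reverse residual edges carry negative costs.
        dist = [math.inf] * num_nodes
        prev = [None] * num_nodes  # (node, edge index) used to reach each node
        dist[source] = 0.0
        for _ in range(num_nodes - 1):
            updated = False
            for u in range(num_nodes):
                if dist[u] == math.inf:
                    continue
                for k, (v, cap, cost, _) in enumerate(graph[u]):
                    if cap > tol and dist[u] + cost < dist[v] - tol:
                        dist[v] = dist[u] + cost
                        prev[v] = (u, k)
                        updated = True
            if not updated:
                break
        if dist[sink] == math.inf:
            break  # no augmenting path left
        # Bottleneck capacity along the path, truncated so the total never exceeds m.
        push = m - sent
        v = sink
        while v != source:
            u, k = prev[v]
            push = min(push, graph[u][k][1])
            v = u
        # Augment along the path and update the reverse edges.
        v = sink
        while v != source:
            u, k = prev[v]
            edge = graph[u][k]
            edge[1] -= push
            graph[v][edge[3]][1] += push
            v = u
        sent += push
        total_cost += push * dist[sink]
    return total_cost


if __name__ == "__main__":
    xs, ys = [(0.0,), (1.0,)], [(1.0,), (2.0,)]
    f, g = [1.0, 1.0], [1.0, 1.0]
    for m in (1.0, 1.5, 2.0):
        print(m, partial_transport_cost(xs, f, ys, g, m))
```

In this toy example $f$ charges the points 0 and 1 and $g$ charges 1 and 2, so the discrete analogue of $\int f \wedge g$ equals 1. The sketch returns cost 0 for $m = 1$ (the common mass stays in place), 1 for $m = 1.5$ and 2 for $m = 2$, reflecting the fact that the minimal cost of a min-cost flow is a convex, piecewise linear function of the transported amount.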

Using weak topologies, it is simple to prove the existence of minimizers for any fixed amount of mass $m \in [0, \min\{\|f\|_{L^1}, \|g\|_{L^1}\}]$. However, uniqueness of minimizers cannot be expected in general: if $m \le \int_{\mathbb{R}^n} f \wedge g$, any $\gamma$ of mass $m$ supported on the diagonal $\{x = y\}$ with marginals dominated by $f \wedge g$ is a minimizer with zero cost, and for $0 < m < \int_{\mathbb{R}^n} f \wedge g$ there are infinitely many such $\gamma$. To ensure uniqueness, Caffarelli and McCann [43] assume that $f$ and $g$ have disjoint supports. Under this assumption they prove (as in the classical Monge-Kantorovich problem) that there exists a (unique) convex function $\phi$ such that the unique minimizer is concentrated on the graph of $\nabla\phi$. This $\phi$ is also shown to solve, in a weak sense, a Monge-Ampère double obstacle problem. Then, strengthening the disjointness assumption to the existence of a hyperplane separating the supports of the two measures, they prove a semiconvexity result for the free boundaries. Furthermore, under some classical regularity assumptions on the measures and on their supports, they show local $C^{1,\alpha}$ regularity of $\phi$ and of the free boundaries of the active regions.
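The conclusion that the minimizer is concentrated on the graph of the gradient of a convex function has a finite, checkable counterpart. A partial minimizer is also an optimal plan for the classical problem between its own marginals, so its support is cyclically monotone, and by Rockafellar's theorem a set is cyclically monotone exactly when it is contained in the subdifferential of a convex function. The brute-force check below (hypothetical helper `is_cyclically_monotone`, exponential in the number of points and meant only for tiny examples) tests this property for a finite set of pairs $(x_i, y_i)$; it says nothing about the regularity or free-boundary results.

```python
from itertools import permutations


def is_cyclically_monotone(pairs, tol=1e-12):
    """Hypothetical helper: brute-force test that finitely many pairs (x_i, y_i)
    form a cyclically monotone set, i.e.
        sum_i <x_i, y_i> >= sum_i <x_sigma(i), y_i>   for every permutation sigma.
    Every permutation is a product of disjoint cycles, so this is equivalent to
    the usual condition over all cycles. For the cost |x - y|^2 it says that no
    rearrangement of the pairs lowers the total transport cost."""

    def dot(a, b):
        return sum(p * q for p, q in zip(a, b))

    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    base = sum(dot(x, y) for x, y in pairs)
    for sigma in permutations(range(len(pairs))):  # n! permutations: tiny inputs only
        if sum(dot(xs[s], ys[i]) for i, s in enumerate(sigma)) > base + tol:
            return False
    return True


if __name__ == "__main__":
    # Order-preserving pairs: consistent with a gradient of a convex function.
    print(is_cyclically_monotone([((0.0, 0.0), (1.0, 0.0)), ((1.0, 0.0), (2.0, 0.0))]))
    # Crossing pairs: swapping the targets lowers the quadratic cost.
    print(is_cyclically_monotone([((0.0, 0.0), (2.0, 0.0)), ((1.0, 0.0), (1.0, 0.0))]))
```

The first set passes and the second fails: sending 0 to 2 and 1 to 1 costs 4, while the rearranged plan sending 0 to 1 and 1 to 2 costs only 2.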

In [15], I studied what happens if one removes the disjointness assumption. Although minimizers are not unique for $m < \int_{\mathbb{R}^n} f \wedge g$ (in this case, however, the set of minimizers can be described trivially), uniqueness holds for any $m \ge \int_{\mathbb{R}^n} f \wedge g$. Moreover, exactly as in [43], the unique minimizer is concentrated on the graph of the gradient of a convex function.
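The threshold $\int_{\mathbb{R}^n} f \wedge g$ separating the two regimes is easy to approximate numerically. The sketch below assumes one-dimensional densities that vanish outside a known interval $[a, b]$ and uses a plain midpoint rule; the helpers `integrate`, `overlap_mass` and `uniqueness_regime` are hypothetical, and the classification simply restates the result recalled above. For $m$ very close to the threshold the quadrature error makes the answer unreliable.

```python
def integrate(h, a, b, n=100_000):
    """Midpoint-rule approximation of the integral of h over [a, b]."""
    step = (b - a) / n
    return step * sum(h(a + (k + 0.5) * step) for k in range(n))


def overlap_mass(f, g, a, b, n=100_000):
    """Approximate int min(f, g), assuming f and g vanish outside [a, b]."""
    return integrate(lambda x: min(f(x), g(x)), a, b, n)


def uniqueness_regime(f, g, m, a, b, n=100_000):
    """Hypothetical helper: report on which side of int f ∧ g the amount m lies,
    following the uniqueness result recalled in the text."""
    total = min(integrate(f, a, b, n), integrate(g, a, b, n))
    if not 0.0 <= m <= total:
        raise ValueError("m must lie in [0, min(||f||_L1, ||g||_L1)]")
    return "unique" if m >= overlap_mass(f, g, a, b, n) else "non-unique"


if __name__ == "__main__":
    f = lambda x: 1.0 if 0.0 <= x <= 1.0 else 0.0  # indicator of [0, 1]
    g = lambda x: 1.0 if 0.5 <= x <= 1.5 else 0.0  # indicator of [1/2, 3/2]
    print(overlap_mass(f, g, -1.0, 2.0))
    print(uniqueness_regime(f, g, 0.25, -1.0, 2.0), uniqueness_regime(f, g, 0.75, -1.0, 2.0))
```

For $f = \mathbf{1}_{[0,1]}$ and $g = \mathbf{1}_{[1/2,3/2]}$ the threshold is $1/2$, so $m = 1/4$ falls in the non-uniqueness regime and $m = 3/4$ in the uniqueness regime.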