Populations, Parameters, Statistics, and Sampling

Populations, Parameters, Statistics, and Sampling

Populations, Parameters, Statistics, and Sampling

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

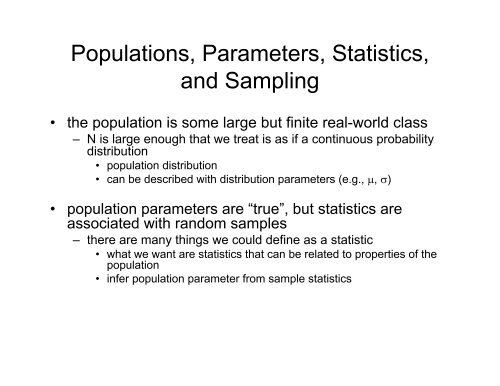

<strong>Populations</strong>, <strong>Parameters</strong>, <strong>Statistics</strong>,<br />

<strong>and</strong> <strong>Sampling</strong><br />

• the population is some large but finite real-world class

– N is large enough that we can treat the population as if it were a continuous probability distribution

• population distribution

• can be described with distribution parameters (e.g., μ, σ)

• population parameters are “true”, but statistics are associated with random samples

– there are many things we could define as a statistic

• what we want are statistics that can be related to properties of the population

• infer population parameters from sample statistics
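To make the parameter/statistic distinction concrete, here is a minimal Python sketch. It assumes a hypothetical finite population of 100,000 normally distributed scores (mean 100, SD 15, chosen only for illustration), computes the “true” parameters μ and σ over the whole population, and then computes the corresponding statistics from one random sample of 50. The function name `population_and_sample` is illustrative, not from the source.

```python
import random
import statistics

def population_and_sample(pop_size=100_000, sample_size=50, seed=0):
    """Return (mu, sigma) for a simulated finite population and
    (xbar, s) for one random sample drawn from it.

    Assumption: the population is hypothetical normal scores (mean 100, SD 15).
    """
    rng = random.Random(seed)
    population = [rng.gauss(100, 15) for _ in range(pop_size)]

    # Parameters: computed over the entire (finite) population.
    mu = statistics.fmean(population)
    sigma = statistics.pstdev(population)

    # Statistics: computed from one random sample (drawn without replacement).
    sample = rng.sample(population, sample_size)
    xbar = statistics.fmean(sample)
    s = statistics.stdev(sample)
    return (mu, sigma), (xbar, s)

(mu, sigma), (xbar, s) = population_and_sample()
print(f"parameters: mu={mu:.2f}, sigma={sigma:.2f}")
print(f"statistics: xbar={xbar:.2f}, s={s:.2f}")
```

Each new sample gives different statistics, while μ and σ stay fixed for a given population; that gap is what the sampling distributions below describe.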

Sampling Distributions

• When taking N observations (one sample of size N) from the population, we are interested in the distribution of a statistic across all possible samples of size N

– sampling distribution: for an assumed population parameter, the probability distribution of the statistic over all possible samples of size N

• e.g., sampling N times from a Bernoulli population with parameter P gives a binomial sampling distribution for the number of successes

• If N=1, the sampling distribution is the population distribution<br />

– sampling distribution of the mean<br />

• just like the population, sampling distributions are theoretical, and have “true” parameters

– the standard deviation of the sampling distribution of the mean is the standard error of the mean ($\sigma_M$)

• The statistics from a particular sample give a “point estimate” of the population parameters

– in general the estimate will differ from the parameter because of random sampling effects
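The Bernoulli example can be worked out exactly. This is a minimal Python sketch, assuming independent draws, that builds the sampling distribution of the sample proportion x/N from the binomial formula. It shows that with N = 1 the sampling distribution is just the population distribution, and that for larger N its mean stays at p while its variance shrinks to p(1 − p)/N. The helper names are hypothetical.

```python
from math import comb

def proportion_sampling_distribution(p, n):
    """Exact sampling distribution of the sample proportion x/n when n
    independent observations are drawn from a Bernoulli(p) population.

    The number of successes x is binomial(n, p), so
    P(x/n) = C(n, x) * p**x * (1 - p)**(n - x).
    """
    return {x / n: comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(n + 1)}

def mean_and_variance(dist):
    """Mean and variance of a discrete distribution given as {value: prob}."""
    mean = sum(v * pr for v, pr in dist.items())
    var = sum((v - mean) ** 2 * pr for v, pr in dist.items())
    return mean, var

# N = 1: the sampling distribution is just the population distribution.
print(proportion_sampling_distribution(0.3, 1))

# N = 10: the mean stays at p, the variance shrinks to p(1 - p) / N.
print(mean_and_variance(proportion_sampling_distribution(0.3, 10)))
```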

Maximum Likelihood (MLE)

• In calculating an estimate of the population parameter, set it so as to maximize the probability of the sample observations

– p(D | M) is the likelihood of the data (D) given a population model (M)

– an example:

• three hypotheses regarding the proportion of liberal arts majors (.4, .5, and .6)

• Sample 15 students and observe that 9 are liberal arts majors

• What’s the likelihood of each hypothesis?

– L(x=9; p=?, N=15)<br />

– A statistic is a maximum likelihood estimator (MLE) when, used as the value of the population parameter, it maximizes the likelihood of the observed sample
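The liberal-arts example can be computed directly. A minimal Python sketch, assuming the binomial model L(p) = C(N, x) p^x (1 − p)^(N − x) with x = 9 and N = 15. The grid search at the end is just one simple way (chosen here for illustration) to locate the maximum over all p; for the binomial that maximum is the sample proportion x/N.

```python
from math import comb

def binomial_likelihood(p, x=9, n=15):
    """L(x; p, N): probability of observing x successes in n draws
    when the population proportion is p."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# Likelihood of each of the three hypotheses.
hypotheses = [0.4, 0.5, 0.6]
likelihoods = {p: binomial_likelihood(p) for p in hypotheses}
for p, lik in likelihoods.items():
    print(f"p = {p}: L = {lik:.4f}")   # 0.0612, 0.1527, 0.2066

best = max(likelihoods, key=likelihoods.get)

# Over all p, a fine grid search lands on the MLE x/N = 9/15 = 0.6.
grid = [i / 1000 for i in range(1, 1000)]
mle = max(grid, key=binomial_likelihood)
print(best, mle)
```

Among the three hypotheses p = .6 is the most likely, and it also coincides with the unrestricted MLE 9/15.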

Other Properties

• Some statistics are designed with properties in mind other than maximum likelihood; in these examples, G is a sample statistic that estimates θ

– unbiased

• the expected value of G over all samples is θ (E[G] = θ)

– $\bar{x}$ is an unbiased estimate of μ

– for the binomial, the sample proportion P is an unbiased estimate of p

– however, $S^2$ is a biased estimate of $\sigma^2$

– consistency

• as N increases, G should approach θ

– relative efficiency

• the variance of the estimator compared with that of other estimators

– the mean is more efficient than the median for normal distributions

– sufficient

• G contains all the information in the sample that can be used to estimate θ

– often a set of sufficient statistics is required (e.g., mean and corrected variance)
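Unbiasedness and relative efficiency can both be checked by simulation. The sketch below is a rough illustration, assuming a standard normal population, samples of size N = 25 and 4,000 replications (all arbitrary choices): it estimates the sampling distributions of the mean and the median, then compares their variances.

```python
import random
import statistics

def estimator_variances(n=25, reps=4000, seed=0):
    """Simulate the sampling distributions of the mean and the median
    for samples of size n from a standard normal population.

    Returns (average of sample means, variance of means, variance of medians).
    """
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        means.append(statistics.fmean(sample))
        medians.append(statistics.median(sample))
    return statistics.fmean(means), statistics.variance(means), statistics.variance(medians)

avg_mean, var_mean, var_median = estimator_variances()
print(f"average of sample means: {avg_mean:.3f} (close to mu = 0)")
print(f"var(mean) = {var_mean:.4f}, theory sigma^2/N = {1 / 25:.4f}")
print(f"relative efficiency var(median)/var(mean) = {var_median / var_mean:.2f}")
```

For normal populations this variance ratio approaches π/2 ≈ 1.57 as N grows, which is the sense in which the mean is the more efficient estimator.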

Sampling Distribution of the Mean

• The mean is unbiased, consistent, and efficient, and combined with $\sigma^2$ it is sufficient in many circumstances

– the mean of the sampling distribution of means is the same as the population mean

• However, the sampling distribution of the mean is not identical to the population distribution

• for example, the variance of the means is $\sigma_M^2 = \sigma^2 / N$

– $\sigma_M$ is the standard error of the mean

– a standard score of the sample mean is therefore

$$Z_M = \frac{\bar{x} - \mu}{\sigma_M} = \frac{\bar{x} - \mu}{\sigma / \sqrt{N}}$$

– in order to compare the observed mean to the expected mean given some hypothesis, we need the standard error of the mean

– suppose $\bar{x}$ = 15, μ = 10, σ = 4, and N = 16

» what’s the probability of a deviation this large, or larger?
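The numerical question can be answered with the standard score formula. A minimal Python sketch, assuming the sampling distribution of the mean is normal and reading “this deviant, or greater” as a one-tailed (upper-tail) probability. The second function is an optional simulation check, under the same normal assumption, that the SD of many sample means is close to σ/√N. Function names are hypothetical.

```python
import math
import random
import statistics

def standard_error(sigma, n):
    """sigma_M = sigma / sqrt(N)."""
    return sigma / math.sqrt(n)

def z_of_mean(xbar, mu, sigma, n):
    """Standard score of a sample mean: (xbar - mu) / sigma_M."""
    return (xbar - mu) / standard_error(sigma, n)

def upper_tail(z):
    """P(Z >= z) for a standard normal Z, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2))

z = z_of_mean(xbar=15, mu=10, sigma=4, n=16)   # sigma_M = 4 / 4 = 1, so z = 5.0
p = upper_tail(z)
print(z, p)   # p is roughly 2.9e-7

def simulated_standard_error(mu, sigma, n, reps=5000, seed=1):
    """Empirical SD of many sample means; should be close to sigma / sqrt(n)."""
    rng = random.Random(seed)
    means = [statistics.fmean(rng.gauss(mu, sigma) for _ in range(n)) for _ in range(reps)]
    return statistics.stdev(means)

print(simulated_standard_error(10, 4, 16))   # close to 1.0
```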

Bias in the Sample Variance

• $E[S^2] \neq \sigma^2$

– proof on p. 216: $E[S^2] = \sigma^2 - \sigma_M^2$

• this is because the mean used to calculate $S^2$ will in general differ from the population mean

– the sample mean is always exactly centered on the particular sample: the sum of squared deviations about the sample mean is never larger than about any other point (including μ), so deviations from it underestimate the true deviations from μ

$$E[S^2] = \sigma^2 - \sigma_M^2 = \sigma^2 - \frac{\sigma^2}{N} = \left(\frac{N-1}{N}\right)\sigma^2$$

– on average, the uncorrected sample variance is too small by a factor of (N − 1)/N

• $S^2$ is the uncorrected variance and $s^2 = \frac{N}{N-1} S^2$ is the corrected variance

– the corrected standard deviation is still slightly biased (only the corrected variance is unbiased)

» the remaining bias is small, though, so it’s typical to use s to estimate σ

• estimate of the standard error of the mean ($\hat{\sigma}_M^2 = s^2/N$ is an unbiased estimate of $\sigma_M^2$)

$$\hat{\sigma}_M = \frac{\hat{\sigma}}{\sqrt{N}} = \frac{s}{\sqrt{N}} = \frac{S}{\sqrt{N-1}}$$
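The bias result and the two forms of the estimated standard error can be checked numerically. A minimal Python sketch, assuming a normal population with σ = 4 and samples of size N = 5 (arbitrary illustrative values): by the formula for $E[S^2]$, the uncorrected $S^2$ should average about (4/5)·16 = 12.8 and the corrected $s^2$ about 16. It uses the standard library's `statistics.pvariance` (divides by N) and `statistics.variance` (divides by N − 1); the small data set in the last line is made up for illustration.

```python
import math
import random
import statistics

def simulate_variance_bias(sigma=4.0, n=5, reps=20000, seed=2):
    """Average S^2 (divide by N) and s^2 (divide by N - 1) over many
    samples of size n from a normal population with SD sigma."""
    rng = random.Random(seed)
    big_s2, small_s2 = [], []
    for _ in range(reps):
        sample = [rng.gauss(0.0, sigma) for _ in range(n)]
        big_s2.append(statistics.pvariance(sample))   # uncorrected S^2
        small_s2.append(statistics.variance(sample))  # corrected s^2
    return statistics.fmean(big_s2), statistics.fmean(small_s2)

def standard_error_estimates(sample):
    """Two equivalent forms of the estimated standard error of the mean:
    s / sqrt(N) and S / sqrt(N - 1)."""
    n = len(sample)
    s = statistics.stdev(sample)   # corrected SD
    S = statistics.pstdev(sample)  # uncorrected SD
    return s / math.sqrt(n), S / math.sqrt(n - 1)

mean_S2, mean_s2 = simulate_variance_bias()
# Theory: E[S^2] = (N - 1) / N * sigma^2 = 12.8 and E[s^2] = sigma^2 = 16
print(round(mean_S2, 2), round(mean_s2, 2))
print(standard_error_estimates([2, 4, 4, 4, 5, 5, 7, 9]))
```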

Other Issues with Estimators

• Pooling two or more samples results in better estimation

– frequency-weighted average (pp. 219-220)

• For small populations, we can’t assume sampling with replacement, and so the estimates need to be slightly larger (p. 220)

• Because the sample mean typically differs from the population mean, it’s useful to define a confidence interval for the population mean

– using the Tchebycheff inequality, the interval $\bar{x} \pm k\sigma_M$ contains μ with probability at least $1 - 1/k^2$

• this is not the probability that $\bar{x}$ equals μ

– it is the probability that the interval constructed from a sample covers μ

• this is a very rough bound, and additional assumptions regarding the sampling distribution tighten it

– for a unimodal symmetric sampling distribution, k = 3 gives a 95% interval
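The effect of extra assumptions on the multiplier k can be compared side by side. A minimal Python sketch for a 95% interval: the Tchebycheff bound assumes only a finite variance; for the unimodal symmetric case we assume the relevant result is the Gauss inequality, P(|X − μ| ≥ kσ) ≤ 4/(9k²) for k ≥ 2/√3; the normal case uses `statistics.NormalDist`. The interval is applied to the earlier example ($\bar{x}$ = 15, $\sigma_M$ = 1) purely for illustration.

```python
import math
from statistics import NormalDist

def chebyshev_k(coverage):
    """Smallest k with 1 - 1/k^2 >= coverage (any distribution with finite variance)."""
    return 1 / math.sqrt(1 - coverage)

def gauss_k(coverage):
    """Smallest k with 1 - 4/(9 k^2) >= coverage. Assumption: the Gauss inequality
    (unimodal symmetric distribution) is the result behind the k = 3 rule."""
    return math.sqrt(4 / (9 * (1 - coverage)))

def normal_k(coverage):
    """Exact k when the sampling distribution of the mean is normal."""
    return NormalDist().inv_cdf(0.5 + coverage / 2)

def interval(xbar, sigma_m, k):
    """The interval xbar +/- k * sigma_M."""
    return xbar - k * sigma_m, xbar + k * sigma_m

rules = [("Tchebycheff", chebyshev_k), ("unimodal symmetric", gauss_k), ("normal", normal_k)]
for name, rule in rules:
    k = rule(0.95)
    print(f"{name:>20}: k = {k:.2f}, interval = {interval(15, 1, k)}")
# k is about 4.47, 2.98 and 1.96 respectively
```

With k = 3, the Tchebycheff bound guarantees only 1 − 1/9 ≈ 89% coverage, whereas the Gauss bound gives 1 − 4/81 ≈ 95.1%, consistent with the k = 3 rule for unimodal symmetric sampling distributions.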