Task Independent Speech Verification Using SB-MVE Trained ...


60<br />

60<br />

50<br />

50<br />

Correct rejection rate %<br />

40<br />

30<br />

20<br />

Correct rejection rate %<br />

40<br />

30<br />

20<br />

10<br />

10<br />

0<br />

0 5 10 15 20 25<br />

False rejection rate %<br />

0<br />

0 5 10 15 20 25<br />

False rejection rate %<br />

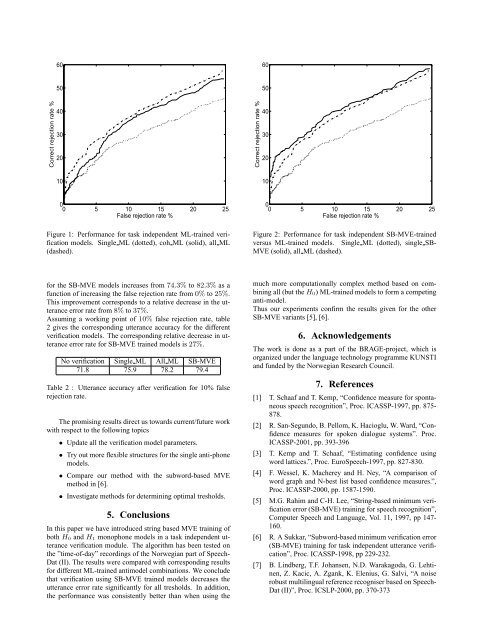

Figure 1: Performance for task independent ML-trained verification<br />

models. Single ML (dotted), coh ML (solid), all ML<br />

(dashed).<br />

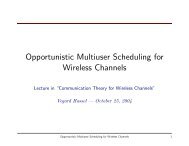

Figure 2: Performance for task independent <strong>SB</strong>-<strong>MVE</strong>-trained<br />

versus ML-trained models. Single ML (dotted), single <strong>SB</strong>-<br />

<strong>MVE</strong> (solid), all ML (dashed).<br />

for the SB-MVE models increases from 74.3% to 82.3% as the false rejection rate increases from 0% to 25%. This improvement corresponds to a relative decrease in the utterance error rate ranging from 8% to 37%.
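Each point on the curves in Figures 1 and 2, and the 10% working point used for Table 2, corresponds to one decision threshold on the verification score. The Python sketch here shows one way to pick a threshold for a target false rejection rate and read off the correct rejection rate. It assumes the usual definitions (a false rejection rejects a correctly recognized utterance, a correct rejection rejects a misrecognized one), that a higher score means more confidence (for example a log-likelihood ratio between $H_0$ and $H_1$ models), and that an utterance is rejected when its score falls below the threshold. The function names and the toy scores are hypothetical.

```python
from typing import Sequence, Tuple


def rejection_rates(correct_scores: Sequence[float],
                    incorrect_scores: Sequence[float],
                    threshold: float) -> Tuple[float, float]:
    """Return (false rejection %, correct rejection %) at one threshold.

    Assumption: an utterance is rejected when its verification score is
    strictly below `threshold`. `correct_scores` belong to correctly
    recognized utterances, `incorrect_scores` to misrecognized ones.
    """
    false_rej = sum(1 for s in correct_scores if s < threshold)
    correct_rej = sum(1 for s in incorrect_scores if s < threshold)
    return (100.0 * false_rej / len(correct_scores),
            100.0 * correct_rej / len(incorrect_scores))


def threshold_for_false_rejection(correct_scores: Sequence[float],
                                  target_pct: float) -> float:
    """Threshold at the empirical target_pct quantile of the correct-utterance
    scores, so that the false rejection rate does not exceed target_pct."""
    ranked = sorted(correct_scores)
    # At most k correctly recognized utterances may be rejected.
    k = min(int(len(ranked) * target_pct / 100.0), len(ranked) - 1)
    # Rejecting scores strictly below ranked[k] rejects at most k of them.
    return ranked[k]


# Hypothetical toy scores, not data from the paper.
correct = [2.1, 1.5, 0.3, 3.0, 2.7, 1.9, 0.8, 2.2, 1.1, 2.5]
incorrect = [-1.0, 0.5, -0.3, 1.2, -2.0]

# Sweep a few working points, as along the horizontal axis of the figures.
for target in (0, 10, 20):
    t = threshold_for_false_rejection(correct, target)
    fr, cr = rejection_rates(correct, incorrect, t)
    print(f"target FR {target}%: threshold={t}, FR={fr:.0f}%, CR={cr:.0f}%")
```

Sweeping `target` over 0% to 25% traces a correct-rejection versus false-rejection curve of the kind plotted in the figures; choosing the threshold from the correctly recognized utterances alone is what fixes the false rejection rate at the chosen working point.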

Assuming a working point of 10% false rejection rate, Table 2 gives the corresponding utterance accuracy for the different verification models. At this working point, the relative decrease in utterance error rate for the SB-MVE-trained models, compared with no verification, is 27%.

| No verification | Single ML | All ML | SB-MVE |
|---|---|---|---|
| 71.8 | 75.9 | 78.2 | 79.4 |

Table 2: Utterance accuracy (%) after verification for 10% false rejection rate.
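The 27% figure follows from the accuracies in Table 2 once the utterance error rate is taken as 100% minus the utterance accuracy. A small Python sketch of that arithmetic (the function name is ours, for illustration) also gives the corresponding relative reductions for the two ML-trained configurations; those two values are derived here from Table 2 rather than stated in the text.

```python
def relative_error_reduction(baseline_acc: float, new_acc: float) -> float:
    """Relative decrease (%) in utterance error rate, where error = 100 - accuracy."""
    base_err = 100.0 - baseline_acc
    new_err = 100.0 - new_acc
    return 100.0 * (base_err - new_err) / base_err


# Utterance accuracies (%) from Table 2, 10% false rejection rate.
NO_VERIFICATION = 71.8
table2 = {"Single ML": 75.9, "All ML": 78.2, "SB-MVE": 79.4}

for model, acc in table2.items():
    print(f"{model}: {relative_error_reduction(NO_VERIFICATION, acc):.1f}%")
# Single ML: 14.5%
# All ML: 22.7%
# SB-MVE: 27.0%
```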

The promising results direct us towards current and future work on the following topics:

• Update all the verification model parameters.

• Try out more flexible structures for the single anti-phone models.

• Compare our method with the subword-based MVE method in [6].

• Investigate methods for determining optimal thresholds.

5. Conclusions

In this paper we have introduced string-based MVE (SB-MVE) training of both $H_0$ and $H_1$ monophone models in a task independent utterance verification module. The algorithm has been tested on the “time-of-day” recordings of the Norwegian part of SpeechDat (II). The results were compared with corresponding results for different combinations of ML-trained anti-models. We conclude that verification using SB-MVE-trained models decreases the utterance error rate significantly for all tested thresholds, with relative reductions from 8% to 37% over false rejection rates from 0% to 25%. In addition, the performance was consistently better than that of the much more computationally complex method that combines all ML-trained models except the $H_0$ model into a competing anti-model.

Thus our experiments confirm the results reported for the other SB-MVE variants [5], [6].
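The difference in computational cost between a single anti-model and a combined anti-model can be illustrated with the log-likelihood ratio score commonly used for utterance verification. In this minimal Python sketch, the single anti-model score needs the likelihood of one anti-model, while the combined score needs the likelihoods of every competing model. The combination rule (log of the mean likelihood over the competitors) is an assumption chosen for illustration, not necessarily the rule used in this paper, and all names and numbers are hypothetical.

```python
import math
from typing import Sequence


def log_mean_exp(values: Sequence[float]) -> float:
    """Numerically stable log of the mean of exp(values)."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


def llr_single(loglik_h0: float, loglik_anti: float) -> float:
    """Verification score against one dedicated anti-model (one extra likelihood)."""
    return loglik_h0 - loglik_anti


def llr_combined(loglik_h0: float, loglik_competitors: Sequence[float]) -> float:
    """Verification score against an anti-model formed from all competing models.

    Assumption: the competing likelihoods are averaged in the linear domain,
    which requires evaluating every competing model for each utterance.
    """
    return loglik_h0 - log_mean_exp(loglik_competitors)


# Hypothetical per-utterance log-likelihoods.
print(llr_single(-100.0, -104.0))
print(llr_combined(-100.0, [-104.0, -108.0, -115.0]))
```

Working in the log domain with the max-shift in `log_mean_exp` avoids underflow, since utterance-level log-likelihoods are typically large negative numbers.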

6. Acknowledgements

This work was carried out as part of the BRAGE project, which is organized under the language technology programme KUNSTI and funded by the Norwegian Research Council.

7. References

[1] T. Schaaf and T. Kemp, “Confidence measure for spontaneous speech recognition”, Proc. ICASSP-1997, pp. 875-878.

[2] R. San-Segundo, B. Pellom, K. Hacioglu and W. Ward, “Confidence measures for spoken dialogue systems”, Proc. ICASSP-2001, pp. 393-396.

[3] T. Kemp and T. Schaaf, “Estimating confidence using word lattices”, Proc. EuroSpeech-1997, pp. 827-830.

[4] F. Wessel, K. Macherey and H. Ney, “A comparison of word graph and N-best list based confidence measures”, Proc. ICASSP-2000, pp. 1587-1590.

[5] M. G. Rahim and C.-H. Lee, “String-based minimum verification error (SB-MVE) training for speech recognition”, Computer Speech and Language, Vol. 11, 1997, pp. 147-160.

[6] R. A. Sukkar, “Subword-based minimum verification error (SB-MVE) training for task independent utterance verification”, Proc. ICASSP-1998, pp. 229-232.

[7] B. Lindberg, T. F. Johansen, N. D. Warakagoda, G. Lehtinen, Z. Kacic, A. Zgank, K. Elenius and G. Salvi, “A noise robust multilingual reference recogniser based on SpeechDat (II)”, Proc. ICSLP-2000, pp. 370-373.