DEAP: A Database for Emotion Analysis using Physiological Signals

DEAP: A Database for Emotion Analysis using Physiological Signals

DEAP: A Database for Emotion Analysis using Physiological Signals

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

IEEE TRANS. AFFECTIVE COMPUTING 3<br />

<strong>for</strong> determining an affect curve, shown to be useful <strong>for</strong><br />

extracting video highlights in a movie or sports video.<br />

Wang and Cheong [30] used audio and video features<br />

to classify basic emotions elicited by movie scenes. Audio<br />

was classified into music, speech and environment<br />

signals and these were treated separately to shape an<br />

aural affective feature vector. The aural affective vector<br />

of each scene was fused with video-based features such<br />

as key lighting and visual excitement to <strong>for</strong>m a scene<br />

feature vector. Finally, <strong>using</strong> the scene feature vectors,<br />

movie scenes were classified and labeled with emotions.<br />

Soleymani et. al proposed a scene affective characterization<br />

<strong>using</strong> a Bayesian framework [31]. Arousal and<br />

valence of each shot were first determined <strong>using</strong> linear<br />

regression. Then, arousal and valence values in addition<br />

to content features of each scene were used to classify<br />

every scene into three classes, namely calm, excited positive<br />

and excited negative. The Bayesian framework was<br />

able to incorporate the movie genre and the predicted<br />

emotion from the last scene or temporal in<strong>for</strong>mation to<br />

improve the classification accuracy.<br />

There are also various studies on music affective characterization<br />

from acoustic features [32]–[34]. Rhythm,<br />

tempo, Mel-frequency cepstral coefficients (MFCC),<br />

pitch, zero crossing rate are amongst common features<br />

which have been used to characterize affect in music.<br />

A pilot study <strong>for</strong> the current work was presented in<br />

[35]. In that study, 6 participants’ EEG and physiological<br />

signals were recorded as each watched 20 music videos.<br />

The participants rated arousal and valence levels and<br />

the EEG and physiological signals <strong>for</strong> each video were<br />

classified into low/high arousal/valence classes.<br />

In the current work, music video clips are used as the<br />

visual stimuli to elicit different emotions. To this end,<br />

a relatively large set of music video clips was gathered<br />

<strong>using</strong> a novel stimuli selection method. A subjective test<br />

was then per<strong>for</strong>med to select the most appropriate test<br />

material. For each video, a one-minute highlight was<br />

selected automatically. 32 participants took part in the<br />

experiment and their EEG and peripheral physiological<br />

signals were recorded as they watched the 40 selected<br />

music videos. Participants rated each video in terms of<br />

arousal, valence,like/dislike, dominance and familiarity.<br />

For 22 participants, frontal face video was also recorded.<br />

This paper aims at introducing this publicly available 2<br />

database. The database contains all recorded signal data,<br />

frontal face video <strong>for</strong> a subset of the participants and<br />

subjective ratings from the participants. Also included<br />

is the subjective ratings from the initial online subjective<br />

annotation and the list of 120 videos used. Due to<br />

licensing issues, we are not able to include the actual<br />

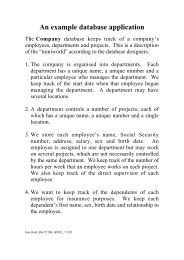

videos, but YouTube links are included. Table 1 gives an<br />

overview of the database contents.<br />

To the best of our knowledge, this database has the<br />

highest number of participants in publicly available<br />

databases <strong>for</strong> analysis of spontaneous emotions from<br />

2. http://www.eecs.qmul.ac.uk/mmv/datasets/deap/<br />

Number of videos 120<br />

TABLE 1<br />

<strong>Database</strong> content summary<br />

Online subjective annotation<br />

Video duration 1 minute affective highlight (section 2.2)<br />

Selection method<br />

No. of ratings per video 14 - 16<br />

Rating scales<br />

60 via last.fm affective tags,<br />

60 manually selected<br />

Arousal<br />

Valence<br />

Dominance<br />

Rating values Discrete scale of 1 - 9<br />

Number of participants 32<br />

Number of videos 40<br />

<strong>Physiological</strong> Experiment<br />

Selection method Subset of online annotated videos with<br />

clearest responses (see section 2.3)<br />

Rating scales<br />

Rating values<br />

Recorded signals<br />

Arousal<br />

Valence<br />

Dominance<br />

Liking (how much do you like the video?)<br />

Familiarity (how well do you know the video?)<br />

Familiarity: discrete scale of 1 - 5<br />

Others: continuous scale of 1 - 9<br />

32-channel 512Hz EEG<br />

Peripheral physiological signals<br />

Face video (<strong>for</strong> 22 participants)<br />

physiological signals. In addition, it is the only database<br />

that uses music videos as emotional stimuli.<br />

We present an extensive statistical analysis of the<br />

participant’s ratings and of the correlates between the<br />

EEG signals and the ratings. Preliminary single trial<br />

classification results of EEG, peripheral physiological<br />

signals and MCA are presented and compared. Finally,<br />

a fusion algorithm is utilized to combine the results of<br />

each modality and arrive at a more robust decision.<br />

The layout of the paper is as follows. In Section 2<br />

the stimuli selection procedure is described in detail.<br />

The experiment setup is covered in Section 3. Section<br />

4 provides a statistical analysis of the ratings given by<br />

participants during the experiment and a validation of<br />

our stimuli selection method. In Section 5, correlates between<br />

the EEG frequencies and the participants’ ratings<br />

are presented. The method and results of single-trial<br />

classification are given in Section 6. The conclusion of<br />

this work follows in Section 7.<br />

2 STIMULI SELECTION<br />

The stimuli used in the experiment were selected in<br />

several steps. First, we selected 120 initial stimuli, half<br />

of which were chosen semi-automatically and the rest<br />

manually. Then, a one-minute highlight part was determined<br />

<strong>for</strong> each stimulus. Finally, through a web-based<br />

subjective assessment experiment, 40 final stimuli were<br />

selected. Each of these steps is explained below.