Localized Supervised Metric Learning on ... - Researcher - IBM

Localized Supervised Metric Learning on ... - Researcher - IBM

Localized Supervised Metric Learning on ... - Researcher - IBM

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

epresented by a N-dimensi<strong>on</strong>al feature vector x. Examples<br />

of features are the mean and variance of the sensor<br />

measures, or Wavelet coefficients. The prior belief<br />

of physicians is captured as labels <strong>on</strong> some of the patients.<br />

With this formulati<strong>on</strong>, our goal is to learn a generalized<br />

Mahalanobis distance between patient x i and<br />

patient x j defined as:<br />

√<br />

d m (x i , x j ) = (x i − x j ) T P(x i − x j ) (1)<br />

where P ∈ R N×N is called the precisi<strong>on</strong> matrix. Matrix<br />

P is positive semi-definite and is used to incorporate<br />

the correlati<strong>on</strong>s between different feature dimensi<strong>on</strong>s.<br />

The key is to learn the optimal P such that the<br />

resulting distance metric has the following properties:<br />

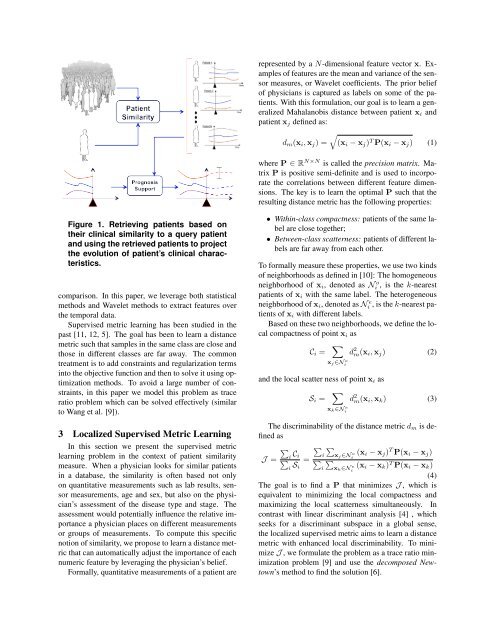

Figure 1. Retrieving patients based <strong>on</strong><br />

their clinical similarity to a query patient<br />

and using the retrieved patients to project<br />

the evoluti<strong>on</strong> of patient’s clinical characteristics.<br />

comparis<strong>on</strong>. In this paper, we leverage both statistical<br />

methods and Wavelet methods to extract features over<br />

the temporal data.<br />

<str<strong>on</strong>g>Supervised</str<strong>on</strong>g> metric learning has been studied in the<br />

past [11, 12, 5]. The goal has been to learn a distance<br />

metric such that samples in the same class are close and<br />

those in different classes are far away. The comm<strong>on</strong><br />

treatment is to add c<strong>on</strong>straints and regularizati<strong>on</strong> terms<br />

into the objective functi<strong>on</strong> and then to solve it using optimizati<strong>on</strong><br />

methods. To avoid a large number of c<strong>on</strong>straints,<br />

in this paper we model this problem as trace<br />

ratio problem which can be solved effectively (similar<br />

to Wang et al. [9]).<br />

3 <str<strong>on</strong>g>Localized</str<strong>on</strong>g> <str<strong>on</strong>g>Supervised</str<strong>on</strong>g> <str<strong>on</strong>g>Metric</str<strong>on</strong>g> <str<strong>on</strong>g>Learning</str<strong>on</strong>g><br />

In this secti<strong>on</strong> we present the supervised metric<br />

learning problem in the c<strong>on</strong>text of patient similarity<br />

measure. When a physician looks for similar patients<br />

in a database, the similarity is often based not <strong>on</strong>ly<br />

<strong>on</strong> quantitative measurements such as lab results, sensor<br />

measurements, age and sex, but also <strong>on</strong> the physician’s<br />

assessment of the disease type and stage. The<br />

assessment would potentially influence the relative importance<br />

a physician places <strong>on</strong> different measurements<br />

or groups of measurements. To compute this specific<br />

noti<strong>on</strong> of similarity, we propose to learn a distance metric<br />

that can automatically adjust the importance of each<br />

numeric feature by leveraging the physician’s belief.<br />

Formally, quantitative measurements of a patient are<br />

• Within-class compactness: patients of the same label<br />

are close together;<br />

• Between-class scatterness: patients of different labels<br />

are far away from each other.<br />

To formally measure these properties, we use two kinds<br />

of neighborhoods as defined in [10]: The homogeneous<br />

neighborhood of x i , denoted as Ni o , is the k-nearest<br />

patients of x i with the same label. The heterogeneous<br />

neighborhood of x i , denoted as Ni e , is the k-nearest patients<br />

of x i with different labels.<br />

Based <strong>on</strong> these two neighborhoods, we define the local<br />

compactness of point x i as<br />

C i =<br />

∑<br />

d 2 m(x i , x j ) (2)<br />

x j∈N o i<br />

and the local scatter ness of point x i as<br />

S i =<br />

∑<br />

x k ∈N e i<br />

d 2 m(x i , x k ) (3)<br />

The discriminability of the distance metric d m is defined<br />

as<br />

∑<br />

J = ∑ i C ∑ ∑<br />

i i x j∈N<br />

(x<br />

i<br />

i S =<br />

o i − x j ) T P(x i − x j )<br />

∑ ∑<br />

i i x k ∈N<br />

(x<br />

i<br />

e i − x k ) T P(x i − x k )<br />

(4)<br />

The goal is to find a P that minimizes J , which is<br />

equivalent to minimizing the local compactness and<br />

maximizing the local scatterness simultaneously. In<br />

c<strong>on</strong>trast with linear discriminant analysis [4] , which<br />

seeks for a discriminant subspace in a global sense,<br />

the localized supervised metric aims to learn a distance<br />

metric with enhanced local discriminability. To minimize<br />

J , we formulate the problem as a trace ratio minimizati<strong>on</strong><br />

problem [9] and use the decomposed Newtown’s<br />

method to find the soluti<strong>on</strong> [6].