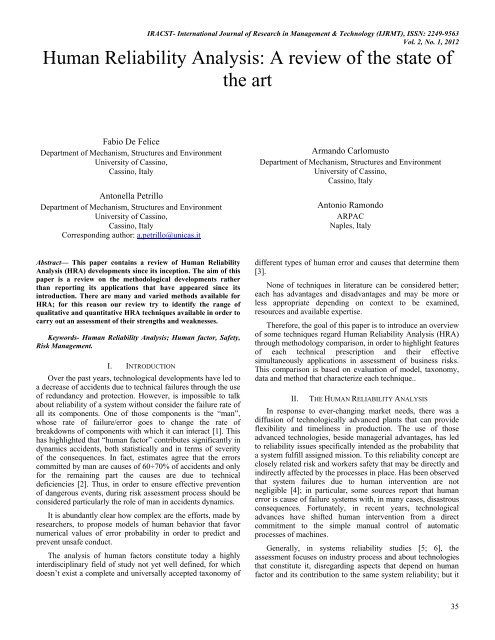

Human Reliability Analysis: A review of the state of the art

IRACST- International Journal of Research in Management & Technology (IJRMT), ISSN: 2249-9563

Vol. 2, No. 1, 2012

Human Reliability Analysis: A review of the state of the art

Fabio De Felice
Department of Mechanism, Structures and Environment
University of Cassino,
Cassino, Italy

Antonella Petrillo
Department of Mechanism, Structures and Environment
University of Cassino,
Cassino, Italy
Corresponding author: a.petrillo@unicas.it

Armando Carlomusto
Department of Mechanism, Structures and Environment
University of Cassino,
Cassino, Italy

Antonio Ramondo
ARPAC
Naples, Italy

Abstract: This paper reviews the developments in Human Reliability Analysis (HRA) since its inception. It focuses on methodological developments rather than on the applications that have appeared since its introduction. Many and varied methods are available for HRA; for this reason, our review tries to identify the range of qualitative and quantitative HRA techniques available in order to assess their strengths and weaknesses.

Keywords: Human Reliability Analysis; Human factor; Safety; Risk Management.

I. INTRODUCTION

Over the past years, technological developments have reduced the number of accidents due to technical failures through the use of redundancy and protection. However, it is impossible to talk about the reliability of a system without considering the failure rate of all its components. One of those components is the human operator, whose failure or error rate changes the breakdown rate of the components with which the operator can interact [1]. This has highlighted that the “human factor” contributes significantly to accident dynamics, both statistically and in terms of the severity of the consequences. In fact, estimates agree that human errors cause 60-70% of accidents, and that only the remaining part is due to technical deficiencies [2]. Thus, to ensure effective prevention of dangerous events, the risk assessment process should pay particular attention to the human role in accident dynamics. Researchers have made considerable and complex efforts to propose models of human behavior that yield numerical values of error probability in order to predict and prevent unsafe conduct.

The analysis of human factors today constitutes a highly interdisciplinary field of study that is not yet well defined, and for which no complete and universally accepted taxonomy of the different types of human error and of their causes exists [3].

None of the techniques in the literature can be considered the best; each has advantages and disadvantages and may be more or less appropriate depending on the context to be examined, the resources and the available expertise.

Therefore, the goal of this paper is to give an overview of some Human Reliability Analysis (HRA) techniques through a comparison of methodologies, in order to highlight the features of each technique and their effective combined application in the assessment of business risks. This comparison is based on an evaluation of the model, taxonomy, data and method that characterize each technique.

II. THE HUMAN RELIABILITY ANALYSIS

In response to ever-changing market needs, technologically advanced plants that provide flexibility and timeliness in production have become widespread. Besides managerial advantages, the use of these advanced technologies has raised reliability issues, where reliability is understood as the probability that a system fulfills its assigned mission. Closely related to this concept of reliability are risk and worker safety, which may be directly or indirectly affected by the processes in place. It has been observed that system failures due to human intervention are not negligible [4]; in particular, some sources report that human error is a cause of system failure with, in many cases, disastrous consequences. Fortunately, in recent years, technological advances have shifted human intervention from direct involvement to the simple manual control of automatic machine processes.

Generally, in system reliability studies [5; 6], the assessment focuses on the industrial process and on the technologies that constitute it, disregarding the aspects that depend on the human factor and its contribution to the reliability of the same system. It should be noted, however, that human error is a major contributor to the risks and reliability of many systems: over 90% in the nuclear industry [7], over 80% in the chemical and petrochemical industries [8], over 75% of marine casualties [9], and over 70% of aviation accidents [10], [11]. For this reason, starting from high-risk industrial areas such as nuclear, aerospace and petrochemical, down to individual SMEs, the need arose to combine common risk analysis techniques with human factor evaluation methodologies, collected under the name of Human Reliability Analysis (HRA).

Human Reliability Analysis identifies errors and weaknesses in a system by examining the methods of work, including those who work in the system. HRA falls within the field of human factors and has been defined as the application of relevant information about human characteristics and behavior to the design of objects, facilities and environments that people use [12]. HRA techniques may be used retrospectively, in incident analysis, or more likely prospectively, to examine a system. Most approaches are firmly grounded in a systemic approach, which sees the human contribution within the wider technical and organizational context [13]. The purpose is to examine a task, process, system or organizational structure for where weaknesses may lie or create a vulnerability to errors, not to find fault or apportion blame. Any system in which human error can arise can be analyzed with HRA, which in practice means almost any process in which humans are involved [14].

III. HRA METHODOLOGIES

Over the years, several methodologies for Human Reliability Analysis have been developed. This development has led researchers to analyze the available information carefully in order to understand which approach to HRA could be the best. This information refers to costs, ease of application, ease of analysis, availability of data, reliability, and face validity [15].

The methodologies developed can be divided into two macro-categories: first and second generation methods.

First generation methods include 35-40 methods for human reliability, many of which are variations on a single method. The theoretical bases shared by most first-generation methods are: the error classification method according to the “omission-commission” concept; the definition of “performance shaping factors” (PSFs); and the cognitive model: skill-based, rule-based, knowledge-based.

The most accredited theory for defining and classifying a wrong action is the error classification method according to the “omission-commission” concept [16]. This concept has the following meanings: Omission: an action that is not done, is done late, or is done in advance; Commission: the performance by the operator of an action that is not required by the process.

Starting from these theories, first generation models have been developed; the most representative techniques are: OAT (Operator Action Tree); THERP (Technique for Human Error Rate Prediction); TESEO (Empirical technique to estimate operator’s error); HCR (Human Cognitive Reliability model).

Second generation methods, a term coined by Dougherty [17], try to overcome the limitations of traditional methods. In particular, they:

- provide guidance on the possible and probable decision paths followed by the operator, using models of mental processes provided by cognitive psychology;

- extend the description of errors beyond the usual binary classification (omission-commission), recognizing the importance of so-called "cognitive errors";

- consider the dynamic aspects of human-machine interaction and can be used as a basis for developing simulators of operator performance.

In order to estimate and analyze cognitive reliability, a suitable model of human information processing is required. The most popular cognitive models are based on the following theories:

- S.O.R. paradigm (Stimulus-Organism-Response): argues that the response is a function of the stimulus and the organism; thus a stimulus acts on the organism, which in turn generates a response;

- Man as a mechanism of information processing: according to this view, mental processes are strictly specified procedures and mental states are defined by causal relations with sensory inputs and other mental states. It is a recent theory that sees man as an information processing system (IPS);

- Cognitive viewpoint: in this theory, cognition [18] is seen as active rather than reactive; in addition, cognitive activity is defined in a cyclical rather than a sequential mode.

Starting from these theories, second generation cognitive models have been developed; the most representative techniques are: ATHEANA (A Technique for Human Error ANAlysis); CREAM (Cognitive Reliability and Error Analysis Method).

A. First generation methodologies

These tools were the first to be developed to help risk evaluators predict and quantify the likelihood of human error. First generation approaches tend to be atomistic in nature; they encourage the evaluator to break a task into component parts and then consider the potential impact of modifying factors such as time pressure, equipment design and stress. First generation methods focus on the skill-based and rule-based levels of human action and are often criticised for failing to consider such things as the impact of context, organisational factors and errors of commission. Despite these criticisms, they are useful and many are in regular use for quantitative risk assessments.

OAT (Operator Action Tree)

The Operator Action Tree (OAT) was developed by John Wreathall in the early 1980s. The OAT approach to HRA is based on the premise that the response to an event can be described as consisting of three stages [19]: observing or noting the event; diagnosing or thinking about it; responding to it.

The method is based on a logical tree, which identifies the possible ways in which a worker can fail after an accident has occurred. It consists of the following five steps:

- Identify the relevant plant safety functions from system event trees;

- Identify the event-specific actions required to achieve the plant safety functions;

- Identify the displays that present relevant alarm indications and the time available to operators to take appropriate mitigating actions;

- Represent the errors in fault trees;

- Estimate the probability of errors. Once the thinking interval has been established, the nominal error probability is calculated from the time-reliability relationship.

Thus, the estimate of the failure probability is closely related to the nominal time interval required to make a decision when an anomaly is detected. This interval can be written formally:

$$T = t_1 - t_2 - t_3 \qquad (1)$$

where:

$T$ represents the time required to make a decision;

$t_1$ represents the time interval between the beginning of the incident and the end of the related actions;

$t_2$ represents the time between the start of the incident and the mental planning of the intervention;

$t_3$ represents the time required to implement what is planned ($t_2$).
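As a minimal sketch of equation (1), the following Python function computes the thinking interval from the three times defined above. The function name, the choice of minutes as the unit and the numerical values are illustrative assumptions, not part of the OAT method description.

```python
def thinking_interval(t1: float, t2: float, t3: float) -> float:
    """Return T = t1 - t2 - t3, following equation (1).

    t1: time from the beginning of the incident to the end of the related actions
    t2: time from the start of the incident to the mental planning of the intervention
    t3: time required to implement what is planned
    All arguments must use the same unit (minutes here, by assumption).
    """
    if min(t1, t2, t3) < 0:
        raise ValueError("time intervals must be non-negative")
    return t1 - t2 - t3


# Hypothetical values in minutes, chosen only for illustration.
T = thinking_interval(t1=30.0, t2=5.0, t3=10.0)
print(f"thinking interval T = {T:.1f} min")
```

In OAT this interval would then be looked up on a time-reliability relationship to obtain the nominal error probability; that curve is not reproduced here because the text does not give its parameters.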

OAT identifies three types of purely cognitive errors: failure to perceive that an accident has occurred; failure to diagnose the nature of the incident and to identify the actions required to remedy it; error in the timing of the implementation of the correct behavior.

The OAT methodology considers the probability of failure in diagnosing an event, i.e., the classical case of response or non-response. Because of this, OAT cannot be said to provide an adequate treatment of erroneous human actions. It differs, however, from the majority of first generation HRA methodologies by maintaining a distinction among three phases of response: observation, diagnosis and response. This amounts to a simple process model and acknowledges that the response is based on a development that includes various activities by the operator.

THERP (Technique for Human Error Rate Prediction)

Work on the Technique for Human Error Rate Prediction (THERP) began as early as 1961, but the main work was done during the 1970s [20], resulting in the so-called THERP handbook [21]. It is a first generation methodology, which means that its procedures follow the way conventional reliability analysis models a machine.

The aim of this methodology is to calculate the probability of successful performance of the activities necessary to accomplish a task. THERP involves performing a task analysis to provide a description of the performance characteristics of the human tasks being analysed. The results are represented graphically in an HRA event tree, which is a formal representation of the required sequence of actions.

THERP relies on a large human reliability database containing HEPs (Human Error Probabilities), which is based upon both plant data and expert judgments. The technique was the first HRA approach to come into broad use and is still widely used in a range of applications, even beyond its original nuclear setting.

The methodology consists of the following six steps:

- Define the system failures of interest. These failures include functions of the system in which human error has a greater likelihood of influencing the probability of a fault, and those which are of interest to the risk assessor;

- Identify, list and analyze the related human operations performed and their relationship to the system tasks and functions of interest. This stage of the process requires a comprehensive task and human error analysis. The task analysis lists and sequences the discrete elements and information required by task operators. For each step of the task, the errors that may occur are considered by the analyst and precisely defined. An event tree visually displays all events which occur within a system. The event tree thus shows a number of different paths, each of which has an associated end state or consequence;

- Estimate the relevant human error probabilities;

- Estimate the effects of human error on the system failure events. With the completion of the HRA, the human contribution to failure can then be assessed in comparison with the results of the overall reliability analysis;

- Recommend changes to the system and recalculate the system failure probabilities. Once the human factor contribution is known, sensitivity analysis can be used to identify how certain risks may be improved by reducing HEPs. Error recovery paths may be incorporated into the event tree, as this will aid the assessor when considering the possible approaches by which the identified errors can be reduced;

- Review the consequences of the proposed changes with respect to availability, reliability and cost-benefit.
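The quantification in the third and fourth steps can be illustrated with a minimal Python sketch of a single success path through an HRA event tree. It assumes that task steps fail independently and that a recovery path simply scales the HEP of its step, which is a simplification (THERP itself also treats dependence between steps). The function names and HEP values are hypothetical and are not taken from the THERP handbook.

```python
from math import prod


def check_probability(p: float) -> float:
    """Return p unchanged if it is a valid probability, otherwise raise."""
    if not 0.0 <= p <= 1.0:
        raise ValueError(f"not a probability: {p}")
    return p


def hep_with_recovery(hep: float, p_recovery: float) -> float:
    """HEP that remains when an error is caught and corrected with probability p_recovery."""
    return check_probability(hep) * (1.0 - check_probability(p_recovery))


def task_success_probability(heps: list[float]) -> float:
    """Probability that every step succeeds, assuming the steps fail independently."""
    return prod(1.0 - check_probability(p) for p in heps)


# Hypothetical HEPs for three task steps (illustrative values only).
step_heps = [0.01, 0.003, 0.05]
# Assumption: the third step has a recovery path that catches 80% of errors.
step_heps_recovered = step_heps[:2] + [hep_with_recovery(step_heps[2], 0.8)]

print(f"P(success), no recovery:   {task_success_probability(step_heps):.4f}")
print(f"P(success), with recovery: {task_success_probability(step_heps_recovered):.4f}")
```

Comparing the two results is a crude form of the sensitivity analysis mentioned in the fifth step: it shows how much the task success probability changes when one HEP is reduced.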

THERP is probably the best known of the first-generation HRA methods. This methodology is more complete than the others because it describes both how events should be modelled and how they should be quantified. The dominance of the HRA event tree, however, means that the classification scheme and the model necessarily remain limited, since the event tree can only account for binary choices (success-failure). A final feature of THERP is the use of performance shaping factors to complement the task analysis. The use of this technique to account for non-specific influences is found in most first-generation HRA methods [22]. The separate use of performance shaping factors is relevant for an evaluation of the operator model, since it suggests that the model by itself is context independent.

TESEO (Empirical technique to estimate operator’s error)

The empirical technique to estimate operator’s error (TESEO) was developed in 1980 [23]. The methodology is relatively straightforward and easy to use, but it is also limited; it is useful for quick overview HRA assessments as opposed to those which are highly detailed and in-depth. Within the field of HRA, it is widely acknowledged that the technique lacks a theoretical foundation.

This technique is used in HRA to evaluate the probability of a human error occurring during the completion of a specific task. From such analyses, measures can then be taken to reduce the likelihood of errors occurring within a system and therefore to improve the overall level of safety. The method determines the probability of operator error ($P_e$) as the product of five factors, each characterizing an aspect of the system (man, plant, environment, etc.).

$$P_e = K_1 K_2 K_3 K_4 K_5 \qquad (2)$$

where:

$K_1$: type of task to be executed;

$K_2$: time available to the operator to complete the task;

$K_3$: operator’s level of experience/characteristics;

$K_4$: operator’s state of mind;

$K_5$: prevailing environmental and ergonomic conditions.

When putting this technique into practice, the designated HRA evaluator must thoroughly consider the task requiring assessment and therefore also the category of each factor $K_n$ that applies in the context. Once this has been decided, the tables are consulted to find the corresponding value for each of the identified factors, which allows the HEP to be calculated.
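A minimal Python sketch of equation (2) is given below. The factor values are hypothetical placeholders rather than entries from the TESEO tables, and capping the result at 1 is an assumption made here so that the output remains a valid probability.

```python
from math import prod


def teseo_error_probability(k1: float, k2: float, k3: float,
                            k4: float, k5: float) -> float:
    """Return P_e = K1 * K2 * K3 * K4 * K5, capped at 1.0 (cap is an assumption)."""
    factors = (k1, k2, k3, k4, k5)
    if any(k <= 0 for k in factors):
        raise ValueError("all K factors must be positive")
    return min(1.0, prod(factors))


# Hypothetical factor values, for illustration only (not taken from the TESEO tables).
p_e = teseo_error_probability(k1=0.01, k2=0.5, k3=1.0, k4=2.0, k5=1.0)
print(f"P_e = {p_e:.4f}")
```

Because the model is a plain product, changing a single factor and recomputing gives an immediate estimate of the effect of that change on the error probability.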

The TESEO technique is typically quick and straightforward in comparison to other HRA tools, not only in producing a final result but also in sensitivity analysis; e.g., it is useful in identifying the effects that improvements in human factors will have on the overall human reliability of a task. It is widely applicable to various control room designs or to procedures with varying characteristics [24].

On the other hand, it has yet to be shown that these five factors suffice for an accurate assessment of human performance; as no other factors are considered, relying solely on these five factors to describe the full range of error-producing conditions is not highly realistic. Furthermore, the values of $K_1$ to $K_5$ are unsubstantiated, and the suggested multiplicative relationship has no sufficient theoretical or empirical justification.

HCR (Human Cognitive Reliability model)

HCR is a cognitive modeling approach to HRA developed in 1984 [25]. The method uses Rasmussen’s idea of rule-based, skill-based and knowledge-based decision making to determine the likelihood of failing a given task [26], and also considers the PSFs of operator experience, stress and interface quality. The database underpinning this methodology was originally developed through nuclear power plant simulations, in response to the need for a method by which nuclear operating reliability could be quantified. The basis of the HCR approach is a normalized time-reliability curve, whose shape is determined by the dominant cognitive process associated with the task being performed.

The HCR method can be described as having the following six steps:

- Identify the actions that must be analyzed by the HRA, using a task analysis method;

- Classify the types of cognitive processing required by the actions;

- Determine the median response time ($T_{1/2}$) of a crew to perform the required tasks;

- Adjust the median response time ($T^{*}_{1/2}$) to account for performance influencing factors. This is done by means of the PSF coefficients $K_1$ (operator experience), $K_2$ (stress level) and $K_3$ (quality of operator/plant interface) given in the literature, using the following formula:

$$T_{1/2} = T^{*}_{1/2}\,(1+K_1)(1+K_2)(1+K_3) \qquad (3)$$

- Determine, for each action, the system time window ($T_{SW}$) in which the action must be taken;

- Divide $T_{SW}$ by $T_{1/2}$ to obtain a normalised time value. The probability of non-response is found using a set of time-reliability curves.
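The arithmetic of equation (3) and of the final step can be sketched in Python as follows. The function names, the time unit (seconds) and all numerical values are illustrative assumptions; the time-reliability curves themselves are not reproduced, since their parameters are not given in the text.

```python
def adjusted_median_time(nominal_median: float, k1: float, k2: float, k3: float) -> float:
    """Apply equation (3): T_1/2 = T*_1/2 * (1 + K1) * (1 + K2) * (1 + K3)."""
    if nominal_median <= 0:
        raise ValueError("median response time must be positive")
    multipliers = (1.0 + k1, 1.0 + k2, 1.0 + k3)
    if any(m <= 0 for m in multipliers):
        raise ValueError("each (1 + K) term must be positive")
    return nominal_median * multipliers[0] * multipliers[1] * multipliers[2]


def normalised_time(time_window: float, median_time: float) -> float:
    """Return T_SW / T_1/2, the value looked up on a time-reliability curve."""
    if median_time <= 0:
        raise ValueError("median response time must be positive")
    return time_window / median_time


# Hypothetical inputs in seconds; the K coefficients are placeholders,
# not values from the HCR literature.
t_half = adjusted_median_time(nominal_median=60.0, k1=0.0, k2=0.25, k3=0.0)
t_norm = normalised_time(time_window=150.0, median_time=t_half)
print(f"adjusted median time = {t_half:.1f} s, normalised time = {t_norm:.2f}")
```

A normalised time well above 1 means the time window is long compared with the median crew response time, which corresponds to a lower probability of non-response on the curves.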

HCR is probably the first-generation HRA approach that most explicitly refers to a cognitive model. Since it was developed, the treatment of erroneous human actions in HCR has remained on the same level as in many other first-generation methods, i.e., a basic distinction between success and failure and, in this case, also no-response. Some advantages of this method are: the approach explicitly models the time-dependent nature of HRA; it is a fairly quick technique to carry out and is relatively easy to use; the three modes of decision making, knowledge-based, skill-based and rule-based, are all modeled. In contrast, some disadvantages are: the rules for judging knowledge-based, skill-based and rule-based behavior are not exhaustive, and assigning the wrong behavior to a task can mean differences of up to two orders of magnitude in the HEP; the method is very sensitive to changes in the estimate of the median time, so this estimate must be very accurate, otherwise the estimate of the HEP will suffer as a consequence; as the HCR correlation was originally developed for use within the nuclear industry, it is not immediately applicable to situations outside this domain.

B. Second generation methodologies

The development of ‘second generation’ tools began in the 1990s and is ongoing. The benefits of second generation over first generation approaches have yet to be established, and the tools have yet to be empirically validated. Kirwan reports that the most notable tools of the second generation are ATHEANA and CREAM. The literature shows that second generation methods are generally considered to be still under development, but that in their current form they can provide useful insight into human reliability issues.

in detail that one could be sure that different teams would produce the same results; the quantification method is [29].

ATHEANA (A Technique for Human Error ANAlysis)

ATHEANA is both a retrospective and prospective HRA methodology developed by the US Nuclear Regulatory Commission in 2000 [27]. It was developed in the hope that certain types of human behavior in nuclear plants, and in industries which use similar processes, could be represented in a way in which they could be more easily understood.

It seeks to provide a robust psychological framework to evaluate and identify PSFs, including organizational and environmental factors, which have driven incidents involving human factors, primarily with the intention of suggesting process improvements. Essentially, it is a method of representing complex accident reports within a standardized structure, which may be easier to understand and communicate.

The basic steps <strong>of</strong> <strong>the</strong> ATHEANA methodology are [28]:<br />

Define and interpret under consideration issue;<br />

Detail required scope <strong>of</strong> analysis;<br />

Describe <strong>the</strong> base case scenario for a given initiating<br />

event, including norm <strong>of</strong> operations within environment,<br />

considering actions and procedures;<br />

Define <strong>Human</strong> Failure Events (HFEs) and/or unsafe<br />

actions (UAs) which may affect task in question;<br />

Identify potential vulnerabilities in operators’<br />

knowledge base;<br />

Search for deviations from base case scenario for<br />

which UAs are likely;<br />

Identify and evaluate complicating factors and links to<br />

PSFs;<br />

Evaluate recovery potential;<br />

Quantify HFE probability;<br />

Incorporate results into <strong>the</strong> PRA.<br />

The probability <strong>of</strong> a HFE in ATHEANA, given a p<strong>art</strong>icular<br />

initiator, is determined by summing over different error forcing<br />

conditions associated to HEF, taking account <strong>of</strong> likelihood <strong>of</strong><br />

unsafe actions given <strong>the</strong> EFC, and likelihood <strong>of</strong> no recovery<br />

action given <strong>the</strong> EFC and <strong>the</strong> UA.<br />

The most significant advantage <strong>of</strong> ATHEANA is that it<br />

provides a much richer and more holistic understanding <strong>of</strong> <strong>the</strong><br />

context concerning <strong>the</strong> <strong>Human</strong> Factors known to be <strong>the</strong> cause<br />

<strong>of</strong> <strong>the</strong> incident, as compared with most first generation<br />

methods. Compared to many o<strong>the</strong>r HRA quantification<br />

methods, ATHEANA allows for <strong>the</strong> consideration <strong>of</strong> a much<br />

wider range <strong>of</strong> performance shaping factors and also does not<br />

require that <strong>the</strong>se be treated as independent. This is important<br />

as <strong>the</strong> method seeks to identify any interactions which affect<br />

<strong>the</strong> weighting <strong>of</strong> <strong>the</strong> factors <strong>of</strong> <strong>the</strong>ir influence on a situation. In<br />

contrast some criticisms are: <strong>the</strong> method is cumbersome and<br />

requires a large team; <strong>the</strong> method is not described in sufficient<br />
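The quantification rule described earlier in this subsection can be sketched as a sum over EFCs. The example below is only an illustration under stated assumptions: weighting each term by the probability of the EFC itself follows the usual statement of the ATHEANA formula rather than anything spelled out in this section, and the class name, field names and all numerical values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ErrorForcingContext:
    name: str
    p_context: float        # P(EFC | initiator); assumed weighting term
    p_unsafe_action: float  # P(UA | EFC)
    p_no_recovery: float    # P(no recovery | EFC, UA)


def hfe_probability(contexts):
    """Sum the contribution of every EFC to the HFE probability."""
    return sum(
        c.p_context * c.p_unsafe_action * c.p_no_recovery for c in contexts
    )


# Hypothetical EFCs for a single HFE and initiator.
contexts = [
    ErrorForcingContext("misleading indication", 0.05, 0.5, 0.2),
    ErrorForcingContext("high workload", 0.10, 0.1, 0.5),
]

print(f"P(HFE | initiator) = {hfe_probability(contexts):.4f}")
```

Keeping the three factors separate for each EFC makes it easy to see which context dominates the result and where a recovery measure would have the largest effect.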

CREAM (Cognitive Reliability and Error Analysis Method)

The CREAM methodology was developed by Erik Hollnagel in 1998 following an analysis of the methods for HRA already in place. It is the most widely utilized second generation HRA technique and is based on three primary areas of work: task analysis, opportunities for reducing errors, and the possibility of considering human performance with regard to the overall safety of a system.

This methodology is used in HRA to evaluate the probability of a human error occurring during the completion of a specific task. From such analyses, measures can then be taken to reduce the likelihood of errors occurring within a system and therefore to improve the overall level of safety. HRA techniques have been used in a range of industries including healthcare, engineering, nuclear, transportation and business; each technique has varying uses within different disciplines.

Compared to many other methods, CREAM takes a very different approach to modeling human reliability. There are two versions of the technique, the basic and the extended version, which have two primary features in common: the ability to identify the importance of human performance in a given context, and a helpful cognitive model and associated framework, usable for both prospective and retrospective analysis. Prospective analysis allows likely human errors to be identified, while retrospective analysis quantifies errors that have already occurred.

The concept of cognition is included in the model through the use of four basic ‘control modes’, which identify differing levels of control that an operator has in a given context, together with the characteristics which highlight the occurrence of distinct conditions. The control modes which may occur are as follows:

Scrambled control: the choice of the forthcoming action is unpredictable or haphazard;

Opportunistic control: the next action is determined by superficial characteristics of the situation, possibly through habit or similarity matching. The situation is characterized by a lack of planning, possibly due to a lack of available time;

Tactical control: performance typically follows planned procedures, although some ad-hoc deviations are still possible;

Strategic control: plentiful time is available to consider the actions to be taken in light of the wider objectives to be fulfilled and within the given context.

The particular control mode determines the level of reliability that can be expected in a particular setting, and this is in turn determined by the collective characteristics of the relevant Common Performance Conditions (CPCs).

The basic steps of the CREAM methodology are:

1. Task analysis. The basic method adopted by the CREAM technique provides an immediate reliability interval based on an assessment of the given control mode, as highlighted by the figures provided in the table below (Tab.1). As can be seen from the table, each of the specified control modes has an individual reliability level. In the extended CREAM version, control modes play the role of a weighting factor which scales a nominal failure probability associated with a given cognitive function failure. This version of CREAM is intended to be used for a more in-depth analysis of human interactions.

TABLE I. RELIABILITY INTERVAL

| Control mode | Reliability interval |
|---|---|
| Strategic | 0.5 E-5 < p < 1.0 E-2 |
| Tactical | 1.0 E-3 < p < 1.0 E-1 |
| Opportunistic | 1.0 E-2 < p < 0.5 E-0 |
| Scrambled | 1.0 E-1 < p < 1.0 E-0 |
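To make the use of Table I concrete, the following minimal sketch encodes the intervals as a lookup and, for the extended method, scales a nominal failure probability by a weighting factor. The interval bounds are transcribed from Table I; the nominal probability and the weighting factor in the usage lines are made-up numbers chosen for illustration, not values taken from CREAM, and the function names are hypothetical.

```python
# Failure-probability intervals per control mode, transcribed from Table I.
RELIABILITY_INTERVALS = {
    "strategic": (0.5e-5, 1.0e-2),
    "tactical": (1.0e-3, 1.0e-1),
    "opportunistic": (1.0e-2, 0.5e0),
    "scrambled": (1.0e-1, 1.0e0),
}


def reliability_interval(control_mode):
    """Return the (lower, upper) bounds for the given control mode."""
    return RELIABILITY_INTERVALS[control_mode.lower()]


def scale_nominal_probability(nominal_probability, weighting_factor):
    """Scale a nominal failure probability by a weight, capped at 1.0."""
    return min(1.0, nominal_probability * weighting_factor)


low, high = reliability_interval("tactical")
print(f"tactical: {low:g} < p < {high:g}")

# Illustrative numbers only: nominal value 3.0e-3, weighting factor 2.0.
adjusted = scale_nominal_probability(3.0e-3, 2.0)
print(f"adjusted probability: {adjusted:g}")
```

The basic method stops at the interval lookup, which is why it suits screening; the scaling step stands in for the more detailed treatment of individual cognitive function failures in the extended method.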

2. Context description. The basic CREAM method is intended as a screening technique for identifying processes which require a deeper level of analysis; this analysis may then be carried out with the extended CREAM method.

3. Specification of initiating events. When using the basic CREAM method, a task analysis is conducted prior to further assessment. The CPCs are assessed according to the descriptors given in the table below (Tab.2), in order to judge their expected effect on performance.

TABLE II. COMMON PERFORMANCE CONDITIONS (CPCS)

| CPC | Descriptors | Expected effect |
|---|---|---|
| Adequacy of organisation | Very efficient | Improved |
| | Efficient | Not significant |
| | Inefficient | Reduced |
| | Deficient | Reduced |
| Working conditions | Advantageous | Improved |
| | Compatible | Not significant |
| | Incompatible | Reduced |
| Adequacy of MMI and operational support | Supportive | Improved |
| | Adequate | Not significant |
| | Tolerable | Not significant |
| | Inappropriate | Reduced |
| Availability of procedures/plans | Appropriate | Improved |
| | Acceptable | Not significant |
| | Inappropriate | Reduced |
| Number of simultaneous goals | Fewer than capacity | Not significant |
| | Matching current capacity | Not significant |
| | More than capacity | Reduced |
| Available time | Adequate | Improved |
| | Temporarily inadequate | Not significant |
| | Continuously inadequate | Reduced |
| Time of day | Day-time (adjusted) | Not significant |
| | Night-time (unadjusted) | Reduced |
| Adequacy of training and expertise | Adequate, high experience | Improved |
| | Adequate, limited experience | Not significant |
| | Inadequate | Reduced |
| Crew collaboration quality | Very efficient | Improved |
| | Efficient | Not significant |
| | Inefficient | Not significant |
| | Deficient | Reduced |

4. Error prediction. The assessments of the CPCs then need to be adjusted according to specified rules in order to take account of synergistic effects. The matrix above is considered in the context of the situation under assessment, and by this means the previously considered initiating events are reviewed with respect to how they could potentially lead to the occurrence of an error. The rows of the matrix identify the possible outcomes while the columns show the precursors. The analyst then has the task of identifying the columns for which all the rows have been classified into the same group according to the column headings. The possible outcomes for each of the rows should be predicted until there are no remaining possible paths. Each identified error needs to be noted along with its causes and outcomes.

5. Determination of control mode. Finally, a simple count is made of the number of CPCs that improve reliability and of those that reduce it. On the basis of these numbers the probable control mode is determined.
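The counting in step 5 can be sketched as follows. The descriptor-to-effect pairs are transcribed from the CPC table above for three of the nine CPCs only; the example assessment is invented, and the final mapping from the two counts to a control mode is deliberately left out because its rules are not given in this section. All names are illustrative.

```python
from collections import Counter

# Expected effect of each descriptor (subset of the CPC table).
CPC_EFFECTS = {
    "Working conditions": {
        "Advantageous": "Improved",
        "Compatible": "Not significant",
        "Incompatible": "Reduced",
    },
    "Available time": {
        "Adequate": "Improved",
        "Temporarily inadequate": "Not significant",
        "Continuously inadequate": "Reduced",
    },
    "Time of day": {
        "Day-time (adjusted)": "Not significant",
        "Night-time (unadjusted)": "Reduced",
    },
}


def count_effects(assessment):
    """Return (CPCs improving reliability, CPCs reducing reliability)."""
    effects = Counter(
        CPC_EFFECTS[cpc][descriptor] for cpc, descriptor in assessment.items()
    )
    return effects["Improved"], effects["Reduced"]


# Hypothetical assessment of a single task.
assessment = {
    "Working conditions": "Advantageous",
    "Available time": "Continuously inadequate",
    "Time of day": "Day-time (adjusted)",
}

improved, reduced = count_effects(assessment)
print(f"improved: {improved}, reduced: {reduced}")
```

The pair of counts is the input from which the analyst reads off the probable control mode, and hence the reliability interval.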

The main advantages of this methodology are: the technique uses the same principles for retrospective and predictive analyses; the approach is very concise, well-structured and follows a well laid out system of procedure; the technique allows for the direct quantification of HEP; and it allows the evaluator to tailor the use of the technique to the contextual situation [30]. In contrast, the main criticisms are: the technique requires a high level of resource use, including a very lengthy time for application; CREAM requires initial expertise in the field of human factors in order to be used successfully, and may therefore appear rather complex to an inexperienced user; and CREAM does not put forth potential means by which identified errors can be reduced.

REFERENCES

[1] F. De Felice, A. Petrillo (2011) Methodological Approach for Performing Human Reliability and Error Analysis in Railway Transportation System. International Journal of Engineering and Technology. 3(5) 341-353.

[2] F. De Felice, A. Petrillo (2010) A Decision support tool based on ANP and FMEA to determine cause failures. Proceedings of the International Conference on Modelling & Applied Simulation, Fez (Morocco), 13-15 October.

[3] F. De Felice, A. Petrillo (2009) Development of a model for the improvement of safety in the work place through the Analytic Network Process. Proceedings of the International Symposium on the Analytic Hierarchy Process (ISAHP), Pittsburgh (PA - USA), 28 July.

[4] B. Kirwan (1994) A Guide to Practical Human Reliability Assessment. Taylor and Francis (CRC Press), London.

[5] R.E. Barlow and F. Proschan (1975) Statistical Theory of Reliability and Life Testing. Holt, Rinehart and Winston, New York.

[6] A. Høyland and M. Rausand (1994) System Reliability Theory. John Wiley and Sons, New York.

[7] J. Reason (1990) The contribution of latent human failures to the breakdown of complex systems. Philosophical Transactions of the Royal Society of London B. 327(1241) 475-484.

[8] S.G. Kariuki and K. Lowe (2007) Integrating human factors into process analysis. Reliability Engineering and System Safety. 92 1764-1773.

[9] J. Ren, I. Jenkinson, J. Wang, D.L. Xu, and J.B. Yang (2008) A methodology to model causal relationships in offshore safety assessment focusing on human and organisational factors. Journal of Safety Research. 39 87-100.

[10] R.L. Helmreich (2000) On error management: lessons from aviation. British Medical Journal. 320(7237) 781-785.

[11] E. Hollnagel (1993) Human Reliability Analysis: Context and Control. Academic Press, London.

[12] E. Grandjean (1980) Fitting the Task to the Man. Taylor and Francis, London.

[13] D. Embrey (2000) Data Collection Systems. Human Reliability Associates, Wigan.


[14] M. Lyons, S. Adams, M. Woloshynowych, C. Vincent (2004) Human Reliability Analysis in healthcare: A review of techniques. International Journal of Risk & Safety in Medicine.

[15] E. Hollnagel (1998) Cognitive Reliability and Error Analysis Method - CREAM. Elsevier Science, Oxford.

[16] E. Hollnagel (2000) Looking for errors of omission and commission or The Hunting of the Snark revisited. Reliability Engineering and System Safety. 68 135-145.

[17] E. Dougherty (1990) Human reliability analysis - where shouldst thou turn? Reliability Engineering and System Safety. 29(3) 283-299.

[18] R.G. Lord, P.E. Levy (1994) Moving from cognition to action - a control theory perspective. Applied Psychology - An International Review (Psychologie appliquée - Revue Internationale).

[19] J. Wreathall (1982) Operator action trees. An approach to quantifying operator error probability during accident sequences, NUS-4159. NUS Corporation, San Diego (California).

[20] A.D. Swain (1989) Comparative evaluation of methods for human reliability analysis (GRS-71). Gesellschaft für Reaktorsicherheit, Garching, FRG.

[21] A.D. Swain and H.E. Guttmann (1983) Handbook of human reliability analysis with emphasis on nuclear power plant applications, NUREG/CR-1278. NRC, Washington, DC.

[22] B. Kirwan (1996) The validation of three human reliability quantification techniques - THERP, HEART, JHEDI: Part I - technique descriptions and validation issues. Applied Ergonomics. 27(6) 359-373.

[23] G.C. Bello and V. Colombari (1980) The human factors in risk analyses of process plants: the control room operator model, TESEO. Reliability Engineering. 1 3-14.

[24] P.C. Humphreys (1995) Human Reliability Assessor’s Guide. Human Factors in Reliability Group, SRD Association.

[25] G.W. Hannaman, A.J. Spurgin, and Y.D. Lukic (1984) Human cognitive reliability model for PRA analysis. Draft Report NUS-4531, EPRI Project RP2170-3. Electric Power Research Institute, Palo Alto, CA.

[26] J. Rasmussen (1983) Skills, rules, knowledge; signals, signs and symbols and other distinctions in human performance models. IEEE Transactions on Systems, Man and Cybernetics. SMC-13(3).

[27] M. Barriere, D. Bley, S. Cooper, J. Forester, A. Kolaczkowski, W. Luckas, G. Parry, A. Ramey-Smith, C. Thompson, D. Whitehead, and J. Wreathall (2000) NUREG-1624: Technical basis and implementation guidelines for a technique for human event analysis (ATHEANA). US Nuclear Regulatory Commission.

[28] J. Forester, D. Bley, S. Cooper, E. Lois, N. Siu, A. Kolaczkowski, and J. Wreathall (2004) Expert elicitation approach for performing ATHEANA quantification. Reliability Engineering and System Safety. 83(2) 207-220.

[29] J. Forester, A. Ramey-Smith, D. Bley, A. Kolaczkowski, S. Cooper, and J. Wreathall (1998) SAND--98-1928C: Discussion of comments from a peer review of a technique for human event analysis (ATHEANA). Sandia National Laboratories.

[30] P. Salmon, N.A. Stanton and G. Walker (2003) Human Factors Design Methods Review. Defence Technology Centre.
