Evolutionary Computation : A Unified Approach

Evolutionary Computation : A Unified Approach

Evolutionary Computation : A Unified Approach

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

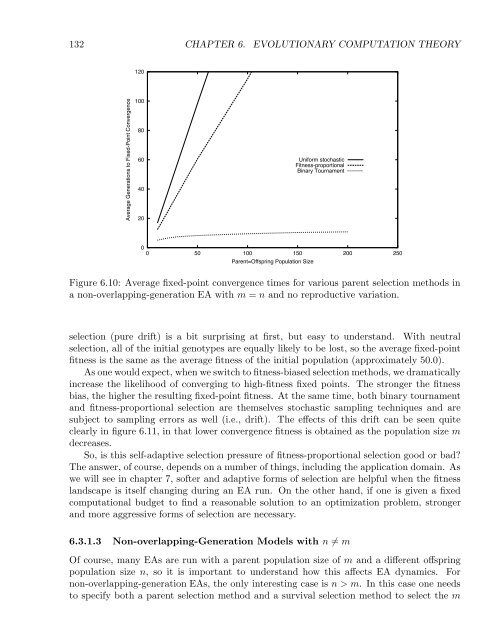

132 CHAPTER 6. EVOLUTIONARY COMPUTATION THEORY

[Figure 6.10 plot: average generations to fixed-point convergence (y-axis, 0 to 120) versus parent = offspring population size (x-axis, 0 to 250), with curves for uniform stochastic, fitness-proportional, and binary tournament selection.]

Figure 6.10: Average fixed-point convergence times for various parent selection methods in a non-overlapping-generation EA with m = n and no reproductive variation.
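The kind of experiment summarized in figure 6.10 can be approximated with a small simulation. This is a minimal sketch under stated assumptions, not necessarily the setup used for the figure: the fitness landscape is hypothetical (each initial genotype gets a fitness drawn uniformly from [0, 100]), "uniform stochastic" selection is assumed to mean sampling parents uniformly with replacement, and a fixed point is detected when every individual is a copy of the same initial genotype. All function names are illustrative.

```python
import random
from statistics import mean

# Each selection method draws n parents (with replacement) from pop.
# pop holds ancestor IDs; fits holds the matching fitness values.

def uniform_stochastic(pop, fits, n, rng):
    # Neutral selection: fitness is ignored, every individual is equally likely.
    return rng.choices(pop, k=n)

def fitness_proportional(pop, fits, n, rng):
    # Roulette-wheel selection: P(pick i) is proportional to fits[i].
    return rng.choices(pop, weights=fits, k=n)

def binary_tournament(pop, fits, n, rng):
    # Draw two individuals with replacement; the fitter one becomes a parent.
    parents = []
    for _ in range(n):
        i, j = rng.randrange(len(pop)), rng.randrange(len(pop))
        parents.append(pop[i] if fits[i] >= fits[j] else pop[j])
    return parents

SELECTORS = {
    "uniform stochastic": uniform_stochastic,
    "fitness-proportional": fitness_proportional,
    "binary tournament": binary_tournament,
}

def generations_to_fixed_point(select, m, rng, max_gens=100_000):
    # Hypothetical landscape: each initial genotype's fitness is drawn from U[0, 100].
    base_fitness = [rng.uniform(0.0, 100.0) for _ in range(m)]
    pop = list(range(m))  # individual k starts as its own ancestor ID
    gens = 0
    # No reproductive variation, so the population is at a fixed point
    # once every individual is a copy of the same initial genotype.
    while len(set(pop)) > 1 and gens < max_gens:
        fits = [base_fitness[g] for g in pop]
        pop = select(pop, fits, m, rng)  # n = m offspring replace all m parents
        gens += 1
    return gens

def average_convergence_time(select, m, runs=50, seed=0):
    rng = random.Random(seed)
    return mean(generations_to_fixed_point(select, m, rng) for _ in range(runs))

if __name__ == "__main__":
    for m in (10, 50, 100):
        times = {name: round(average_convergence_time(fn, m), 1)
                 for name, fn in SELECTORS.items()}
        print(m, times)
```

Under these assumptions, binary tournament reaches a fixed point far sooner than neutral selection, because selection pressure is added on top of the sampling error that alone drives the uniform stochastic case.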

selection (pure drift) is a bit surprising at first, but easy to understand. With neutral selection, all of the initial genotypes are equally likely to be lost (and hence equally likely to be the one that survives), so the average fixed-point fitness is the same as the average fitness of the initial population (approximately 50.0).
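A short martingale argument makes the neutral-selection result precise. It assumes m = n, no reproductive variation, that "uniform stochastic" selection means each of the m offspring independently picks a parent uniformly at random with replacement, and that the m initial individuals are distinct with fitnesses f_1, ..., f_m; the symbols X_t^{(i)} and f^* are introduced here for illustration.

```latex
% X_t^{(i)}: number of copies of initial individual i in generation t, with X_0^{(i)} = 1.
X_{t+1}^{(i)} \mid X_t^{(i)} \sim \mathrm{Binomial}\!\left(m, \tfrac{X_t^{(i)}}{m}\right)
\;\Longrightarrow\;
\mathbb{E}\!\left[X_{t+1}^{(i)} \mid X_t^{(i)}\right] = X_t^{(i)}

% X_t^{(i)} is a bounded martingale, absorbed at 0 or m in finite time with
% probability 1, so by optional stopping:
\Pr\!\left[\text{individual } i \text{ takes over}\right] = \frac{X_0^{(i)}}{m} = \frac{1}{m}

% Hence the expected fixed-point fitness f^* equals the initial mean fitness:
\mathbb{E}\!\left[f^{*}\right] = \sum_{i=1}^{m} \frac{1}{m}\, f_i = \bar{f}_0
```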

As one would expect, when we switch to fitness-biased selection methods, we dramatically increase the likelihood of converging to high-fitness fixed points. The stronger the fitness bias, the higher the resulting fixed-point fitness. At the same time, both binary tournament and fitness-proportional selection are themselves stochastic sampling techniques and are subject to sampling errors as well (i.e., drift). The effects of this drift can be seen quite clearly in figure 6.11: the fixed-point fitness obtained drops as the population size m decreases.
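The drift effect on fitness-biased selection can be illustrated by recording which genotype takes over. This is a minimal sketch, assuming a hypothetical landscape where initial fitnesses are drawn uniformly from [0, 100] (so the initial mean is about 50, in line with the neutral baseline above), m = n, no reproductive variation, and parents sampled with replacement; the function names are illustrative, not taken from the text.

```python
import random
from statistics import mean

def fitness_proportional(pop, fits, n, rng):
    # Roulette-wheel sampling with replacement: P(pick i) is proportional to fits[i].
    return rng.choices(pop, weights=fits, k=n)

def binary_tournament(pop, fits, n, rng):
    # Two contestants drawn with replacement; the fitter one becomes a parent.
    parents = []
    for _ in range(n):
        i, j = rng.randrange(len(pop)), rng.randrange(len(pop))
        parents.append(pop[i] if fits[i] >= fits[j] else pop[j])
    return parents

def fixed_point_fitness(select, m, rng):
    # Hypothetical landscape: initial fitnesses drawn from U[0, 100].
    base_fitness = [rng.uniform(0.0, 100.0) for _ in range(m)]
    pop = list(range(m))  # ancestor IDs; offspring copy a parent exactly
    while len(set(pop)) > 1:
        pop = select(pop, [base_fitness[g] for g in pop], m, rng)  # n = m
    return base_fitness[pop[0]]  # fitness of the genotype that took over

def average_fixed_point_fitness(select, m, runs=100, seed=0):
    rng = random.Random(seed)
    return mean(fixed_point_fitness(select, m, rng) for _ in range(runs))

if __name__ == "__main__":
    for m in (5, 20, 100):
        print(m,
              round(average_fixed_point_fitness(fitness_proportional, m), 1),
              round(average_fixed_point_fitness(binary_tournament, m), 1))
```

With small m, sampling error more often eliminates the best initial genotypes before selection can amplify them, so the average fixed-point fitness falls as m shrinks.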

So, is this self-adaptive selection pressure of fitness-proportional selection good or bad? The answer, of course, depends on a number of things, including the application domain. As we will see in chapter 7, softer, adaptive forms of selection are helpful when the fitness landscape itself changes during an EA run. On the other hand, if one is given a fixed computational budget to find a reasonable solution to an optimization problem, stronger and more aggressive forms of selection are necessary.

6.3.1.3 Non-overlapping-Generation Models with n ≠ m

Of course, many EAs are run with a parent population size of m and a different offspring population size n, so it is important to understand how this affects EA dynamics. For non-overlapping-generation EAs, the only interesting case is n > m. In this case one needs to specify both a parent selection method and a survival selection method to select the m