CPE432: Computer Design Fall 2010 Homework 1 Solution ...

CPE432: Computer Design Fall 2010 Homework 1 Solution ...

- No tags were found...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

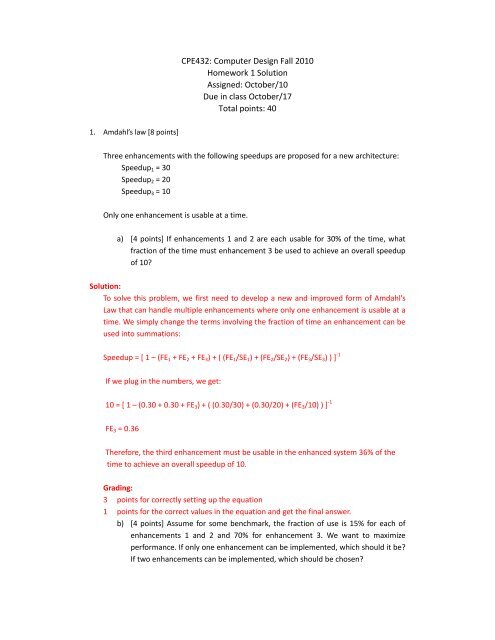

<strong>CPE432</strong>: <strong>Computer</strong> <strong>Design</strong> <strong>Fall</strong> <strong>2010</strong><br />

<strong>Homework</strong> 1 <strong>Solution</strong><br />

Assigned: October/10<br />

Due in class October/17<br />

Total points: 40<br />

1. Amdahl’s law [8 points]<br />

Three enhancements with the following speedups are proposed for a new architecture:<br />

Speedup 1 = 30<br />

Speedup 2 = 20<br />

Speedup 3 = 10<br />

Only one enhancement is usable at a time.<br />

a) [4 points] If enhancements 1 and 2 are each usable for 30% of the time, what<br />

fraction of the time must enhancement 3 be used to achieve an overall speedup<br />

of 10?<br />

<strong>Solution</strong>:<br />

To solve this problem, we first need to develop a new and improved form of Amdahl’s<br />

Law that can handle multiple enhancements where only one enhancement is usable at a<br />

time. We simply change the terms involving the fraction of time an enhancement can be<br />

used into summations:<br />

$\text{Speedup} = \left[ 1 - (FE_1 + FE_2 + FE_3) + \left( \frac{FE_1}{SE_1} + \frac{FE_2}{SE_2} + \frac{FE_3}{SE_3} \right) \right]^{-1}$<br />

If we plug in the numbers, we get:<br />

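$10 = \left[ 1 - (0.30 + 0.30 + FE_3) + \left( \frac{0.30}{30} + \frac{0.30}{20} + \frac{FE_3}{10} \right) \right]^{-1}$<br />

Taking the reciprocal of both sides and collecting terms gives $0.1 = 0.425 - 0.9 \, FE_3$, so:<br />

$FE_3 = 0.325 / 0.9 \approx 0.36$<br />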

Therefore, the third enhancement must be usable in the enhanced system 36% of the<br />

time to achieve an overall speedup of 10.<br />
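The algebra above can be checked numerically. The following is a minimal sketch, not part of the original solution; the function names `overall_speedup` and `required_fe3` are illustrative choices.

```python
def overall_speedup(fractions, speedups):
    """Amdahl's law when only one enhancement is usable at a time."""
    unenhanced = 1.0 - sum(fractions)
    enhanced = sum(f / s for f, s in zip(fractions, speedups))
    return 1.0 / (unenhanced + enhanced)


def required_fe3(target, fe1, fe2, speedups):
    """Solve the speedup equation for FE3 (it is linear in FE3)."""
    s1, s2, s3 = speedups
    # 1/target = (1 - fe1 - fe2 + fe1/s1 + fe2/s2) - fe3 * (1 - 1/s3)
    known = 1.0 - fe1 - fe2 + fe1 / s1 + fe2 / s2
    return (known - 1.0 / target) / (1.0 - 1.0 / s3)


fe3 = required_fe3(10, 0.30, 0.30, (30, 20, 10))
print(f"FE3 = {fe3:.4f}")
print(f"speedup check = {overall_speedup((0.30, 0.30, fe3), (30, 20, 10)):.2f}")
```

Plugging the computed fraction back into `overall_speedup` confirms that it reproduces the target speedup of 10.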

Grading:<br />

3 points for correctly setting up the equation<br />

1 point for plugging in the correct values and obtaining the final answer.<br />

b) [4 points] Assume for some benchmark, the fraction of use is 15% for each of<br />

enhancements 1 and 2 and 70% for enhancement 3. We want to maximize<br />

performance. If only one enhancement can be implemented, which should it be?<br />

If two enhancements can be implemented, which should be chosen?

<strong>Solution</strong>:<br />

Here we will again use Amdahl’s law to compute speedups.<br />

Speedup for one enhancement only: $\left[ 1 - FE_1 + \frac{FE_1}{SE_1} \right]^{-1}$<br />

Speedup for two enhancements: $\left[ 1 - (FE_1 + FE_2) + \left( \frac{FE_1}{SE_1} + \frac{FE_2}{SE_2} \right) \right]^{-1}$<br />

If we plug in the numbers, we get:<br />

$\text{Speedup}_1 = (1 - 0.15 + 0.15/30)^{-1} = 1.170$<br />

$\text{Speedup}_2 = (1 - 0.15 + 0.15/20)^{-1} = 1.166$<br />

$\text{Speedup}_3 = (1 - 0.70 + 0.70/10)^{-1} = 2.703$<br />

Therefore, if we are allowed to select a single enhancement, we would choose $E_3$.<br />

$\text{Speedup}_{12} = [(1 - 0.15 - 0.15) + (0.15/30 + 0.15/20)]^{-1} = 1.4035$<br />

$\text{Speedup}_{13} = [(1 - 0.15 - 0.70) + (0.15/30 + 0.70/10)]^{-1} = 4.4444$<br />

$\text{Speedup}_{23} = [(1 - 0.15 - 0.70) + (0.15/20 + 0.70/10)]^{-1} = 4.3956$<br />

Therefore, if two enhancements can be implemented, we would choose $E_1$ and $E_3$.<br />
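The comparison can also be enumerated mechanically. This is a small sketch under the problem's numbers; the dictionary and function names (`SPEEDUP`, `FRACTION`, `speedup_for`, `best_choice`) are illustrative, not from the original solution.

```python
from itertools import combinations

# Speedup and fraction of use for each enhancement, as given in part (b).
SPEEDUP = {1: 30, 2: 20, 3: 10}
FRACTION = {1: 0.15, 2: 0.15, 3: 0.70}


def speedup_for(chosen):
    """Amdahl's law for the implemented subset of enhancements."""
    covered = sum(FRACTION[e] for e in chosen)
    enhanced = sum(FRACTION[e] / SPEEDUP[e] for e in chosen)
    return 1.0 / (1.0 - covered + enhanced)


def best_choice(k):
    """Return the k-enhancement subset with the highest overall speedup."""
    return max(combinations(SPEEDUP, k), key=speedup_for)


for k in (1, 2):
    for chosen in combinations(SPEEDUP, k):
        print(chosen, round(speedup_for(chosen), 4))
    print("best with", k, "enhancement(s):", best_choice(k))
```

Enhancement 3 dominates both choices because it covers 70% of execution time, even though it has the smallest individual speedup.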

Grading:<br />

2 points for correctly calculating the single-enhancement speedups<br />

2 points for correctly calculating the two-enhancement speedups

2. Measuring processor’s time [8 points]<br />

After graduating, you are asked to become the lead computer designer at Hyper Computer,<br />

Inc. Your study of usage of high‐level language constructs suggests that procedure calls are one of<br />

the most expensive operations. You have invented a new architecture with an ISA that reduces<br />

the loads and stores normally associated with procedure calls and returns. The first thing you do<br />

is run some experiments with and without this optimization. Your experiments use the same<br />

state‐of‐the‐art optimizing compiler that will be used with either version of the computer. These<br />

experiments reveal the following information:<br />

‐ The clock cycle time of the optimized version is 5% lower than that of the unoptimized version.<br />

‐ Thirty percent of the instructions in the unoptimized version are loads or stores.<br />

‐ The optimized version executes two‐thirds as many loads and stores as the unoptimized<br />

version. For all other instructions the dynamic execution counts are unchanged.<br />

‐ Every instruction (including load and store) in the unoptimized version takes one clock<br />

cycle.<br />

‐ Due to the optimization, the procedure call and return instructions take one extra cycle<br />

in the optimized version, and these instructions account for 5% of the total instruction<br />

count in the optimized version.<br />

Which is faster? Justify your decision quantitatively.<br />

<strong>Solution</strong>: To decide which is faster, we need to measure the CPU time:<br />

$\text{CPU Time} = IC \times CPI \times Clk$<br />

For the unoptimized case, the CPU time is:<br />

$CPU_{un} = IC_{un} \times CPI_{un} \times Clk_{un}$<br />

Because $CPI_{un} = 1.0$, we have:<br />

$CPU_{un} = IC_{un} \times 1.0 \times Clk_{un}$<br />

Since 30% of the instructions are loads and stores, and the optimized version executes only 2/3 of<br />

them, the optimized version eliminates 30% × 1/3 = 10% of the instructions. Call and return<br />

instructions (5% of the optimized instruction count) take 2 cycles each; all others take 1. This gives:<br />

$IC_{new} = 0.9 \times IC_{un}$<br />

$CPI_{new} = 0.95 \times 1 + 0.05 \times 2 = 1.05$<br />

$Clk_{new} = 0.95 \times Clk_{un}$<br />

So we have:<br />

$CPU_{new} = IC_{new} \times CPI_{new} \times Clk_{new}$<br />

$= 0.9 \times IC_{un} \times 1.05 \times 0.95 \times Clk_{un}$<br />

$= 0.89775 \times IC_{un} \times Clk_{un}$<br />

$= 0.89775 \times CPU_{un}$<br />

The optimized version needs about 89.8% of the unoptimized CPU time (a speedup of about 1.11), so we<br />

should use the optimized version.<br />
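The three factors can be combined in a few lines of code. This is a sketch that simply restates the arithmetic above; the variable names are illustrative, and all quantities are expressed relative to the unoptimized machine.

```python
def relative_cpu_time(ic_ratio, cpi_new, clk_ratio, cpi_old=1.0):
    """CPU time of the new design divided by CPU time of the old design."""
    return (ic_ratio * cpi_new * clk_ratio) / cpi_old


load_store_fraction = 0.30           # share of loads/stores, unoptimized
kept_load_stores = 2.0 / 3.0         # optimized version executes 2/3 of them
ic_ratio = 1.0 - load_store_fraction * (1.0 - kept_load_stores)

call_return_fraction = 0.05          # share of the optimized instruction count
cpi_new = (1.0 - call_return_fraction) * 1 + call_return_fraction * 2

clk_ratio = 0.95                     # clock cycle time is 5% lower

ratio = relative_cpu_time(ic_ratio, cpi_new, clk_ratio)
print(f"CPU_new / CPU_un = {ratio:.5f}")
print(f"speedup = {1.0 / ratio:.3f}")
```

A ratio below 1 means the optimized version is faster; the extra call/return cycle is more than offset by the lower instruction count and shorter clock cycle.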

Grading: 2 points for pointing out we should use CPU Time formula to compare. 2 points for each<br />

correct component calculation (IC, CPI and Clk)

3. Basic Pipelining [16 points]<br />

Consider the following code fragment:<br />

Loop:<br />

LW R1, 0(R2)<br />

DADDI R1, R1, 1<br />

SW R1, 0(R2)<br />

DADDI R2, R2, 4<br />

DADDI R4, R4, -4<br />

BNEZ R4, Loop<br />

Consider the standard 5 stage pipeline machine (IF ID EX MEM WB). Assume the initial value<br />

of R4 is 396 and all memory accesses hit in the cache.<br />

a. [5 points] Show the timing of the above code fragment for one iteration as well as<br />

for the load of the second iteration. For this part, assume there is no forwarding or<br />

bypassing hardware. Assume a register write occurs in the first half of the cycle<br />

and a register read occurs in the last half of the cycle. Also, assume that branches are<br />

resolved in the memory stage and are handled by flushing the pipeline. Use a<br />

pipeline timing chart to show the timing as below (expand the chart if you need<br />

more cycles). How many cycles does this loop take to complete (for all iterations, not<br />

just one iteration)?<br />

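| Instruction | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | C11 | C12 | C13 | C14 | C15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LW R1, 0(R2) | F | D | X | M | W |  |  |  |  |  |  |  |  |  |  |
| DADDI R1, R1, 1 |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| SW R1, 0(R2) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| DADDI R2, R2, 4 |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| DADDI R4, R4, -4 |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| BNEZ R4, Loop |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| LW R1, 0(R2) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |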

<strong>Solution</strong>:<br />

R4 starts at 396 and is decremented by 4 on every iteration, so the loop iterates 396 / 4 = 99<br />

times. To calculate the total time the loop takes, we look at the length of the first 98<br />

iterations, then factor in the 99th iteration, which takes one cycle longer to complete.<br />

The pipeline diagram:<br />

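| Instruction | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | C11 | C12 | C13 | C14 | C15 | C16 | C17 | C18 | C19 | C20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LW R1, 0(R2) | F | D | X | M | W |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| DADDI R1, R1, 1 |  | F | D | S | S | X | M | W |  |  |  |  |  |  |  |  |  |  |  |  |
| SW R1, 0(R2) |  |  | F | S | S | D | S | S | X | M | W |  |  |  |  |  |  |  |  |  |
| DADDI R2, R2, 4 |  |  |  |  |  | F | S | S | D | X | M | W |  |  |  |  |  |  |  |  |
| DADDI R4, R4, -4 |  |  |  |  |  |  |  |  | F | D | X | M | W |  |  |  |  |  |  |  |
| BNEZ R4, Loop |  |  |  |  |  |  |  |  |  | F | D | S | S | X | M | W |  |  |  |  |
| LW R1, 0(R2) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  | F | D | X | M | W |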

Here, “S” indicates a stall. The last cycle of an iteration (the branch's WB) overlaps with the<br />

first cycle of the next iteration (the LW's IF), so it is counted only once, at the end. The first<br />

98 iterations therefore take 15 cycles each, while the last iteration takes 16 cycles. The total<br />

execution time of the loop is 98 x 15 + 16 = 1486 clock cycles.<br />
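The iteration count and the total can be reproduced with a short sketch. The helper names `count_iterations` and `total_cycles` are illustrative; the per-iteration cycle counts (15 and 16) are taken from the solution above rather than derived here.

```python
def count_iterations(r4, step=4):
    """Mimic the loop counter; assumes r4 is a positive multiple of step."""
    iterations = 0
    while True:
        iterations += 1      # one pass through the loop body
        r4 -= step           # DADDI R4, R4, -4
        if r4 == 0:          # BNEZ R4, Loop falls through when R4 == 0
            return iterations


def total_cycles(iterations, steady_cycles, last_cycles):
    """All iterations but the last overlap their final cycle with the next."""
    return (iterations - 1) * steady_cycles + last_cycles


n = count_iterations(396)
print("iterations:", n)
print("total cycles without forwarding:", total_cycles(n, 15, 16))
```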

Grading: 1 point each for lines 2, 3, and 6<br />

0.5 points each for lines 1, 4, 5, and 7<br />

b. [5 points] Show the timing for the same instruction sequence for the pipeline with<br />

full forwarding and bypassing hardware (as discussed in class). Assume that branches<br />

are resolved in the MEM stage and are predicted as not taken. How many cycles<br />

does this loop take to complete?<br />

<strong>Solution</strong>:<br />

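| Instruction | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | C11 | C12 | C13 | C14 | C15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LW R1, 0(R2) | F | D | X | M | W |  |  |  |  |  |  |  |  |  |  |
| DADDI R1, R1, 1 |  | F | D | S | X | M | W |  |  |  |  |  |  |  |  |
| SW R1, 0(R2) |  |  | F | S | D | X | M | W |  |  |  |  |  |  |  |
| DADDI R2, R2, 4 |  |  |  |  | F | D | X | M | W |  |  |  |  |  |  |
| DADDI R4, R4, -4 |  |  |  |  |  | F | D | X | M | W |  |  |  |  |  |
| BNEZ R4, Loop |  |  |  |  |  |  | F | D | X | M | W |  |  |  |  |
| LW R1, 0(R2) |  |  |  |  |  |  |  |  |  |  | F | D | X | M | W |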

The last cycle of an iteration (the branch's WB) overlaps with the first cycle of the next<br />

iteration (the LW's IF), so it is counted only once, at the end. The first 98 iterations therefore<br />

take 10 cycles each, while the last iteration takes 11 cycles. The total execution time of the loop<br />

is 98 x 10 + 11 = 991 clock cycles.<br />
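Both totals (1486 without forwarding, 991 with forwarding) can be cross-checked with a simplified timing model. The sketch below is an assumption-laden illustration, not the method used in class: the tuple encoding of the loop, the `simulate` function, and the `ready` table are all hypothetical names. It assumes an in-order pipeline in which the branch is always taken and the next LW is fetched in the cycle after the branch's MEM stage, and it does not model instructions fetched and flushed after the branch.

```python
# (text, destination register or None, source registers, is_load)
LOOP = [
    ("LW R1, 0(R2)",     "R1", ["R2"],       True),
    ("DADDI R1, R1, 1",  "R1", ["R1"],       False),
    ("SW R1, 0(R2)",     None, ["R1", "R2"], False),
    ("DADDI R2, R2, 4",  "R2", ["R2"],       False),
    ("DADDI R4, R4, -4", "R4", ["R4"],       False),
    ("BNEZ R4, Loop",    None, ["R4"],       False),
]


def simulate(iterations, forwarding):
    """Return the cycle in which the last instruction finishes WB."""
    ready = {}       # register -> earliest EX cycle for an instruction reading it
    if_start = 1     # cycle in which the next instruction is fetched
    prev_ex = 0      # EX cycle of the previous instruction (frees the ID stage)
    mem = wb = 0
    for _ in range(iterations):
        for _text, dest, sources, is_load in LOOP:
            id_start = max(if_start + 1, prev_ex)    # wait for a free ID stage
            ex = id_start + 1
            for reg in sources:                      # wait for operands
                ex = max(ex, ready.get(reg, 0))
            mem, wb = ex + 1, ex + 2
            if dest is not None:
                if forwarding:
                    # ALU results forward EX->EX, load data forwards MEM->EX
                    ready[dest] = (mem if is_load else ex) + 1
                else:
                    # consumer reads in ID during the producer's WB cycle
                    ready[dest] = wb + 1
            if_start, prev_ex = id_start, ex         # IF frees up when ID starts
        if_start = mem + 1                           # branch resolved in MEM
    return wb


print("no forwarding:", simulate(99, forwarding=False))
print("forwarding:   ", simulate(99, forwarding=True))
```

The model tracks only stage occupancy and operand readiness, which is enough to reproduce the stall patterns in the two charts.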

Grading: Same as problem (a).<br />

(c) [3 points] How does the branch delay slot improve performance? Point out where in your<br />

solution for part b it would be beneficial.<br />

<strong>Solution</strong>:<br />

The branch delay slot is a place after the branch instruction for an instruction that will be<br />

executed regardless of whether the branch is taken or not. By placing such an instruction<br />

after the branch and always executing it, we can do useful work while we are still calculating<br />

whether the branch is taken or not and what the target address is.<br />

More specifically, in part b, the LW instruction enters the IF stage when the branch is in the<br />

WB stage (the LW cannot enter the IF stage until this point because we don’t know the<br />

branch target until after the MEM stage). If we had a branch delay slot, we could have fit an<br />

extra instruction between the two with no penalty.<br />

Grading:<br />

2 points for explanation.<br />

1 point for pointing out where in part b it is useful.<br />

(d) [3 points] Why does static branch prediction improve performance over no branch<br />

prediction?<br />

<strong>Solution</strong>:<br />

It allows the processor to fetch an instruction and put it into the pipeline right after a<br />

branch, instead of stalling until the branch is resolved. If the prediction is correct, nothing<br />

needs to be undone and we save a few clock cycles. If the prediction is wrong, we flush the<br />

pipeline and then fetch the correct instruction, which costs no more than not predicting at all.<br />

Grading:<br />

3 points for explanation.

4. Hazards [8 points]<br />

Consider a pipeline with the following structure: IF ID EX MEM WB. Assume that the EX stage<br />

is 1 cycle long for all ALU operations, loads and stores. Also, the EX stage is 3 cycles long for<br />

the FP add, and 6 cycles long for the FP multiply. The pipeline supports full forwarding. All<br />

other stages in the pipeline take one cycle each. The branch is resolved in the ID stage. WAW<br />

hazards are resolved by stalling the later instruction. For the following code, list all the data<br />

hazards that cause stalls. State the type of data hazard and give a brief explanation why each<br />

hazard occurs.<br />

(A quick inspection should be ok. You don’t need to do a thorough pipeline diagram like in<br />

question 3).<br />

loop: L.D F0, 0(R1) #1<br />

L.D F2, 8(R1) #2<br />

L.D F4, 16(R1) #3<br />

L.D F6, 24(R1) #4<br />

MULT.D F8, F6, F0 #5<br />

ADD.D F10, F4, F0 #6<br />

ADD.D F8, F2, F0 #7<br />

S.D 0(R2), F8 #8<br />

DADDI R2, R2, 8 #9<br />

S.D 8(R2), F10 #10<br />

DSUBI R1, R1, 32 #11<br />

BNEZ R1, loop #12<br />

<strong>Solution</strong>:<br />

(a) RAW hazard between instructions 4 and 5: instruction 5 (MULT.D) needs F6 from<br />

instruction 4 (L.D) before the loaded value is available.<br />

(b) WAW hazard between instructions 5 and 7: instruction 7 (ADD.D, 3-cycle EX) would write back<br />

to F8 before instruction 5 (MULT.D, 6-cycle EX) does, so it must stall.<br />

(c) RAW hazard between instructions 7 and 8: instruction 8 (S.D) stores F8, the value computed<br />

by instruction 7 (ADD.D).<br />

(d) RAW hazard between instructions 11 and 12: instruction 12 (BNEZ) needs R1, the result of<br />

instruction 11 (DSUBI), to determine the branch outcome. This is a hazard because<br />

branches are resolved in the ID stage.<br />

The BNEZ ID stage occurs at the same time as the DSUBI EX stage, so forwarding cannot<br />

eliminate this hazard.<br />
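A quick inspection like this can be partly automated. The sketch below lists every RAW and WAW register dependence within one iteration; the encoding of the code as tuples and the function `find_dependences` are illustrative assumptions. It reports candidate dependences only: whether a given one actually stalls depends on the distance between the instructions and the EX latencies, so the four hazards in the solution are a subset of its output.

```python
# (number, opcode, destination register or None, source registers)
CODE = [
    (1, "L.D", "F0", ["R1"]),
    (2, "L.D", "F2", ["R1"]),
    (3, "L.D", "F4", ["R1"]),
    (4, "L.D", "F6", ["R1"]),
    (5, "MULT.D", "F8", ["F6", "F0"]),
    (6, "ADD.D", "F10", ["F4", "F0"]),
    (7, "ADD.D", "F8", ["F2", "F0"]),
    (8, "S.D", None, ["R2", "F8"]),
    (9, "DADDI", "R2", ["R2"]),
    (10, "S.D", None, ["R2", "F10"]),
    (11, "DSUBI", "R1", ["R1"]),
    (12, "BNEZ", None, ["R1"]),
]


def find_dependences(code):
    """Return (type, producer, consumer, register) tuples for RAW and WAW."""
    last_writer = {}   # register -> number of the most recent writer
    found = []
    for num, _op, dest, sources in code:
        for reg in sources:
            if reg in last_writer:                     # read after write
                found.append(("RAW", last_writer[reg], num, reg))
        if dest is not None:
            if dest in last_writer:                    # write after write
                found.append(("WAW", last_writer[dest], num, dest))
            last_writer[dest] = num
    return found


deps = find_dependences(CODE)
for kind, producer, consumer, reg in deps:
    print(f"{kind}: #{producer} -> #{consumer} on {reg}")
```

Dependences such as #1 to #5 on F0 appear in the output but do not stall, because the load completes well before the consumer reaches EX.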

Grading:<br />

2 points per hazard (1 point for type, 1 point for reason).
