Estimation of parameters of the Gompertz distribution using the least ...


Applied Mathematics and Computation 158 (2004) 133–147
www.elsevier.com/locate/amc

Estimation of parameters of the Gompertz distribution using the least squares method

Jong-Wuu Wu a,*, Wen-Liang Hung b, Chih-Hui Tsai a

a Department of Statistics, Tamkang University, Tamsui, Taipei 25137, Taiwan, ROC
b Department of Mathematics, National Hsinchu Teachers College, Hsin-Chu, Taiwan, ROC

Abstract

The Gompertz distribution has been used to describe human mortality and to construct actuarial tables, and it has recently been studied again by several authors. Maximum likelihood estimates of the parameters of the Gompertz distribution were discussed by Garg et al. [J. R. Statist. Soc. C 19 (1970) 152]. The purpose of this paper is to propose unweighted and weighted least squares estimates of the parameters of the Gompertz distribution under complete data and under first failure-censored data (series systems; see [J. Statist. Comput. Simulat. 52 (1995) 337]). A simulation study is carried out to compare the proposed estimators with the maximum likelihood estimators. The simulation results show that the performance of the weighted least squares estimators is acceptable.

© 2003 Elsevier Inc. All rights reserved.

Keywords: Gompertz distribution; Least squares estimate; Maximum likelihood estimate; First failure-censored; Series system

* Corresponding author. E-mail address: jwwu@stat.tku.edu.tw (J.-W. Wu).
0096-3003/$ - see front matter © 2003 Elsevier Inc. All rights reserved.
doi:10.1016/j.amc.2003.08.086

1. Introduction

The Gompertz distribution plays an important role in modeling human mortality and fitting actuarial tables. Historically, the Gompertz distribution was first introduced by Gompertz [8]. Recently, many authors have contributed to the statistical methodology and characterization of this distribution; for example, Read [15], Makany [13], Rao and Damaraju [14], Franses [6], Chen [3] and Wu and Lee [17]. Garg et al. [7] studied the properties of the Gompertz distribution and obtained the maximum likelihood (ML) estimates of its parameters. Gordon [9] provided ML estimation for a mixture of two Gompertz distributions.

Probability plots, in their most common form, are used with location-scale parameter models. Parameters were traditionally estimated from a probability plot by fitting a straight line through the points by eye, but the line could equally well be determined by the least squares method. A similar idea can be used more generally to construct parameter estimates in certain situations. In this paper, we consider the Gompertz model, whose unknown parameters can be related to a transform of the cumulative distribution function, under complete data and under first failure-censored data (for example, series systems; see [1]).

The remainder of this paper is organized as follows. In Section 2, we propose unweighted and weighted least squares procedures for estimating the parameters of the Gompertz distribution for both complete samples and first failure-censored samples. Numerical simulation studies are given in Section 3. Some conclusions are presented in Section 4.

2. Least squares estimation of parameters

In this section, we propose both unweighted and weighted least squares procedures for estimating the parameters c and λ of the Gompertz distribution. The case of a complete sample is discussed in Section 2.1. In Section 2.2, we derive the unweighted and weighted least squares estimates of c and λ under the first failure-censored sampling plan [1]. The sampling plan proposed by Balasooriya [1] consists of grouping the test specimens into several sets, or series systems, of the same size and testing each series system separately until its first failure occurs. Compared with ordinary sampling plans, the first failure-censored sampling plan saves both test time and resources.

2.1. Least squares estimates under complete data

The probability density function (p.d.f.) of the Gompertz distribution is

$$f(x)=\lambda e^{cx}\exp\left\{-\frac{\lambda}{c}\left(e^{cx}-1\right)\right\},\qquad x>0, \tag{1}$$

where c > 0 and λ > 0 are the parameters. As c → 0, the Gompertz distribution tends to an exponential distribution with rate λ. The corresponding cumulative distribution function (c.d.f.) is

$$F(x)=1-\exp\left\{-\frac{\lambda}{c}\left(e^{cx}-1\right)\right\}, \tag{2}$$

and F(x) satisfies

$$\ln\{-\ln(1-F(x))\}=\ln\lambda+\ln\left(\frac{e^{cx}-1}{c}\right). \tag{3}$$
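Equations (1)–(3) can be checked numerically. The following is a minimal Python sketch, not code from the paper: the function names are hypothetical, `lam` stands for λ, and `math.expm1`/`math.log1p` are used only to keep small values of c·x accurate. It also includes the inverse of (2), which is convenient for generating exact Gompertz quantiles.

```python
import math

# Hypothetical helper names; parameters follow (1)-(3): shape c > 0, rate lam (lambda) > 0.

def gompertz_pdf(x, c, lam):
    """Density (1): lam * e^{cx} * exp{-(lam/c)(e^{cx} - 1)} for x > 0."""
    return lam * math.exp(c * x) * math.exp(-(lam / c) * math.expm1(c * x))

def gompertz_cdf(x, c, lam):
    """Distribution function (2): 1 - exp{-(lam/c)(e^{cx} - 1)}."""
    return -math.expm1(-(lam / c) * math.expm1(c * x))

def gompertz_quantile(p, c, lam):
    """Inverse of (2): the x with F(x) = p, for 0 <= p < 1."""
    return math.log1p(-c * math.log1p(-p) / lam) / c

def transform_lhs(x, c, lam):
    """Left-hand side of (3): ln{-ln(1 - F(x))}."""
    return math.log(-math.log1p(-gompertz_cdf(x, c, lam)))

def transform_rhs(x, c, lam):
    """Right-hand side of (3): ln(lam) + ln((e^{cx} - 1)/c)."""
    return math.log(lam) + math.log(math.expm1(c * x) / c)

if __name__ == "__main__":
    c, lam = 0.1, 0.01
    for x in (1.0, 10.0, 30.0):
        # Both columns should agree, illustrating that (3) is exact.
        print(x, transform_lhs(x, c, lam), transform_rhs(x, c, lam))
```

The check on the right-hand side makes the key point of (3) visible: ln λ enters additively, so for fixed c the transformed c.d.f. is a straight line with unit slope in ln((e^{cx} − 1)/c).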

Now suppose that X_1, X_2, …, X_n is a sample of size n from a Gompertz distribution with parameters c and λ, and that X_(1) < X_(2) < ⋯ < X_(n) are the order statistics. For observed ordered observations x_(1) < x_(2) < ⋯ < x_(n), it follows from (3) that

$$\ln\{-\ln(1-F(x_{(i)}))\}=\ln\lambda+\ln\left(\frac{e^{c x_{(i)}}-1}{c}\right),\qquad i=1,2,\ldots,n. \tag{4}$$

Let the empirical distribution function of F(x) be denoted by F̂(x), where F̂(x_(i)) = i/n. Since F̂(x_(n)) = 1 would produce ln(0) in (4), we modify F̂(x_(i)) to

$$p_i=\frac{i-d}{n-2d+1}$$

for some d (0 ≤ d < 1). The reader is referred to Barnett [2] and D'Agostino and Stephens [4] for details. In this paper, we choose only three popular values, d = 0, 0.5 and 0.3 [10,16], which give p_1i = i/(n + 1), p_2i = (i − 0.5)/n and p_3i = (i − 0.3)/(n + 0.4). Alternatively, we use

$$p_{4i}=\sum_{j=1}^{i}\frac{1}{n-j+1}$$

to estimate −ln(1 − F(x_(i))) in (4) (see [12,16]).
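The four plotting positions and the corresponding left-hand sides of (4) are simple to compute. A short sketch using our own hypothetical helper names, not part of the paper:

```python
import math

def plotting_position(i, n, d):
    """p_i = (i - d)/(n - 2d + 1): the modified empirical c.d.f. at x_(i), 0 <= d < 1."""
    return (i - d) / (n - 2 * d + 1)

def plotting_positions(n):
    """Return p_1i, p_2i, p_3i (d = 0, 0.5, 0.3) and p_4i for i = 1, ..., n."""
    idx = range(1, n + 1)
    p1 = [plotting_position(i, n, 0.0) for i in idx]   # i/(n + 1)
    p2 = [plotting_position(i, n, 0.5) for i in idx]   # (i - 0.5)/n
    p3 = [plotting_position(i, n, 0.3) for i in idx]   # (i - 0.3)/(n + 0.4)
    # p_4i = sum_{j=1}^{i} 1/(n - j + 1): a running sum that estimates -ln(1 - F(x_(i)))
    p4, running = [], 0.0
    for j in idx:
        running += 1.0 / (n - j + 1)
        p4.append(running)
    return p1, p2, p3, p4

def responses(n):
    """Left-hand sides of (4): ln{-ln(1 - p_ki)} for k = 1, 2, 3 and ln p_4i for k = 4."""
    p1, p2, p3, p4 = plotting_positions(n)
    ys = [[math.log(-math.log1p(-p)) for p in pk] for pk in (p1, p2, p3)]
    ys.append([math.log(p) for p in p4])
    return ys

if __name__ == "__main__":
    for name, pk in zip(("p1", "p2", "p3", "p4"), plotting_positions(5)):
        print(name, [round(p, 4) for p in pk])
```

For k = 1, 2, 3 the response is ln{−ln(1 − p_ki)}; for k = 4 the running sum p_4i already estimates −ln(1 − F(x_(i))), so only one logarithm is taken.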

First, we estimate c and λ by the unweighted least squares (UWLS) method. Let

$$G_k(c,\lambda)=\sum_{i=1}^{n}\left[\ln\{-\ln(1-p_{ki})\}-\ln\lambda-\ln\left(\frac{e^{c x_{(i)}}-1}{c}\right)\right]^2,\qquad k=1,2,3,$$

and

$$G_4(c,\lambda)=\sum_{i=1}^{n}\left[\ln p_{4i}-\ln\lambda-\ln\left(\frac{e^{c x_{(i)}}-1}{c}\right)\right]^2.$$

We solve the normal equations

$$\frac{\partial G_k(c,\lambda)}{\partial c}=0,\qquad \frac{\partial G_k(c,\lambda)}{\partial \lambda}=0$$

for each k = 1, 2, 3, 4. Setting ∂G_k/∂λ = 0 gives ln λ̂_k as the average over i of ln{−ln(1 − p_ki)} − ln((e^{ĉ_k x_(i)} − 1)/ĉ_k), which is the second equation below. Substituting it into ∂G_k/∂c = 0 and using ∂/∂c ln((e^{cx} − 1)/c) = (c x e^{cx} − e^{cx} + 1)/{c(e^{cx} − 1)} gives the first. Thus the UWLS estimates of c and λ satisfy the following equations for k = 1, 2, 3:

$$\hat c_k=\left\{\sum_{i=1}^{n}\frac{\hat c_k x_{(i)}e^{\hat c_k x_{(i)}}-e^{\hat c_k x_{(i)}}+1}{e^{\hat c_k x_{(i)}}-1}\,\ln\left(\frac{e^{\hat c_k x_{(i)}}-1}{\hat c_k}\right)\right\}\left\{\sum_{i=1}^{n}\frac{\hat c_k x_{(i)}e^{\hat c_k x_{(i)}}-e^{\hat c_k x_{(i)}}+1}{\hat c_k\left(e^{\hat c_k x_{(i)}}-1\right)}\left[\ln\{-\ln(1-p_{ki})\}-\frac{1}{n}\sum_{j=1}^{n}\ln\{-\ln(1-p_{kj})\}+\frac{1}{n}\sum_{j=1}^{n}\ln\left(\frac{e^{\hat c_k x_{(j)}}-1}{\hat c_k}\right)\right]\right\}^{-1},$$

$$\hat\lambda_k=\left\{\prod_{i=1}^{n}\left[-\ln(1-p_{ki})\right]\right\}^{1/n}\left\{\prod_{i=1}^{n}\frac{e^{\hat c_k x_{(i)}}-1}{\hat c_k}\right\}^{-1/n},$$

and

$$\hat c_4=\left\{\sum_{i=1}^{n}\frac{\hat c_4 x_{(i)}e^{\hat c_4 x_{(i)}}-e^{\hat c_4 x_{(i)}}+1}{e^{\hat c_4 x_{(i)}}-1}\,\ln\left(\frac{e^{\hat c_4 x_{(i)}}-1}{\hat c_4}\right)\right\}\left\{\sum_{i=1}^{n}\frac{\hat c_4 x_{(i)}e^{\hat c_4 x_{(i)}}-e^{\hat c_4 x_{(i)}}+1}{\hat c_4\left(e^{\hat c_4 x_{(i)}}-1\right)}\left[\ln p_{4i}-\frac{1}{n}\sum_{j=1}^{n}\ln p_{4j}+\frac{1}{n}\sum_{j=1}^{n}\ln\left(\frac{e^{\hat c_4 x_{(j)}}-1}{\hat c_4}\right)\right]\right\}^{-1},$$

$$\hat\lambda_4=\left\{\prod_{i=1}^{n}p_{4i}\right\}^{1/n}\left\{\prod_{i=1}^{n}\frac{e^{\hat c_4 x_{(i)}}-1}{\hat c_4}\right\}^{-1/n}.$$
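The equations for ĉ_k are implicit, so they must be solved numerically. One way to do this, sketched below under our own assumptions, uses the closed-form λ̂ for any fixed c. We substitute it back into G_k and minimise the resulting one-dimensional profile over c; a stationary point of that profile satisfies both normal equations. The function names (`log_u`, `responses`, `profiled_g`, `golden_min`, `uwls_gompertz`) are hypothetical, and the search bracket 10⁻⁴ ≤ c ≤ 10 is arbitrary. Golden-section search over ln c is an illustrative choice, not necessarily the authors' procedure, and it assumes the profile is unimodal on the bracket.

```python
import math

def log_u(c, x):
    """ln((e^{cx} - 1)/c), the c-dependent term of (4); expm1 keeps it accurate for small c*x."""
    return math.log(math.expm1(c * x) / c)

def responses(n, k):
    """y_ki = ln{-ln(1 - p_ki)} for k = 1, 2, 3 (d = 0, 0.5, 0.3) and y_4i = ln p_4i."""
    if k == 4:
        ys, s = [], 0.0
        for j in range(1, n + 1):
            s += 1.0 / (n - j + 1)
            ys.append(math.log(s))
        return ys
    d = {1: 0.0, 2: 0.5, 3: 0.3}[k]
    return [math.log(-math.log1p(-(i - d) / (n - 2 * d + 1))) for i in range(1, n + 1)]

def profiled_g(c, x, y):
    """G_k(c, lambda) with ln(lambda) replaced by its minimiser for this c (dG_k/dlambda = 0)."""
    ell = [log_u(c, xi) for xi in x]
    log_lam = sum(yi - li for yi, li in zip(y, ell)) / len(x)
    return sum((yi - log_lam - li) ** 2 for yi, li in zip(y, ell)), log_lam

def golden_min(f, a, b, tol=1e-10):
    """Golden-section search for a minimiser of f on [a, b]; assumes f is unimodal there."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    t1, t2 = b - g * (b - a), a + g * (b - a)
    f1, f2 = f(t1), f(t2)
    while b - a > tol:
        if f1 < f2:                      # minimiser lies in [a, t2]
            b, t2, f2 = t2, t1, f1
            t1 = b - g * (b - a)
            f1 = f(t1)
        else:                            # minimiser lies in [t1, b]
            a, t1, f1 = t1, t2, f2
            t2 = a + g * (b - a)
            f2 = f(t2)
    return 0.5 * (a + b)

def uwls_gompertz(sample, k=1, c_lo=1e-4, c_hi=10.0):
    """Sketch of the UWLS estimates (c_hat_k, lambda_hat_k) via a 1-D search over ln c."""
    x = sorted(sample)
    y = responses(len(x), k)
    c_hi = min(c_hi, 700.0 / x[-1])      # keep e^{c x} inside double-precision range
    t = golden_min(lambda t: profiled_g(math.exp(t), x, y)[0], math.log(c_lo), math.log(c_hi))
    c_hat = math.exp(t)
    return c_hat, math.exp(profiled_g(c_hat, x, y)[1])

if __name__ == "__main__":
    c, lam, n = 0.1, 0.01, 10
    # Exact Gompertz quantiles at p_1i = i/(n + 1): the fit should return (c, lam) up to rounding.
    xs = [math.log1p(-c * math.log1p(-i / (n + 1)) / lam) / c for i in range(1, n + 1)]
    print(uwls_gompertz(xs, k=1))
```

Searching over ln c rather than c keeps every trial value positive and spreads effort evenly across the very different scales of c (0.01 to 2.0) used in the simulation study.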

Second, we can also estimate c and λ by the weighted least squares (WLS) method (see [5,11]). For a = 1, 2 and k = 1, 2, 3, let

$$K_{ak}(c,\lambda)=\sum_{i=1}^{n}W_{aki}\left[\ln\{-\ln(1-p_{ki})\}-\ln\lambda-\ln\left(\frac{e^{c x_{(i)}}-1}{c}\right)\right]^2$$

and

$$K_{34}(c,\lambda)=\sum_{i=1}^{n}W_{34i}\left[\ln p_{4i}-\ln\lambda-\ln\left(\frac{e^{c x_{(i)}}-1}{c}\right)\right]^2,$$

where

$$W_{1ki}=\left\{(1-p_{ki})\ln(1-p_{ki})\right\}^2,$$

$$W_{2ki}=\left\{3.3\,p_{ki}-27.5\left[1-(1-p_{ki})^{0.025}\right]\right\}^2,$$

$$W_{34i}=\left\{\sum_{j=1}^{i}\frac{1}{n-j+1}\right\}^2.$$

Solving the normal equations as before, the WLS estimates of c and λ satisfy the following equations:

$$\hat c_{wak}=\left\{\sum_{i=1}^{n}W_{aki}\frac{\hat c_{wak}x_{(i)}e^{\hat c_{wak}x_{(i)}}-e^{\hat c_{wak}x_{(i)}}+1}{e^{\hat c_{wak}x_{(i)}}-1}\ln\left(\frac{e^{\hat c_{wak}x_{(i)}}-1}{\hat c_{wak}}\right)\right\}\left\{\sum_{i=1}^{n}W_{aki}\frac{\hat c_{wak}x_{(i)}e^{\hat c_{wak}x_{(i)}}-e^{\hat c_{wak}x_{(i)}}+1}{\hat c_{wak}\left(e^{\hat c_{wak}x_{(i)}}-1\right)}\left[\ln\{-\ln(1-p_{ki})\}-\frac{\sum_{j=1}^{n}W_{akj}\ln\{-\ln(1-p_{kj})\}-\sum_{j=1}^{n}W_{akj}\ln\left(\frac{e^{\hat c_{wak}x_{(j)}}-1}{\hat c_{wak}}\right)}{\sum_{j=1}^{n}W_{akj}}\right]\right\}^{-1},$$

$$\hat\lambda_{wak}=\exp\left\{\frac{\sum_{i=1}^{n}W_{aki}\ln\{-\ln(1-p_{ki})\}-\sum_{i=1}^{n}W_{aki}\ln\left(\frac{e^{\hat c_{wak}x_{(i)}}-1}{\hat c_{wak}}\right)}{\sum_{i=1}^{n}W_{aki}}\right\}$$

for a = 1, 2 and k = 1, 2, 3, and

$$\hat c_{w34}=\left\{\sum_{i=1}^{n}W_{34i}\frac{\hat c_{w34}x_{(i)}e^{\hat c_{w34}x_{(i)}}-e^{\hat c_{w34}x_{(i)}}+1}{e^{\hat c_{w34}x_{(i)}}-1}\ln\left(\frac{e^{\hat c_{w34}x_{(i)}}-1}{\hat c_{w34}}\right)\right\}\left\{\sum_{i=1}^{n}W_{34i}\frac{\hat c_{w34}x_{(i)}e^{\hat c_{w34}x_{(i)}}-e^{\hat c_{w34}x_{(i)}}+1}{\hat c_{w34}\left(e^{\hat c_{w34}x_{(i)}}-1\right)}\left[\ln p_{4i}-\frac{\sum_{j=1}^{n}W_{34j}\ln p_{4j}-\sum_{j=1}^{n}W_{34j}\ln\left(\frac{e^{\hat c_{w34}x_{(j)}}-1}{\hat c_{w34}}\right)}{\sum_{j=1}^{n}W_{34j}}\right]\right\}^{-1},$$

$$\hat\lambda_{w34}=\exp\left\{\frac{\sum_{i=1}^{n}W_{34i}\ln p_{4i}-\sum_{i=1}^{n}W_{34i}\ln\left(\frac{e^{\hat c_{w34}x_{(i)}}-1}{\hat c_{w34}}\right)}{\sum_{i=1}^{n}W_{34i}}\right\}.$$
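The weighted estimates can be computed with the same profiling idea: for fixed c, ∂K/∂λ = 0 gives ln λ̂ as a weighted average, and the remaining one-dimensional criterion is minimised over c. The sketch below is our own illustration, not the authors' code. It implements only the weight W_1ki = {(1 − p_ki) ln(1 − p_ki)}², with p_i = (i − d)/(n − 2d + 1). The names (`weights_w1`, `wls_profile`, `wls_gompertz`), the search bracket and the golden-section search (which assumes a unimodal profile) are hypothetical choices.

```python
import math

def log_u(c, x):
    """ln((e^{cx} - 1)/c), computed with expm1 for accuracy at small c*x."""
    return math.log(math.expm1(c * x) / c)

def weights_w1(p):
    """W_1ki = {(1 - p_ki) ln(1 - p_ki)}^2, as defined in the text."""
    return [((1.0 - pi) * math.log1p(-pi)) ** 2 for pi in p]

def golden_min(f, a, b, tol=1e-10):
    """Golden-section search for a minimiser of f on [a, b]; assumes f is unimodal there."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    t1, t2 = b - g * (b - a), a + g * (b - a)
    f1, f2 = f(t1), f(t2)
    while b - a > tol:
        if f1 < f2:
            b, t2, f2 = t2, t1, f1
            t1 = b - g * (b - a)
            f1 = f(t1)
        else:
            a, t1, f1 = t1, t2, f2
            t2 = a + g * (b - a)
            f2 = f(t2)
    return 0.5 * (a + b)

def wls_profile(c, x, y, w):
    """K(c, lambda) with ln(lambda) = (sum W y - sum W ell)/sum W, its minimiser at fixed c."""
    ell = [log_u(c, xi) for xi in x]
    log_lam = sum(wi * (yi - li) for wi, yi, li in zip(w, y, ell)) / sum(w)
    sse = sum(wi * (yi - log_lam - li) ** 2 for wi, yi, li in zip(w, y, ell))
    return sse, log_lam

def wls_gompertz(sample, d=0.0, c_lo=1e-4, c_hi=10.0):
    """Sketch of WLS estimates using p_i = (i - d)/(n - 2d + 1) and weights W_1."""
    x = sorted(sample)
    n = len(x)
    p = [(i - d) / (n - 2 * d + 1) for i in range(1, n + 1)]
    y = [math.log(-math.log1p(-pi)) for pi in p]
    w = weights_w1(p)
    c_hi = min(c_hi, 700.0 / x[-1])      # keep e^{c x} inside double-precision range
    t = golden_min(lambda t: wls_profile(math.exp(t), x, y, w)[0], math.log(c_lo), math.log(c_hi))
    c_hat = math.exp(t)
    return c_hat, math.exp(wls_profile(c_hat, x, y, w)[1])

if __name__ == "__main__":
    c, lam, n, d = 0.1, 0.02, 15, 0.3
    ps = [(i - d) / (n - 2 * d + 1) for i in range(1, n + 1)]
    xs = [math.log1p(-c * math.log1p(-p) / lam) / c for p in ps]   # exact quantiles
    print(wls_gompertz(xs, d=d))
```

On exact quantiles every weighting recovers the true (c, λ), because all residuals vanish there. The weights matter only for noisy samples, where they down-weight points whose transformed plotting positions are most variable.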

Garg et al. [7] derived the ML estimates of c and λ from

$$\hat c=\left\{n\sum_{i=1}^{n}\left(e^{\hat c x_{(i)}}-1\right)\right\}\left\{n\sum_{i=1}^{n}x_{(i)}e^{\hat c x_{(i)}}-\sum_{i=1}^{n}x_{(i)}\sum_{i=1}^{n}\left(e^{\hat c x_{(i)}}-1\right)\right\}^{-1}, \tag{5}$$

$$\hat\lambda=\left\{\hat c\sum_{i=1}^{n}x_{(i)}\right\}\left\{\sum_{i=1}^{n}x_{(i)}e^{\hat c x_{(i)}}-\frac{1}{\hat c}\sum_{i=1}^{n}\left(e^{\hat c x_{(i)}}-1\right)\right\}^{-1}. \tag{6}$$

Since Eq. (5) involves ĉ only, it is only necessary to solve Eq. (5) to obtain the MLE of c. An iterative solution of Eq. (5) can be obtained by Newton's method, with the LSE of c as the initial estimate ĉ_0. The MLE λ̂ can then be obtained from Eq. (6).
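A minimal sketch of this ML step follows, under stated assumptions. It is not the authors' program, and the names `score_c` and `mle_gompertz` are hypothetical. The log-likelihood is n ln λ + cΣx_(i) − (λ/c)Σ(e^{cx_(i)} − 1); setting its λ-derivative to zero gives λ = nc/Σ(e^{cx_(i)} − 1), and substituting back leaves the profile score n/c + Σx_(i) − nΣx_(i)e^{cx_(i)}/Σ(e^{cx_(i)} − 1). Its root is exactly the solution of Eq. (5). The sketch applies a plain Newton iteration with a central-difference derivative from a user-supplied start c0 (for example, an LSE), halving c if a step would leave c > 0, and then evaluates Eq. (6).

```python
import math
import random

def score_c(c, x):
    """Profile score n/c + sum(x) - n*sum(x e^{cx})/sum(e^{cx} - 1); its root solves Eq. (5)."""
    n = len(x)
    s_e = sum(math.expm1(c * xi) for xi in x)          # sum(e^{cx} - 1)
    s_xe = sum(xi * math.exp(c * xi) for xi in x)     # sum(x e^{cx})
    return n / c + sum(x) - n * s_xe / s_e

def mle_gompertz(x, c0, tol=1e-10, max_iter=100):
    """Newton iteration for Eq. (5) from c0, then lambda from Eq. (6)."""
    c = c0
    for _ in range(max_iter):
        h = 1e-6 * c                                   # central-difference step
        slope = (score_c(c + h, x) - score_c(c - h, x)) / (2.0 * h)
        c_new = c - score_c(c, x) / slope
        if c_new <= 0.0:                               # stay inside c > 0
            c_new = 0.5 * c
        converged = abs(c_new - c) < tol
        c = c_new
        if converged:
            break
    else:
        raise RuntimeError("Newton iteration for Eq. (5) did not converge")
    s_x = sum(x)
    s_e = sum(math.expm1(c * xi) for xi in x)
    s_xe = sum(xi * math.exp(c * xi) for xi in x)
    lam = c * s_x / (s_xe - s_e / c)                   # Eq. (6); equals n*c/s_e at the root
    return c, lam

if __name__ == "__main__":
    rng = random.Random(12345)
    c_true, lam_true = 0.1, 0.01
    # Inverse-c.d.f. sampling from (2).
    xs = [math.log1p(-c_true * math.log1p(-rng.random()) / lam_true) / c_true for _ in range(200)]
    print(mle_gompertz(xs, c0=0.08))
```

Newton's method converges quickly from a good start but is not globally safeguarded. As c → 0 the score stays finite, because the exponential model is the limiting case, so a poor start can stall near zero. This is one reason to start from a least squares estimate, as the text suggests.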

2.2. Least squares estimates under the first failure-censored sampling plan

The p.d.f. of the first-order statistic X_(1) of a sample of size n from (1) is

$$f(x;c,\lambda^{*})=\lambda^{*}e^{cx}\exp\left\{-\frac{\lambda^{*}}{c}\left(e^{cx}-1\right)\right\}, \tag{7}$$

where λ* = nλ. The corresponding c.d.f. is

$$F^{*}(x)=1-\exp\left\{-\frac{\lambda^{*}}{c}\left(e^{cx}-1\right)\right\}. \tag{8}$$

Suppose X_(1)1, X_(1)2, …, X_(1)m denote the first-order statistics of m samples of size n from (1), and let Y_(1) < Y_(2) < ⋯ < Y_(m) be the corresponding order statistics. Since the survival function of the minimum of n independent lifetimes is {1 − F(x)}^n = exp{−(nλ/c)(e^{cx} − 1)}, X_(1)1, X_(1)2, …, X_(1)m can also be regarded as a random sample from (7). Then F*(x) in (8) satisfies

$$\ln\{-\ln(1-F^{*}(x))\}=\ln n+\ln\lambda+\ln\left(\frac{e^{cx}-1}{c}\right). \tag{9}$$

For observed ordered observations y_(1) < y_(2) < ⋯ < y_(m), (9) can be rewritten as

$$\ln\{-\ln(1-F^{*}(y_{(i)}))\}=\ln n+\ln\lambda+\ln\left(\frac{e^{c y_{(i)}}-1}{c}\right),\qquad i=1,\ldots,m. \tag{10}$$

Proceeding as in Section 2.1, we can obtain the unweighted and weighted least squares estimates of c and λ. Likewise, the ML estimates of c and λ can be obtained under the first failure-censored sampling plan.
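The sampling plan and the identity behind (7)–(9) can be checked numerically. The sketch below uses hypothetical function names and is an illustration, not the authors' simulation code. It simulates m series systems of n units each and keeps only each system's first failure. It also verifies that the minimum of n Gompertz(c, λ) lifetimes has the c.d.f. of a Gompertz(c, nλ) variable, so the intercept of the transformed plot shifts by ln n as in (9).

```python
import math
import random

def gompertz_cdf(x, c, lam):
    """c.d.f. (2): 1 - exp{-(lam/c)(e^{cx} - 1)}."""
    return -math.expm1(-(lam / c) * math.expm1(c * x))

def gompertz_quantile(p, c, lam):
    """Inverse of (2), used for inverse-c.d.f. sampling."""
    return math.log1p(-c * math.log1p(-p) / lam) / c

def first_failure_sample(m, n, c, lam, rng):
    """Simulate the plan: m series systems of n units; record each system's first failure, sorted."""
    return sorted(min(gompertz_quantile(rng.random(), c, lam) for _ in range(n)) for _ in range(m))

def min_cdf(x, n, c, lam):
    """c.d.f. of the minimum of n independent Gompertz(c, lam) lifetimes: 1 - {1 - F(x)}^n."""
    return 1.0 - (1.0 - gompertz_cdf(x, c, lam)) ** n

def transform_9(x, n, c, lam):
    """Right-hand side of (9): ln n + ln lam + ln((e^{cx} - 1)/c)."""
    return math.log(n) + math.log(lam) + math.log(math.expm1(c * x) / c)

if __name__ == "__main__":
    c, lam, n, m = 0.1, 0.01, 10, 2000
    ys = first_failure_sample(m, n, c, lam, random.Random(7))
    # Kolmogorov-Smirnov distance between the simulated minima and F* with lambda* = n*lam.
    d = max(max((i + 1) / m - gompertz_cdf(y, c, n * lam), gompertz_cdf(y, c, n * lam) - i / m)
            for i, y in enumerate(ys))
    print("KS distance to F*:", round(d, 4))
```

After fitting (10) by any of the Section 2.1 procedures, the fitted intercept estimates ln n + ln λ. The estimate of λ is therefore the fitted λ* divided by the known system size n.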

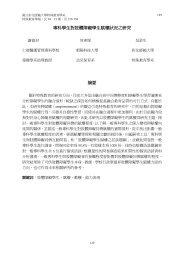

3. Simulation study

In this section, we compare the 12 estimation methods given in Section 2 in terms of the sample mean squared error (SMSE) over 1000 simulated samples. The 12 methods are as follows:

Method  Estimates  p_i    Weight
1       UWLSE      p_1i   –
2       UWLSE      p_2i   –
3       UWLSE      p_3i   –
4       UWLSE      p_4i   –
5       WLSE       p_1i   W_11i
6       WLSE       p_2i   W_12i
7       WLSE       p_3i   W_13i
8       WLSE       p_1i   W_21i
9       WLSE       p_2i   W_22i
10      WLSE       p_3i   W_23i
11      WLSE       p_4i   W_34i
12      MLE        –      –
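The comparison criterion is an average over simulated samples: SMSE = (1/R) Σ_r (estimate_r − true value)² over R replications. The sketch below runs a much smaller version of such a study for the four UWLS variants only (Methods 1–4). It reports the Monte Carlo mean and SMSE of ĉ and λ̂. This is our own illustration with hypothetical names. The estimator profiles out λ in closed form and searches over c by golden section, an illustrative choice rather than the authors' algorithm. The seed and replication count are arbitrary, so the output is not expected to reproduce the tabulated values.

```python
import math
import random

def log_u(c, x):
    """ln((e^{cx} - 1)/c), computed with expm1 for accuracy at small c*x."""
    return math.log(math.expm1(c * x) / c)

def responses(n, k):
    """y_ki = ln{-ln(1 - p_ki)} for k = 1, 2, 3 (d = 0, 0.5, 0.3) and y_4i = ln p_4i."""
    if k == 4:
        ys, s = [], 0.0
        for j in range(1, n + 1):
            s += 1.0 / (n - j + 1)
            ys.append(math.log(s))
        return ys
    d = {1: 0.0, 2: 0.5, 3: 0.3}[k]
    return [math.log(-math.log1p(-(i - d) / (n - 2 * d + 1))) for i in range(1, n + 1)]

def golden_min(f, a, b, tol=1e-8):
    """Golden-section search for a minimiser of f on [a, b]; assumes f is unimodal there."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    t1, t2 = b - g * (b - a), a + g * (b - a)
    f1, f2 = f(t1), f(t2)
    while b - a > tol:
        if f1 < f2:
            b, t2, f2 = t2, t1, f1
            t1 = b - g * (b - a)
            f1 = f(t1)
        else:
            a, t1, f1 = t1, t2, f2
            t2 = a + g * (b - a)
            f2 = f(t2)
    return 0.5 * (a + b)

def uwls(sample, k):
    """UWLS sketch: profile out ln(lambda) in closed form, then search over ln c."""
    x = sorted(sample)
    y = responses(len(x), k)

    def profile(c):
        ell = [log_u(c, xi) for xi in x]
        log_lam = sum(yi - li for yi, li in zip(y, ell)) / len(x)
        return sum((yi - log_lam - li) ** 2 for yi, li in zip(y, ell)), log_lam

    c_hi = min(10.0, 700.0 / x[-1])
    c_hat = math.exp(golden_min(lambda t: profile(math.exp(t))[0], math.log(1e-4), math.log(c_hi)))
    return c_hat, math.exp(profile(c_hat)[1])

def smse_study(c, lam, n, reps=1000, seed=1):
    """Per method k: (mean c_hat, SMSE of c_hat, mean lambda_hat, SMSE of lambda_hat)."""
    rng = random.Random(seed)
    sums = {k: [0.0, 0.0, 0.0, 0.0] for k in (1, 2, 3, 4)}
    for _ in range(reps):
        # Inverse-c.d.f. sampling from (2).
        x = [math.log1p(-c * math.log1p(-rng.random()) / lam) / c for _ in range(n)]
        for k, s in sums.items():
            c_hat, lam_hat = uwls(x, k)
            s[0] += c_hat
            s[1] += (c_hat - c) ** 2
            s[2] += lam_hat
            s[3] += (lam_hat - lam) ** 2
    return {k: tuple(v / reps for v in s) for k, s in sums.items()}

if __name__ == "__main__":
    for k, (mc, sc, ml, sl) in smse_study(0.1, 0.01, 10, reps=200).items():
        print(f"Method {k}: c_hat {mc:.5f} ({sc:.5f})  lambda_hat {ml:.5f} ({sl:.5f})")
```

The same loop extends to the weighted methods and the MLE by adding further estimators to the inner loop. Drawing every method from the same simulated samples, as here, makes the SMSE comparison between methods less noisy.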

Tables 1 and 2 list the results for complete data, and Tables 3–6 those for first failure-censored data. The results in Tables 1–6 show that both the proposed estimators and the ML estimators are biased. For complete data, comparing the SMSE of these estimates leads to the following main conclusions: (a) for n = 10 and 30, the WLS estimates obtained by Method 11 perform better than the ML estimates when c = 0.01, 0.1, 2.0 and λ = 0.01; (b) for n = 10, the WLS estimates obtained by Methods 6, 7, 9 and 11 are more accurate than the ML estimates when c = 0.01, 0.1, 2.0 and λ = 0.01; (c) for n = 10, the UWLS and WLS estimates are generally better than the ML estimates when c = 0.01 and λ = 0.01, 0.02; (d) for n = 10, the UWLS and WLS estimates are generally better than the ML estimates when c = 0.1 and λ = 0.02; and (e) for n = 30, the UWLS and WLS estimates are generally better than the ML estimates when c = 0.01 and λ = 0.02. From (a)–(e), we suggest that Method 11 is useful for estimating c and λ under complete data.

For first failure-censored data, comparing the SMSE of these estimates leads to the following main conclusions: (a) for m = n = 10, the WLS estimates obtained by Methods 6 and 9 are better than the ML estimates when c = 0.01, 0.1, 2.0 and λ = 0.01; (b) for m = n = 10, the UWLS and WLS estimates generally perform better than the ML estimates when c = 0.01, 0.1 and λ = 0.01; (c) for m = n = 10, except for Methods 1, 2 and 4, the UWLS and WLS estimates are more accurate than the ML estimates when c = 2.0 and λ = 0.02; (d) for m = 10, n = 30, except for Methods 1–3, the UWLS and WLS estimates are better

Table 1
The UWLS, WLS and ML estimates of c and λ for n = 10

Method | ĉ (c = 0.01) | λ̂ (λ = 0.01) | ĉ (c = 0.1) | λ̂ (λ = 0.01) | ĉ (c = 2.0) | λ̂ (λ = 0.01)
1 0.00849 (0.00003) 0.01076 (0.00001) 0.08342 (0.00617) 0.01451 (0.00008) 1.8175 (0.5894) 0.02028 (0.00046)
2 0.01107 (0.00003) 0.00993 (0.00001) 0.09827 (0.00863) 0.01171 (0.00005) 2.0194 (0.9584) 0.01437 (0.00031)
3 0.00975 (0.00003) 0.01038 (0.00001) 0.09143 (0.00746) 0.01295 (0.00006) 1.9260 (0.8598) 0.01651 (0.00034)
4 0.00902 (0.00003) 0.01107 (0.00001) 0.08631 (0.00663) 0.01446 (0.00008) 1.8405 (0.7915) 0.02010 (0.00047)
5 0.00860 (0.00003) 0.01072 (0.00001) 0.08820 (0.00668) 0.01306 (0.00005) 1.8410 (0.6668) 0.01743 (0.00021)
6 0.01053 (0.00003) 0.01014 (0.00001) 0.09755 (0.00827) 0.01148 (0.00003) 1.9761 (0.8937) 0.01348 (0.00011)
7 0.00962 (0.00003) 0.01046 (0.00001) 0.09371 (0.00760) 0.01211 (0.00003) 1.9106 (0.7724) 0.01511 (0.00012)
8 0.00941 (0.00003) 0.01081 (0.00001) 0.09573 (0.00811) 0.01210 (0.00004) 1.8970 (0.5641) 0.01710 (0.00022)
9 0.01028 (0.00003) 0.01030 (0.00001) 0.09825 (0.00830) 0.01135 (0.00003) 1.9836 (0.5666) 0.01338 (0.00011)
10 0.00964 (0.00003) 0.01033 (0.00008) 0.09139 (0.00745) 0.01294 (0.00006) 1.9155 (0.6334) 0.01698 (0.00034)
11 0.00970 (0.00002) 0.01103 (0.00001) 0.09591 (0.00781) 0.01218 (0.00003) 1.9473 (0.8621) 0.01524 (0.00015)
12 0.01197 (0.00003) 0.00868 (0.00005) 0.10607 (0.00969) 0.00963 (0.00003) 1.9002 (0.9065) 0.01693 (0.00041)

Method | ĉ (c = 0.01) | λ̂ (λ = 0.02) | ĉ (c = 0.1) | λ̂ (λ = 0.02) | ĉ (c = 2.0) | λ̂ (λ = 0.02)
1 0.00972 (0.00005) 0.01975 (0.00012) 0.08140 (0.00294) 0.02861 (0.00035) 1.7890 (0.4987) 0.03670 (0.00162)
2 0.01242 (0.00007) 0.01910 (0.00011) 0.20326 (0.03550) 0.01638 (0.00009) 1.9950 (0.5874) 0.02680 (0.00080)
3 0.01110 (0.00006) 0.01961 (0.00012) 0.09599 (0.00290) 0.02576 (0.00033) 1.9107 (0.5566) 0.03036 (0.00108)
4 0.01039 (0.00005) 0.02049 (0.00014) 0.08546 (0.00280) 0.03042 (0.00044) 1.8259 (0.4469) 0.03604 (0.00157)
5 0.00945 (0.00004) 0.02029 (0.00014) 0.07862 (0.00270) 0.02900 (0.00035) 1.8330 (0.4258) 0.03198 (0.00087)
6 0.01189 (0.00006) 0.01972 (0.00013) 0.10260 (0.00289) 0.02420 (0.00028) 1.9629 (0.3298) 0.02595 (0.00055)
7 0.01079 (0.00005) 0.02000 (0.00013) 0.09024 (0.00264) 0.02683 (0.00032) 1.9259 (0.3776) 0.02758 (0.00062)
8 0.00992 (0.00005) 0.02085 (0.00015) 0.08610 (0.00258) 0.02948 (0.00041) 1.9498 (0.3951) 0.02930 (0.00078)
9 0.01118 (0.00005) 0.02013 (0.00013) 0.09918 (0.00264) 0.02586 (0.00032) 1.9870 (0.4009) 0.02496 (0.00046)
10 0.01107 (0.00006) 0.01964 (0.00012) 0.09570 (0.00300) 0.02594 (0.00033) 1.9104 (0.3765) 0.03034 (0.00107)
11 0.00985 (0.00004) 0.02157 (0.00017) 0.08812 (0.00236) 0.03129 (0.00049) 1.9225 (0.3912) 0.02873 (0.00064)
12 0.01338 (0.00006) 0.01982 (0.00025) 0.11024 (0.00966) 0.02159 (0.00276) 1.8854 (0.3799) 0.02475 (0.00035)

Note. The values in parentheses are the sample mean squared errors (SMSE) of ĉ and λ̂; '*' marks an SMSE smaller than that of Method 12.

140 J.-W. Wu et al. / Appl. Math. Comput. 158 (2004) 133–147

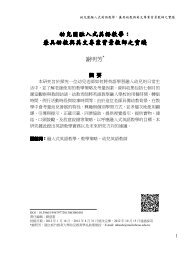

Table 2
The UWLS, WLS and ML estimates of c and λ for n = 30

Method | ĉ (c = 0.01) | λ̂ (λ = 0.01) | ĉ (c = 0.1) | λ̂ (λ = 0.01) | ĉ (c = 2.0) | λ̂ (λ = 0.01)
1 0.00837 (0.00003) 0.01087 (0.00001) 0.08422 (0.00630) 0.01440 (0.00008) 1.7930 (0.8687) 0.02093 (0.00051)
2 0.10790 (0.00003) 0.01012 (0.00001) 0.09921 (0.00879) 0.01164 (0.00005) 2.0061 (0.9555) 0.01422 (0.00022)
3 0.00962 (0.00003) 0.01053 (0.00001) 0.09234 (0.00760) 0.01288 (0.00006) 1.9088 (0.8121) 0.01697 (0.00032)
4 0.00892 (0.00003) 0.01120 (0.00001) 0.08730 (0.00677) 0.01436 (0.00008) 1.8271 (0.7256) 0.02048 (0.00049)
5 0.00837 (0.00003) 0.01089 (0.00001) 0.08836 (0.00669) 0.01314 (0.00004) 1.8211 (0.6249) 0.01784 (0.00021)
6 0.01013 (0.00003) 0.01037 (0.00001) 0.09764 (0.00827) 0.01156 (0.00003) 1.9523 (0.9625) 0.01448 (0.00014)
7 0.00938 (0.00003) 0.01060 (0.00001) 0.09386 (0.00760) 0.01218 (0.00003) 1.8902 (0.7805) 0.01568 (0.00016)
8 0.00918 (0.00003) 0.01093 (0.00001) 0.09537 (0.00803) 0.01228 (0.00004) 1.8698 (0.5978) 0.01778 (0.00024)
9 0.01006 (0.00003) 0.01049 (0.00001) 0.09808 (0.00826) 0.01148 (0.00003) 1.9715 (0.9474) 0.01365 (0.00011)
10 0.00955 (0.00003) 0.01058 (0.00001) 0.09231 (0.00760) 0.01287 (0.00006) 1.9052 (0.7459) 0.01694 (0.00027)
11 0.00933 (0.00002) 0.01123 (0.00001) 0.09570 (0.00778) 0.01235 (0.00003) 1.9345 (0.7954) 0.01508 (0.00013)
12 0.01206 (0.00002) 0.00863 (0.00002) 0.10620 (0.00971) 0.00883 (0.00003) 1.9166 (0.9012) 0.01493 (0.00035)

Method | ĉ (c = 0.01) | λ̂ (λ = 0.02) | ĉ (c = 0.1) | λ̂ (λ = 0.02) | ĉ (c = 2.0) | λ̂ (λ = 0.02)
1 0.01004 (0.00005) 0.01985 (0.00012) 0.08460 (0.00666) 0.02580 (0.00039) 1.7850 (0.5608) 0.03770 (0.00175)
2 0.01298 (0.00008) 0.01901 (0.00011) 0.10156 (0.00962) 0.02186 (0.00027) 2.0088 (0.4571) 0.02664 (0.00082)
3 0.01149 (0.00006) 0.01950 (0.00012) 0.09451 (0.00827) 0.02331 (0.00030) 1.9069 (0.5418) 0.03122 (0.00116)
4 0.01082 (0.00006) 0.02048 (0.00014) 0.08836 (0.00726) 0.02588 (0.00040) 1.8219 (0.4855) 0.03705 (0.00170)
5 0.00983 (0.00005) 0.02007 (0.00013) 0.08895 (0.00713) 0.02433 (0.00032) 1.8322 (0.4288) 0.03232 (0.00090)
6 0.01207 (0.00007) 0.01952 (0.00012) 0.10002 (0.00908) 0.02192 (0.00025) 1.9821 (0.4132) 0.02576 (0.00058)
7 0.01159 (0.00006) 0.01758 (0.00013) 0.09551 (0.00825) 0.02285 (0.00027) 1.9264 (0.6287) 0.02805 (0.00069)
8 0.01033 (0.00005) 0.01942 (0.00012) 0.09485 (0.00819) 0.02334 (0.00027) 1.9321 (0.6386) 0.02980 (0.00083)
9 0.01020 (0.00004) 0.02136 (0.00016) 0.10027 (0.00900) 0.02189 (0.00024) 1.9890 (0.4291) 0.02536 (0.00053)
10 0.01039 (0.00006) 0.02069 (0.00014) 0.09449 (0.00828) 0.02330 (0.00030) 1.9066 (0.5945) 0.03119 (0.00115)
11 0.01105 (0.00006) 0.01982 (0.00012) 0.09694 (0.00825) 0.02344 (0.00027) 1.9122 (0.7068) 0.02933 (0.00066)
12 0.01401 (0.00007) 0.02023 (0.00418) 0.10987 (0.01069) 0.02008 (0.00020) 1.8954 (0.5122) 0.02386 (0.00125)

Note. The values in parentheses are the sample mean squared errors (SMSE) of ĉ and λ̂; '*' marks an SMSE smaller than that of Method 12.


Table 3
The UWLS, WLS and ML estimates of c and λ for m = 10, n = 10

Method | ĉ (c = 0.01) | λ̂ (λ = 0.01) | ĉ (c = 0.1) | λ̂ (λ = 0.01) | ĉ (c = 2.0) | λ̂ (λ = 0.01)
1 0.04852 (0.00330) 0.00760 (0.00002) 0.10185 (0.01523) 0.01002 (0.00002) 1.6509 (0.7125) 0.01916 (0.00033)
2 0.06270 (0.00583) 0.00745 (0.00004) 0.14774 (0.02963) 0.00922 (0.00003) 2.0926 (0.7657) 0.01413 (0.00022)
3 0.05983 (0.00494) 0.00741 (0.00002) 0.12453 (0.02174) 0.00951 (0.00002) 1.8922 (0.6788) 0.01603 (0.00026)
4 0.05601 (0.00426) 0.00813 (0.00002) 0.11167 (0.01791) 0.01073 (0.00002) 1.7396 (0.6707) 0.01973 (0.00038)
5 0.04327 (0.00293) 0.00792 (0.00002) 0.09508 (0.01307) 0.01020 (0.00002) 1.5860 (0.6396) 0.01944 (0.00030)
6 0.06003 (0.00543) 0.00780 (0.00002) 0.13019 (0.02247) 0.00960 (0.00002) 1.9579 (0.5300) 0.01479 (0.00020)
7 0.04991 (0.00392) 0.00784 (0.00002) 0.11653 (0.01847) 0.00985 (0.00002) 1.8055 (0.5330) 0.01648 (0.00022)
8 0.05072 (0.00433) 0.00739 (0.00002) 0.09787 (0.01288) 0.01085 (0.00002) 1.7376 (0.4510) 0.01962 (0.00034)
9 0.05261 (0.00430) 0.00810 (0.00002) 0.12501 (0.02118) 0.00996 (0.00002) 1.9599 (0.4629) 0.01465 (0.00019)
10 0.05995 (0.00496) 0.00740 (0.00002) 0.12446 (0.02177) 0.00950 (0.00002) 1.8899 (0.6609) 0.01600 (0.00025)
11 0.05596 (0.00425) 0.00809 (0.00002) 0.10484 (0.01581) 0.01162 (0.00003) 1.8824 (0.5654) 0.01785 (0.00031)
12 0.07250 (0.00745) 0.00656 (0.00004) 0.13765 (0.02303) 0.00773 (0.00091) 1.7767 (0.8457) 0.01966 (0.00021)

Method | ĉ (c = 0.01) | λ̂ (λ = 0.02) | ĉ (c = 0.1) | λ̂ (λ = 0.02) | ĉ (c = 2.0) | λ̂ (λ = 0.02)
1 0.09970 (0.01660) 0.01427 (0.00007) 0.14746 (0.01857) 0.01666 (0.00006) 1.6248 (0.5007) 0.06124 (0.00610)
2 0.13263 (0.03015) 0.01407 (0.00008) 0.20322 (0.03550) 0.01638 (0.00009) 2.1286 (0.9220) 0.02497 (0.00050)
3 0.11847 (0.02280) 0.01445 (0.00008) 0.17327 (0.02272) 0.01677 (0.00007) 1.8284 (0.4664) 0.04996 (0.00478)
4 0.10560 (0.01838) 0.01608 (0.00007) 0.16110 (0.01936) 0.01848 (0.00007) 1.7102 (0.4756) 0.06049 (0.00640)
5 0.10093 (0.01703) 0.01485 (0.00007) 0.13605 (0.01652) 0.01800 (0.00006) 1.6052 (0.4363) 0.05903 (0.00490)
6 0.11640 (0.02291) 0.01516 (0.00007) 0.17993 (0.02876) 0.01755 (0.00008) 1.9063 (0.2940) 0.03777 (0.00199)
7 0.10976 (0.02055) 0.01487 (0.00007) 0.16093 (0.02145) 0.01766 (0.00008) 1.7556 (0.3227) 0.04613 (0.00271)
8 0.08593 (0.01096) 0.01633 (0.00006) 0.13608 (0.01135) 0.01900 (0.00006) 1.9563 (0.3035) 0.03605 (0.00199)
9 0.10947 (0.02259) 0.01536 (0.00007) 0.17023 (0.02761) 0.01817 (0.00008) 1.8855 (0.5413) 0.03825 (0.00254)
10 0.11648 (0.02211) 0.01442 (0.00007) 0.17707 (0.02362) 0.01670 (0.00008) 1.8295 (0.4590) 0.04910 (0.00442)
11 0.10435 (0.02020) 0.01669 (0.00007) 0.15260 (0.02238) 0.01979 (0.00008) 1.7849 (0.3257) 0.05092 (0.00331)
12 0.09857 (0.01689) 0.02968 (0.00122) 0.11024 (0.00966) 0.02159 (0.00276) 1.7858 (0.4688) 0.05685 (0.00587)

Note. The values in parentheses are the sample mean squared errors (SMSE) of ĉ and λ̂; '*' marks an SMSE smaller than that of Method 12.

142 J.-W. Wu et al. / Appl. Math. Comput. 158 (2004) 133–147

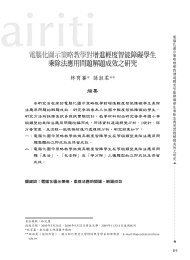

Table 4
The UWLS, WLS and ML estimates of c and λ for m = 10, n = 30

Method | ĉ (c = 0.01) | λ̂ (λ = 0.01) | ĉ (c = 0.1) | λ̂ (λ = 0.01) | ĉ (c = 2.0) | λ̂ (λ = 0.01)
1 0.12498 (0.02916) 0.02231 (0.00024) 0.21182 (0.05235) 0.02374 (0.00028) 1.6616 (1.0561) 0.04366 (0.00194)
2 0.17748 (0.05726) 0.02165 (0.00024) 0.27975 (0.08744) 0.02297 (0.00029) 2.1641 (1.2438) 0.03536 (0.00139)
3 0.15482 (0.04213) 0.02203 (0.00030) 0.24466 (0.06722) 0.02368 (0.00031) 1.9169 (1.0140) 0.03884 (0.00156)
4 0.13444 (0.03400) 0.02410 (0.00030) 0.22383 (0.05646) 0.02523 (0.00035) 1.7149 (0.9252) 0.04608 (0.00216)
5 0.14243 (0.03706) 0.02237 (0.00025) 0.19104 (0.03676) 0.02463 (0.00031) 1.5993 (0.9509) 0.04487 (0.00196)
6 0.15687 (0.05748) 0.02278 (0.00028) 0.24524 (0.06484) 0.02457 (0.00033) 2.0068 (0.8751) 0.03749 (0.00145)
7 0.16454 (0.05346) 0.02270 (0.00026) 0.22254 (0.05444) 0.02486 (0.00034) 1.7870 (0.8540) 0.04076 (0.00159)
8 0.13730 (0.02728) 0.02375 (0.00026) 0.18841 (0.03863) 0.02726 (0.00043) 1.8376 (0.6266) 0.04481 (0.00161)
9 0.16120 (0.05008) 0.02315 (0.00028) 0.23236 (0.06028) 0.02553 (0.00036) 2.0356 (0.7844) 0.03627 (0.00136)
10 0.15756 (0.04412) 0.02174 (0.00023) 0.24456 (0.06775) 0.02361 (0.00030) 1.8744 (0.9017) 0.03938 (0.00158)
11 0.13941 (0.03891) 0.02599 (0.00039) 0.20372 (0.04678) 0.02848 (0.00049) 1.5885 (0.5526) 0.04683 (0.00186)
12 0.09990 (0.01699) 0.03139 (0.00416) 0.08483 (0.0100) 0.01801 (0.00073) 1.8946 (1.0089) 0.04010 (0.00219)

Method | ĉ (c = 0.01) | λ̂ (λ = 0.02) | ĉ (c = 0.1) | λ̂ (λ = 0.02) | ĉ (c = 2.0) | λ̂ (λ = 0.02)
1 0.31994 (0.20926) 0.04295 (0.00086) 0.33734 (0.16769) 0.04502 (0.00094) 1.7073 (1.0609) 0.07517 (0.00454)
2 0.37894 (0.27150) 0.04328 (0.00098) 0.43907 (0.27645) 0.04501 (0.00109) 2.2012 (1.2478) 0.06806 (0.00392)
3 0.33716 (0.24223) 0.04484 (0.00108) 0.38326 (0.19587) 0.04676 (0.00129) 2.0267 (1.1409) 0.06878 (0.00399)
4 0.32309 (0.22103) 0.04814 (0.00125) 0.34234 (0.17261) 0.05016 (0.00138) 1.8608 (1.1185) 0.07986 (0.00558)
5 0.30241 (0.21794) 0.04457 (0.00099) 0.33130 (0.15508) 0.04800 (0.00120) 1.5958 (1.0209) 0.07722 (0.00456)
6 0.33629 (0.21621) 0.04691 (0.00121) 0.39209 (0.22100) 0.04904 (0.00137) 1.8068 (1.0586) 0.08036 (0.00576)
7 0.33059 (0.23176) 0.04529 (0.00104) 0.34958 (0.17269) 0.04746 (0.00123) 1.8196 (0.9108) 0.07591 (0.00464)
8 0.31525 (0.17606) 0.04942 (0.00132) 0.38639 (0.20854) 0.05118 (0.00149) 1.7541 (1.2386) 0.08299 (0.00628)
9 0.33750 (0.23450) 0.04664 (0.00116) 0.37508 (0.19942) 0.05005 (0.00148) 1.7744 (0.7914) 0.07802 (0.00488)
10 0.35060 (0.26211) 0.04452 (0.00107) 0.40520 (0.21520) 0.04571 (0.00117) 1.9727 (1.0945) 0.06802 (0.00384)
11 0.28843 (0.16646) 0.05238 (0.00163) 0.33549 (0.16274) 0.05502 (0.00182) 1.8865 (0.8574) 0.07999 (0.00578)
12 0.06127 (0.01155) 0.02826 (0.00121) 0.05173 (0.01058) 0.08380 (0.58168) 1.8422 (1.0958) 0.01854 (0.00864)

Note. The values in parentheses are the sample mean squared errors (SMSE) of ĉ and λ̂; '*' marks an SMSE smaller than that of Method 12.


Table 5

The UWLS, WLS and ML estimates of c and k for m = 30, n = 10

| Method | ĉ (c = 0.01) | k̂ (k = 0.01) | ĉ (c = 0.1) | k̂ (k = 0.01) | ĉ (c = 2.0) | k̂ (k = 0.01) |
|---|---|---|---|---|---|---|
| 1 | 0.02710 (0.00083) | 0.00852 (0.00001) | 0.08408 (0.00828) | 0.01084 (0.00001) | 1.7177 (0.1401) | 0.01537 (0.00011) |
| 2 | 0.03325 (0.00116) | 0.01859 (0.00076) | 0.11010 (0.01348) | 0.00998 (0.00001) | 1.9839 (0.1408) | 0.01199 (0.00006) |
| 3 | 0.03001 (0.00099) | 0.00848 (0.00001) | 0.09822 (0.01095) | 0.01035 (0.00001) | 1.8621 (0.0662) | 0.01344 (0.00008) |
| 4 | 0.02889 (0.00093) | 0.00885 (0.00001) | 0.09119 (0.00950) | 0.01101 (0.00001) | 1.7667 (0.3134) | 0.01527 (0.00011) |
| 5 | 0.02597 (0.00071) | 0.00875 (0.00001) | 0.08556 (0.00820) | 0.01076 (0.00001) | 1.7934 (0.3427) | 0.01379 (0.00006) |
| 6 | 0.03141 (0.00104) | 0.00873 (0.00001) | 0.10360 (0.01177) | 0.01028 (0.00001) | 1.9514 (0.0445) | 0.01191 (0.00004) |
| 7 | 0.02846 (0.00086) | 0.00875 (0.00001) | 0.09642 (0.01026) | 0.01047 (0.00001) | 1.8905 (0.2056) | 0.01258 (0.00005) |
| 8 | 0.02731 (0.00091) | 0.00898 (0.00001) | 0.09506 (0.01024) | 0.01082 (0.00001) | 1.9157 (0.2736) | 0.01280 (0.00005) |
| 9 | 0.02873 (0.00083) | 0.00890 (0.00001) | 0.10220 (0.01113) | 0.01042 (0.00001) | 1.9595 (0.0536) | 0.01180 (0.00004) |
| 10 | 0.03025 (0.00104) | 0.00848 (0.00001) | 0.09815 (0.01097) | 0.01038 (0.00001) | 1.8617 (0.0614) | 0.01343 (0.00008) |
| 11 | 0.02461 (0.00618) | 0.00932 (0.00001) | 0.09604 (0.00968) | 0.01115 (0.00001) | 1.9146 (0.1403) | 0.01255 (0.00004) |
| 12 | 0.03002 (0.00099) | 0.00908 (0.00388) | 0.12221 (0.01504) | 0.00912 (0.00002) | 1.9024 (0.2102) | 0.01283 (0.00008) |

| Method | ĉ (c = 0.01) | k̂ (k = 0.02) | ĉ (c = 0.1) | k̂ (k = 0.02) | ĉ (c = 2.0) | k̂ (k = 0.02) |
|---|---|---|---|---|---|---|
| 1 | 0.01004 (0.00005) | 0.01985 (0.00012) | 0.08460 (0.00666) | 0.02580 (0.00039) | 1.7850 (0.5608) | 0.03770 (0.00175) |
| 2 | 0.01298 (0.00008) | 0.01901 (0.00011) | 0.10156 (0.00962) | 0.02186 (0.00027) | 2.0088 (0.4571) | 0.02664 (0.00082) |
| 3 | 0.01149 (0.00006) | 0.01950 (0.00012) | 0.09451 (0.00827) | 0.02331 (0.00030) | 1.9069 (0.5418) | 0.03122 (0.00116) |
| 4 | 0.01082 (0.00006) | 0.02048 (0.00014) | 0.08836 (0.00726) | 0.02588 (0.00040) | 1.8219 (0.4855) | 0.03705 (0.00170) |
| 5 | 0.00983 (0.00005) | 0.02007 (0.00013) | 0.08895 (0.00713) | 0.02433 (0.00032) | 1.8322 (0.4288) | 0.03232 (0.00090) |
| 6 | 0.01207 (0.00007) | 0.01952 (0.00012) | 0.10002 (0.00908) | 0.02192 (0.00025) | 1.9821 (0.4132) | 0.02576 (0.00058) |
| 7 | 0.01159 (0.00006) | 0.01758 (0.00013) | 0.09551 (0.00825) | 0.02285 (0.00027) | 1.9264 (0.6287) | 0.02805 (0.00069) |
| 8 | 0.01033 (0.00005) | 0.01942 (0.00012) | 0.09485 (0.00819) | 0.02334 (0.00027) | 1.9321 (0.6386) | 0.02980 (0.00083) |
| 9 | 0.01020 (0.00004) | 0.02136 (0.00016) | 0.10027 (0.00900) | 0.02189 (0.00024) | 1.9890 (0.4291) | 0.02536 (0.00053) |
| 10 | 0.01039 (0.00006) | 0.02069 (0.00014) | 0.09449 (0.00828) | 0.02330 (0.00030) | 1.9066 (0.5945) | 0.03119 (0.00115) |
| 11 | 0.01105 (0.00006) | 0.01982 (0.00012) | 0.09694 (0.00825) | 0.02344 (0.00027) | 1.9122 (0.7068) | 0.02933 (0.00066) |
| 12 | 0.01401 (0.00007) | 0.02023 (0.00418) | 0.10987 (0.01069) | 0.02008 (0.00020) | 1.8954 (0.5122) | 0.02386 (0.00125) |

Note. The values in parentheses are the sample mean squared errors (SMSE) of ĉ and k̂; "*" marks an SMSE smaller than that of Method 12.


Table 6

The UWLS, WLS and ML estimates of c and k for m = 30, n = 30

| Method | ĉ (c = 0.01) | k̂ (k = 0.01) | ĉ (c = 0.1) | k̂ (k = 0.01) | ĉ (c = 2.0) | k̂ (k = 0.01) |
|---|---|---|---|---|---|---|
| 1 | 0.07070 (0.00765) | 0.02505 (0.00026) | 0.12255 (0.02113) | 0.02807 (0.00038) | 1.6523 (0.1005) | 0.04146 (0.00141) |
| 2 | 0.08837 (0.00927) | 0.02469 (0.00026) | 0.15620 (0.03299) | 0.02736 (0.00036) | 1.9855 (0.3238) | 0.03401 (0.00090) |
| 3 | 0.08021 (0.00962) | 0.02479 (0.00026) | 0.13711 (0.02650) | 0.02784 (0.00037) | 1.8384 (0.0492) | 0.03707 (0.00108) |
| 4 | 0.07410 (0.00812) | 0.02618 (0.00030) | 0.12839 (0.02336) | 0.02921 (0.00043) | 1.7218 (0.3381) | 0.04141 (0.00142) |
| 5 | 0.06721 (0.00662) | 0.02559 (0.00028) | 0.11644 (0.01898) | 0.02858 (0.00039) | 1.7589 (0.3585) | 0.03775 (0.00104) |
| 6 | 0.08205 (0.00995) | 0.02549 (0.00028) | 0.14350 (0.02813) | 0.02823 (0.00038) | 1.9649 (0.1467) | 0.03363 (0.00080) |
| 7 | 0.07771 (0.00865) | 0.02539 (0.00027) | 0.13003 (0.02372) | 0.02856 (0.00039) | 1.8826 (0.2017) | 0.03518 (0.00088) |
| 8 | 0.07119 (0.00792) | 0.02636 (0.00031) | 0.11006 (0.01025) | 0.02688 (0.00030) | 1.9285 (0.0286) | 0.03494 (0.00085) |
| 9 | 0.07568 (0.00824) | 0.02569 (0.00028) | 0.13476 (0.02423) | 0.02879 (0.00041) | 1.9467 (0.0398) | 0.03473 (0.00086) |
| 10 | 0.08053 (0.00970) | 0.02478 (0.00026) | 0.13647 (0.02534) | 0.02782 (0.00037) | 1.8397 (0.0564) | 0.03700 (0.00108) |
| 11 | 0.06355 (0.00613) | 0.02729 (0.00034) | 0.12046 (0.01985) | 0.03059 (0.00048) | 1.8408 (0.5426) | 0.03758 (0.00098) |
| 12 | 0.07790 (0.00927) | 0.02332 (0.00241) | 0.13135 (0.02174) | 0.02921 (0.00265) | 1.7855 (0.2101) | 0.03561 (0.00545) |

| Method | ĉ (c = 0.01) | k̂ (k = 0.02) | ĉ (c = 0.1) | k̂ (k = 0.02) | ĉ (c = 2.0) | k̂ (k = 0.02) |
|---|---|---|---|---|---|---|
| 1 | 0.13062 (0.02762) | 0.04956 (0.00170) | 0.08106 (0.00610) | 0.02680 (0.00042) | 1.6299 (0.1508) | 0.07580 (0.00531) |
| 2 | 0.16327 (0.04236) | 0.04972 (0.00174) | 0.09788 (0.00889) | 0.02262 (0.00028) | 2.0093 (0.2152) | 0.06577 (0.00408) |
| 3 | 0.14950 (0.03574) | 0.04947 (0.00171) | 0.09088 (0.00766) | 0.02449 (0.00034) | 1.8261 (0.1715) | 0.07067 (0.00466) |
| 4 | 0.14173 (0.03129) | 0.05114 (0.00184) | 0.08439 (0.00663) | 0.02705 (0.00044) | 1.7083 (0.4253) | 0.07666 (0.00550) |
| 5 | 0.12624 (0.02500) | 0.05107 (0.00183) | 0.08596 (0.00657) | 0.02488 (0.00032) | 1.6845 (0.2483) | 0.07323 (0.00480) |
| 6 | 0.15096 (0.03533) | 0.05168 (0.00190) | 0.09724 (0.00847) | 0.02226 (0.00023) | 1.9278 (0.1171) | 0.06673 (0.00395) |
| 7 | 0.13699 (0.02981) | 0.05190 (0.00191) | 0.09265 (0.00766) | 0.02329 (0.00027) | 1.8587 (0.1245) | 0.07121 (0.00366) |
| 8 | 0.13385 (0.02925) | 0.05373 (0.00208) | 0.09470 (0.00808) | 0.02328 (0.00026) | 1.7083 (0.1253) | 0.07666 (0.00550) |
| 9 | 0.13650 (0.02987) | 0.05275 (0.00199) | 0.09780 (0.00843) | 0.02220 (0.00023) | 1.8771 (0.0501) | 0.06947 (0.00424) |
| 10 | 0.15190 (0.03677) | 0.04943 (0.00171) | 0.08999 (0.00755) | 0.02460 (0.00035) | 1.9733 (0.1918) | 0.06508 (0.00371) |
| 11 | 0.12643 (0.02632) | 0.05466 (0.00215) | 0.09550 (0.00791) | 0.02360 (0.00027) | 1.8148 (0.1989) | 0.06998 (0.00439) |
| 12 | 0.09648 (0.01589) | 0.02182 (0.01922) | 0.10775 (0.01018) | 0.02358 (0.00028) | 1.7855 (0.1441) | 0.05620 (0.00652) |

Note. The values in parentheses are the sample mean squared errors (SMSE) of ĉ and k̂; "*" marks an SMSE smaller than that of Method 12.


than the ML estimates when c = 2.0, k = 0.01; (e) for m = 10, n = 30, the estimates obtained by Method 1, Methods 5–7 and Methods 9–11 are better than the ML estimates when c = 2.0, k = 0.02; (f) for m = 30, n = 10, the UWLS and WLS estimates outperform the ML estimates when c = 0.1, k = 0.01 and when c = 0.01, k = 0.02, respectively; (g) for m = 30, n = 10, the UWLS estimates obtained by Method 3 and the WLS estimates obtained by Methods 7 and 9 are better than the ML estimates when c = 0.01, 0.1 and 2.0 with k = 0.01; (h) for m = 30, n = 30, the WLS estimates obtained by Method 8 are better than the ML estimates when c = 0.01, 0.1 and 2.0 with k = 0.01; (i) for m = 30, n = 30, the UWLS and WLS estimates are generally better than the ML estimates when c = 0.01, k = 0.01; (j) for m = 30, n = 30, the WLS estimates obtained by Methods 6–10 are better than the ML estimates when c = 2.0, k = 0.01; and (k) for m = 30, n = 30, the WLS estimates obtained by Methods 6–9 are better than the ML estimates when c = 0.1 and 2.0 with k = 0.02. In addition, these results suggest that Method 9 is useful for estimating c and k under first failure-censored data.
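Under first failure-censoring, only the earliest failure in each group of units is recorded. As an illustration, assume (this parameterization is not stated in this excerpt) F(x) = 1 − exp{−(k/c)(e^{cx} − 1)} for x ≥ 0. The minimum of n independent lifetimes then has survival function [1 − F(x)]^n = exp{−(nk/c)(e^{cx} − 1)}, so it is again Gompertz with the same c and with k replaced by nk; under this assumption, one natural route is to fit the recorded minima as complete data and divide the resulting scale estimate by n. The Python sketch below checks the closed form by simulation. The helpers `gompertz_cdf`, `gompertz_draw` and `first_failures` are hypothetical, and the group count and group size are illustrative values, not a claim about the m and n of the tables.

```python
import math
import random


def gompertz_cdf(x, c, k):
    # ASSUMED parameterization: F(x) = 1 - exp{-(k/c)(e^{cx} - 1)}, x >= 0
    return -math.expm1(-(k / c) * math.expm1(c * x))


def gompertz_draw(c, k, rng):
    # Inverse-CDF sampling: x = (1/c) ln(1 + (c/k)(-ln(1 - u))), u ~ U[0, 1)
    u = rng.random()
    return math.log1p((c / k) * -math.log1p(-u)) / c


def first_failures(m, n, c, k, seed=0):
    """Hypothetical helper: m groups of n independent Gompertz(c, k) units;
    only the earliest (minimum) failure time in each group is recorded."""
    rng = random.Random(seed)
    return [min(gompertz_draw(c, k, rng) for _ in range(n)) for _ in range(m)]


c, k, n = 0.1, 0.01, 10
minima = first_failures(m=20000, n=n, c=c, k=k, seed=1)
for x in (5.0, 10.0, 20.0):
    empirical = sum(t <= x for t in minima) / len(minima)
    # Group minima should follow the same family with k replaced by n * k
    print(x, round(empirical, 3), round(gompertz_cdf(x, c, n * k), 3))
```

Inverse-CDF sampling keeps the sketch dependent only on the standard library; `expm1` and `log1p` avoid cancellation when c·x or u is small.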

4. Concluding remarks

In summary, least squares methods often provide simple and fairly effective ways of obtaining estimates from complete data and from first failure-censored data. The procedures described were based on a transformation of F(x), the Gompertz cumulative distribution function. The simulation results indicate that the performance of the WLS estimates is acceptable.
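The paper's own transform, plotting positions and weights (Methods 1–11) are defined earlier in the article and are not reproduced here; the Python below is only a hedged sketch of one least squares scheme built on a transformation of F(x). It assumes F(x) = 1 − exp{−(k/c)(e^{cx} − 1)}, so that y = −ln[1 − F(x)] = (k/c)(e^{cx} − 1). Replacing F(x_(i)) by the plotting position i/(n + 1), the model is linear in β = k/c once c is fixed, with closed form β = Σ w_i y_i z_i / Σ w_i z_i², where z_i = e^{c x_(i)} − 1; c is then chosen by a grid search and golden-section refinement on log c. The unweighted fit uses w_i = 1; the weighted fit uses the reciprocal variance of the i-th standard exponential order statistic, since −ln[1 − F(X)] is standard exponential. All function names, the search bracket and the weights are assumptions for illustration.

```python
import math


def gompertz_quantile(p, c, k):
    # Inverse of the ASSUMED CDF F(x) = 1 - exp{-(k/c)(e^{cx} - 1)}
    return math.log1p((c / k) * -math.log1p(-p)) / c


def _profile(c, xs, ys, ws):
    """For fixed c, y = beta * (e^{cx} - 1) is linear in beta = k/c; return the
    weighted residual sum of squares and the closed-form least squares beta."""
    try:
        zs = [math.expm1(c * x) for x in xs]
    except OverflowError:
        return math.inf, math.nan
    szz = sum(w * z * z for w, z in zip(ws, zs))
    if not math.isfinite(szz) or szz == 0.0:
        return math.inf, math.nan
    beta = sum(w * y * z for w, y, z in zip(ws, ys, zs)) / szz
    rss = sum(w * (y - beta * z) ** 2 for w, y, z in zip(ws, ys, zs))
    return (rss if math.isfinite(rss) else math.inf), beta


def fit_gompertz_ls(sample, weighted=False, c_lo=1e-4, c_hi=10.0, grid=200):
    """Hypothetical profile least squares fit; returns (c_hat, k_hat)."""
    xs = sorted(sample)
    n = len(xs)
    # Mean-rank plotting positions p_i = i/(n+1) stand in for F(x_(i))
    ys = [-math.log1p(-i / (n + 1)) for i in range(1, n + 1)]
    if weighted:
        # Var of i-th standard exponential order statistic: sum_{j=n-i+1}^{n} 1/j^2
        ws = [1.0 / sum(1.0 / j ** 2 for j in range(n - i + 1, n + 1))
              for i in range(1, n + 1)]
    else:
        ws = [1.0] * n

    def objective(log_c):
        return _profile(math.exp(log_c), xs, ys, ws)[0]

    a, b = math.log(c_lo), math.log(c_hi)
    pts = [a + (b - a) * j / (grid - 1) for j in range(grid)]
    best = min(range(grid), key=lambda j: objective(pts[j]))
    lo, hi = pts[max(best - 1, 0)], pts[min(best + 1, grid - 1)]
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(60):  # golden-section refinement around the best grid point
        m1, m2 = hi - invphi * (hi - lo), lo + invphi * (hi - lo)
        if objective(m1) < objective(m2):
            hi = m2
        else:
            lo = m1
    c_hat = math.exp((lo + hi) / 2.0)
    beta = _profile(c_hat, xs, ys, ws)[1]
    return c_hat, beta * c_hat  # k = beta * c


# Usage: noise-free check on exact quantiles at the plotting positions
n = 30
xs = [gompertz_quantile(i / (n + 1), 0.1, 0.01) for i in range(1, n + 1)]
print(fit_gompertz_ls(xs))                 # close to (0.1, 0.01)
print(fit_gompertz_ls(xs, weighted=True))  # close to (0.1, 0.01)
```

With noise-free input both fits recover the generating values, which makes this a convenient correctness check before applying the sketch to simulated or censored samples.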

Acknowledgement

This research was partially supported by the National Science Council, ROC (Plan No. NSC 89-2118-M-032-013).

References

[1] U. Balasooriya, Failure-censored reliability sampling plans for the exponential distribution, Journal of Statistical Computation and Simulation 52 (1995) 337–349.

[2] V. Barnett, Probability plotting methods and order statistics, Applied Statistics 24 (1975) 95–108.

[3] Z. Chen, Parameter estimation of the Gompertz population, Biometrical Journal 39 (1997) 117–124.

[4] R.B. D'Agostino, M.A. Stephens, Goodness-of-fit Techniques, Marcel Dekker, New York, 1986.

[5] B. Faucher, W.R. Tyson, On the determination of Weibull parameters, Journal of Materials Science Letters 7 (1988) 1199–1203.


[6] P.H. Franses, Fitting a Gompertz curve, Journal of the Operational Research Society 45 (1994) 109–113.

[7] M.L. Garg, B.R. Rao, C.K. Redmond, Maximum likelihood estimation of the parameters of the Gompertz survival function, Journal of the Royal Statistical Society C 19 (1970) 152–159.

[8] B. Gompertz, On the nature of the function expressive of the law of human mortality and on a new mode of determining the value of life contingencies, Philosophical Transactions of the Royal Society A 115 (1825) 513–580.

[9] N.H. Gordon, Maximum likelihood estimation for mixtures of two Gompertz distributions when censoring occurs, Communications in Statistics B: Simulation and Computation 19 (1990) 733–747.

[10] K.C. Kapur, L.R. Lamberson, Reliability in Engineering Design, Wiley, New York, 1977.

[11] R. Langlois, Estimation of Weibull parameters, Journal of Materials Science Letters 10 (1991) 1049–1051.

[12] J.F. Lawless, Statistical Models and Methods for Lifetime Data, Wiley, New York, 1982.

[13] R. Makany, A theoretical basis of Gompertz's curve, Biometrical Journal 33 (1991) 121–128.

[14] B.R. Rao, C.V. Damaraju, New better than used and other concepts for a class of life distribution, Biometrical Journal 34 (1992) 919–935.

[15] C.B. Read, Gompertz Distribution, Encyclopedia of Statistical Sciences, Wiley, New York, 1983.

[16] S.M. Ross, Introduction to Probability and Statistics for Engineers and Scientists, Wiley, New York, 1987.

[17] J.W. Wu, W.C. Lee, Characterization of the mixtures of Gompertz distributions by conditional expectation of order statistics, Biometrical Journal 41 (1999) 371–381.