A survey of graph edit distance

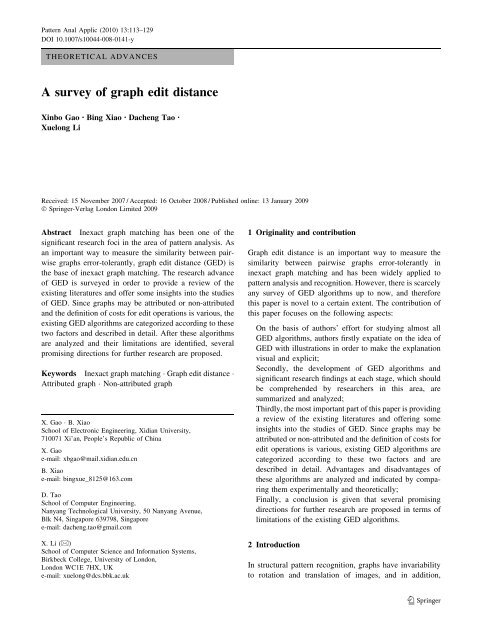

Pattern Anal Applic (2010) 13:113–129
DOI 10.1007/s10044-008-0141-y

THEORETICAL ADVANCES

Xinbo Gao · Bing Xiao · Dacheng Tao · Xuelong Li

Received: 15 November 2007 / Accepted: 16 October 2008 / Published online: 13 January 2009
© Springer-Verlag London Limited 2009

Abstract Inexact graph matching has been one of the significant research foci in the area of pattern analysis. As an important way to measure the similarity between pairwise graphs error-tolerantly, graph edit distance (GED) is the basis of inexact graph matching. This paper surveys the research advances in GED in order to review the existing literature and offer some insights into the study of GED. Since graphs may be attributed or non-attributed and the costs of edit operations are defined in various ways, the existing GED algorithms are categorized according to these two factors and described in detail. After these algorithms are analyzed and their limitations are identified, several promising directions for further research are proposed.

Keywords Inexact graph matching · Graph edit distance · Attributed graph · Non-attributed graph

X. Gao · B. Xiao
School of Electronic Engineering, Xidian University, 710071 Xi’an, People’s Republic of China
X. Gao e-mail: xbgao@mail.xidian.edu.cn
B. Xiao e-mail: bingxue_8125@163.com

D. Tao
School of Computer Engineering, Nanyang Technological University, 50 Nanyang Avenue, Blk N4, Singapore 639798, Singapore
e-mail: dacheng.tao@gmail.com

X. Li (✉)
School of Computer Science and Information Systems, Birkbeck College, University of London, London WC1E 7HX, UK
e-mail: xuelong@dcs.bbk.ac.uk

1 Originality and contribution

Graph edit distance is an important way to measure the similarity between pairwise graphs error-tolerantly in inexact graph matching and has been widely applied to pattern analysis and recognition. However, there has been scarcely any survey of GED algorithms up to now, and this paper is therefore novel to a certain extent. The contribution of this paper focuses on the following aspects:

• On the basis of the authors’ study of almost all GED algorithms, the idea of GED is first explained with illustrations in order to make the explanation visual and explicit;
• Secondly, the development of GED algorithms and the significant research findings at each stage, which researchers in this area should understand, are summarized and analyzed;
• Thirdly, and most importantly, this paper reviews the existing literature and offers some insights into the study of GED. Since graphs may be attributed or non-attributed and the costs of edit operations are defined in various ways, existing GED algorithms are categorized according to these two factors and described in detail. The advantages and disadvantages of these algorithms are analyzed by comparing them experimentally and theoretically;
• Finally, several promising directions for further research are proposed in view of the limitations of the existing GED algorithms.

2 Introduction

In structural pattern recognition, graphs are invariant to rotation and translation of images and, in addition, to the
transformation of an image into a ‘mirror’ image; thus they are widely used as a powerful representation of objects. With graph representations, pattern recognition becomes a problem of graph matching. The presence of noise means that the graph representations of identical real-world objects may not match exactly. One common approach to this problem is to apply inexact graph matching [1–5], in which error correction is made part of the matching process. Inexact graph matching has been successfully applied to recognition of characters [6, 7], shape analysis [8, 9], image and video indexing [10–14] and image registration [15]. Since photos and their corresponding sketches are geometrically similar and differ mainly in texture information, photos and sketches can be represented with graphs, and sketch-photo recognition [16] may then be realized through inexact graph matching in the future. Central to this approach is the measurement of the similarity of pairwise graphs. This can be measured in many ways, but one approach that has lately garnered particular interest, because it is tolerant to noise and distortion, is the graph edit distance (GED), defined as the cost of the least expensive sequence of edit operations needed to transform one graph into another.

Fig. 1 The development of GED

In the development of GED, which is illustrated in Fig. 1, Sanfeliu and Fu [17] played an important role: they first introduced edit distance to graphs. It is computed by counting node and edge relabelings together with the number of node and edge deletions and insertions necessary to transform one graph into another. Extending their idea, Messmer and Bunke [18, 19] defined the subgraph edit distance as the minimum cost over all error-correcting subgraph isomorphisms, in which common subgraphs of different model graphs are represented only once, so that the limitation of inexact graph matching algorithms working on only two graphs at a time can be avoided. However, the direct GED lacks some of the formal underpinning of string edit distance, so there is considerable current effort aimed at putting the underlying methodology on a rigorous footing. There have been some developments toward overcoming this drawback: for instance, the relationship between GED and the size of the maximum common subgraph (MCS) has been demonstrated [20], the uniqueness of the cost function has been discussed [21], a probability distribution for local GED has been constructed, and string edit distance has been extended to trees and graphs. GED has so far been computed for both attributed relational graphs and non-attributed graphs. Attributed graphs have attributes on nodes, edges, or both, according to which the GED is computed directly. Non-attributed graphs only contain information about the connectivity structure; therefore they are usually converted into strings, and edit distance is used to compare the strings, which are the coded patterns of the graphs. The edit distance between strings can be evaluated by dynamic programming [5], which has been extended to compare trees and graphs on a global level [22, 23]. Hancock et al. used the Levenshtein distance, an important kind of edit distance, to evaluate the similarity of pairwise strings derived from graphs [24]. Because the Levenshtein edit distance does not fully exploit the coherence or statistical dependencies existing in the local context, Wei made use of Markov random fields to develop the Markov edit distance [25] in 2004. Recently, Marzal and Vidal [26] normalized the edit distance so that it may be consistently applied across a range of objects of different sizes, and this idea has been used to model the probability distribution of the edit path between pairwise graphs [27]. The Hamming distance between two strings is a special case of the edit distance. Hancock measures the GED with the Hamming distance between the structural units of graphs together with the size difference between the graphs [28].
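
To make the string distances mentioned above concrete, the following minimal Python sketch computes the Levenshtein distance by dynamic programming and the Hamming distance for equal-length strings. The function names and the unit cost for every operation are assumptions made for illustration; they are not taken from the cited works.

```python
def levenshtein(s, t):
    """Unit-cost edit distance between sequences s and t (dynamic programming)."""
    prev = list(range(len(t) + 1))            # distances from the empty prefix of s
    for i, a in enumerate(s, start=1):
        curr = [i]                            # delete the first i symbols of s
        for j, b in enumerate(t, start=1):
            curr.append(min(prev[j] + 1,              # delete a
                            curr[j - 1] + 1,          # insert b
                            prev[j - 1] + (a != b)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def hamming(s, t):
    """Number of mismatching positions; only defined for equal lengths."""
    if len(s) != len(t):
        raise ValueError("Hamming distance needs equal-length sequences")
    return sum(a != b for a, b in zip(s, t))
```

Because the Hamming distance allows substitutions only, it is never smaller than the Levenshtein distance of the same pair of equal-length strings.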

Thus, in the development of GED, the role of edit distance [5, 29] cannot be neglected, and its advancement promotes the birth of new GED algorithms.

Although research on GED has flourished, GED algorithms are influenced considerably by the cost functions associated with the edit operations. The GED between pairwise graphs changes when the cost functions change, and its validity depends on how reasonably the cost functions are defined. Researchers have so far defined the cost functions in various ways; in particular, the definition of cost functions has lately been cast into a probabilistic framework, mainly by Hancock and Bunke [30, 31]. The problem has not been solved fundamentally. Bunke [21] connects cost functions with multifold graph isomorphism in theory, so that the necessity of defining cost functions can be removed. However, the matching problems involved are computationally hard (subgraph isomorphism, for instance, is NP-complete), so in practice they have to be combined with constraints and heuristics. So, efforts are still needed to develop new GED algorithms having
reasonable cost functions or being independent of the definition of cost functions, such as the algorithms in [32, 33].

The aim of this paper is to provide a survey of the current development of GED, which is related to computing the dissimilarity of graphs in error-correcting graph matching. This paper is organized as follows: after some concepts and basic algorithms are given in Sect. 3, the existing GED algorithms are categorized and described in detail in Sect. 4, which compares existing algorithms to show their advantages and disadvantages. In Sect. 5, a summary is presented and some important problems of GED deserving further research are proposed.

3 Basic concepts and algorithms

Many concepts of graph theory and basic algorithms of search and learning strategies form the foundation of existing GED algorithms. In order to describe and analyze GED algorithms thoroughly, these concepts have to be explained in advance.

3.1 Concepts related to graph theory

The subject investigated by GED is the graph representation of objects and, judging from current research results, graph theory is the basis of GED research. It is therefore necessary to introduce some concepts related to graph theory, such as the definitions of the graph with or without attributes, the directed acyclic graph, the common subgraph, the common supergraph, the maximum weight clique, the isomorphism of graphs, the transitive closure, the Fiedler vector and the super clique.

3.1.1 Definitions of the graph and the attributed graph

A graph is denoted by $G = (V, E)$, where $V = \{1, 2, \ldots, M\}$ is the set of vertices (nodes) and $E$ is the edge set ($E \subseteq V \times V$). If nodes in a graph have attributes, the graph is an attributed graph denoted by $G = (V, E, \mu)$, where $\mu$ is a labeling function

$$\mu : V \to L_N.$$

If both nodes and edges in a graph have attributes, the graph is an attributed graph denoted by $G = (V, E, \alpha, \beta)$, where

$$\alpha : V \to L_N \quad \text{and} \quad \beta : E \to L_E$$

are node and edge labeling functions. $L_N$ and $L_E$ are restricted to labels consisting of fixed-size tuples, that is, $L_N = \mathbb{R}^p$, $L_E = \mathbb{R}^q$, $p, q \in \mathbb{N} \cup \{0\}$.

3.1.2 Definitions of the directed graph and directed acyclic graph

Given a graph $G = (V, E)$, if $E$ is a set of ordered pairs of vertices, $G$ is a directed graph and the edges in $E$ are called directed edges. If, for every vertex $v$ in $V$, there is no non-empty directed path that starts and ends at $v$, $G$ is a directed acyclic graph.

3.1.3 Definition of the subgraph and supergraph

Let $G = (V, E, \alpha, \beta)$ and $G' = (V', E', \alpha', \beta')$ be two graphs; $G'$ is a subgraph of $G$, and $G$ is a supergraph of $G'$, written $G' \subseteq G$, if
• $V' \subseteq V$,
• $E' \subseteq E$,
• $\alpha'(x) = \alpha(x)$ for all $x \in V'$,
• $\beta'((x, y)) = \beta((x, y))$ for all $(x, y) \in E'$.
For non-attributed graphs, only the first two conditions are needed.

3.1.4 Definition of the graph isomorphism

Let $G_1 = (V_1, E_1, \alpha_1, \beta_1)$ and $G_2 = (V_2, E_2, \alpha_2, \beta_2)$ be two graphs. A graph isomorphism between $G_1$ and $G_2$ is a bijective mapping $f : V_1 \to V_2$ such that
• $\alpha_1(x) = \alpha_2(f(x))$, $\forall x \in V_1$,
• $\beta_1((x, y)) = \beta_2((f(x), f(y)))$, $\forall (x, y) \in E_1$.
For non-attributed graphs $G'_1 = (V'_1, E'_1)$ and $G'_2 = (V'_2, E'_2)$, a bijective mapping $f : V'_1 \to V'_2$ such that $(u, v) \in E'_1 \Leftrightarrow (f(u), f(v)) \in E'_2$, $\forall u, v \in V'_1$, is a graph isomorphism between these two graphs.
If $V_1 = V_2 = \emptyset$, then $f$ is called the empty graph isomorphism.

3.1.5 Definitions of the common subgraph and the maximum common subgraph

Let $G_1$ and $G_2$ be two graphs and $G'_1 \subseteq G_1$, $G'_2 \subseteq G_2$. If there exists a graph isomorphism between $G'_1$ and $G'_2$, then both $G'_1$ and $G'_2$ are called a common subgraph of $G_1$ and $G_2$. If there exists no other common subgraph of $G_1$ and $G_2$ that has more nodes than $G'_1$ and $G'_2$, then $G'_1$ and $G'_2$ are called a maximum common subgraph (MCS) of $G_1$ and $G_2$.

3.1.6 Definitions of the common supergraph and the minimum common supergraph

A graph $\hat{G}$ is a common supergraph of two graphs $G_1$ and $G_2$ if there exist graphs $\hat{G}_1 \subseteq \hat{G}$ and $\hat{G}_2 \subseteq \hat{G}$ such that there exists a graph isomorphism between $\hat{G}_1$ and $G_1$, and a
graph isomorphism between $\hat{G}_2$ and $G_2$. It is a minimum common supergraph if there is no other common supergraph of $G_1$ and $G_2$ smaller than $\hat{G}$.

3.1.7 Definition of the Fiedler vector

If the degree matrix and the adjacency matrix of a graph are, respectively, the diagonal matrix

$$D = \mathrm{diag}(\deg(1), \deg(2), \ldots, \deg(M)),$$

where $M$ is the last node in the graph, and the symmetric matrix $A$, then the Laplacian matrix is the matrix $L = D - A$. The eigenvector corresponding to the second smallest eigenvalue of the graph Laplacian is referred to as the Fiedler vector.

3.1.8 Definitions of the clique and the maximum weight clique

A clique in a graph is a set of nodes that are pairwise adjacent; for example, in Fig. 2, node 3, node 4 and node 5 form a clique in the graph. A weight clique is the extension of the clique to weighted graphs. The maximum weight clique is the clique with the largest weight.

Fig. 2 An example of clique in the graph

3.1.9 Definition of the super clique

Given a graph $G = (V, E)$, the super clique (or neighborhood) of the node $i \in V$ consists of its center node $i$ together with its immediate neighbors connected by edges in the graph.

3.1.10 Transitive closure

The transitive closure of a directed graph $G = (V, E)$ is a graph $G^+ = (V, E^+)$ such that for all $v$ and $w$ in $V$, $(v, w) \in E^+$ if and only if there is a non-null path from $v$ to $w$ in $G$.

3.2 Basic algorithms used in the existing GED algorithms

The definition of cost functions is a key issue for GED algorithms, and the self-organizing map (SOM) can be used to learn cost functions automatically to some extent [34]. GED is defined as the cost of the least expensive edit sequence; thus search strategies for the shortest path are closely related to GED algorithms. Dijkstra’s algorithm is the most popular shortest path algorithm and is applied by Robles-Kelly [35]. In addition, the expectation maximization (EM) algorithm is applied to parameter optimization [30]. So the EM algorithm, Dijkstra’s algorithm and SOM are presented here before the GED algorithms are given.

3.2.1 EM algorithm

The expectation maximization algorithm [36] is one of the main approaches for estimating the parameters of a Gaussian mixture model (GMM). There exist two sample spaces $X$ and $Y$, and a many-to-one mapping from $X$ to $Y$. Data $y$ in space $Y$ are observed directly, whereas the corresponding data $x$ in $X$ are not observed directly, but only indirectly through $y$. Data $x$ and $y$ are referred to as the complete data and incomplete data, respectively. Given a set of $N$ random vectors

$$Z = \{z_1, z_2, \ldots, z_N\}$$

in which each random vector is drawn from an independent and identically distributed mixture model, the likelihood of the observed samples (conditional probability) is defined as the joint density

$$P(Z \mid \theta) = \prod_{i=1}^{N} p(z_i \mid \theta).$$

$Z$ is the complete data and $z_i$ is the incomplete data, and the aim of the EM algorithm is to determine the parameter $\theta$ that maximizes $P(Z \mid \theta)$ given an observed $Z$.

The EM algorithm is an iterative maximum likelihood (ML) estimation algorithm. Each iteration of the EM algorithm involves two steps: the expectation step (E-step) and the maximization step (M-step). In the E-step, the updated posterior probability is computed from the prior probability; in the M-step, using the posterior probability passed from the E-step, the conditional probability is maximized to obtain the updated prior probability, and the parameters corresponding to the updated prior probability are passed back to the E-step.

3.2.2 Dijkstra’s algorithm

Dijkstra’s algorithm [37] was developed by Dijkstra. It is a greedy algorithm that solves the single-source shortest path problem for a directed graph with non-negative edge
weights, and it can be extended to undirected graphs. Given a weighted directed graph $G = (V, E)$, each edge in $E$ is given a weight value, the cost of moving directly along this edge. The cost of a path between two vertices is the sum of the costs of the edges in that path. Given a pair of vertices $s$ and $t$ in $V$, the algorithm finds the shortest path from $s$ to $t$. Let $S$ be the set of nodes visited along the shortest path from $s$ to $t$. The adjacency matrix of $G$ is the weight value matrix $C$. The element $d(i)$ is the cost of the path from $s$ to $v_i \in V$, and $d(s) = 0$. The algorithm can be described as below:
• Initialization: $S = \emptyset$, and $d(i)$ is the weight value of the edge $(s, v_i)$;
• If $d(j) = \min\{d(i) \mid v_i \in V - S\}$ is true, $S = S \cup \{v_j\}$;
• For every node $v_k \in V - S$, if $d(j) + C(j, k) < d(k)$ is true, $d(k)$ is updated, that is, $d(k) = d(j) + C(j, k)$;
• The last two steps are repeated until vertex $t$ is visited and $d(t)$ is unchanged, such that the shortest path from $s$ to $t$ is obtained.

3.2.3 SOM

The self-organizing map [38–40] is an unsupervised artificial neural network that maps the training samples into a low-dimensional space while leaving the topological properties of the input space unchanged. In SOM, neighboring neurons compete in their activities by means of mutual lateral interactions and develop adaptively into specific detectors of different signal patterns, so it is unsupervised, self-organizing and competitive.

The SOM network consists of two layers: an input layer and a competitive layer. As shown in Fig. 3, hollow nodes denote neurons in the input layer and solid nodes are competitive neurons. Each input is connected to all neurons in the competitive layer, and every neuron in the competitive layer is connected to the neurons in its neighborhood. For each neuron $j$, its position is described by the neural weight $W_j$, and for neurons in the competitive layer, the grid connections are regarded as their neighborhood relation. The training process is as below:
• The neurons of the input layer selectively feed input elements into the competitive layer;
• When an input element $D$ is mapped onto the competitive layer, the neurons in the competitive layer compete for the input element’s position to represent the input element well. The closest neuron $c$, the winner neuron, is chosen in terms of the distance metric $d_v$, which is the distance of neural weights in the vector space;
• The neighborhood $N_c = \{c, n_c^1, n_c^2, \ldots, n_c^M\}$ of the winner neuron $c$, where $M$ is the number of neighbors, is determined with the distance $d_n$ between neurons, which is defined by means of the neighborhood relations. The neuron $c$ and its neighbors in $N_c$ are drawn closer to the input element, and the weights of the whole neighborhood are moved in the same direction, so similar items tend to excite adjacent neurons. The strength of the adaptation for a competitive neuron is decided by a non-increasing activation function $\alpha(t)$, and the weight of the neuron $j$ in the competitive layer is adapted according to the following formula:

$$W_j(t+1) = \begin{cases} W_j(t) + \alpha(t)\,(D(t) - W_j(t)), & j \in N_c \\ W_j(t), & j \notin N_c \end{cases} \qquad (1)$$

• The last two steps are repeated until a terminal condition is achieved.

Fig. 3 The structure of SOM

Fig. 4 The model graph

4 Graph edit distance

A graph can be transformed into another one by a finite sequence of graph edit operations, which may be defined differently in various algorithms, and GED is defined by the least-cost edit operation sequence. In the following, an example is used to illustrate the definition of GED. For the model graph shown in Fig. 4 and the data graph shown in
Fig. 5, the task is to transform the data graph into the model graph. All edit operations are performed on the data graph. One of the edit operation sequences includes node insertion and edge insertion (node 6 and its incident edge), node deletion and edge deletion (node a and its incident edges), node substitution (node 1) and edge substitution (the edge between node 5 and node 3). A cost function is defined for each operation, and the cost of this edit operation sequence is the sum of the costs of all operations in the sequence. The sequence of edit operations needed to transform a data graph into a model graph, and hence its cost, is not unique, but the least cost is unique. The edit operation sequence with the least cost is sought, and its cost is the GED between these two graphs. Clearly, how to determine the similarity of components in graphs and how to define the costs of edit operations are the key issues.

Fig. 5 The data graph

A graph may be an attributed relational graph with attributes on nodes, edges, or both, according to which the GED is computed directly. On the other hand, structural graphs that contain only connectivity information are usually converted into strings according to nodes, edges or the connectivity, and the GED is computed with edit distance methods for strings. GED algorithms, whose ideas are given in brief, are classified from these two aspects.
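
The definition of GED as the least cost over all edit sequences can be illustrated with a minimal Python sketch. It assumes unit costs for every operation, undirected graphs without self-loops that carry node labels only, and exhaustive enumeration of node mappings, which is feasible only for very small graphs. The function name `ged_bruteforce` and the data layout are hypothetical choices for illustration, not an algorithm from the surveyed literature.

```python
from itertools import permutations


def ged_bruteforce(nodes1, edges1, nodes2, edges2):
    """Exhaustive unit-cost GED for tiny undirected, node-labelled graphs.

    nodes*: dict node_id -> label; edges*: iterable of (u, v) pairs.
    Assumes no self-loops and that None is not used as a node id.
    """
    e1 = {frozenset(e) for e in edges1}
    e2 = {frozenset(e) for e in edges2}
    ids1 = list(nodes1)
    # Pad the targets with None so that a node of graph 1 may also be deleted.
    targets = list(nodes2) + [None] * len(ids1)
    best = float("inf")
    for image in set(permutations(targets, len(ids1))):
        f = dict(zip(ids1, image))
        used = {v for v in image if v is not None}
        cost = 0
        for u, v in f.items():
            if v is None:
                cost += 1                      # node deletion
            elif nodes1[u] != nodes2[v]:
                cost += 1                      # node substitution
        cost += len(nodes2) - len(used)        # node insertions
        kept = set()
        for e in e1:
            a, b = tuple(e)
            img = frozenset((f[a], f[b]))
            if f[a] is not None and f[b] is not None and img in e2:
                kept.add(img)                  # edge preserved by the mapping
            else:
                cost += 1                      # edge deletion
        cost += len(e2 - kept)                 # edge insertions
        best = min(best, cost)
    return best


# A labelled path A-B-C versus a labelled triangle A-B-D.
path = ({1: "A", 2: "B", 3: "C"}, [(1, 2), (2, 3)])
triangle = ({1: "A", 2: "B", 3: "D"}, [(1, 2), (2, 3), (1, 3)])
print(ged_bruteforce(*path, *triangle))  # 2: one substitution, one edge insertion
```

Changing the unit costs in this sketch changes which mapping is cheapest, which is why the definition of the cost functions is a key issue.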

Algorithms for different kinds of graphs are not comparable. The distances obtained with algorithms of the same kind are compared in terms of their ability to cluster and classify images, from which their strengths and flaws can be identified; this may benefit further research.

4.1 GED for attributed graphs

Graph edit distance for attributed graphs is computed directly from the attributes, which differ between algorithms. In the SOM based method [34], the probability based approach [30], the convolution graph kernel based method [41] and the subgraph and supergraph based method [42], both nodes and edges have attributes, whereas only nodes have attributes in the binary linear programming (BLP) based method [43].

4.1.1 SOM based algorithm

In the existing algorithms for GED, the automatic inference of the costs of edit operations remains an open problem. To this end, the SOM based algorithm [34] was developed, in which attributed graphs $G = (V, E, \alpha, \beta)$ are the objects to be processed. Node and edge labels are m-dimensional and n-dimensional vectors, respectively. The space of the node and edge labels in a population of graphs is mapped onto a regular grid, which is an untrained SOM network, and the grid is deformed by training. Each type of edit operation is described by one SOM. The actual edit costs are derived from a distance measure for labels that is defined with the distribution encoded in the SOM.
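
How training deforms such a grid can be sketched in a few lines of Python using the adaptation rule of Eq. (1). The sketch assumes a one-dimensional chain of neurons, a squared Euclidean distance for choosing the winner, and a fixed neighborhood radius; the function name `som_step` and its parameters are hypothetical, and the method in [34] works on higher-dimensional grids.

```python
def som_step(weights, x, alpha, radius=1):
    """One SOM adaptation step on a 1-D chain of neurons.

    weights: list of weight vectors (lists of floats), one per neuron.
    x: input vector D(t); alpha: adaptation strength at the current step.
    radius: how many grid steps around the winner belong to its neighbourhood.
    Returns the index of the winner neuron; weights are updated in place.
    """
    def sq_dist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))

    # Winner c: the neuron whose weight vector is closest to the input.
    c = min(range(len(weights)), key=lambda j: sq_dist(weights[j], x))
    # Neurons in the neighbourhood N_c move towards the input; others stay put.
    for j in range(max(0, c - radius), min(len(weights), c + radius + 1)):
        weights[j] = [w + alpha * (xi - w) for w, xi in zip(weights[j], x)]
    return c
```

Repeating this step over many label vectors with a decreasing `alpha` pulls grid vertices towards densely populated regions of the label space, which is the deformation referred to above.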
The encoding of node substitution is described as below:
• The m-dimensional node label space is reduced to a regular grid by sampling it at equidistant positions. Each vertex of the grid is connected to its nearest neighbor along the dimensions so as to obtain a representation of the full space. The regular grid is the SOM neural network;
• Grid vertices and connections correspond to competitive neurons and neighborhood relations in the SOM;
• When it is being trained, the SOM corresponds to a deformed grid. A label vector at a vertex position of the regular grid is mapped directly onto the same vertex in the deformed map. Any vector in the original space that is not at a vertex can be mapped into the deformed space by a simple linear interpolation of its adjacent grid points;
• The cost of substituting node $v_2$ for node $v_1$ is defined with $d_v$, that is,

$$c(v_1 \to v_2) = \beta^{n}_{\mathrm{sub}}\, d_v(v'_1, v'_2),$$

where $\beta^{n}_{\mathrm{sub}}$ is the weighting factor and the vector $v'_i$ is $v_i$ in the deformed space. The weighting factor compensates for the dependency on the initial distance between vertices.

For other edit operations, the SOM networks are constructed in an analogous way. The vertex distribution of each SOM is changed iteratively during the learning procedure, which results in different costs. The objective is to derive cost functions that result in small intraclass and large interclass distances; therefore the activation function $\alpha(t)$ is defined such that its value decreases when the distance between neurons increases.

The experiments demonstrating the performance of the SOM based method are performed on graph samples consisting of ten distorted copies of each of the three letter classes A, E, and X. Instances of the letter A being sheared are illustrated in Fig. 6. The shearing factor $\alpha$ is used to
indicate the degree of letter distortion. For every shearing factor, the best average index is computed and shown in Fig. 7. The average index, which is normalized to the unit interval [0, 1], is defined as the average value of eight validation indices and evaluates the performance of clustering quantitatively. The smaller the value, the better the clustering. The eight validation indices are the Davies–Bouldin index [44], the Dunn index [45], the C-index [46], the Goodman–Kruskal index [47], the Calinski–Harabasz index [48], Rand statistics [49], the Jaccard coefficient [50], and the Fowlkes–Mallows index [51]. In Fig. 7, the average indices corresponding to SOM learning are smaller than those of the Euclidean model under every shearing factor, and the superiority of SOM over the Euclidean model becomes increasingly obvious as the shearing factor increases. Edit costs derived through SOM learning thus make the difference between intraclass and interclass distances greater than those derived through the Euclidean model, which illustrates that the SOM performs better than the Euclidean model for clustering.

Fig. 6 Sheared letter A with shearing factor α = 0, 0.25, 0.5, 0.75 and 1 in [34]

In this method, GED is computed based on the metric $d_v$; therefore, the obtained GED is a metric. For a certain application, some areas of the label space are highly relevant, while other areas are irrelevant. Other existing cost functions treat every part of the label space equally, a limitation that the SOM based method overcomes by learning the relevant areas of the label space from the graph sample set.

4.1.2 Probability based algorithm

Similar to the cost learning system based on the frequency estimation of edit operations for string matching [52], Neuhaus proposed a probability based algorithm [30] to compute GED. In this algorithm, if the GED of graphs $G_1$ and $G_2$ is to be computed by transforming $G_1$ into $G_2$, two independent empty graphs $EG_1$ and $EG_2$ are constructed for $G_1$ and $G_2$, respectively, by a stochastic generation process. A sequence of node and edge insertions is applied to either both or only one of the two constructed graphs $EG_1$ and $EG_2$; this can be interpreted as an edit operation sequence transforming $G_1$ into $G_2$, whose effects on $G_1$ are presented in Table 1. Edit costs are derived from the estimated distribution of edit operations. Each type of edit operation, regarded as a random event, is modeled with a GMM, and the mixtures are weighted to form the probability distribution of edit events. Initialization starts with the empirical mean and covariance matrix of a single component, and new components are added sequentially if the mixture density appears to converge in the training process. Pairs of training graphs that are required to be similar are extracted, and the EM algorithm is employed to find a locally optimal parameter set in terms of the likelihood of the edit events occurring between the pairs of training graphs.
If a probability distribution of edit event sequences $(e_1, e_2, \ldots, e_l)$ is given, the probability of two graphs $p(G_1, G_2)$ is defined as

$$p(G_1, G_2) = \int_{(e_1, e_2, \ldots, e_l) \in \psi(G_1, G_2)} dp(e_1, e_2, \ldots, e_l), \tag{2}$$

where $\psi(G_1, G_2)$ denotes the set of all edit operation sequences transforming $G_1$ into $G_2$. Finally, the distance between these two graphs is obtained by setting

$$d(G_1, G_2) = -\log(p(G_1, G_2)). \tag{3}$$

Table 1 Effects of edit operations on original graph

| Edit operation on $EG_1$ | Edit operation on $EG_2$ | Effects on $G_1$ |
|---|---|---|
| Node/edge insertion | φ | Node/edge insertion |
| φ | Node/edge insertion | Node/edge deletion |
| Node/edge insertion | Node/edge insertion | Node/edge substitution |

Fig. 7 Comparison of average index on sheared line drawing sample [34]

This algorithm is compared with the SOM based algorithm [34]. Three letter classes Z, A, and V are chosen, and 90 graphs (30 samples per class) are constructed to produce five sample sets with different values of the distortion parameter: 0.1, 0.5, 0.8, 1.0, and 1.2. Examples of these graphs are shown in Fig. 8. The average index consisting of the Calinski–Harabasz index [48], the Davies–Bouldin index [44], the Goodman–Kruskal index [47], and the C-index [46] is computed for every sample set, and the result is shown in Fig. 9. As mentioned above, smaller average indices correspond to better clustering, and this method yields a smaller average index in every sample set and for every distortion level; thus, it is confirmed that this method clearly leads to better clustering results than the SOM based algorithm. The best average index value is obtained for the second-strongest distortion, although the matching task becomes harder with increasing distortion strength.

Fig. 8 Line drawing example. a Original drawing of letter A; b distorted instance of the same letter with distortion parameter 0.5 and c distortion parameter 1.0 [30]

Fig. 9 Comparison of performance with increasing strength of distortion in [30]

Although the SOM neural network can derive edit costs automatically and distinguish the relevant areas of the label space, edit costs derived according to the probability distribution of edit operations are more effective for clustering distorted letters. The advantage of this method is that it is able to cope with large samples of graphs and strong distortions between samples of the same class. The key of this algorithm is the probability distribution of edit events.

4.1.3 Method based on convolution graph kernel

Kernel methods are a new class of algorithms for pattern analysis based on statistical learning.
When kernel functions are used to evaluate graph similarity, the graph matching problem can be formulated in an implicitly existing vector space, and then statistical methods for pattern analysis can be applied. In the algorithm based on convolution graph kernel [41], a novel graph kernel function is proposed to compute the GED so as to avoid the lack of mathematical structure in the space of graphs.

For graphs $G = (V, E, \mu, \nu)$ and $G' = (V', E', \mu', \nu')$, the cost of node substitution $u \to u'$ replacing node $u \in V$ by node $u' \in V'$ is given by the radial basis function:

$$K_{sim}(u, u') = \exp\left(-\|\mu(u) - \mu'(u')\|^2 / 2\sigma^2\right). \tag{4}$$

The same function with a different parameter $\sigma$ is also used to evaluate the similarity of edge labels. These radial basis functions favor edit paths containing more substitutions, fewer insertions and fewer deletions. Hence, substitutions are modeled explicitly, while insertions and deletions are modeled implicitly.

The set of edit decompositions (sequences consisting of all nodes and edges in the graph) of $G$ is denoted by $R^{-1}(G)$, and a function evaluating whether two edit decompositions are equivalent to a valid edit path is denoted by $K_{val}$. For $x \in R^{-1}(G)$ and $x' \in R^{-1}(G')$, the function $K_{val}$ is defined as follows:

$$K_{val}(x, x') = \begin{cases} 1, & \text{if } x \text{ and } x' \text{ form a valid edit path} \\ 0, & \text{otherwise.} \end{cases} \tag{5}$$

With these notations, the proposed edit kernel function on graphs can finally be written as:

$$k(G, G') = \sum_{\substack{x \in R^{-1}(G) \\ x' \in R^{-1}(G')}} K_{val}(x, x') \prod_i K_{sim}((x)_i, (x')_i), \tag{6}$$

where the index $i$ indicates all nodes and edges present in the edit decomposition.
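The radial basis function of formula (4) and the product term of formula (6) can be illustrated with a small sketch. It assumes that labels are numeric tuples and that the two edit decompositions are already aligned element by element; the function names `k_sim` and `path_similarity` are hypothetical and are not taken from [41]. The validity check $K_{val}$ and the sum over all decompositions are deliberately left out.

```python
import math


def k_sim(label_u, label_v, sigma=1.0):
    """Radial basis similarity of two label vectors, as in formula (4)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(label_u, label_v))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))


def path_similarity(labels_x, labels_y, sigma=1.0):
    """Product of k_sim over aligned elements of two edit decompositions.

    Assumption: labels_x[i] is substituted by labels_y[i]; this is only the
    product term of formula (6) for one pair of decompositions.
    """
    product = 1.0
    for label_u, label_v in zip(labels_x, labels_y):
        product *= k_sim(label_u, label_v, sigma)
    return product


# Identical labels give similarity 1; the value decays with label distance.
same = k_sim((0.0, 0.0), (0.0, 0.0))
near = k_sim((0.0, 0.0), (1.0, 0.0))
```

Because identical labels contribute a factor of 1 and distant labels a factor close to 0, decompositions whose elements can be substituted cheaply dominate the kernel value.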
In the computation of the kernel value $k(G, G')$, only valid edit paths are considered with the help of the function $K_{val}$.

On the one hand, the convolution edit kernel based GED is combined with support vector machines (EK-SVM), and its classification performance is compared with that of the traditional edit distance together with the k-nearest neighbor classifier [53] (ED-kNN). This experiment is conducted on the 15 letters that can be drawn with straight lines only, such as A, E, F, etc. The distorted letter graphs are split into a training set of 150 graphs, a validation set of 150 graphs, and a test set of 750 graphs. The experimental results are shown in Fig. 10: the accuracy of both methods increases gradually with running time, and the convolution edit kernel based GED method achieves a higher classification rate than the traditional edit distance under the same running time.

Fig. 10 Running time and accuracy of the proposed kernel function and edit distance in [41]

On the other hand, this method is compared with kernel functions derived directly from edit distance [54] (ED-SVM) and with random walks in graphs [55] (RW-SVM), respectively. The Letters dataset from the previous experiment and an image dataset, which is split into a training set, a validation set, and a test set, each of size 54, are used in this experiment. The images are assigned to one of the classes snowy, countryside, city, people, and streets, and they are described in detail in [56]. The classification accuracy of the four methods mentioned above is shown in Table 2. The EK-SVM method outperforms all other methods on the second dataset and achieves significantly higher classification accuracy than the traditional edit distance method. RW-SVM performs as well as EK-SVM on the first dataset, but significantly worse than all other methods on the second dataset. Overall, the convolution edit kernel based method performs best among the compared methods.

Table 2 Accuracy of two edit distance methods (ED), a random walk kernel (RW), and the proposed edit kernel (EK) in [41] (%)

| Method | Letter dataset | Image dataset |
|---|---|---|
| ED-kNN | 69.33 | 48.15 |
| ED-SVM | 73.2 | 59.26 |
| RW-SVM | 75.2 | 33.33 |
| EK-SVM | 75.2 | 68.52 |

In a word, the convolution edit kernel based GED together with SVM outperforms not only the combination of traditional edit distance and kNN, but also other kernel functions combined with SVM in classification. Unlike the traditional edit distance, this kernel function makes good use of statistical learning theory in the inner product space rather than in the graph space directly. The convolution edit kernel is defined by decomposing pairs of graphs into edit paths, so it is more closely related to GED than other kernel functions.

4.1.4 Method based on binary linear programming

The BLP based algorithm [43] is for graphs with vertex attributes only, and it introduces a framework for computing GED on a set of graphs. Every attributed graph in the set is treated as a subgraph of a larger graph referred to as the edit grid, and the edit operations converting one graph into another are equivalent to state changes of the edit grid, from which GED can be derived. With the help of the graph adjacency matrix, the computation can be treated as a BLP problem.

If the GED between graph $G_0 = (V_0, E_0, l_0)$ and graph $G_1 = (V_1, E_1, l_1)$ is to be computed, the graph $G_0$ is first embedded in a labeled complete graph

$$G_\Omega = (\Omega, \Omega \times \Omega, l_\Omega),$$

such that

• Graph $G_0$ is a subgraph of graph $G_\Omega$,
• Label $l_\Omega(x_i) = \phi$ for all nodes $x_i \in \Omega - V_0$,
• Label $l_\Omega(x_i, x_j) = 0$ for all edges $(x_i, x_j) \in (\Omega \times \Omega) - E_0$.

The graph $G_\Omega = (\Omega, \Omega \times \Omega, l_\Omega)$ is the edit grid, and its state vector is denoted by $\eta \in (\Sigma \cup \phi)^N \times \{0, 1\}^{(N^2 - N)/2}$, where $\Sigma$ is the label alphabet of nodes in the graph $G_0$ and $N$ is the number of nodes in the edit grid.

Then, a sequence of edits used to convert graph $G_0$ into graph $G_1$ can be specified by the sequence of edit grid state vectors $\{\eta_k\}_{k=0}^{M}$.
The GED between $G_0$ and $G_1$ is the minimum cost of state transition of the edit grid, that is,

$$\begin{aligned} d_c(G_0, G_1) &= \min_{\{\eta_k\}_{k=1}^{M} \mid \eta_M \in \Gamma_1} \sum_{k=1}^{M} c(\eta_{k-1}, \eta_k) \\ &= \min_{\{\eta_k\}_{k=1}^{M} \mid \eta_M \in \Gamma_1} \sum_{k=1}^{M} \sum_{i=1}^{I} c(\eta_{k-1}^{i}, \eta_k^{i}) \\ &= \min_{\pi \in \Pi} \sum_{i=1}^{I} c(\eta_0^{i}, \eta_1^{\pi^i}), \end{aligned} \tag{7}$$

where $I = N + (N^2 - N)/2$, $\Gamma_1$ is the set of state vectors corresponding to all isomorphisms of $G_1$ on the edit grid, and $\Pi$ is the set of all permutation mappings for isomorphisms of the edit grid. A permutation maps the element $i$ of a set to another element $\pi^i$ of the same set. By introducing the Kronecker delta function $\delta : \Re^2 \to \{0, 1\}$, formula (7) becomes equivalent to formula (8):

$$d_c(G_0, G_1) = \min_{\pi \in \Pi} \left[ \sum_{i=1}^{N} \sum_{j=1}^{N} c(\eta_0^{i}, \eta_1^{j})\, \delta(\pi^i, j) + c(0, 1) \sum_{i=N+1}^{I} \left(1 - \delta(\eta_0^{i}, \eta_1^{\pi^i})\right) \right]. \tag{8}$$
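For very small graphs, the minimization over permutations in formula (8) can be checked by exhaustive search. The sketch below is only an illustration under stated assumptions: both graphs are already padded to the same number of nodes N, the null label φ is represented by `None`, edges are unlabeled and undirected, and the names `ged_bruteforce` and `unit_node_cost` are hypothetical. It enumerates all N! permutations, so it is not the BLP solver of [43].

```python
from itertools import permutations


def ged_bruteforce(labels0, adj0, labels1, adj1, node_cost, edge_cost=1.0):
    """Exhaustive evaluation of formula (8) on two graphs padded to N nodes.

    labels0/labels1: node labels, with None standing for the null label.
    adj0/adj1: symmetric 0/1 adjacency matrices as nested lists.
    node_cost(a, b): cost of turning label a into label b (assumed by caller).
    edge_cost: the constant c(0, 1) for inserting or deleting an edge.
    """
    n = len(labels0)
    best = float("inf")
    for perm in permutations(range(n)):
        # Node term: label of node i in G0 against its image perm[i] in G1.
        cost = sum(node_cost(labels0[i], labels1[perm[i]]) for i in range(n))
        # Edge term: count node pairs whose edge state differs after mapping.
        mismatches = sum(
            1
            for i in range(n)
            for j in range(i + 1, n)
            if adj0[i][j] != adj1[perm[i]][perm[j]]
        )
        best = min(best, cost + edge_cost * mismatches)
    return best


def unit_node_cost(a, b):
    """Assumed cost model: 0 for equal labels, 1 for any other change."""
    return 0.0 if a == b else 1.0


# G0: a-b padded with one null node; G1: path a-b-c.
labels0 = ["a", "b", None]
adj0 = [[0, 1, 0], [1, 0, 0], [0, 0, 0]]
labels1 = ["a", "b", "c"]
adj1 = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(ged_bruteforce(labels0, adj0, labels1, adj1, unit_node_cost))  # 2.0
```

The result 2.0 corresponds to one node insertion and one edge insertion. The factorial growth of this search is the reason the method reformulates the problem as a binary linear program.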

Finally, the edit grid state vector $\eta_k$ is represented with the adjacency matrix $A_k$, whose elements correspond to edge labels in the state vector and whose rows (columns) are indexed with node labels, that is,

$$A_k^{ij} = \eta_k^{\,iN + j - (i^2 + i)/2} \quad \text{and} \quad l(A_k^{i}) = \eta_k^{i}, \quad \text{where } 1 \le i, j \le N.$$

Formula (8) is converted into formula (9):

$$\begin{aligned} d_c(G_0, G_1) = \min_{P, S, T \in \{0,1\}^{N \times N}} \; & \sum_{i=1}^{N} \sum_{j=1}^{N} \left[ c\left(l(A_0^{i}), l(A_1^{j})\right) P^{ij} + \tfrac{1}{2}\, c(0, 1)\, (S + T)^{ij} \right] \\ \text{s.t.} \; & (A_0 P - P A_1 + S - T)^{ij} = 0 \quad \forall\, i, j, \\ & \sum_{i} P^{ik} = \sum_{j} P^{kj} = 1 \quad \forall\, k, \end{aligned} \tag{9}$$

where $P^{ij} = \delta(\pi^i, j)$, $i, j \in [1, N]$, is a permutation matrix, and $S$ and $T$ are matrices introduced for the formula conversion. Formula (9) is a BLP, and the optimal permutation matrix $P^*$ obtained by solving it can be used to determine the optimal edit operations.

This method is tested on 135 similar molecules which have only 18 or fewer atoms in the Klotho Biochemical Compounds Declarative Database [57]. Ideally, pairwise distances of all these molecules are the same. Two MCS-based distance metrics are used as references. The GED computed with this method is more concentrated than the MCS-based distances. Furthermore, classification performance is examined with the "classifier ratio", which is the ratio of the GED between a sample graph and the correct prototype to the distance between the sample and the nearest incorrect prototype. This method leads to the lowest classifier ratio, which indicates the least ambiguous classification.

As demonstrated above, this method tends to reduce the level of ambiguity in graph recognition.
However, the complexity of BLP makes the computation of GED for large graphs difficult.

4.1.5 Method based on subgraph and supergraph

Concrete edit costs for GED are strongly application dependent and cannot be obtained in a general way, so the subgraph and supergraph based method [42] is proposed. It is a special kind of graph distance that approximates the edit distance and is totally independent of edit costs. This method is based on the conclusion that GED coincides with the MCS of two graphs under a certain cost function [20]. Let $\check{G}$ and $\hat{G}$ be an MCS and a minimum common supergraph of

$$G_1 = (V_1, E_1, \alpha_1) \quad \text{and} \quad G_2 = (V_2, E_2, \alpha_2).$$

The distance between $G_1$ and $G_2$ is defined by

$$d(G_1, G_2) = |\hat{G}| - |\check{G}|,$$

where $|\hat{G}|$ is the number of nodes in graph $\hat{G}$, and $|\check{G}|$ is defined similarly. A cost function $C$ is defined as a vector of non-negative real functions

$$(c_{nd}(v),\; c_{ni}(v),\; c_{ns}(v_1, v_2),\; c_{ed}(e),\; c_{ei}(e),\; c_{es}(e_1, e_2)),$$

where $v, v_1 \in V_1$, $e, e_1 \in E_1$, $v_2 \in V_2$, $e_2 \in E_2$, and the components represent, in order, the costs for node deletion, node insertion, node substitution, edge deletion, edge insertion and edge substitution. If the cost function $C$ is specified as

$$C = (c, c, c_{ns}, c, c, c_{es}),$$

where $c$ is a constant function such that

$$c_{ns}(v_1, v_2) > 2c \quad \text{and} \quad c_{es}(e_1, e_2) > 2c$$

for all $v_1 \in V_1$ and $v_2 \in V_2$ with $\alpha_1(v_1) \neq \alpha_2(v_2)$, the GED between $G_1$ and $G_2$ can be computed as $c \cdot d(G_1, G_2)$.

The construction of this method is simple, and the method does not rely on fundamental graph edit operations; that is to say, it is independent of cost functions.

The first four algorithms take different approaches to defining cost functions, and they have proved effective for classifying or clustering some specific images; they are therefore limited to specific data. The last method is less restricted and can be used for general attributed graphs; however, it relies on the search for an MCS and a minimum common supergraph, which is also difficult to implement in practice.

4.2 GED for non-attributed graphs

For non-attributed graphs, which carry only connectivity information, GED algorithms [31, 35] usually include two parts: conversion of graphs to strings and computation of the edit distance for strings [58–60]. In particular, a structural graph may be a tree. Although trees can be viewed as a special kind of graph, specific characteristics of trees suggest that posing the tree matching problem as a variant of graph matching is not the best approach.
In particular, both the tree isomorphism and the subtree isomorphism problems can be solved in polynomial time, which is more efficient than for general graphs. The similarity of labeled trees is compared in [61] by various methods, in which the definitions of cost functions are given in advance. In this paper, specific methods for the non-attributed tree matching problem are summarized. Tree edit distance (TED) can be obtained by searching for the maximum weight cliques [62, 63], or by embedding trees into a pattern space by constructing a super-tree [64]; these methods are presented separately from those for general graphs.

4.2.1 Tree edit distance

4.2.1.1 Maximum weight cliques based method

The edit distance of unordered trees still presents a computational bottleneck; therefore, the computation of unordered TED should be efficiently approximated. Bunke's idea of the equivalence of MCS and edit distance computation has been applied to the GED [42], and it can also be extended to the TED [62, 63, 65]. In these algorithms, there is a strong connection between the computation of the maximum common subtree and the TED, and searching for the maximum common subtree is transformed into finding a maximum weight clique, so the computation of TED is converted into a series of maximum weight clique problems, as illustrated in Fig. 11.

As with graphs, the data tree needs to be converted into the model tree. Under the constraint [66] that the cost of deleting and reinserting the same element with a different label is not greater than the cost of relabeling it, node substitution can be replaced by node removal and insertion on the data tree. The cost of node insertion on the data tree is dual to that of node removal on the model tree, so the operations to be performed are further reduced to node removal on both trees, which makes the optimal matching completely determined by the subset of nodes left after the minimum edit sequence.
The edit distance problem is thus equivalent to a particular substructure isomorphism problem.

Given two directed acyclic graphs (DAGs) $t$ and $t'$ to be matched, the transitive closures $\ell(t)$ and $\ell(t')$ are calculated. A tree $\check{t}$ is an obtainable subtree of $\ell(t)$ if and only if $\check{t}$ is generated from the tree $t$ with a sequence of node removal operations. The minimum cost edited tree isomorphism between $t$ and $t'$ is a maximum common obtainable subtree of $\ell(t)$ and $\ell(t')$.

Then a maximum common obtainable subtree of the two trees $\ell(t)$ and $\ell(t')$ is searched to induce the optimal matches, which can be transformed into computing the maximum weight clique. It is a quadratic programming problem:

• The objective function is:

$$\min_{x} x^{T} C x, \quad \text{s.t. } x \in \Delta, \quad \text{where } C = (c_{ij})_{i,j \in V}; \tag{10}$$

least cost between pairwise strings is determined with Dijkstra's algorithm.

Based on the conclusion that the adjacency matrix is associated with a Markov chain, the transition matrix of the Markov chain is the normalized adjacency matrix of a graph $G = (V, E)$, where $V = \{1, 2, \ldots, N\}$. Its leading eigenvector gives the node sequence of the steady state random walk on the graph, so that a graph is converted into a string and the global structural properties of the graph are characterized. The procedure is as follows:

1. The adjacency matrix $A$ of the graph is defined;
2. A transition probability matrix $P$ is defined as:

$$P(i, j) = A(i, j) \Big/ \sum_{j \in V} A(i, j); \tag{12}$$

3. The matrix $P$ is converted into a symmetric form for an eigenvector expansion. The diagonal degree matrix $D$ is computed, and its elements are

$$D(i, j) = \begin{cases} 1/d(i) = 1 \Big/ \sum_{j=1}^{|V|} A(i, j), & i = j \\ 0, & \text{otherwise.} \end{cases} \tag{13}$$

The symmetric version of the matrix $P$ is $W = D^{1/2} A D^{1/2}$;
4. The spectral analysis for the symmetric transition matrix $W$ is

$$W = \sum_{i=1}^{|V|} \lambda_i \phi_i \phi_i^{T}, \tag{14}$$

where $\lambda_i$ is an eigenvalue of $W$ and $\phi_i$ is the corresponding eigenvector of unit length;
5. The leading eigenvector $\phi^{*}$ gives the sequence of nodes in an iterative procedure, and at each iteration $k$, a list $L_k$ denotes the nodes visited:

• In the first step, let $L_1 = j_1$, where $j_1 = \arg\max_{j} \phi^{*}(j)$, and the neighbors of $j_1$ form the set $N_{j_1} = \{m \mid (j_1, m) \in E\}$;
• In the second step, node $j_2$ satisfying $j_2 = \arg\max_{j \in N_{j_1}} \phi^{*}(j)$ is found to form $L_2 = \{j_1, j_2\}$, and the set of neighbors $N_{j_2} = \{m \mid (j_2, m) \in E \wedge m \neq j_1\}$ of $j_2$ is determined;
• In the kth step, the node visited is $j_k$ and the list of nodes visited is $L_k$. The set $N_{j_k} = \{m \mid (j_k, m) \in E\}$ consists of the neighbors of $j_k$, and then in the (k + 1)th step, node $j_{k+1}$ satisfying $j_{k+1} = \arg\max_{j \in C_k} \phi^{*}(j)$ is chosen, where $C_k = \{j \mid j \in N_{j_k} \wedge j \notin L_k\}$;
• The step number is updated, $k = k + 1$;
• The third and fourth steps are repeated until every node in the graph is traversed.

Given the model graph $G_M = (V_M, E_M)$ and the data graph $G_D = (V_D, E_D)$ whose GED is to be computed, the strings of these two graphs are determined by the procedure above. The string of the model graph is denoted by

$$X = \{x_1, x_2, \ldots, x_{|V_M|}\}$$

and the string of the data graph is denoted by

$$Y = \{y_1, y_2, \ldots, y_{|V_D|}\}.$$

A lattice is constructed whose rows are indexed using the data-graph string and whose columns are indexed using the model-graph string. An edit path can be found to transform the string of the data graph into the string of the model graph; it is denoted by

$$\Gamma = \langle \gamma_1, \gamma_2, \ldots, \gamma_k, \ldots, \gamma_L \rangle$$

and its elements are Cartesian pairs $\gamma_k \in (V_D \cup \epsilon) \times (V_M \cup \epsilon)$, where $\epsilon$ denotes the empty set. The path is constrained to be connected on the edit lattice. A diagonal transition corresponds to the match of an edge of the data graph to an edge of the model graph. A horizontal transition corresponds to the case where the traversed nodes of the model graph do not have matched nodes in the data graph. Similarly, when a vertical transition is made, the traversed nodes of the data graph do not have matched nodes in the model graph.
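The seriation procedure described above (steps 1 to 5) can be sketched in a few lines. This is a minimal illustration under several assumptions that are not taken from [35]: the graph is connected and given as a symmetric 0/1 adjacency matrix, the leading eigenvector is approximated by power iteration on the shifted matrix (W + I)/2 so that bipartite graphs do not oscillate, and when the current node has no unvisited neighbor the walk jumps to the unvisited node with the largest eigenvector component. The names `leading_eigenvector` and `seriate` are hypothetical.

```python
import math


def leading_eigenvector(adj, iterations=1000):
    """Approximate the leading eigenvector of W(i, j) = A(i, j) / sqrt(d_i d_j).

    Assumption: the graph is connected, so every degree is positive.
    Power iteration runs on (W + I) / 2, which has the same eigenvectors.
    """
    n = len(adj)
    deg = [sum(row) for row in adj]
    w = [
        [adj[i][j] / math.sqrt(deg[i] * deg[j]) if adj[i][j] else 0.0
         for j in range(n)]
        for i in range(n)
    ]
    phi = [1.0] * n
    for _ in range(iterations):
        nxt = [0.5 * (phi[i] + sum(w[i][j] * phi[j] for j in range(n)))
               for i in range(n)]
        norm = math.sqrt(sum(v * v for v in nxt))
        phi = [v / norm for v in nxt]
    return phi


def seriate(adj):
    """Convert a graph into a node string guided by the leading eigenvector."""
    n = len(adj)
    phi = leading_eigenvector(adj)
    current = max(range(n), key=lambda j: phi[j])  # first step: global maximum
    order = [current]
    visited = {current}
    while len(order) < n:
        # Unvisited neighbors of the current node (the set C_k in the text).
        candidates = [j for j in range(n) if adj[current][j] and j not in visited]
        if not candidates:
            # Assumed fallback, not specified in the text: restart from the
            # best unvisited node.
            candidates = [j for j in range(n) if j not in visited]
        current = max(candidates, key=lambda j: phi[j])
        order.append(current)
        visited.add(current)
    return order


# Star centered on node 0 with a tail 0-3-4.
edges = [(0, 1), (0, 2), (0, 3), (3, 4)]
adj = [[0] * 5 for _ in range(5)]
for a, b in edges:
    adj[a][b] = adj[b][a] = 1
order = seriate(adj)
```

For this example the walk starts at node 0, which has the highest degree, then moves to node 3 and node 4; the two remaining leaves have equal eigenvector components, so their relative order depends on tie-breaking.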
The cost of the edit path is the sum of the costs for the elementary edit operations:

$$C(\Gamma) = \sum_{\gamma_k \in \Gamma} \eta(\gamma_k \to \gamma_{k+1}), \tag{15}$$

where $\eta(\gamma_k \to \gamma_{k+1}) = -\ln P(\gamma_k \to \gamma_{k+1})$ is the cost of the transition from state $\gamma_k = (a, b)$ to $\gamma_{k+1} = (c, d)$. The probability $P(\gamma_k \to \gamma_{k+1})$ is defined as:

$$P(\gamma_k \to \gamma_{k+1}) = \beta_{a,b}\, \beta_{c,d}\, R_D(a, c)\, R_M(b, d),$$

where $\beta_{a,b}$ and $\beta_{c,d}$ are the morphological affinities,

$$R_D(a, c) = \begin{cases} P_D(a, c) & \text{if } (a, c) \in E_D \\ \dfrac{2\,\big|\,|V_M| - |V_D|\,\big|}{|V_M| + |V_D|} & \text{if } a = \epsilon \text{ or } c = \epsilon \\ 0 & \text{otherwise,} \end{cases}$$

$P_D$ is the transition probability matrix of the data graph $G_D$, and $R_M(b, d)$ is defined similarly to $R_D(a, c)$. The optimal edit path is the one with the minimum cost, that is, $\Gamma^{*} = \arg\min_{\Gamma} C(\Gamma)$. So, the problem of computing GED is posed as finding the shortest path through the lattice by Dijkstra's algorithm, and the GED between these two graphs is $C(\Gamma^{*})$.

This is a relatively preliminary work on applying the eigenstructure of the graph adjacency matrix to graph matching, and it is later improved into a method in the probability framework.

4.2.2.2 MAP based method

The idea of the MAP estimation based algorithm [31, 59] is developed from the Dijkstra's algorithm based method [35]. They differ in the establishment of strings, in the matching of strings, and in the features to which edit costs are related. These differences are presented in Table 3.

Table 3 Comparison of the MAP based algorithm and the Dijkstra's algorithm based method

| Methods | MAP based algorithm | Dijkstra's algorithm based method |
|---|---|---|
| Establishment of the serial ordering | Using the leading eigenvector of the graph adjacency matrix | Using the leading eigenvector of the normalized graph adjacency matrix |
| String matching | A MAP alignment of the strings for pairwise graphs | Searching for the optimal edit sequence using Dijkstra's algorithm |
| Edit costs | Related to the edge density of two graphs | Related to the degree and adjacency of nodes |

In the MAP based algorithm, graphs are converted into strings with the graph spectral method according to the leading eigenvectors of their adjacency matrices. As in the Dijkstra's algorithm based method, GED is the cost of the least expensive edit path $\Gamma^{*}$, but the path $\Gamma^{*}$ is found based on the idea of Levenshtein distance in a probability framework. The cost for the elementary edit operations is defined as:

$$\eta(\gamma_k \to \gamma_{k+1}) = -\ln P(\gamma_k \mid \phi_X^{*}(x_j), \phi_Y^{*}(y_i)) - \ln P(\gamma_{k+1} \mid \phi_X^{*}(x_{j+1}), \phi_Y^{*}(y_{i+1})) - \ln R_{k,k+1}, \tag{16}$$

where the edge compatibility coefficient $R_{k,k+1}$ is

$$R_{k,k+1} = \frac{P(\gamma_k, \gamma_{k+1})}{P(\gamma_k)\, P(\gamma_{k+1})} = \begin{cases} \rho_M\, \rho_D & \text{if } \gamma_k \to \gamma_{k+1} \text{ is a diagonal transition on the edit lattice} \\ \ldots & \text{if } y_i = \epsilon \text{ or } y_{i+1} = \epsilon \text{ and } (x_j, x_{j+1}) \in E_M \\ 1 & \text{if } y_i = \epsilon \text{ or } y_{i+1} = \epsilon \text{ and } x_j = \epsilon \text{ or } x_{j+1} = \epsilon \end{cases}$$

and

$$P(\gamma_k \mid \phi_X^{*}(x_j), \phi_Y^{*}(y_i)) = \begin{cases} \dfrac{1}{\sqrt{2\pi}\,\sigma} \exp\left\{ -\dfrac{1}{2\sigma^2} \left( \phi_X^{*}(x_j) - \phi_Y^{*}(y_i) \right)^2 \right\} & \text{if } x_j \neq \epsilon \text{ and } y_i \neq \epsilon \\ \alpha & \text{if } x_j = \epsilon \text{ or } y_i = \epsilon. \end{cases}$$

The GED matrix is computed with this method for images in three sequences: the CMU-VASC sequence [67], the INRIA MOVI sequence [68], and a sequence of views of a model Swiss chalet. The result is shown in Fig. 12. Each element of the matrix specifies the color of a rectilinear patch in Fig. 12, and a deeper color corresponds to a smaller distance. All patches constitute nine blocks. Coordinates 1–10, 11–20 and 21–30 correspond to the CMU-VASC sequence, the INRIA MOVI sequence and the Swiss chalet sequence, respectively; therefore, blocks along the diagonal present within-class distances and the other blocks present between-class distances. In each block, the row and column indexes increase monotonically according to the viewing angle of each sequence. The color of the diagonal blocks is deeper than that of the other areas, so the GED within a class is, on the whole, lower than that between classes.

Compared with the Dijkstra's algorithm based method, this method has the following two advantages: when graphs are converted into strings, the adjacency matrix does not need normalization, which decreases the computational complexity; when strings are matched, the computation of the minimal edit distance is cast in a probabilistic setting, so that statistical models can be used for the cost definition.

4.2.2.3 String kernel based method

String kernels can be used to measure the similarity of seriated graphs, which makes the computation of GED more efficient. In the string kernel based algorithm [58], graphs are seriated into strings with semidefinite programming (SDP), whose steps are given below.

Fig. 12 GED matrix in [31]

• Let $B = \Omega^{1/2} A \Omega^{-1/2}$ and $y = \Omega^{1/2} x^{*}$, where

$$\Omega = \begin{bmatrix} 1 & 0 & \cdots & 0 & 0 \\ 0 & 2 & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 2 & 0 \\ 0 & 0 & \cdots & 0 & 1 \end{bmatrix},$$

$A$ is the adjacency matrix of the graph to be converted into a string, and $x^{*}$ denotes the vector to be solved for. With $Y^{*} = y y^{T}$, the SDP is

$$\arg\min_{Y^{*}} \operatorname{trace}(B Y^{*}) \quad \text{such that} \quad \operatorname{trace}(E Y^{*}) = 1,$$

where $E$ is the unit matrix. The matrix $Y^{*}$ can be solved with the method in [69] so as to obtain $x^{*}$.
• Similar to the idea of converting a graph into a string with the leading eigenvector in [35], the graph is converted into a string according to the vector $x^{*}$.

With the strings obtained, a kernel feature records the number of times a substring occurs in a string, weighted by the length of the substring. The elements of the kernel feature vector of a string correspond to substrings. The inner product of the kernel feature vectors of two strings is called the string kernel function; it gives the sum of the frequencies of all common substrings, weighted by their lengths. The string kernel function rests on the idea that two strings are more similar if they share more common substrings.

The COIL image database [70] is used to evaluate this method. Six objects are selected from the database, and each object has 20 different views. The distance between images belonging to the same class is much smaller than that between images of different classes, and images corresponding to different objects can be clustered well.

The SDP overcomes the local optimality of the graph spectral method used in the Dijkstra's algorithm based and MAP based methods, and the string kernel function is more efficient than aligning strings with Dijkstra's algorithm.

4.2.2.4 Subgraph based method

Because of the potentially exponential complexity of the general inexact graph matching problem, it is decomposed into a series of simpler subgraph matching problems [60]. A graph $G = (V, E)$ is partitioned into non-overlapping super cliques according to the Fiedler vector:

• The list $C = \{j_1, j_2, \ldots, j_{|V|}\}$ is the node rank-order, determined under the conditions that the permutation $\pi$ satisfies $\pi(j_1) < \pi(j_2) < \cdots < \pi(j_{|V|})$ and the components of the Fiedler vector satisfy $x_{j_1} > x_{j_2} > \cdots > x_{j_{|V|}}$. The weight assigned to node $i \in V$ is $w_i = \mathrm{rank}(\pi(i))$, and the significance score $s_i$ of node $i$ being a center node is computed from the degree and weight of node $i$;
• The list $C$ is traversed until a node $k$ is found which is neither in the perimeter nor has a score $s_k$ exceeding those of its neighbors. Node $k$ and its neighborhood $N_k$ constitute a super clique and are deleted from the list, that is, $C = C - (\{k\} \cup N_k)$. This procedure is repeated until $C = \emptyset$, at which point the non-overlapping neighborhoods of the graph $G$ have been located.

With super cliques in hand, a graph $G'$ containing the super cliques of the original graph $G$ is constructed, in which the nodes denote the super cliques and the edges indicate whether these super cliques are connected in the original graph.
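The partitioning into super cliques can be sketched in Python. This is a simplified illustration under stated assumptions, not the procedure of [60]: it assumes an unweighted, undirected graph without self-loops given as a NumPy adjacency matrix, takes the Fiedler vector as the eigenvector of the second-smallest eigenvalue of the Laplacian $D - A$, and traverses the rank-order list greedily, omitting the significance score and the perimeter test. The function names `fiedler_vector`, `super_cliques` and `clique_graph` are hypothetical.

```python
import numpy as np


def fiedler_vector(adj):
    """Eigenvector of the second-smallest eigenvalue of the Laplacian L = D - A."""
    adj = np.asarray(adj, dtype=float)
    laplacian = np.diag(adj.sum(axis=1)) - adj
    _, vecs = np.linalg.eigh(laplacian)  # eigenvalues come back in ascending order
    return vecs[:, 1]


def super_cliques(adj):
    """Greedy, simplified partition into non-overlapping neighbourhoods.

    Assumption: the first remaining node in the rank-order list is always
    accepted as a center (no significance score, no perimeter test).
    Returns a list of (center, set_of_member_nodes) pairs.
    """
    adj = np.asarray(adj)
    x = fiedler_vector(adj)
    order = [int(j) for j in np.argsort(-x)]  # list C: decreasing Fiedler component
    remaining = set(order)
    cliques = []
    for k in order:
        if k not in remaining:
            continue  # already absorbed into an earlier super clique
        neighbours = {int(j) for j in np.flatnonzero(adj[k])} & remaining
        clique = {k} | neighbours
        cliques.append((k, clique))
        remaining -= clique  # C = C - ({k} ∪ N_k)
    return cliques


def clique_graph(adj, cliques):
    """Adjacency matrix of G': two super cliques are linked if any edge joins them in G."""
    adj = np.asarray(adj)
    n = len(cliques)
    g = np.zeros((n, n), dtype=int)
    for a in range(n):
        for b in range(a + 1, n):
            rows, cols = sorted(cliques[a][1]), sorted(cliques[b][1])
            if adj[np.ix_(rows, cols)].any():
                g[a, b] = g[b, a] = 1
    return g


# Two triangles (0-1-2 and 3-4-5) joined by the bridge edge 2-3.
A = np.zeros((6, 6), dtype=int)
for u, v in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    A[u, v] = A[v, u] = 1

cliques = super_cliques(A)
G_prime = clique_graph(A, cliques)
```

Because the sign of an eigenvector is arbitrary, the traversal order, and hence the particular super cliques found, may be reversed between runs or platforms; the partition is nevertheless non-overlapping and covers every node.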
Such graphs are matched on the basis of the matching of the super clique sets, that is, super clique-to-super clique matching, which is computed by converting the super cliques into strings based on the cyclic permutations of the peripheral nodes about the center nodes and then taking the Levenshtein distance between the strings.

This method partitions a graph into subgraphs, and therefore the process may be cast into a hierarchical framework and is suitable for parallel computation.

Trees, as a special kind of graph, have some properties superior to those of general graphs, and TED can be computed without the definition of cost functions; it has been applied to shape classification [71] with the shock tree as a structural representation of 2D shape. For general graphs, however, the key issue of GED algorithms is still the definition of cost functions. Each method defines cost functions in a task-specific way from heuristics of the problem domain in a trial-and-error fashion, and further research is still needed to derive cost functions in a general way.

5 Conclusion

Graph edit distance is a flexible error-tolerant mechanism to measure the distance between two graphs, and it has been widely applied to pattern recognition and image retrieval. Research on GED is surveyed in this paper. Existing GED algorithms are categorized and presented in detail, and their advantages and disadvantages are uncovered by comparing them experimentally and theoretically. Although the research has continued for several decades and yielded substantial results, there are few robust algorithms suitable for all kinds of graphs, and several problems deserve future research.

1. In the computation of GED, how to compare the similarity of corresponding nodes and edges in two graphs is still not solved well. For attributed graphs, the attributes of nodes and edges can be used for comparing the similarity, but which attributes should be adopted for computing the distance remains an open problem. For non-attributed graphs, the connectivity of the graph can be used for comparing the similarity, but how to characterize the connectivity to achieve a better evaluation of similarity remains unsolved.
2. The definition of costs for edit operations is also important for GED, as it directly affects the rationality of GED.
Existing research on GED mainly focuses on this problem, but each approach is applicable only to limited applications or under some constraints, so cost definitions that can be applied extensively and easily are needed.
3. Many ways of searching for the least expensive edit sequence have been used previously. The search strategy should be consistent with the method of similarity comparison and the definition of edit costs, rather than simply being the best one in theory. An appropriate search strategy for the minimum-cost edit sequence should therefore be studied to improve both the efficiency and accuracy of GED algorithms.

Acknowledgments We thank the anonymous reviewers for their helpful comments and suggestions. This research has been partially supported by the National Science Foundation of China (60771068, 60702061, 60832005), the Open-End Fund of the National Laboratory of Pattern Recognition in China and the National Laboratory of Automatic Target Recognition, Shenzhen University, China, and the Program for Changjiang Scholars and Innovative Research Team in University of China (IRT0645).

References

1. Umeyama S (1988) An eigendecomposition approach to weighted graph matching problems. IEEE Trans Pattern Anal Mach Intell 10(5):695–703
2. Bunke H (2000) Recent developments in graph matching. In: Proceedings of IEEE international conference on pattern recognition, Barcelona, pp 117–124
3. Caelli T, Kosinov S (2004) An eigenspace projection clustering method for inexact graph matching. IEEE Trans Pattern Anal Mach Intell 26(4):515–519
4. Cross ADJ, Wilson RC, Hancock ER (1997) Inexact graph matching using genetic search. Pattern Recognit 30(7):953–970
5. Wagner RA, Fischer MJ (1974) The string-to-string correction problem. J ACM 21(1):168–173
6. Pavlidis JRT (1994) A shape analysis model with applications to a character recognition system. IEEE Trans Pattern Anal Mach Intell 16(4):393–404
7. Wang Y-K, Fan K-C, Horng J-T (1997) Genetic-based search for error-correcting graph isomorphism. IEEE Trans Syst Man Cybern B Cybern 27(4):588–597
8. Sebastian TB, Klein P, Kimia BB (2004) Recognition of shapes by editing their shock graphs. IEEE Trans Pattern Anal Mach Intell 26(5):550–571
9. He L, Han CY, Wee WG (2006) Object recognition and recovery by skeleton graph matching. In: Proceedings of IEEE international conference on multimedia and expo, Toronto, pp 993–996
10. Shearer K, Bunke H, Venkatesh S (2001) Video indexing and similarity retrieval by largest common subgraph detection using decision trees. Pattern Recognit 34(5):1075–1091
11. Lee J (2006) A graph-based approach for modeling and indexing video data. In: Proceedings of IEEE international symposium on multimedia, San Diego, pp 348–355
12. Tao D, Tang X (2004) Nonparametric discriminant analysis in relevance feedback for content-based image retrieval. In: Proceedings of IEEE international conference on pattern recognition, Cambridge, pp 1013–1016
13. Tao D, Tang X, Li X et al (2006) Kernel direct biased discriminant analysis: a new content-based image retrieval relevance feedback algorithm. IEEE Trans Multimedia 8(4):716–727
14. Tao D, Tang X, Li X (2008) Which components are important for interactive image searching? IEEE Trans Circuits Syst Video Technol 18(1):1–11
15. Christmas WJ, Kittler J, Petrou M (1995) Structural matching in computer vision using probabilistic relaxation. IEEE Trans Pattern Anal Mach Intell 17(8):749–764
16. Gao X, Zhong J, Tao D et al (2008) Local face sketch synthesis learning. Neurocomputing 71(10–12):1921–1930
17. Sanfeliu A, Fu KS (1983) A distance measure between attributed relational graphs for pattern recognition. IEEE Trans Syst Man Cybern 13(3):353–362
18. Messmer BT, Bunke H (1994) Efficient error-tolerant subgraph isomorphism detection. Shape Struct Pattern Recognit:231–240
19. Messmer BT, Bunke H (1998) A new algorithm for error-tolerant subgraph isomorphism detection. IEEE Trans Pattern Anal Mach Intell 20(5):493–504
20. Bunke H (1997) On a relation between graph edit distance and maximum common subgraph. Pattern Recognit Lett 18(8):689–694
21. Bunke H (1999) Error correcting graph matching: on the influence of the underlying cost function. IEEE Trans Pattern Anal Mach Intell 21(9):917–922