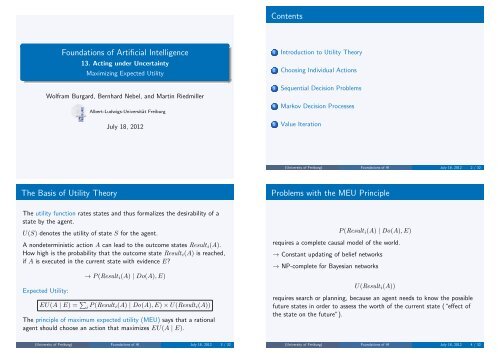

13. Acting under Uncertainty Maximizing Expected Utility

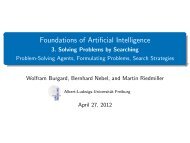

(University of Freiburg) Foundations of AI, July 18, 2012

The Axioms of Utility Theory (1)

Justification of the MEU principle, i.e., maximization of the average utility.

Scenario = lottery $L$. Possible outcomes = possible prizes. The outcome is determined by chance:

$$L = [p_1, C_1;\ p_2, C_2;\ \ldots;\ p_n, C_n]$$

Example: a lottery $L$ with two outcomes, $C_1$ and $C_2$:

$$L = [p, C_1;\ 1 - p, C_2]$$

Preference between lotteries:

- $L_1 \succ L_2$: the agent prefers $L_1$ over $L_2$.
- $L_1 \sim L_2$: the agent is indifferent between $L_1$ and $L_2$.
- $L_1 \succsim L_2$: the agent prefers $L_1$ or is indifferent between $L_1$ and $L_2$.

The Axioms of Utility Theory (2)

Given lotteries $A$, $B$, $C$:

Orderability

$$(A \succ B) \lor (B \succ A) \lor (A \sim B)$$

An agent should know what it wants: it must either prefer one of the two lotteries or be indifferent between them.

Transitivity

$$(A \succ B) \land (B \succ C) \Rightarrow (A \succ C)$$

Violating transitivity causes irrational behavior. Suppose $A \succ B \succ C \succ A$. An agent that holds $A$ would pay to exchange it for $C$, then pay to exchange $C$ for $B$, and then pay to exchange $B$ for $A$. → The agent is back where it started and loses money this way.

The Axioms of Utility Theory (3)

Continuity

$$A \succ B \succ C \Rightarrow \exists p\ [p, A;\ 1 - p, C] \sim B$$

If some lottery $B$ is between $A$ and $C$ in preference, then there is some probability $p$ for which the agent is indifferent between getting $B$ for sure and the lottery that yields $A$ with probability $p$ and $C$ with probability $1 - p$.

Substitutability

$$A \sim B \Rightarrow [p, A;\ 1 - p, C] \sim [p, B;\ 1 - p, C]$$

If an agent is indifferent between two lotteries $A$ and $B$, then the agent is indifferent between two more complex lotteries that are the same except that $B$ is substituted for $A$ in one of them.

The Axioms of Utility Theory (4)

Monotonicity

$$A \succ B \Rightarrow \bigl(p \geq q \Leftrightarrow [p, A;\ 1 - p, B] \succsim [q, A;\ 1 - q, B]\bigr)$$

If an agent prefers the outcome $A$, then it must also prefer the lottery that has a higher probability for $A$.

Decomposability

$$[p, A;\ 1 - p, [q, B;\ 1 - q, C]] \sim [p, A;\ (1 - p)q, B;\ (1 - p)(1 - q), C]$$

Compound lotteries can be reduced to simpler ones using the laws of probability. This has been called the "no fun in gambling" rule: two consecutive gambles can be reduced to a single equivalent lottery.
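The decomposability axiom can be illustrated with a small sketch. The representation is an assumption made for this example only: a lottery is a list of `(probability, outcome)` pairs, and an outcome that is itself a list is a nested lottery. The helper name `flatten` is hypothetical.

```python
from fractions import Fraction


def flatten(lottery):
    """Reduce a (possibly compound) lottery to a simple one.

    Returns a dict mapping each prize to its total probability.
    """
    simple = {}
    for p, outcome in lottery:
        if isinstance(outcome, list):
            # Nested lottery: scale its prize probabilities by p.
            for prize, q in flatten(outcome).items():
                simple[prize] = simple.get(prize, 0) + p * q
        else:
            simple[outcome] = simple.get(outcome, 0) + p
    return simple


# [p, A; 1 - p, [q, B; 1 - q, C]] with p = 1/2 and q = 1/4
p, q = Fraction(1, 2), Fraction(1, 4)
compound = [(p, "A"), (1 - p, [(q, "B"), (1 - q, "C")])]
simple = flatten(compound)
print(simple)
```

Exact fractions are used so that the result can be compared term by term with $[p, A;\ (1 - p)q, B;\ (1 - p)(1 - q), C]$ without rounding noise.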

Utility Functions and Axioms

The axioms only make statements about preferences. The existence of a utility function follows from the axioms!

Utility Principle: If an agent's preferences obey the axioms, then there exists a function $U : S \to \mathbb{R}$ with

$$U(A) > U(B) \Leftrightarrow A \succ B$$

$$U(A) = U(B) \Leftrightarrow A \sim B$$

Maximum Expected Utility (MEU) Principle:

$$U([p_1, S_1;\ \ldots;\ p_n, S_n]) = \sum_i p_i\, U(S_i)$$

How do we design utility functions that cause the agent to act as desired?

Assessing Utilities

The scale of a utility function can be chosen arbitrarily. We can therefore define a "normalized" utility:

- "Best possible prize": $U(S) = u_{\max} = 1$
- "Worst catastrophe": $U(S) = u_{\min} = 0$

Given a utility scale between $u_{\min}$ and $u_{\max}$, we can assess the utility of any particular outcome $S$ by asking the agent to choose between $S$ and a standard lottery $[p, u_{\max};\ 1 - p, u_{\min}]$. We adjust $p$ until they are equally preferred. Then $p$ is the utility of $S$. This is done for each outcome $S$ to determine $U(S)$.

Possible Utility Functions

From economic models: the value of money.

[Figure: utility $U$ as a function of money (\$). (a) Left: utility from empirical data. (b) Right: typical utility function over the full range.]

Sequential Decision Problems (1)

[Figure: the 4 × 3 grid world with columns 1 to 4 and rows 1 to 3, a START square, and two terminal squares marked +1 and −1.]

Beginning in the start state, the agent must choose an action at each time step.

The interaction with the environment terminates if the agent reaches one of the goal states (4,3) (reward of +1) or (4,2) (reward of −1). Each other location has a reward of −0.04.

In each location the available actions are Up, Down, Left, Right.
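Returning to the MEU principle and the standard-lottery procedure described above, the following is a minimal sketch of both. The utility table `U`, the outcome name `"S"`, and the helper names are hypothetical, and the agent's answers are simulated by comparing expected utilities, which is an assumption made purely so the example can run.

```python
def expected_utility(lottery, U):
    """U([p1, S1; ...; pn, Sn]) = sum_i p_i * U(S_i)."""
    return sum(p * U[s] for p, s in lottery)


def standard_lottery(p):
    """The standard lottery [p, best; 1 - p, worst]."""
    return [(p, "best"), (1 - p, "worst")]


def assess_utility(prefers_lottery, tol=1e-6):
    """Adjust p by bisection until the agent is (nearly) indifferent."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        p = (lo + hi) / 2
        if prefers_lottery(p):
            hi = p  # lottery too attractive: lower p
        else:
            lo = p  # sure outcome at least as good: raise p
    return (lo + hi) / 2


# Hypothetical normalized utilities: u_max = 1, u_min = 0.
U = {"best": 1.0, "worst": 0.0, "S": 0.7}


def simulated_agent(p):
    """Stand-in for asking a real agent: does it prefer the lottery to S?"""
    return expected_utility(standard_lottery(p), U) > U["S"]


p_indifferent = assess_utility(simulated_agent)
print(round(p_indifferent, 3))
```

The recovered indifference probability approximates the utility of `"S"`, which is the point of the procedure: with a normalized scale, $p$ itself is the utility.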

Sequential Decision Problems (2)

Deterministic version: All actions always lead to the next square in the selected direction, except that moving into a wall results in no change in position.

Stochastic version: Each action achieves the intended effect with probability 0.8, but the rest of the time, the agent moves at right angles to the intended direction.

[Figure: the intended direction is taken with probability 0.8, each of the two perpendicular directions with probability 0.1.]

Markov Decision Problem (MDP)

Given a set of states in an accessible, stochastic environment, an MDP is defined by

- Initial state $S_0$
- Transition model $T(s, a, s')$
- Reward function $R(s)$

Transition model: $T(s, a, s')$ is the probability that state $s'$ is reached if action $a$ is executed in state $s$.

Policy: Complete mapping $\pi$ that specifies for each state $s$ which action $\pi(s)$ to take.

Wanted: The optimal policy $\pi^*$ is the policy that maximizes the expected utility.

Optimal Policies (1)

Given the optimal policy, the agent uses its current percept, which tells it its current state $s$. It then executes the action $\pi^*(s)$.

We obtain a simple reflex agent that is computed from the information used for a utility-based agent.

Optimal policy for the stochastic MDP with $R(s) = -0.04$:

[Figure: the 4 × 3 grid world with the optimal action drawn in each non-terminal square.]

Optimal Policies (2)

The optimal policy changes with the choice of transition costs $R(s)$.

How to compute optimal policies?
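The stochastic transition model described above can be sketched in a few lines. All names here (`T`, `step`, `MOVES`, `SIDEWAYS`) are hypothetical, and placing the wall at square (2, 2) is an assumption based on the standard 4 × 3 world, since the original figure did not survive extraction. `T(s, a)` returns a dictionary from successor states to probabilities, i.e. the values $T(s, a, s')$ for all $s'$ with nonzero probability.

```python
COLS, ROWS = 4, 3
WALL = (2, 2)  # assumed obstacle square of the standard 4x3 world
MOVES = {"Up": (0, 1), "Down": (0, -1), "Left": (-1, 0), "Right": (1, 0)}
# The two directions at right angles to each intended direction.
SIDEWAYS = {"Up": ("Left", "Right"), "Down": ("Left", "Right"),
            "Left": ("Up", "Down"), "Right": ("Up", "Down")}


def step(s, direction):
    """Deterministic move; bumping into a wall or the border changes nothing."""
    dx, dy = MOVES[direction]
    nxt = (s[0] + dx, s[1] + dy)
    inside = 1 <= nxt[0] <= COLS and 1 <= nxt[1] <= ROWS
    return nxt if inside and nxt != WALL else s


def T(s, a):
    """Return {s': T(s, a, s')} for executing action a in state s."""
    left, right = SIDEWAYS[a]
    dist = {}
    for direction, p in ((a, 0.8), (left, 0.1), (right, 0.1)):
        s2 = step(s, direction)
        dist[s2] = dist.get(s2, 0.0) + p
    return dist


print(T((1, 1), "Up"))    # intended move plus the two sideways slips
print(T((1, 1), "Left"))  # most of the probability mass stays in (1, 1)
```

Merging outcomes that land in the same square is what produces coefficients such as 0.9 for staying in place when the agent pushes against a border.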

Finite and Infinite Horizon Problems

Performance of the agent is measured by the sum of rewards for the states visited.

To determine an optimal policy we will first calculate the utility of each state and then use the state utilities to select the optimal action for each state.

The result depends on whether we have a finite or infinite horizon problem.

Utility function for state sequences: $U_h([s_0, s_1, \ldots, s_n])$

Finite horizon: $U_h([s_0, s_1, \ldots, s_{N+k}]) = U_h([s_0, s_1, \ldots, s_N])$ for all $k > 0$.

For finite horizon problems the optimal policy depends on the current state and the remaining steps to go. It therefore depends on time and is called nonstationary.

In infinite horizon problems the optimal policy only depends on the current state and therefore is stationary.

Assigning Utilities to State Sequences

For stationary systems there are just two coherent ways to assign utilities to state sequences.

Additive rewards:

$$U_h([s_0, s_1, s_2, \ldots]) = R(s_0) + R(s_1) + R(s_2) + \cdots$$

Discounted rewards:

$$U_h([s_0, s_1, s_2, \ldots]) = R(s_0) + \gamma R(s_1) + \gamma^2 R(s_2) + \cdots$$

The term $\gamma \in [0, 1)$ is called the discount factor.

With discounted rewards the utility of an infinite state sequence is always finite. The discount factor expresses that future rewards have less value than current rewards.

Utilities of States

The utility of a state depends on the utility of the state sequences that follow it.

Let $U^\pi(s)$ be the utility of a state under policy $\pi$.

Let $s_t$ be the state of the agent after executing $\pi$ for $t$ steps. Thus, the utility of $s$ under $\pi$ is

$$U^\pi(s) = E\left[\sum_{t=0}^{\infty} \gamma^t R(s_t) \;\middle|\; \pi,\ s_0 = s\right]$$

The true utility $U(s)$ of a state is $U^{\pi^*}(s)$.

$R(s)$ is the short-term reward for being in $s$, and $U(s)$ is the long-term total reward from $s$ onwards.

Example

The utilities of the states in our 4 × 3 world with $\gamma = 1$ and $R(s) = -0.04$ for non-terminal states (rows are $y = 3, 2, 1$; columns are $x = 1, \ldots, 4$):

| | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 3 | 0.812 | 0.868 | 0.918 | +1 |
| 2 | 0.762 | (wall) | 0.660 | −1 |
| 1 | 0.705 | 0.655 | 0.611 | 0.388 |
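The additive and discounted utilities of a state sequence defined above can be computed with one small function. This is only a sketch: the function name `sequence_utility` and the example reward sequences are illustrative choices, not part of the lecture.

```python
def sequence_utility(rewards, gamma=1.0):
    """U_h = R(s_0) + gamma * R(s_1) + gamma^2 * R(s_2) + ...

    With gamma = 1.0 this is the additive case.
    """
    return sum(gamma ** t * r for t, r in enumerate(rewards))


# Five non-terminal squares (reward -0.04 each) followed by the +1 terminal.
rewards = [-0.04] * 5 + [1.0]
additive = sequence_utility(rewards)             # gamma = 1
discounted = sequence_utility(rewards, gamma=0.9)

# A long run of reward 1 stays below the geometric-series bound 1 / (1 - gamma).
long_run = sequence_utility([1.0] * 1000, gamma=0.9)

print(additive, discounted, long_run)
```

The last value shows why discounting keeps infinite sequences finite: with rewards bounded by $R_{\max}$, the discounted sum can never exceed $R_{\max} / (1 - \gamma)$.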

Choosing Actions using the Maximum Expected Utility Principle

The agent simply chooses the action that maximizes the expected utility of the subsequent state:

$$\pi(s) = \operatorname*{argmax}_a \sum_{s'} T(s, a, s')\, U(s')$$

The utility of a state is the immediate reward for that state plus the expected discounted utility of the next state, assuming that the agent chooses the optimal action:

$$U(s) = R(s) + \gamma \max_a \sum_{s'} T(s, a, s')\, U(s')$$

Bellman-Equation

The equation

$$U(s) = R(s) + \gamma \max_a \sum_{s'} T(s, a, s')\, U(s')$$

is also called the Bellman-Equation.

Bellman-Equation: Example

In our 4 × 3 world the equation for the state (1,1) is

$$
\begin{aligned}
U(1,1) = -0.04 + \gamma \max\{\ & 0.8\,U(1,2) + 0.1\,U(2,1) + 0.1\,U(1,1), && \text{(Up)} \\
& 0.9\,U(1,1) + 0.1\,U(1,2), && \text{(Left)} \\
& 0.9\,U(1,1) + 0.1\,U(2,1), && \text{(Down)} \\
& 0.8\,U(2,1) + 0.1\,U(1,2) + 0.1\,U(1,1)\ \} && \text{(Right)} \\
= -0.04 + \gamma \max\{\ & 0.8 \cdot 0.762 + 0.1 \cdot 0.655 + 0.1 \cdot 0.705, && \text{(Up)} \\
& 0.9 \cdot 0.705 + 0.1 \cdot 0.762, && \text{(Left)} \\
& 0.9 \cdot 0.705 + 0.1 \cdot 0.655, && \text{(Down)} \\
& 0.8 \cdot 0.655 + 0.1 \cdot 0.762 + 0.1 \cdot 0.705\ \} && \text{(Right)} \\
= -0.04 + 1.0 \cdot (\ & 0.6096 + 0.0655 + 0.0705) && \text{(Up)} \\
= -0.04 + 0.7456 & = 0.7056
\end{aligned}
$$

→ Up is the optimal action in (1,1).

[Figure: the 4 × 3 world annotated with the state utilities.]

Value Iteration (1)

An algorithm to calculate an optimal strategy.

Basic idea: Calculate the utility of each state. Then use the state utilities to select an optimal action for each state.

A sequence of actions generates a branch in the tree of possible states (histories). A utility function on histories $U_h$ is separable iff there exists a function $f$ such that

$$U_h([s_0, s_1, \ldots, s_n]) = f(s_0, U_h([s_1, \ldots, s_n]))$$

The simplest form is an additive reward function $R$:

$$U_h([s_0, s_1, \ldots, s_n]) = R(s_0) + U_h([s_1, \ldots, s_n])$$

In the example, $R((4,3)) = +1$, $R((4,2)) = -1$, $R(\text{other}) = -1/25$.
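The Bellman backup for state (1,1) worked out above can be checked numerically. The sketch below hard-codes the three utilities and the four outcome distributions from the example; the variable names are illustrative.

```python
# Utilities of the states reachable from (1,1), taken from the example.
U = {(1, 1): 0.705, (1, 2): 0.762, (2, 1): 0.655}
R, gamma = -0.04, 1.0

# Successor distribution of each action in (1,1), as in the equation above.
outcomes = {
    "Up":    {(1, 2): 0.8, (2, 1): 0.1, (1, 1): 0.1},
    "Left":  {(1, 1): 0.9, (1, 2): 0.1},
    "Down":  {(1, 1): 0.9, (2, 1): 0.1},
    "Right": {(2, 1): 0.8, (1, 2): 0.1, (1, 1): 0.1},
}

# Expected utility of the successor state for each action.
q = {a: sum(p * U[s2] for s2, p in dist.items()) for a, dist in outcomes.items()}
best = max(q, key=q.get)
backup = R + gamma * q[best]

for action, value in q.items():
    print(f"{action:5s} {value:.4f}")
print(best, round(backup, 4))
```

Evaluating all four actions, rather than only Up, makes the maximization explicit: Left comes second, which is why the example keeps only the Up term in its last steps.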

Value Iteration (2)

If the utilities of the terminal states are known, then in certain cases we can reduce an $n$-step decision problem to the calculation of the utilities of the terminal states of the $(n - 1)$-step decision problem.

→ Iterative and efficient process

Problem: Typical problems contain cycles, which means the length of the histories is potentially infinite.

Solution: Use

$$U_{t+1}(s) = R(s) + \gamma \max_a \sum_{s'} T(s, a, s')\, U_t(s')$$

where $U_t(s)$ is the utility of state $s$ after $t$ iterations.

Remark: As $t \to \infty$, the utilities of the individual states converge to stable values.

Value Iteration (3)

The Bellman equation is the basis of value iteration.

Because of the max-operator the $n$ equations for the $n$ states are nonlinear.

We can apply an iterative approach in which we replace the equality by an assignment:

$$U(s) \leftarrow R(s) + \gamma \max_a \sum_{s'} T(s, a, s')\, U(s')$$

The Value Iteration Algorithm

    function VALUE-ITERATION(mdp, ε) returns a utility function
        inputs: mdp, an MDP with states S, actions A(s), transition model P(s′ | s, a),
                    rewards R(s), discount γ
                ε, the maximum error allowed in the utility of any state
        local variables: U, U′, vectors of utilities for states in S, initially zero
                         δ, the maximum change in the utility of any state in an iteration

        repeat
            U ← U′; δ ← 0
            for each state s in S do
                U′[s] ← R(s) + γ max_{a ∈ A(s)} Σ_{s′} P(s′ | s, a) U[s′]
                if |U′[s] − U[s]| > δ then δ ← |U′[s] − U[s]|
        until δ < ε(1 − γ)/γ
        return U

Figure 17.4 The value iteration algorithm for calculating utilities of states. The termination condition is from Equation (??).

Convergence of Value Iteration

Since the algorithm is iterative, we need a criterion to stop the process when we are close enough to the correct utility.

In principle we want to limit the policy loss $\|U^{\pi_t} - U\|$, which is the most the agent can lose by executing $\pi_t$ instead of the optimal policy.

It can be shown that value iteration converges and that

- if $\|U_{t+1} - U_t\| < \epsilon(1 - \gamma)/\gamma$ then $\|U_{t+1} - U\| < \epsilon$
- if $\|U_t - U\| < \epsilon$ then $\|U^{\pi_t} - U\| < 2\epsilon\gamma/(1 - \gamma)$

The value iteration algorithm yields the optimal policy $\pi^*$.
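As a rough illustration of the value iteration algorithm above, here is a minimal sketch for the 4 × 3 world. Several things are assumptions rather than part of the lecture: the wall at (2, 2), the helper names, the treatment of terminal states (their utility is fixed to their reward), and the stopping rule. Because the example uses $\gamma = 1$, the test $\delta < \epsilon(1 - \gamma)/\gamma$ would never fire, so the sketch stops when the largest change drops below a plain tolerance `tol` instead.

```python
# Hypothetical encoding of the 4x3 world; (2, 2) as the wall is an assumption.
COLS, ROWS, WALL = 4, 3, (2, 2)
TERMINALS = {(4, 3): 1.0, (4, 2): -1.0}
STATES = [(x, y) for x in range(1, COLS + 1) for y in range(1, ROWS + 1)
          if (x, y) != WALL]
MOVES = {"Up": (0, 1), "Down": (0, -1), "Left": (-1, 0), "Right": (1, 0)}
SIDEWAYS = {"Up": ("Left", "Right"), "Down": ("Left", "Right"),
            "Left": ("Up", "Down"), "Right": ("Up", "Down")}


def R(s):
    """Reward: +1 / -1 in the terminal squares, -0.04 everywhere else."""
    return TERMINALS.get(s, -0.04)


def step(s, direction):
    """Deterministic move; leaving the grid or hitting the wall changes nothing."""
    dx, dy = MOVES[direction]
    nxt = (s[0] + dx, s[1] + dy)
    return nxt if nxt in STATES else s


def q_value(s, a, U):
    """Expected utility of the successor: 0.8 intended, 0.1 for each side slip."""
    left, right = SIDEWAYS[a]
    return 0.8 * U[step(s, a)] + 0.1 * U[step(s, left)] + 0.1 * U[step(s, right)]


def value_iteration(gamma=1.0, tol=1e-6, max_iter=10_000):
    U = {s: 0.0 for s in STATES}
    for _ in range(max_iter):
        # Bellman update for every state, computed from the previous U.
        U_new = {s: R(s) if s in TERMINALS
                 else R(s) + gamma * max(q_value(s, a, U) for a in MOVES)
                 for s in STATES}
        delta = max(abs(U_new[s] - U[s]) for s in STATES)
        U = U_new
        if delta < tol:
            break
    return U


def greedy_policy(U):
    """Pick, in each non-terminal state, the action with maximal expected utility."""
    return {s: max(MOVES, key=lambda a: q_value(s, a, U))
            for s in STATES if s not in TERMINALS}


U = value_iteration()
pi = greedy_policy(U)
print(round(U[(1, 1)], 3), pi[(1, 1)])
```

For a discount $\gamma < 1$, `tol` could be set to $\epsilon(1 - \gamma)/\gamma$ to recover the termination condition of the pseudocode.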

Application Example

[Figure: left, utility estimates of the states (4,3), (3,3), (1,1), (3,1), (4,1) plotted against the number of iterations; right, max error and policy loss plotted against the number of iterations.]

In practice the policy often becomes optimal before the utility has converged.

Policy Iteration

Value iteration computes the optimal policy even at a stage when the utility function estimate has not yet converged.

If one action is better than all others, then the exact values of the states involved need not be known.

Policy iteration alternates the following two steps, beginning with an initial policy $\pi_0$:

- Policy evaluation: given a policy $\pi_t$, calculate $U_t = U^{\pi_t}$, the utility of each state if $\pi_t$ were executed.
- Policy improvement: calculate a new maximum expected utility policy $\pi_{t+1}$ according to

$$\pi_{t+1}(s) = \operatorname*{argmax}_a \sum_{s'} T(s, a, s')\, U_t(s')$$

The Policy Iteration Algorithm

    function POLICY-ITERATION(mdp) returns a policy
        inputs: mdp, an MDP with states S, actions A(s), transition model P(s′ | s, a)
        local variables: U, a vector of utilities for states in S, initially zero
                         π, a policy vector indexed by state, initially random

        repeat
            U ← POLICY-EVALUATION(π, U, mdp)
            unchanged? ← true
            for each state s in S do
                if max_{a ∈ A(s)} Σ_{s′} P(s′ | s, a) U[s′] > Σ_{s′} P(s′ | s, π[s]) U[s′] then do
                    π[s] ← argmax_{a ∈ A(s)} Σ_{s′} P(s′ | s, a) U[s′]
                    unchanged? ← false
        until unchanged?
        return π

Figure 17.7 The policy iteration algorithm for calculating an optimal policy.

Summary

- Rational agents can be developed on the basis of probability theory and utility theory.
- Agents that make decisions according to the axioms of utility theory possess a utility function.
- Sequential problems in uncertain environments (MDPs) can be solved by calculating a policy.
- Value iteration is a process for calculating optimal policies.
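The policy iteration algorithm discussed above can be sketched for the same 4 × 3 world. The following choices are assumptions made for this illustration and are not taken from the lecture: the wall at (2, 2), a discount of $\gamma = 0.9$ (so that policy evaluation is well defined for every policy, including ones that never reach a terminal square), iterative policy evaluation instead of solving the linear system, and a fixed initial policy of "Up" everywhere instead of a random one so that runs are reproducible.

```python
# Hypothetical encoding of the 4x3 world; (2, 2) as the wall is an assumption.
COLS, ROWS, WALL = 4, 3, (2, 2)
TERMINALS = {(4, 3): 1.0, (4, 2): -1.0}
STATES = [(x, y) for x in range(1, COLS + 1) for y in range(1, ROWS + 1)
          if (x, y) != WALL]
MOVES = {"Up": (0, 1), "Down": (0, -1), "Left": (-1, 0), "Right": (1, 0)}
SIDEWAYS = {"Up": ("Left", "Right"), "Down": ("Left", "Right"),
            "Left": ("Up", "Down"), "Right": ("Up", "Down")}


def R(s):
    return TERMINALS.get(s, -0.04)


def step(s, direction):
    dx, dy = MOVES[direction]
    nxt = (s[0] + dx, s[1] + dy)
    return nxt if nxt in STATES else s


def q_value(s, a, U):
    """Expected utility of the successor state when executing a in s."""
    left, right = SIDEWAYS[a]
    return 0.8 * U[step(s, a)] + 0.1 * U[step(s, left)] + 0.1 * U[step(s, right)]


def policy_evaluation(pi, gamma, tol=1e-10):
    """Iterate U(s) <- R(s) + gamma * sum_s' T(s, pi(s), s') U(s') to convergence."""
    U = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            new = R(s) if s in TERMINALS else R(s) + gamma * q_value(s, pi[s], U)
            delta = max(delta, abs(new - U[s]))
            U[s] = new
        if delta < tol:
            return U


def policy_iteration(gamma=0.9):
    pi = {s: "Up" for s in STATES if s not in TERMINALS}  # fixed, not random
    while True:
        U = policy_evaluation(pi, gamma)
        unchanged = True
        for s in pi:
            best = max(MOVES, key=lambda a: q_value(s, a, U))
            # Switch only on a strict improvement to avoid cycling on ties.
            if q_value(s, best, U) > q_value(s, pi[s], U) + 1e-9:
                pi[s] = best
                unchanged = False
        if unchanged:
            return pi, U


pi, U = policy_iteration()
for y in range(ROWS, 0, -1):
    print([pi.get((x, y), "-") for x in range(1, COLS + 1)])
```

Because the discount differs from the $\gamma = 1$ used in the utility table earlier, the resulting utilities are not expected to match that table; only the structure of the algorithm is being illustrated.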