Emergence of Hierarchical Linguistic Structure Using an MTRNN - Okuno Laboratory - Kyoto University

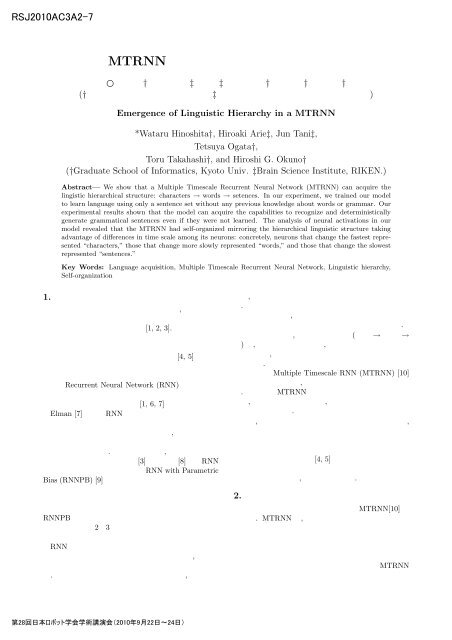

RJ2002

Emergence of Linguistic Hierarchy in a MTRNN

*Wataru Hinoshita†, Hiroaki Arie‡, Jun Tani‡, Tetsuya Ogata†, Toru Takahashi†, and Hiroshi G. Okuno†
(†Graduate School of Informatics, Kyoto Univ. ‡Brain Science Institute, RIKEN.)

Abstract: We show that a Multiple Timescale Recurrent Neural Network (MTRNN) can acquire the hierarchical structure of language: characters → words → sentences. In our experiment, we trained the model on a set of sentences alone, without any prior knowledge of words or grammar. Our experimental results show that the model acquires the ability to recognize and deterministically generate grammatical sentences, including sentences it was not trained on. An analysis of the neural activations in the model revealed that the MTRNN self-organized to mirror the hierarchical linguistic structure by taking advantage of the differences in time scale among its neurons: the neurons that change the fastest represented “characters,” those that change more slowly represented “words,” and those that change the slowest represented “sentences.”

Key Words: Language acquisition, Multiple Timescale Recurrent Neural Network, Linguistic hierarchy, Self-organization

1. Introduction

[...] [1, 2, 3]. [...] [4, 5]. [...] Recurrent Neural Network (RNN) [1, 6, 7]. Elman [7] [...] RNN [...]. Sugita and Tani [3] and Ogata et al. [8] [...] an RNN variant, the RNN with Parametric Bias (RNNPB) [9]. [...] RNNPB [...] RNN [...]. [...] the linguistic hierarchy (characters → words → sentences) [...]. [...] Multiple Timescale RNN (MTRNN) [10] [...]. [...] MTRNN [...] [4, 5] [...].

2. MTRNN

[...] MTRNN [10] [...].

The 28th Annual Conference of the Robotics Society of Japan (September 22-24, 2010)

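Fig.1 MTRNN [...]. IO: 30 nodes, τ = 2. Cf: 40 nodes, τ = 5. Cs: 11 nodes, τ = 70, of which 6 nodes are Csc. Input: a(t), b(t), …, z(t), …. Output: a(t+1), b(t+1), …, z(t+1), ….

Fig.2 [...]: “punch the small ball.” Horizontal axis: Step (5 to 45). Vertical axis: IO Node Number, labeled 5 (e), 10 (j), 15 (o), 20 (t), 25 (y), 30 (?).

The MTRNN has 30 input-output (IO) nodes, which correspond to the letters A to Z and 4 other symbols, and two groups of context nodes: Fast Context (Cf, 40 nodes) and Slow Context (Cs, 11 nodes) (Fig. 1). IO, Cf, and Cs each have their own time constant (τ) [...]. Six of the Cs nodes form the Controlling Slow Context (Csc). [...] Csc [...] IO (Fig. 2). The output of neuron i at step t (y_{t,i}) is computed with (1), (2), and (3).

$$
y_{t,i} =
\begin{cases}
\dfrac{\exp(u_{t,i} + b_i)}{\sum_{j \in I_{IO}} \exp(u_{t,j} + b_j)} & (i \in I_{IO}) \\[2ex]
\dfrac{1}{1 + \exp(-(u_{t,i} + b_i))} & (i \notin I_{IO})
\end{cases}
\tag{1}
$$

$$
u_{t,i} =
\begin{cases}
0 & (t = 0 \land i \notin I_{Csc}) \\
Csc_{0,i} & (t = 0 \land i \in I_{Csc}) \\
\left(1 - \dfrac{1}{\tau_i}\right) u_{t-1,i} + \dfrac{1}{\tau_i} \left[ \sum_{j \in I_{all}} w_{ij} x_{t,j} \right] & (\text{otherwise})
\end{cases}
\tag{2}
$$

$$
x_{t,j} = y_{t-1,j} \quad (t \geq 1) \tag{3}
$$

I_IO, I_Cf, I_Cs, I_Csc : index sets of the neurons in each group (I_Csc ⊂ I_Cs)
I_all : I_IO ∪ I_Cf ∪ I_Cs
u_{t,i} : internal state of neuron i at step t
b_i : bias of neuron i
Csc_{0,i} : initial state of Csc neuron i
τ_i : time constant of neuron i
w_ij : connection weight from neuron j to neuron i, with w_ij = 0 if (i ∈ I_IO ∧ j ∈ I_Cs) ∨ (i ∈ I_Cs ∧ j ∈ I_IO)
x_{t,j} : input from neuron j at step t

The MTRNN is trained with Back Propagation Through Time (BPTT) [11]. Training updates the connection weights (w_ij), the biases (b_i), and the initial Csc states (Csc_{0,i}).

$$
w_{ij}^{(n+1)} = w_{ij}^{(n)} - \eta \frac{\partial E}{\partial w_{ij}}
= w_{ij}^{(n)} - \frac{\eta}{\tau_i} \sum_t x_{t,j} \frac{\partial E}{\partial u_{t,i}}
\tag{4}
$$

$$
b_i^{(n+1)} = b_i^{(n)} - \beta \frac{\partial E}{\partial b_i}
= b_i^{(n)} - \beta \sum_t \frac{\partial E}{\partial u_{t,i}}
\tag{5}
$$

$$
Csc_{0,i}^{(n+1)} = Csc_{0,i}^{(n)} - \alpha \frac{\partial E}{\partial Csc_{0,i}}
= Csc_{0,i}^{(n)} - \alpha \frac{\partial E}{\partial u_{0,i}} \quad (i \in I_{Csc})
\tag{6}
$$

$$
E = \sum_t \sum_{i \in I_{IO}} y^*_{t,i} \cdot \log\left(\frac{y^*_{t,i}}{y_{t,i}}\right)
\tag{7}
$$

$$
\frac{\partial E}{\partial u_{t,i}} =
\begin{cases}
y_{t,i} - y^*_{t,i} + \left(1 - \dfrac{1}{\tau_i}\right) \dfrac{\partial E}{\partial u_{t+1,i}} & (i \in I_{IO}) \\[2ex]
y_{t,i} (1 - y_{t,i}) \displaystyle\sum_{k \in I_{all}} \dfrac{w_{ki}}{\tau_k} \dfrac{\partial E}{\partial u_{t+1,k}} + \left(1 - \dfrac{1}{\tau_i}\right) \dfrac{\partial E}{\partial u_{t+1,i}} & (\text{otherwise})
\end{cases}
\tag{8}
$$

n : number of training iterations
E : error
y*_{t,i} : teacher signal for neuron i at step t
η, β, α : learning rate constants

During BPTT, the input to the IO neurons (x_{t,j}) is computed with (9).

$$
x_{t,j} = (1 - r) \times y_{t-1,j} + r \times y^*_{t-1,j} \quad (t \geq 1 \land j \in I_{IO})
\tag{9}
$$

r : mixing rate of the teacher signal (0 ≤ r ≤ 1)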
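Equations (1) to (3) and (9) can be exercised with a short NumPy sketch. It is only an illustration under stated assumptions, not the authors' implementation: the node ordering (IO first, then Cf, then Cs), the choice of the first six Cs nodes as Csc, the random weight scale, and all function names are hypothetical. Only the group sizes, the time constants, and the zeroed IO-Cs connections come from the text.

```python
import numpy as np

# Group sizes and time constants from Fig. 1.
N_IO, N_CF, N_CS, N_CSC = 30, 40, 11, 6
N_ALL = N_IO + N_CF + N_CS
IO = slice(0, N_IO)
CF = slice(N_IO, N_IO + N_CF)
CS = slice(N_IO + N_CF, N_ALL)
# Assumption: Csc is taken to be the first 6 Cs nodes in index order.
CSC = slice(N_IO + N_CF, N_IO + N_CF + N_CSC)

tau = np.empty(N_ALL)
tau[IO], tau[CF], tau[CS] = 2.0, 5.0, 70.0


def init_weights(rng, scale=0.1):
    """Random weights (scale is an assumption); w[i, j] connects j to i."""
    w = rng.normal(0.0, scale, size=(N_ALL, N_ALL))
    w[IO, CS] = 0.0  # w_ij = 0 for i in I_IO, j in I_Cs
    w[CS, IO] = 0.0  # w_ij = 0 for i in I_Cs, j in I_IO
    return w


def activate(u, b):
    """Eq. (1): softmax over the IO group, sigmoid for all other nodes."""
    z = u + b
    y = 1.0 / (1.0 + np.exp(-z))
    e = np.exp(z[IO] - z[IO].max())  # shifted for numerical stability
    y[IO] = e / e.sum()
    return y


def training_input(y_prev, target_prev, r):
    """Eq. (9): blend the previous IO output with the teacher signal."""
    x = y_prev.copy()
    x[IO] = (1.0 - r) * y_prev[IO] + r * target_prev
    return x


def generate(w, b, csc0, steps):
    """Closed-loop generation from an initial Csc state, Eqs. (1)-(3)."""
    u = np.zeros(N_ALL)  # Eq. (2) at t = 0: zero everywhere except Csc
    u[CSC] = csc0
    y = activate(u, b)
    outputs = [y[IO].copy()]
    for _ in range(steps):
        x = y  # Eq. (3): the previous output is the next input
        u = (1.0 - 1.0 / tau) * u + (w @ x) / tau  # Eq. (2) for t >= 1
        y = activate(u, b)
        outputs.append(y[IO].copy())
    return np.array(outputs)  # shape: (steps + 1, N_IO)
```

Each row of the array returned by `generate` is a probability distribution over the 30 IO nodes, so taking the `argmax` of a row gives the most active character node at that step. With untrained random weights the output carries no linguistic meaning; the sketch only shows how the leaky integration in (2) makes nodes with a large τ change more slowly than nodes with a small τ.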

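The lexicon in Table 1 and the regular grammar in Table 2 are small enough to enumerate exhaustively. The sketch below does so and draws a 100-sentence set split 80/20, matching the counts used in the experiment. The uniform random sampling, the seed, the sentence formatting (single spaces and a final period, following the example “punch the small ball.”), and all function names are assumptions made for illustration; the source does not state how the 100 sentences were chosen.

```python
import random
from itertools import product

# Table 1: lexicon.
LEXICON = {
    "V_I": ["jump", "run", "walk"],
    "V_T": ["kick", "punch", "touch"],
    "N": ["ball", "box"],
    "ART": ["a", "the"],
    "ADV": ["quickly", "slowly"],
    "ADJ_S": ["big", "small"],
    "ADJ_C": ["blue", "red", "yellow"],
}

# Table 2: regular grammar (9 rules).
RULES = {
    "S": [["V_I"], ["V_I", "ADV"], ["V_T", "NP"], ["V_T", "NP", "ADV"]],
    "NP": [["ART", "N"], ["ART", "ADJ", "N"]],
    "ADJ": [["ADJ_S"], ["ADJ_C"], ["ADJ_S", "ADJ_C"]],
}


def expand(symbol):
    """Return every word sequence derivable from `symbol`."""
    if symbol in LEXICON:
        return [[word] for word in LEXICON[symbol]]
    results = []
    for rhs in RULES[symbol]:
        for parts in product(*(expand(s) for s in rhs)):
            results.append([w for part in parts for w in part])
    return results


def all_sentences():
    """All sentences of the grammar, formatted as assumed in the lead-in."""
    return [" ".join(words) + "." for words in expand("S")]


def sample_split(n_total=100, n_train=80, seed=0):
    """Assumed procedure: sample without replacement, then split."""
    rng = random.Random(seed)
    chosen = rng.sample(all_sentences(), n_total)
    return chosen[:n_train], chosen[n_train:]
```

Under these rules the grammar yields 441 distinct sentences (3 + 6 + 144 + 288 for the four S rules), so a 100-sentence set covers under a quarter of the language.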
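Table 1 Lexicon

| Nonterminal symbol | Words |
| --- | --- |
| V_I (intransitive verb) | jump, run, walk |
| V_T (transitive verb) | kick, punch, touch |
| N (noun) | ball, box |
| ART (article) | a, the |
| ADV (adverb) | quickly, slowly |
| ADJ_S (adjective: size) | big, small |
| ADJ_C (adjective: color) | blue, red, yellow |

Table 2 Regular grammar

| Rule |
| --- |
| S → V_I |
| S → V_I ADV |
| S → V_T NP |
| S → V_T NP ADV |
| NP → ART N |
| NP → ART ADJ N |
| ADJ → ADJ_S |
| ADJ → ADJ_C |
| ADJ → ADJ_S ADJ_C |

[...] Csc [...]. With the weights w_ij and the biases b_i of a trained MTRNN fixed, [...] the initial Csc state Csc_0 [...]. Csc_0 is computed with BPTT by updating only Csc_0 (equation (6)). The IO input then follows (3) rather than (9). The MTRNN generates a sentence from Csc_0 by forward computation (equations (1), (2), (3)).

3. Experiment

1. Generate 100 sentences [...].
2. Train the MTRNN with 80 of them.
3. Test the trained MTRNN with all 100 sentences, including the 20 that were not used for training.

Each sentence is tested as follows.

(1) Compute Csc_0 for the sentence with BPTT (recognition).
(2) Generate a sentence from that Csc_0 (generation).
(3) Compare the generated sentence with the original.

[...] Cs [...] Csc_0 [...]. The sentences use 17 words in 7 categories (Table 1) and a regular grammar with 9 rules (Table 2) [...] 26 [...].

4. Results

[...] MTRNN [...] 2 [...].

4·1 Experiment 1

Following the procedure in Section 3, the MTRNN [...] 95 of the 100 sentences: 75/80 of the training sentences and 20/20 of the untrained sentences. [...] MTRNN [...] Csc_0 [...].

4·2 Experiment 2

[...] MTRNN [...]. [...] ρ [...] MTRNN [...] ρ [...]. ρ was set to 0, 5, 10, 20, 30, and 40 (%) [...] 20 [...]. At ρ = 30, [...] 86/100 [...] 98/100 [...]. Fig. 3 shows the 3 [...] against ρ. In Fig. 3, [...] ρ = 30 [...] ρ = 0 [...].

Fig.3 [...] ρ [...] 20 [...] 0 [...]. Vertical axis: Accuracy (%), 50.0 to 100.0. Horizontal axis: ρ (Noise addition probability), 0, 5, 10, 20, 30, 40. Series: CLEAN, NOISY, NOISE.

5. Analysis

[...] the neural activations of the MTRNN [...] IO, Cf, and Cs [...].

• IO
The IO neurons, which change the fastest (τ = 2), represent characters [...].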

Fig.4 Cf [...]. Axes: PCA1, PCA2. Words are labeled by category: transitive verb (punch, touch, kick), intransitive verb (run, jump, walk), article (the, a), adjective (red, small, big, yellow, blue), adverb (slowly, quickly), noun (ball, box).

Fig.5 Cs [...]. Axes: PCA1, PCA2. Annotations: Presence of Adverb (Nonexistence, Existence), Simplicity of sentences, Simplicity of objectival phrases. Legend: sentence with itv; sentence with tv and 0 adj; sentence with tv and 1 adj; sentence with tv and 2 adj; existence of adverb (itv = intransitive verb, tv = transitive verb, adj = adjective).

• Cf
The Cf neurons, which change more slowly (τ = 5), represent words [...].
1. [...]
2. [...]
3. [...]
Fig. 4 shows the Cf activations (40 dimensions) [...]. [...] 1 [...] (e.g. “run” and “red”) [...] (1) [...]. [...] (2), (3) [...].

• Cs
The Cs neurons, which change the slowest (τ = 70), represent sentences [...].
1. [...]
2. [...]
3. [...]
Fig. 5 shows the Cs activations (11 dimensions) [...]. [...] 1 [...] (1), (2) [...]. [...] (3) [...].

6. Conclusion

[...] MTRNN [...]. [...] the MTRNN self-organized [...] “characters,” “words,” and “sentences” [...]. [...] MTRNN [...] 2 [...] MTRNN [...].

Acknowledgments

[...] JST [...] 19GS0208 [...] (B) 21300076 [...].

References

[1] K. de Bot, W. Lowie, M. Verspoor: “A dynamic systems theory approach to second language acquisition,” Bilingualism: Language and Cognition, vol.10, pp. 7–21, 2007.
[2] S. Lawrence and C. L. Giles: “Natural Language Grammatical Inference with Recurrent Neural Networks,” IEEE Trans. on Knowledge and Data Engineering, vol.12, no.1, pp. 126–140, 2000.
[3] Y. Sugita, J. Tani: “Learning semantic combinatoriality from the interaction between linguistic and behavioral processes,” Adaptive Behavior, vol.13, no.1, 2005.
[4] N. Chomsky: “Barriers,” MIT Press, 1986.
[5] N. Chomsky: “Rules and Representations,” Columbia University Press, 2005.
[6] J. B. Pollack: “The induction of dynamical recognizers,” Machine Learning, vol.7, no.2–3, pp. 227–252, 1991.
[7] J. L. Elman: “Language as a dynamical system,” Mind as Motion: Explorations in the Dynamics of Cognition, MIT Press, pp. 195–223, 1995.
[8] T. Ogata et al.: “Two-way Translation of Compound Sentences and Arm Motions by Recurrent Neural Networks,” IROS, pp. 1858–1863, 2007.
[9] J. Tani and M. Ito: “Self-Organization of Behavioral Primitives as Multiple Attractor Dynamics: A Robot Experiment,” IEEE Trans. on Systems, Man, and Cybernetics Part A: Systems and Humans, vol.33, no.4, pp. 481–488, 2003.
[10] Y. Yamashita and J. Tani: “Emergence of Functional Hierarchy in a Multiple Timescale Neural Network Model: a Humanoid Robot Experiment,” PLoS Comput. Biol., vol.4, no.11, 2008.
[11] D. E. Rumelhart, G. E. Hinton, and R. J. Williams: “Learning internal representations by error propagation,” MIT Press, Ch. 8, pp. 318–362, 1986.