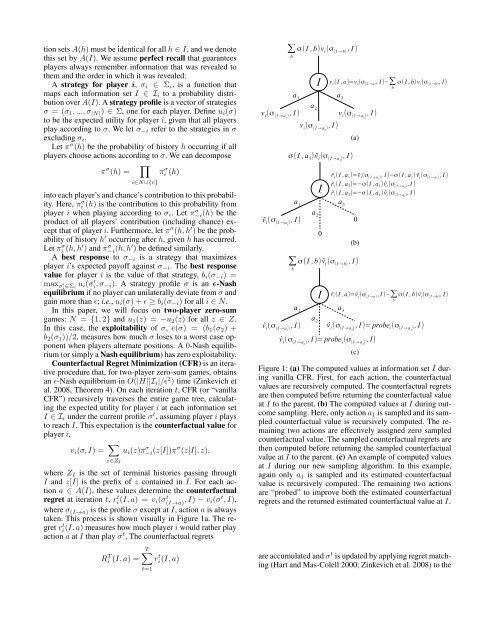

tion sets $A(h)$ must be identical for all $h \in I$, and we denote this set by $A(I)$. We assume perfect recall that guarantees players always remember information that was revealed to them and the order in which it was revealed.

A strategy for player $i$, $\sigma_i \in \Sigma_i$, is a function that maps each information set $I \in \mathcal{I}_i$ to a probability distribution over $A(I)$. A strategy profile is a vector of strategies $\sigma = (\sigma_1, \ldots, \sigma_{|N|}) \in \Sigma$, one for each player. Define $u_i(\sigma)$ to be the expected utility for player $i$, given that all players play according to $\sigma$. We let $\sigma_{-i}$ refer to the strategies in $\sigma$ excluding $\sigma_i$.

Let $\pi^\sigma(h)$ be the probability of history $h$ occurring if all players choose actions according to $\sigma$. We can decompose

$$\pi^\sigma(h) = \prod_{i \in N \cup \{c\}} \pi_i^\sigma(h)$$

into each player's and chance's contribution to this probability. Here, $\pi_i^\sigma(h)$ is the contribution to this probability from player $i$ when playing according to $\sigma_i$. Let $\pi_{-i}^\sigma(h)$ be the product of all players' contribution (including chance) except that of player $i$. Furthermore, let $\pi^\sigma(h, h')$ be the probability of history $h'$ occurring after $h$, given $h$ has occurred. Let $\pi_i^\sigma(h, h')$ and $\pi_{-i}^\sigma(h, h')$ be defined similarly.

A best response to $\sigma_{-i}$ is a strategy that maximizes player $i$'s expected payoff against $\sigma_{-i}$. The best response value for player $i$ is the value of that strategy, $b_i(\sigma_{-i}) = \max_{\sigma_i' \in \Sigma_i} u_i(\sigma_i', \sigma_{-i})$.
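As a small illustration of the best response value, the sketch below evaluates $b_1(\sigma_2)$ and $b_2(\sigma_1)$ in a two-player zero-sum matrix game. The matrix-game setting, the rock-paper-scissors payoffs, and the function names are assumptions made for brevity; they are not taken from the paper, which works with general extensive-form games.

```python
# Best-response values in a two-player zero-sum matrix game (an assumed
# normal-form special case, chosen only to keep the example short).
# payoff[a][b] is player 1's utility u_1 when player 1 plays a and
# player 2 plays b; player 2 receives -payoff[a][b].

def best_response_value_p1(payoff, sigma_2):
    """b_1(sigma_2): the best expected u_1 against the mixed strategy sigma_2.

    Expected utility is linear in player 1's strategy, so it is enough
    to maximize over pure actions (rows).
    """
    return max(
        sum(p * u for p, u in zip(sigma_2, row))
        for row in payoff
    )


def best_response_value_p2(payoff, sigma_1):
    """b_2(sigma_1): the best expected u_2 = -u_1 against sigma_1."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    return max(
        sum(sigma_1[a] * -payoff[a][b] for a in range(n_rows))
        for b in range(n_cols)
    )


# Hypothetical example game: rock-paper-scissors (rows and columns are
# ordered rock, paper, scissors).
RPS = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]

always_rock = [1, 0, 0]
uniform = [1 / 3, 1 / 3, 1 / 3]

print(best_response_value_p1(RPS, always_rock))  # paper beats rock: 1
print(best_response_value_p2(RPS, always_rock))  # same by symmetry: 1
print(best_response_value_p1(RPS, uniform))      # no profitable reply
```

Against the uniform strategy every row has expected payoff zero, so no deviation gains anything, whereas a player who always plays rock concedes a best response value of 1.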
A strategy profile $\sigma$ is an $\epsilon$-Nash equilibrium if no player can unilaterally deviate from $\sigma$ and gain more than $\epsilon$; i.e., $u_i(\sigma) + \epsilon \ge b_i(\sigma_{-i})$ for all $i \in N$.

In this paper, we will focus on two-player zero-sum games: $N = \{1, 2\}$ and $u_1(z) = -u_2(z)$ for all $z \in Z$. In this case, the exploitability of $\sigma$, $e(\sigma) = (b_1(\sigma_2) + b_2(\sigma_1))/2$, measures how much $\sigma$ loses to a worst-case opponent when players alternate positions. A 0-Nash equilibrium (or simply a Nash equilibrium) has zero exploitability.

Counterfactual Regret Minimization (CFR) is an iterative procedure that, for two-player zero-sum games, obtains an $\epsilon$-Nash equilibrium in $O(|H||\mathcal{I}_i|/\epsilon^2)$ time (Zinkevich et al. 2008, Theorem 4). On each iteration $t$, CFR (or "vanilla CFR") recursively traverses the entire game tree, calculating the expected utility for player $i$ at each information set $I \in \mathcal{I}_i$ under the current profile $\sigma^t$, assuming player $i$ plays to reach $I$. This expectation is the counterfactual value for player $i$,

$$v_i(\sigma, I) = \sum_{z \in Z_I} u_i(z)\, \pi_{-i}^\sigma(z[I])\, \pi^\sigma(z[I], z),$$

where $Z_I$ is the set of terminal histories passing through $I$ and $z[I]$ is the prefix of $z$ contained in $I$. For each action $a \in A(I)$, these values determine the counterfactual regret at iteration $t$, $r_i^t(I, a) = v_i(\sigma^t_{(I \to a)}, I) - v_i(\sigma^t, I)$, where $\sigma_{(I \to a)}$ is the profile $\sigma$ except at $I$, action $a$ is always taken. This process is shown visually in Figure 1a. The regret $r_i^t(I, a)$ measures how much player $i$ would rather play action $a$ at $I$ than play $\sigma^t$.
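The regret computation at a single information set can be sketched as follows, using the identity from Figure 1a that $v_i(\sigma, I) = \sum_b \sigma(I, b)\, v_i(\sigma_{(I \to b)}, I)$. The function name and the numeric inputs are hypothetical; the per-action counterfactual values are assumed to have been computed already by the recursive traversal.

```python
def counterfactual_regrets(action_values, sigma):
    """Counterfactual regrets at one information set I.

    action_values[a] stands for v_i(sigma_(I->a), I) and sigma[a] for
    sigma(I, a). Returns the per-action regrets together with v_i(sigma, I),
    the value that would be handed back to the parent.
    """
    # v_i(sigma, I) is the sigma-weighted average of the action values.
    node_value = sum(p * v for p, v in zip(sigma, action_values))
    regrets = [v - node_value for v in action_values]
    return regrets, node_value


# Hypothetical numbers for an information set with three actions.
values = [2.0, 0.0, -2.0]
strategy = [0.5, 0.25, 0.25]

regrets, node_value = counterfactual_regrets(values, strategy)
print(node_value)  # 0.5
print(regrets)     # [1.5, -0.5, -2.5]
```

Because the node value is the strategy-weighted average of the action values, the regrets weighted by the current strategy always sum to zero; a positive regret marks an action that is currently underplayed.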
The counterfactual regrets

$$R_i^T(I, a) = \sum_{t=1}^{T} r_i^t(I, a)$$

are accumulated and $\sigma^t$ is updated by applying regret matching (Hart and Mas-Colell 2000; Zinkevich et al. 2008) to the accumulated regrets,

$$\sigma^{T+1}(I, a) = \frac{R_i^{T,+}(I, a)}{\sum_{b \in A(I)} R_i^{T,+}(I, b)} \tag{1}$$

where $x^+ = \max\{x, 0\}$ and actions are chosen uniformly at random when the denominator is zero. This procedure minimizes the average of the counterfactual regrets, which in turn minimizes the average (external) regret $R_i^T/T$ (Zinkevich et al. 2008, Theorem 3), where

$$R_i^T = \max_{\sigma' \in \Sigma_i} \sum_{t=1}^{T} \left( u_i(\sigma', \sigma_{-i}^t) - u_i(\sigma_i^t, \sigma_{-i}^t) \right).$$

It is well known that in a two-player zero-sum game, if $R_i^T/T < \epsilon$ for $i \in \{1, 2\}$, then the average profile $\bar{\sigma}^T$ is a $2\epsilon$-Nash equilibrium.

[Figure 1: three diagrams of an information set $I$ with actions $a_1$, $a_2$, $a_3$. Panel (a): $r_i(I, a) = v_i(\sigma_{(I \to a)}, I) - \sum_b \sigma(I, b)\, v_i(\sigma_{(I \to b)}, I)$. Panel (b): only $a_1$ is sampled, so $\tilde{r}_i(I, a_1) = \tilde{v}_i(\sigma_{(I \to a_1)}, I) - \sigma(I, a_1)\, \tilde{v}_i(\sigma_{(I \to a_1)}, I)$ and $\tilde{r}_i(I, a_2) = \tilde{r}_i(I, a_3) = -\sigma(I, a_1)\, \tilde{v}_i(\sigma_{(I \to a_1)}, I)$. Panel (c): $\hat{v}_i(\sigma_{(I \to a_2)}, I) = \text{probe}_i(\sigma_{(I \to a_2)}, I)$, $\hat{v}_i(\sigma_{(I \to a_3)}, I) = \text{probe}_i(\sigma_{(I \to a_3)}, I)$, and $\hat{r}_i(I, a) = \hat{v}_i(\sigma_{(I \to a)}, I) - \sum_b \sigma(I, b)\, \hat{v}_i(\sigma_{(I \to b)}, I)$.]

Figure 1: (a) The computed values at information set $I$ during vanilla CFR. First, for each action, the counterfactual values are recursively computed. The counterfactual regrets are then computed before returning the counterfactual value at $I$ to the parent. (b) The computed values at $I$ during outcome sampling. Here, only action $a_1$ is sampled and its sampled counterfactual value is recursively computed. The remaining two actions are effectively assigned zero sampled counterfactual value. The sampled counterfactual regrets are then computed before returning the sampled counterfactual value at $I$ to the parent. (c) An example of computed values at $I$ during our new sampling algorithm. In this example, again only $a_1$ is sampled and its estimated counterfactual value is recursively computed. The remaining two actions are "probed" to improve both the estimated counterfactual regrets and the returned estimated counterfactual value at $I$.

For large games, CFR's full game tree traversal can be very expensive. Alternatively, one can still obtain an approximate equilibrium by traversing a smaller, sampled portion of the tree on each iteration using Monte Carlo CFR (MCCFR) (Lanctot et al. 2009a). Let $\mathcal{Q}$ be a set of subsets, or blocks, of the terminal histories $Z$ such that the union of $\mathcal{Q}$ spans $Z$. On each iteration, a block $Q \in \mathcal{Q}$ is sampled according to a probability distribution over $\mathcal{Q}$. Outcome sampling is an example of MCCFR that uses blocks containing a single terminal history ($Q = \{z\}$). On each iteration of outcome sampling, the block is chosen during traversal by sampling a single action at the current decision point until a terminal history is reached. The sampled counterfactual value for player $i$,

$$\tilde{v}_i(\sigma, I) = \sum_{z \in Z_I \cap Q} u_i(z)\, \pi_{-i}^\sigma(z[I])\, \pi^\sigma(z[I], z) / q(z),$$

where $q(z)$ is the probability that $z$ was sampled, defines the sampled counterfactual regret on iteration $t$ for action $a$ at $I$, $\tilde{r}_i^t(I, a) = \tilde{v}_i(\sigma^t_{(I \to a)}, I) - \tilde{v}_i(\sigma^t, I)$.
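A one-node sketch of the two updates described above: regret matching on accumulated regrets, and the outcome-sampling regrets of Figure 1b. Several simplifications are assumed here and are not from the paper: the children of $I$ are treated as terminal, so each action value is known exactly once sampled; the opponent reach probability is folded into `action_values`; and `q[a]` is the (strictly positive) probability of sampling action `a` at this node. All names and numbers are hypothetical.

```python
def regret_matching(cum_regrets):
    """Next strategy at I from accumulated regrets, as in equation (1)."""
    positive = [max(r, 0.0) for r in cum_regrets]
    total = sum(positive)
    if total == 0.0:
        # Denominator is zero: fall back to the uniform strategy.
        return [1.0 / len(cum_regrets)] * len(cum_regrets)
    return [r / total for r in positive]


def sampled_regrets(action_values, sigma, q, sampled):
    """Sampled regrets at I when only action `sampled` is visited.

    The visited action gets the importance-weighted value v / q; every
    other action is assigned a sampled value of zero (Figure 1b).
    """
    v_sampled = action_values[sampled] / q[sampled]
    node_value = sigma[sampled] * v_sampled  # sampled value returned at I
    return [
        (v_sampled if a == sampled else 0.0) - node_value
        for a in range(len(sigma))
    ]


def expected_sampled_regrets(action_values, sigma, q):
    """Exact expectation of the sampled regrets over the sampled action."""
    n = len(sigma)
    expected = [0.0] * n
    for s in range(n):
        for a, r in enumerate(sampled_regrets(action_values, sigma, q, s)):
            expected[a] += q[s] * r
    return expected


values = [2.0, 0.0, -2.0]
strategy = [0.5, 0.25, 0.25]

print(sampled_regrets(values, strategy, strategy, 0))      # [2.0, -2.0, -2.0]
print(expected_sampled_regrets(values, strategy, strategy))  # [1.5, -0.5, -2.5]
print(regret_matching([3.0, 1.0, -2.0]))                    # [0.75, 0.25, 0.0]
```

In this example the expectation of the sampled regrets equals the exact regrets $v(a) - \sum_b \sigma(I, b)\, v(b)$, while any single sample (for instance `[2.0, -2.0, -2.0]`) can be far from them.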
The sampled counterfactual values are unbiased estimates of the true counterfactual values (Lanctot et al. 2009a, Lemma 1). In outcome sampling, for example, only the regrets along the sampled terminal history are computed (all others are zero by definition). Outcome sampling converges to equilibrium faster than vanilla CFR in a number of different games (Lanctot et al. 2009a, Figure 1).

As we sample fewer actions at a given node, the sampled counterfactual value is potentially less accurate. Figure 1b illustrates this point in the case of outcome sampling. Here, an "informative" sampled counterfactual value for just a single action is obtained at each information set along the sampled block (history). All other actions are assigned a sampled counterfactual value of zero. While $E_Q[\tilde{v}_i(\sigma, I)] = v_i(\sigma, I)$, variance is introduced, affecting both the regret updates and the value recursed back to the parent. As we will see in the next section, this variance plays an important role in the number of iterations required to converge.

Generalized Sampling

Our main contributions in this paper are new theoretical findings that generalize those of MCCFR. We begin by presenting a previously established bound on the average regret achieved through MCCFR.
Let $|A_i| = \max_{I \in \mathcal{I}_i} |A(I)|$ and suppose $\delta > 0$ satisfies the following: $\forall z \in Z$ either $\pi_{-i}^\sigma(z) = 0$ or $q(z) \ge \delta > 0$ at every iteration. We can then bound the difference between any two samples $\tilde{v}_i(\sigma_{(I \to a)}, I) - \tilde{v}_i(\sigma_{(I \to b)}, I) \le \tilde{\Delta}_i = \Delta_i/\delta$, where $\Delta_i = \max_{z \in Z} u_i(z) - \min_{z \in Z} u_i(z)$. The average regret can then be bounded as follows:

Theorem 1 (Lanctot et al. (2009a), Theorem 5) Let $p \in (0, 1]$. When using outcome-sampling MCCFR, with probability $1 - p$, average regret is bounded by

$$\frac{R_i^T}{T} \le \left( \tilde{\Delta}_i + \frac{\sqrt{2}\, \tilde{\Delta}_i}{\sqrt{p}} \right) \frac{|\mathcal{I}_i| \sqrt{|A_i|}}{\sqrt{T}}. \tag{2}$$

A related bound holds for all MCCFR instances (Lanctot et al. 2009b, Theorem 7). We note here that Lanctot et al. present a slightly tighter bound than equation (2) where $|\mathcal{I}_i|$ is replaced with a game-dependent constant $M_i$ that is independent of the sampling scheme and satisfies $\sqrt{|\mathcal{I}_i|} \le M_i \le |\mathcal{I}_i|$. This constant is somewhat complicated to define, and thus we omit these details here. Recall that minimizing the average regret yields an approximate Nash equilibrium. Theorem 1 suggests that the rate at which regret is minimized depends on the bound $\tilde{\Delta}_i$ on the difference between two sampled counterfactual values.

We now present a new, generalized bound on the average regret. While MCCFR provides an explicit form for the sampled counterfactual values $\tilde{v}_i(\sigma, I)$, we let $\hat{v}_i(\sigma, I)$ denote any estimator of the true counterfactual value $v_i(\sigma, I)$. We can then define the estimated counterfactual regret on iteration $t$ for action $a$ at $I$ to be $\hat{r}_i^t(I, a) = \hat{v}_i(\sigma^t_{(I \to a)}, I) - \hat{v}_i(\sigma^t, I)$. This generalization creates many possibilities not considered in MCCFR.
For instance, instead of sampling a block $Q$ of terminal histories, one can consider a sampled set of information sets and only update regrets at those sampled locations. Another example is provided later in the paper. The following lemma probabilistically bounds the average regret in terms of the variance, covariance, and bias between the estimated and true counterfactual regrets:

Lemma 1 Let $p \in (0, 1]$ and suppose that there exists a bound $\hat{\Delta}_i$ on the difference between any two estimates, $\hat{v}_i(\sigma_{(I \to a)}, I) - \hat{v}_i(\sigma_{(I \to b)}, I) \le \hat{\Delta}_i$. If strategies are selected according to regret matching on the estimated counterfactual regrets, then with probability at least $1 - p$, the average regret is bounded by

$$\frac{R_i^T}{T} \le |\mathcal{I}_i| \sqrt{|A_i|} \left( \frac{\hat{\Delta}_i}{\sqrt{T}} + \sqrt{\frac{\text{Var}}{pT} + \frac{\text{Cov}}{p} + \frac{\text{E}^2}{p}} \right)$$

where

$$\text{Var} = \max_{\substack{t \in \{1, \ldots, T\} \\ I \in \mathcal{I}_i \\ a \in A(I)}} \text{Var}\left[ r_i^t(I, a) - \hat{r}_i^t(I, a) \right],$$

with Cov and E similarly defined.
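To get a feel for how the terms of Lemma 1 interact, the sketch below evaluates the right-hand side of the bound, read here as $|\mathcal{I}_i| \sqrt{|A_i|} \left( \hat{\Delta}_i/\sqrt{T} + \sqrt{\text{Var}/(pT) + \text{Cov}/p + \text{E}^2/p} \right)$. That reading, the function name, and all numeric inputs are assumptions made for illustration; the radicand is also assumed to be non-negative.

```python
import math


def lemma1_bound(delta_hat, var, cov, bias, p, T, num_infosets, max_actions):
    """Right-hand side of the Lemma 1 bound on average regret R_i^T / T.

    delta_hat    -- bound on the difference between any two estimates
    var, cov     -- the Var and Cov terms of the lemma
    bias         -- the E term (it enters the bound squared)
    p            -- failure probability, in (0, 1]
    T            -- number of iterations
    num_infosets -- |I_i|
    max_actions  -- |A_i|
    """
    # Assumes var / (p * T) + cov / p + bias**2 / p is non-negative.
    sampling_term = math.sqrt(var / (p * T) + cov / p + bias ** 2 / p)
    return num_infosets * math.sqrt(max_actions) * (
        delta_hat / math.sqrt(T) + sampling_term
    )


# Hypothetical inputs: one information set with four actions.
common = dict(delta_hat=1.0, p=1.0, num_infosets=1, max_actions=4)

# With zero covariance and bias, the whole bound shrinks like 1 / sqrt(T).
for T in (100, 10_000):
    print(T, lemma1_bound(var=1.0, cov=0.0, bias=0.0, T=T, **common))

# A non-zero bias term leaves a floor that more iterations do not remove.
print(lemma1_bound(var=0.0, cov=0.0, bias=0.1, T=10**8, **common))
```

Under this reading, only the variance term is divided by $T$ inside the square root, so with the other terms fixed, lower variance translates directly into a smaller bound at any given number of iterations.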