Chapter 4 General Vector Spaces: Face Recognition

Chapter 4 General Vector Spaces Face Recognition ( á¹¢å ± å½å¬)

Chapter 4 General Vector Spaces Face Recognition ( á¹¢å ± å½å¬)

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

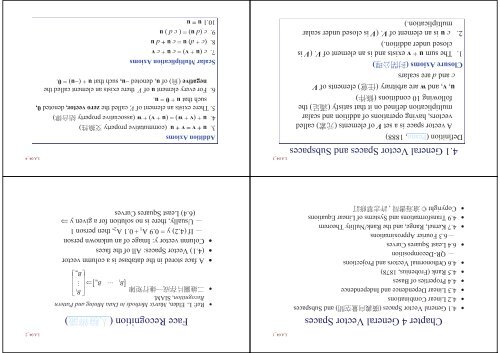

Vector Spaces of Matrices (1/2)
• Use vector notation for the elements of $M_{22}$ (the set of real $2\times 2$ matrices). Let
$$\mathbf{u}=\begin{bmatrix}a&b\\c&d\end{bmatrix},\qquad \mathbf{v}=\begin{bmatrix}e&f\\g&h\end{bmatrix}$$
be two arbitrary $2\times 2$ matrices.
• Axiom 1: $\mathbf{u}+\mathbf{v}=\begin{bmatrix}a+e&b+f\\c+g&d+h\end{bmatrix}$ is a $2\times 2$ matrix. Thus $M_{22}$ is closed under addition.
• Axiom 3: $\mathbf{u}+\mathbf{v}=\mathbf{v}+\mathbf{u}$ (Theorem 2.2): $\begin{bmatrix}a&b\\c&d\end{bmatrix}+\begin{bmatrix}e&f\\g&h\end{bmatrix}=\begin{bmatrix}e&f\\g&h\end{bmatrix}+\begin{bmatrix}a&b\\c&d\end{bmatrix}$.

Vector Spaces of Matrices (2/2)
• Axiom 5: The $2\times 2$ zero matrix is $\mathbf{0}=\begin{bmatrix}0&0\\0&0\end{bmatrix}$, since $\mathbf{u}+\mathbf{0}=\begin{bmatrix}a&b\\c&d\end{bmatrix}+\begin{bmatrix}0&0\\0&0\end{bmatrix}=\begin{bmatrix}a&b\\c&d\end{bmatrix}=\mathbf{u}$.
• Axiom 6: If $\mathbf{u}=\begin{bmatrix}a&b\\c&d\end{bmatrix}$, then $-\mathbf{u}=\begin{bmatrix}-a&-b\\-c&-d\end{bmatrix}$, since $\mathbf{u}+(-\mathbf{u})=\begin{bmatrix}a-a&b-b\\c-c&d-d\end{bmatrix}=\mathbf{0}$.
• In general, $M_{mn}$, the set of $m\times n$ matrices, is a vector space.

Vector Spaces of Functions (1/2)
Let $V$ be the set of all functions having the real numbers as their domain.
Axiom 1: $f+g$ is defined by $(f+g)(x)=f(x)+g(x)$.
• $f+g$ is thus a function whose domain is the set of real numbers.
• $f+g$ is an element of $V$, so $V$ is closed under addition.
• Example: $f(x)=x$ and $g(x)=x^2$. Then $(f+g)(x)=x+x^2$.
Axiom 2: $cf$ is defined by $(cf)(x)=c\,[f(x)]$.
• $cf$ is thus a function whose domain is the set of real numbers.
• $cf$ is an element of $V$, so $V$ is closed under scalar multiplication.
• Example: $(3g)(x)=3x^2$.

Vector Spaces of Functions (2/2)
Axiom 5 (zero function): Let $0$ be the function such that $0(x)=0$ for every real number $x$. Then $(f+0)(x)=f(x)+0(x)=f(x)+0=f(x)$ for every real number $x$, so $f+0=f$.
Axiom 6: Define $-f$ by $(-f)(x)=-[f(x)]$. Then $[f+(-f)](x)=f(x)+(-f)(x)=f(x)-f(x)=0=0(x)$, so $-f$ is the negative of $f$.
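
The pointwise definitions above translate directly into code. As a small illustration (the helper names `add`, `scale`, `zero` and `neg` are our own, not from the source), this Python sketch builds $f+g$, $cf$, the zero function and $-f$ as new functions; it checks the axioms only at sample points, so it illustrates them rather than proving them.

```python
# Functions R -> R treated as vectors; every operation is pointwise.
# The helper names are illustrative only.

def add(f, g):
    """Axiom 1: (f + g)(x) = f(x) + g(x)."""
    return lambda x: f(x) + g(x)

def scale(c, f):
    """Axiom 2: (c f)(x) = c [f(x)]."""
    return lambda x: c * f(x)

def zero(x):
    """Axiom 5: the zero function, 0(x) = 0 for every real x."""
    return 0

def neg(f):
    """Axiom 6: (-f)(x) = -[f(x)]."""
    return scale(-1, f)

f = lambda x: x          # f(x) = x
g = lambda x: x ** 2     # g(x) = x^2

h = add(f, g)            # (f + g)(x) = x + x^2
print(h(2))              # 6
print(scale(3, g)(2))    # (3g)(2) = 3 * 2^2 = 12
print(add(f, zero)(5))   # f + 0 = f, so 5
print(add(f, neg(f))(7)) # f + (-f) = 0, so 0
```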

Subspaces
Definition: Let $V$ be a vector space and $U$ a nonempty subset of $V$. Then $U$ is said to be a subspace of $V$ if it is closed under addition and under scalar multiplication.
Example: Let $U$ be the subset of $\mathbb{R}^3$ consisting of all vectors of the form $(a,0,0)$ (the $x$-axis). Show that $U$ is a subspace of $\mathbb{R}^3$.
Proof: Let $(a,0,0),(b,0,0)\in U$ and let $k$ be a scalar. Then $(a,0,0)+(b,0,0)=(a+b,0,0)\in U$ and $k(a,0,0)=(ka,0,0)\in U$.

Example 1
Let $W$ be the set of vectors of the form $(a,a^2,b)$. Show that $W$ is not a subspace of $\mathbb{R}^3$.
• Let $(a,a^2,b)$ and $(c,c^2,d)$ be elements of $W$. Then $(a,a^2,b)+(c,c^2,d)=(a+c,\,a^2+c^2,\,b+d)$, which in general is not equal to $(a+c,\,(a+c)^2,\,b+d)$. For example, with $a=1$, $c=3$: $a^2+c^2=10\neq 16=(a+c)^2$. Thus $W$ is not closed under addition.
• Let $(a,a^2,b)$ be an element of $W$. Then $k(a,a^2,b)=(ka,\,ka^2,\,kb)$, which in general is not equal to $(ka,\,(ka)^2,\,kb)$. For example, with $k=2$, $a=3$: $ka^2=18\neq 36=(ka)^2$. Thus $W$ is not closed under scalar multiplication.

Example 3
Let $P_n$ denote the set of real polynomial functions of degree $\le n$. Prove that $P_n$ is a subspace (of the vector space of functions).
Proof: Let $f$ and $g$ be two elements of $P_n$ defined by
$$f(x)=a_nx^n+a_{n-1}x^{n-1}+\dots+a_1x+a_0,\qquad g(x)=b_nx^n+b_{n-1}x^{n-1}+\dots+b_1x+b_0.$$
(1) $(f+g)(x)=f(x)+g(x)=(a_n+b_n)x^n+(a_{n-1}+b_{n-1})x^{n-1}+\dots+(a_1+b_1)x+(a_0+b_0)$, so $f+g\in P_n$.
(2) $(cf)(x)=c\,[f(x)]=ca_nx^n+ca_{n-1}x^{n-1}+\dots+ca_1x+ca_0$, so $cf\in P_n$.

Theorem 4.2 If $U$ is a subspace of a vector space $V$, then $U$ contains the zero vector of $V$.
Proof
• Let $\mathbf{u}$ be an arbitrary vector in $U$ and $\mathbf{0}$ the zero vector of $V$. Let $0$ be the zero scalar. We know that $0\mathbf{u}=\mathbf{0}$.
• Since $U$ is closed under scalar multiplication, $\mathbf{0}\in U$.
• Logic: $U$ is a subspace $\Rightarrow\mathbf{0}\in U$ ($p\Rightarrow q$); equivalently, $\mathbf{0}\notin U\Rightarrow U$ is not a subspace ($\neg q\Rightarrow\neg p$).
• Example 6: Let $W$ be the set of vectors of the form $(a,a,a+2)$. Setting $(a,a,a+2)=(0,0,0)$ requires $a=0$ and $a+2=0$, which has no solution. Thus $(0,0,0)\notin W$, and $W$ is not a subspace.
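
The counterexamples in Example 1 can be checked mechanically. This Python sketch (the helper names `in_W`, `make_W`, `add`, `scale` and `in_W6` are our own) tests membership in $W=\{(a,a^2,b)\}$ by the condition "second component equals the square of the first"; a single failing case is enough to show that $W$ is not closed, while passing cases would prove nothing. It also applies the zero-vector test from Example 6.

```python
def in_W(v):
    """True if v = (x, y, z) has the form (a, a^2, b), i.e. y == x^2."""
    x, y, _ = v
    return y == x * x

def make_W(a, b):
    """Build the element (a, a^2, b) of W."""
    return (a, a * a, b)

def add(u, v):
    return tuple(ui + vi for ui, vi in zip(u, v))

def scale(k, v):
    return tuple(k * vi for vi in v)

# Closure under addition fails for a = 1, c = 3: 1 + 9 = 10, but (1 + 3)^2 = 16.
s = add(make_W(1, 0), make_W(3, 0))
print(s, in_W(s))        # (4, 10, 0) False

# Closure under scalar multiplication fails for k = 2, a = 3: 2 * 9 = 18, but (2 * 3)^2 = 36.
m = scale(2, make_W(3, 0))
print(m, in_W(m))        # (6, 18, 0) False

# Example 6: (a, a, a + 2) = (0, 0, 0) would need a = 0 and a = -2 at once.
def in_W6(v):
    x, y, z = v
    return x == y and z == x + 2

print(in_W6((0, 0, 0)))  # False, so this W is not a subspace
```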

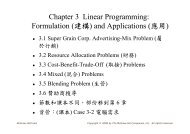

Sections 4.2 to 4.4
• Consider $\mathbb{R}^2$.
• Section 4.4: $\{(1,0),(0,1)\}$ is a basis for $\mathbb{R}^2$. This means two things:
— (1) Section 4.2: Any vector $(a,b)$ in $\mathbb{R}^2$ can be represented as a linear combination of this basis: $(a,b)=a(1,0)+b(0,1)$.
— (2) Section 4.3: The basis is linearly independent: $c(1,0)+d(0,1)=\mathbf{0}\Rightarrow c=d=0$.
— That is, we can represent every vector with a minimal set of spanning vectors.
• Applications: after Section 4.5.

4.2 Linear Combinations
• Definition: Let $\mathbf{v}_1,\mathbf{v}_2,\dots,\mathbf{v}_m$ be vectors in a vector space $V$. The vector $\mathbf{v}$ in $V$ is a linear combination of $\mathbf{v}_1,\dots,\mathbf{v}_m$ if there exist scalars $c_1,c_2,\dots,c_m$ such that $\mathbf{v}=c_1\mathbf{v}_1+c_2\mathbf{v}_2+\dots+c_m\mathbf{v}_m$.
• Example: $(5,4,2)=(1,2,0)+2(3,1,4)-2(1,0,3)$.
• Example: $(3,4,2)=a(1,0,0)+b(0,1,0)$ is impossible (the third component of the right-hand side is always $0$), so $(3,4,2)$ is not a linear combination of $(1,0,0)$ and $(0,1,0)$.

Example 2
Express the vector $(4,5,5)$ as a linear combination of the vectors $(1,2,3)$, $(-1,1,4)$, and $(3,3,2)$.
Solution: Examine the following identity for values of $c_1,c_2,c_3$:
$$c_1(1,2,3)+c_2(-1,1,4)+c_3(3,3,2)=(4,5,5).$$
This gives the system
$$\begin{aligned}c_1-c_2+3c_3&=4\\2c_1+c_2+3c_3&=5\\3c_1+4c_2+2c_3&=5\end{aligned}\quad\Longrightarrow\quad c_1=-2r+3,\;c_2=r-1,\;c_3=r,$$
so $(4,5,5)=(-2r+3)(1,2,3)+(r-1)(-1,1,4)+r(3,3,2)$ for every real $r$.
• $r=3$ gives $(4,5,5)=-3(1,2,3)+2(-1,1,4)+3(3,3,2)$.
• $r=-1$ gives $(4,5,5)=5(1,2,3)-2(-1,1,4)-(3,3,2)$.

Definition
The vectors $\mathbf{v}_1,\dots,\mathbf{v}_m$ are said to span a vector space if every vector in the space can be expressed as a linear combination of these vectors.
Example 3
Show that the vectors $(1,2,0)$, $(0,1,-1)$, and $(1,1,2)$ span $\mathbb{R}^3$.
Proof: Let $(x,y,z)$ be an arbitrary element of $\mathbb{R}^3$. Determine whether we can write
$$(x,y,z)=c_1(1,2,0)+c_2(0,1,-1)+c_3(1,1,2).$$
Thus
$$\begin{aligned}c_1+c_3&=x\\2c_1+c_2+c_3&=y\\-c_2+2c_3&=z\end{aligned}$$
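
Example 2 is a Gauss-Jordan computation on the augmented matrix $[\mathbf{v}_1\ \mathbf{v}_2\ \mathbf{v}_3\mid(4,5,5)]$. The Python sketch below is a generic reduced-row-echelon routine using exact `fractions.Fraction` arithmetic (`rref` and `combo` are our own helpers, not code from the source). The zero row and the missing pivot in the $c_3$ column show that $c_3=r$ is free, which is why Example 2 has infinitely many answers.

```python
from fractions import Fraction

def rref(rows):
    """Reduced row echelon form of a matrix (list of rows), computed with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, n_rows) if m[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        p = m[pivot_row][col]
        m[pivot_row] = [x / p for x in m[pivot_row]]  # make the pivot equal to 1
        for r in range(n_rows):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
    return m

# Augmented matrix [v1 v2 v3 | (4, 5, 5)] from Example 2.
R = rref([[1, -1, 3, 4],
          [2, 1, 3, 5],
          [3, 4, 2, 5]])
# R == [[1, 0, 2, 3], [0, 1, -1, -1], [0, 0, 0, 0]]: no pivot in the c3 column,
# so c3 = r is free and c1 = 3 - 2r, c2 = r - 1.

def combo(r):
    """Evaluate (-2r + 3)(1,2,3) + (r - 1)(-1,1,4) + r(3,3,2)."""
    c = (3 - 2 * r, r - 1, r)
    vectors = [(1, 2, 3), (-1, 1, 4), (3, 3, 2)]
    return tuple(sum(ci * v[k] for ci, v in zip(c, vectors)) for k in range(3))

print(combo(3), combo(-1))  # (4, 5, 5) (4, 5, 5)
```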

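Example 3: Gauss-Jordan Elimination
$$\left[\begin{array}{ccc|c}1&0&1&x\\2&1&1&y\\0&-1&2&z\end{array}\right]\approx\left[\begin{array}{ccc|c}1&0&1&x\\0&1&-1&y-2x\\0&0&1&z+y-2x\end{array}\right]\approx\left[\begin{array}{ccc|c}1&0&0&3x-y-z\\0&1&0&-4x+2y+z\\0&0&1&-2x+y+z\end{array}\right]$$
Thus $(x,y,z)=(3x-y-z)(1,2,0)+(-4x+2y+z)(0,1,-1)+(-2x+y+z)(1,1,2)$.
• For example, $(x,y,z)=(1,0,0)$: $(1,0,0)=3(1,2,0)-4(0,1,-1)-2(1,1,2)$.
• $(x,y,z)=(2,4,-1)$: $(2,4,-1)=(6-4+1)(1,2,0)-(0,1,-1)-(1,1,2)$.

Theorem 4.3: Let $\mathbf{v}_1,\dots,\mathbf{v}_m$ be vectors in a vector space $V$. Let $U$ be the set consisting of all linear combinations of $\mathbf{v}_1,\dots,\mathbf{v}_m$. Then $U$ is a subspace of $V$ spanned by the vectors $\mathbf{v}_1,\dots,\mathbf{v}_m$. ($U$ is the vector space generated by $\mathbf{v}_1,\dots,\mathbf{v}_m$, denoted $\operatorname{Span}\{\mathbf{v}_1,\dots,\mathbf{v}_m\}$.)
Proof: Let $\mathbf{u}_1=a_1\mathbf{v}_1+\dots+a_m\mathbf{v}_m$ and $\mathbf{u}_2=b_1\mathbf{v}_1+\dots+b_m\mathbf{v}_m$ be arbitrary elements of $U$, and let $c$ be an arbitrary scalar. Then
(1) $U$ is closed under vector addition: $\mathbf{u}_1+\mathbf{u}_2=(a_1\mathbf{v}_1+\dots+a_m\mathbf{v}_m)+(b_1\mathbf{v}_1+\dots+b_m\mathbf{v}_m)=(a_1+b_1)\mathbf{v}_1+\dots+(a_m+b_m)\mathbf{v}_m\in U$.
(2) $U$ is closed under scalar multiplication: $c\mathbf{u}_1=c(a_1\mathbf{v}_1+\dots+a_m\mathbf{v}_m)=ca_1\mathbf{v}_1+\dots+ca_m\mathbf{v}_m\in U$.

Example 4
Consider the vectors $(-1,5,3)$ and $(2,-3,4)$ in $\mathbb{R}^3$. Let $U=\operatorname{Span}\{(-1,5,3),(2,-3,4)\}$.
• $U$ is a subspace consisting of all vectors of the form $c_1(-1,5,3)+c_2(2,-3,4)$.
• $c_1=1$, $c_2=1$ gives the vector $(1,2,7)$; $c_1=2$, $c_2=3$ gives the vector $(4,1,18)$.
• $U$ is made up of all vectors in the plane defined by the vectors $(-1,5,3)$ and $(2,-3,4)$.

Example 6
Determine whether the matrix $\begin{bmatrix}-1&7\\8&-1\end{bmatrix}$ is a linear combination of the matrices $\begin{bmatrix}1&0\\2&1\end{bmatrix}$, $\begin{bmatrix}2&-3\\0&2\end{bmatrix}$, and $\begin{bmatrix}0&1\\2&0\end{bmatrix}$ in the vector space $M_{22}$ of $2\times 2$ matrices.
Solution:
$$c_1\begin{bmatrix}1&0\\2&1\end{bmatrix}+c_2\begin{bmatrix}2&-3\\0&2\end{bmatrix}+c_3\begin{bmatrix}0&1\\2&0\end{bmatrix}=\begin{bmatrix}-1&7\\8&-1\end{bmatrix}\;\Longrightarrow\;\begin{aligned}c_1+2c_2&=-1\\-3c_2+c_3&=7\\2c_1+2c_3&=8\\c_1+2c_2&=-1\end{aligned}$$
• Unique solution $c_1=3$, $c_2=-2$, $c_3=1$:
$$\begin{bmatrix}-1&7\\8&-1\end{bmatrix}=3\begin{bmatrix}1&0\\2&1\end{bmatrix}-2\begin{bmatrix}2&-3\\0&2\end{bmatrix}+\begin{bmatrix}0&1\\2&0\end{bmatrix}.$$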
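
Because Gauss-Jordan elimination in Example 3 produced explicit formulas for $c_1,c_2,c_3$, we can spot-check them on many vectors at once, and likewise confirm the coefficients found in Example 6. A small Python sketch (the function names `span_coeffs` and `combine` are ours); flattening each $2\times 2$ matrix row by row lets the same helper treat $M_{22}$ like $\mathbb{R}^4$.

```python
import itertools

def span_coeffs(x, y, z):
    """Coefficients from Example 3: (x, y, z) = c1(1,2,0) + c2(0,1,-1) + c3(1,1,2)."""
    return (3 * x - y - z, -4 * x + 2 * y + z, -2 * x + y + z)

def combine(coeffs, vectors):
    """Return the linear combination sum(c_i * v_i) of equal-length tuples."""
    return tuple(sum(c * v[i] for c, v in zip(coeffs, vectors))
                 for i in range(len(vectors[0])))

basis = [(1, 2, 0), (0, 1, -1), (1, 1, 2)]
for x, y, z in itertools.product(range(-3, 4), repeat=3):
    assert combine(span_coeffs(x, y, z), basis) == (x, y, z)
print(span_coeffs(2, 4, -1))  # (3, -1, -1)

# Example 6: matrices flattened row by row, [[a, b], [c, d]] -> (a, b, c, d).
mats = [(1, 0, 2, 1), (2, -3, 0, 2), (0, 1, 2, 0)]
print(combine((3, -2, 1), mats))  # (-1, 7, 8, -1), i.e. [[-1, 7], [8, -1]]
```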

Example 7
Show that the function $h(x)=4x^2+3x-7$ lies in the space $\operatorname{Span}\{f,g\}$ generated by $f(x)=2x^2-5$ and $g(x)=x+1$.
Solution: Check whether there exist scalars $c_1$ and $c_2$ such that $c_1(2x^2-5)+c_2(x+1)=4x^2+3x-7$. Equating corresponding coefficients:
$x^2$: $2c_1=4$; $x$: $c_2=3$; $x^0$: $-5c_1+c_2=-7$.
Unique solution $c_1=2$, $c_2=3$. Thus $2(2x^2-5)+3(x+1)=4x^2+3x-7$.

4.3 Linear Dependence and Independence
Definition
(a) The set of vectors $\{\mathbf{v}_1,\dots,\mathbf{v}_m\}$ in a vector space $V$ is said to be linearly dependent if there exist scalars $c_1,\dots,c_m$, not all zero, such that $c_1\mathbf{v}_1+\dots+c_m\mathbf{v}_m=\mathbf{0}$.
• "Not all zero": for example $(c_1,\dots,c_m)=(1,0,\dots,0)$ or $(1,1,\dots,1)$.
(b) The set of vectors $\{\mathbf{v}_1,\dots,\mathbf{v}_m\}$ is linearly independent if $c_1\mathbf{v}_1+\dots+c_m\mathbf{v}_m=\mathbf{0}$ can only be satisfied when $c_1=0,\dots,c_m=0$.

Example 2(a)
• Consider $f(x)=x^2+1$, $g(x)=3x-1$, $h(x)=-4x+1$ in the vector space $P_2$ of polynomials of degree $\le 2$.
• Show that $\{f,g,h\}$ is linearly independent.
Solution: $c_1f+c_2g+c_3h=c_1(x^2+1)+c_2(3x-1)+c_3(-4x+1)=0$. Equating coefficients:
$x^2$: $c_1=0$; $x$: $3c_2-4c_3=0$; $x^0$: $c_1-c_2+c_3=0$.
• Unique solution $c_1=0$, $c_2=0$, $c_3=0$.

Theorem 4.4 A set consisting of two or more vectors in a vector space is linearly dependent if and only if it is possible to express one of the vectors as a linear combination of the other vectors.
Proof ($\Rightarrow$) Let the set $\{\mathbf{v}_1,\mathbf{v}_2,\dots,\mathbf{v}_m\}$ be linearly dependent. Then there exist scalars $c_1,c_2,\dots,c_m$, not all zero, such that $c_1\mathbf{v}_1+c_2\mathbf{v}_2+\dots+c_m\mathbf{v}_m=\mathbf{0}$. Assume (relabeling if necessary) that $c_1\neq 0$. Then
$$\mathbf{v}_1=\left(-\frac{c_2}{c_1}\right)\mathbf{v}_2+\dots+\left(-\frac{c_m}{c_1}\right)\mathbf{v}_m.$$
($\Leftarrow$) Assume that $\mathbf{v}_1$ is a linear combination of $\mathbf{v}_2,\dots,\mathbf{v}_m$: there exist scalars $d_2,\dots,d_m$ such that $\mathbf{v}_1=d_2\mathbf{v}_2+\dots+d_m\mathbf{v}_m$. Then $1\mathbf{v}_1+(-d_2)\mathbf{v}_2+\dots+(-d_m)\mathbf{v}_m=\mathbf{0}$, and the set is linearly dependent because the coefficients are not all zero (the coefficient of $\mathbf{v}_1$ is $1$).
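
When there are as many vectors as coordinates, the homogeneous system in Example 2(a) has only the trivial solution exactly when its coefficient matrix has a nonzero determinant (the equivalence (a)⇔(d) of Theorem 4.18 later in this chapter). The Python sketch below (the helpers `det3` and `columns_to_matrix` are ours) applies that test to $f,g,h$, writing each polynomial by its coefficients of $x^2$, $x$, $1$, and also to the set from Example 2 of Section 4.4.

```python
def det3(m):
    """Determinant of a 3x3 matrix (list of rows) by cofactor expansion along row 0."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def columns_to_matrix(cols):
    """Place the given vectors as the columns of a 3x3 matrix."""
    return [list(row) for row in zip(*cols)]

# f = x^2 + 1, g = 3x - 1, h = -4x + 1 as coefficient vectors (x^2, x, 1).
f, g, h = (1, 0, 1), (0, 3, -1), (0, -4, 1)
print(det3(columns_to_matrix([f, g, h])))  # -1: nonzero, so {f, g, h} is independent

# Example 2 of Section 4.4: three independent vectors in R^3 form a basis.
print(det3(columns_to_matrix([(1, 3, 1), (2, 1, 0), (4, 2, 1)])))  # -5
```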

Figures: Linear dependence and independence of $\{\mathbf{v}_1,\mathbf{v}_2\}$ in $\mathbb{R}^3$ ($\{\mathbf{v}_1,\mathbf{v}_2\}$ linearly dependent: the vectors lie on a line, $\mathbf{v}_2=c\mathbf{v}_1$; $\{\mathbf{v}_1,\mathbf{v}_2\}$ linearly independent: the vectors do not lie on a line); linear dependence of $\{\mathbf{v}_1,\mathbf{v}_2,\mathbf{v}_3\}$.

Theorem 4.5
Let $V$ be a vector space. Any set of vectors in $V$ that contains the zero vector is linearly dependent.
Proof
• Consider the set $\{\mathbf{0},\mathbf{v}_2,\dots,\mathbf{v}_m\}$, which contains the zero vector.
• Examine the identity $c_1\mathbf{0}+c_2\mathbf{v}_2+\dots+c_m\mathbf{v}_m=\mathbf{0}$.
• The identity is true for $c_1=1$, $c_2=0,\dots,c_m=0$ (not all zero).

4.4 Properties of Bases
• Definition: A set of vectors $\{\mathbf{v}_1,\dots,\mathbf{v}_m\}$ is called a basis for a vector space $V$ if the set spans $V$ and is linearly independent.
• Linear independence and spanning are DIFFERENT concepts. Take $\mathbb{R}^3$ as an example:
— $(1,0,0)$ and $(0,1,0)$ are linearly independent (li) but not a spanning set (ss).
li: $p(1,0,0)+q(0,1,0)=(0,0,0)\Rightarrow p=q=0$.
Not ss: $(1,1,1)=p(1,0,0)+q(0,1,0)$ has no solution.
— $(1,0,0)$, $(0,1,0)$, $(0,0,1)$, $(1,1,0)$ form a spanning set but are not li.
ss: $(a,b,c)=a(1,0,0)+b(0,1,0)+c(0,0,1)+0(1,1,0)$.
Not li: $1(1,0,0)+1(0,1,0)+0(0,0,1)+(-1)(1,1,0)=\mathbf{0}$.

Theorem 4.8
Let $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ be a basis for a vector space $V$. If $\{\mathbf{w}_1,\dots,\mathbf{w}_m\}$ is a set of more than $n$ vectors in $V$, then this set is linearly dependent.
Idea: In $\mathbb{R}^2$ (a basis has two vectors), any set of three vectors is linearly dependent. More detail in the book.
• Application:
($p\Rightarrow q$) If $m>n$, then $\{\mathbf{w}_1,\dots,\mathbf{w}_m\}$ is linearly dependent.
($\neg q\Rightarrow\neg p$) If $\{\mathbf{w}_1,\dots,\mathbf{w}_m\}$ is linearly independent, then $m\le n$.

Theorem 4.9
Any two bases for a vector space $V$ consist of the same number of vectors.
Proof
• Let $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ and $\{\mathbf{w}_1,\dots,\mathbf{w}_m\}$ be two bases for $V$.
• Regarding $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ as a basis and $\{\mathbf{w}_1,\dots,\mathbf{w}_m\}$ as a set of linearly independent vectors, the previous theorem tells us that $m\le n$.
• Regarding $\{\mathbf{w}_1,\dots,\mathbf{w}_m\}$ as a basis and $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ as a set of linearly independent vectors, $n\le m$.
• Thus $n=m$ (the same number of vectors).

Definition
If a vector space $V$ has a basis consisting of $n$ vectors, then the dimension of $V$ is said to be $n$, written $\dim(V)$.
• Example: The set of $n$ vectors $\{(1,0,\dots,0),\dots,(0,\dots,0,1)\}$ forms a basis (the standard basis) for $\mathbb{R}^n$. Thus the dimension of $\mathbb{R}^n$ is $n$.
• If a basis for a vector space is a finite set, then the vector space is finite dimensional.
• If no such finite set exists, then the vector space is infinite dimensional. For example, the function space: $x^{n+1}$ cannot be written as $c_0+c_1x+\dots+c_nx^n$ for any scalars $c_0,\dots,c_n$.

Theorem 4.10
• The origin $\{\mathbf{0}\}$ is a subspace of $\mathbb{R}^3$; its dimension is defined to be $0$.
• The one-dimensional subspaces of $\mathbb{R}^3$ are lines through the origin.
• The two-dimensional subspaces of $\mathbb{R}^3$ are planes through the origin.

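Theorem
Let $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ be a basis for a vector space $V$. Then each vector in $V$ can be expressed uniquely as a linear combination of these vectors.
Proof
• Let $\mathbf{v}$ be a vector in $V$.
• Since $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ is a basis, we can express $\mathbf{v}$ as a linear combination of these vectors. Suppose we can write
$$\mathbf{v}=a_1\mathbf{v}_1+\dots+a_n\mathbf{v}_n\quad\text{and}\quad\mathbf{v}=b_1\mathbf{v}_1+\dots+b_n\mathbf{v}_n.$$
Subtracting, $(a_1-b_1)\mathbf{v}_1+\dots+(a_n-b_n)\mathbf{v}_n=\mathbf{0}$.
• Since $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ is a basis, $\mathbf{v}_1,\dots,\mathbf{v}_n$ are linearly independent, so $a_1-b_1=0,\dots,a_n-b_n=0$, that is, $a_1=b_1,\dots,a_n=b_n$.

Examples
• The vector space $M_{22}$ of $2\times 2$ matrices. The matrices
$$\begin{bmatrix}1&0\\0&0\end{bmatrix},\ \begin{bmatrix}0&1\\0&0\end{bmatrix},\ \begin{bmatrix}0&0\\1&0\end{bmatrix},\ \begin{bmatrix}0&0\\0&1\end{bmatrix}$$
span $M_{22}$ and are linearly independent, so the dimension is 4. Similarly, the dimension of $M_{mn}$ is $mn$.
• The vector space $P_n$ of polynomials of degree $\le n$: $\{x^n,x^{n-1},\dots,x,1\}$ is a basis for $P_n$, so its dimension is $n+1$.
• Consider the vector space $\mathbb{C}^n$ ($\mathbb{C}$: complex numbers). The set $\{(1,0,\dots,0),\dots,(0,\dots,0,1)\}$ is a (standard) basis for $\mathbb{C}^n$, and its dimension is $n$. For example, $(1+i,\,i)=(1+i)(1,0)+i(0,1)$.

Theorem 4.11
Let $V$ be a vector space of dimension $n$.
(a) If $S=\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ is a set of $n$ linearly independent vectors in $V$, then $S$ is a basis for $V$.
(b) If $S=\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ is a set of $n$ vectors that spans $V$, then $S$ is a basis for $V$.

Example 2
Prove that the set $\{(1,3,1),(2,1,0),(4,2,1)\}$ is a basis for $\mathbb{R}^3$.
Solution
• The dimension of $\mathbb{R}^3$ is 3, so a basis consists of three vectors.
• By Theorem 4.11, it suffices to check linear independence: $c_1(1,3,1)+c_2(2,1,0)+c_3(4,2,1)=(0,0,0)$.
• Unique solution: $c_1=c_2=c_3=0$.

4.5 Rank
Definition: Let $A$ be a $3\times 4$ matrix, for example
$$A=\begin{bmatrix}1&2&-1&2\\3&4&1&6\\5&4&1&0\end{bmatrix}.$$
• Row space: the row vectors $\mathbf{r}_1=(1,2,-1,2)$, $\mathbf{r}_2=(3,4,1,6)$, $\mathbf{r}_3=(5,4,1,0)$ span a subspace of $\mathbb{R}^{1\times 4}$.
• Column space: the column vectors $\mathbf{c}_1=\begin{bmatrix}1\\3\\5\end{bmatrix},\ \mathbf{c}_2=\begin{bmatrix}2\\4\\4\end{bmatrix},\ \mathbf{c}_3=\begin{bmatrix}-1\\1\\1\end{bmatrix},\ \mathbf{c}_4=\begin{bmatrix}2\\6\\0\end{bmatrix}$ span a subspace of $\mathbb{R}^{3\times 1}$.
• Application: $A\mathbf{x}=\mathbf{b}$ with $A\in\mathbb{R}^{m\times n}$, $\mathbf{x}\in\mathbb{R}^{n\times 1}$, $\mathbf{b}\in\mathbb{R}^{m\times 1}$. Writing $A=[A_1\ \cdots\ A_n]$ by columns, $A\mathbf{x}=x_1A_1+\dots+x_nA_n=\mathbf{b}$, so the system has a solution exactly when $\mathbf{b}$ lies in the column space of $A$.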

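Rank
• Theorem 4.13: The row space and the column space of a matrix $A$ have the same dimension (see the book for a proof).
• This common dimension is called the rank of $A$, denoted $\operatorname{rank}(A)$; $\operatorname{rank}(A)\le\min(m,n)$ for $A\in\mathbb{R}^{m\times n}$.
• Example: $A=\begin{bmatrix}1&2&0&0\\0&0&1&0\end{bmatrix}$.
— Row space: $\operatorname{Span}\{(1,2,0,0),(0,0,1,0)\}\subseteq\mathbb{R}^{1\times 4}$, of dimension 2.
— Column space: $\operatorname{Span}\left\{\begin{bmatrix}1\\0\end{bmatrix},\begin{bmatrix}2\\0\end{bmatrix},\begin{bmatrix}0\\1\end{bmatrix},\begin{bmatrix}0\\0\end{bmatrix}\right\}=\operatorname{Span}\left\{\begin{bmatrix}1\\0\end{bmatrix},\begin{bmatrix}0\\1\end{bmatrix}\right\}=\mathbb{R}^{2\times 1}$, of dimension 2.

Properties of the Rank
Example 2: $A=\begin{bmatrix}1&2&3\\0&1&2\\2&5&8\end{bmatrix}$
— $(2,5,8)=2(1,2,3)+(0,1,2)$.
— $(1,2,3)$ and $(0,1,2)$ are linearly independent, so $\operatorname{rank}(A)=2$.
Theorem 4.14: The nonzero row vectors of a matrix $A$ that is in reduced echelon form are a basis for the row space of $A$.
— Example 3: $A=\begin{bmatrix}1&2&0&0\\0&0&1&0\\0&0&0&1\\0&0&0&0\end{bmatrix}$ has three nonzero row vectors, so $\operatorname{rank}(A)=3$.

Theorem 4.15
Let $A$ and $B$ be row equivalent matrices. Then $A$ and $B$ have the same row space and $\operatorname{rank}(A)=\operatorname{rank}(B)$.
• Idea of proof: each elementary row operation replaces the rows $A_1,A_2,A_3$ of $A$ by linear combinations of them, for example
$$\begin{bmatrix}1&0&0\\0&0&1\\0&1&0\end{bmatrix}\begin{bmatrix}A_1\\A_2\\A_3\end{bmatrix}=\begin{bmatrix}A_1\\A_3\\A_2\end{bmatrix},\quad\begin{bmatrix}1&0&0\\0&1&0\\0&0&4\end{bmatrix}\begin{bmatrix}A_1\\A_2\\A_3\end{bmatrix}=\begin{bmatrix}A_1\\A_2\\4A_3\end{bmatrix},\quad\begin{bmatrix}1&0&0\\0&1&0\\1&0&1\end{bmatrix}\begin{bmatrix}A_1\\A_2\\A_3\end{bmatrix}=\begin{bmatrix}A_1\\A_2\\A_1+A_3\end{bmatrix},$$
and each operation can be undone, so the rows of $B$ span the same space as the rows of $A$.

Theorem 4.16
Let $E$ be the reduced echelon form of a matrix $A$. Then the nonzero row vectors of $E$ form a basis for the row space of $A$. The rank of $A$ is the number of nonzero row vectors in $E$.
Proof: $A$ and $E$ are row equivalent; apply Theorems 4.15 and 4.14.

Example 4
Use elementary row operations to find a reduced echelon form of $A=\begin{bmatrix}1&2&3\\2&5&4\\1&1&5\end{bmatrix}$, a basis for its row space, and its rank.
Solution
$$\begin{bmatrix}1&2&3\\2&5&4\\1&1&5\end{bmatrix}\approx\begin{bmatrix}1&2&3\\0&1&-2\\0&-1&2\end{bmatrix}\approx\begin{bmatrix}1&0&7\\0&1&-2\\0&0&0\end{bmatrix}$$
• The two vectors $(1,0,7)$ and $(0,1,-2)$ form a basis for the row space of $A$, so $\operatorname{rank}(A)=2$.
• $(1,2,3)=(1,0,7)+2(0,1,-2)$.
• $(2,5,4)=2(1,0,7)+5(0,1,-2)$.
• $(1,1,5)=(1,0,7)+(0,1,-2)$.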
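
Theorem 4.16 turns rank into a counting problem: reduce to echelon form and count nonzero rows. The Python sketch below is one way to do that with exact `fractions.Fraction` arithmetic; `rref` and `rank` are our own helper names, and the matrices are Examples 2, 3 and 4 above.

```python
from fractions import Fraction

def rref(rows):
    """Reduced row echelon form of a matrix (list of rows), computed with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        pivot = next((r for r in range(pivot_row, n_rows) if m[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        p = m[pivot_row][col]
        m[pivot_row] = [x / p for x in m[pivot_row]]
        for r in range(n_rows):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
    return m

def rank(rows):
    """Theorem 4.16: rank = number of nonzero rows in the reduced echelon form."""
    return sum(1 for row in rref(rows) if any(x != 0 for x in row))

A4 = [[1, 2, 3], [2, 5, 4], [1, 1, 5]]   # Example 4
E = rref(A4)
# E == [[1, 0, 7], [0, 1, -2], [0, 0, 0]], so (1, 0, 7) and (0, 1, -2) are a basis of the row space.
print(rank(A4))                                                         # 2
print(rank([[1, 2, 3], [0, 1, 2], [2, 5, 8]]))                          # 2 (Example 2)
print(rank([[1, 2, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 0, 0]]))   # 3 (Example 3)
```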

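Example 5
Find a basis for the column space of
$$A=\begin{bmatrix}1&1&0\\2&3&2\\-1&-4&-6\end{bmatrix},\qquad A^t=\begin{bmatrix}1&2&-1\\1&3&-4\\0&2&-6\end{bmatrix}.$$
• (1) The column space of $A$ becomes the row space of $A^t$.
• (2) Find a basis for the row space of $A^t$ from the reduced echelon form of $A^t$:
$$\begin{bmatrix}1&2&-1\\1&3&-4\\0&2&-6\end{bmatrix}\approx\begin{bmatrix}1&2&-1\\0&1&-3\\0&2&-6\end{bmatrix}\approx\begin{bmatrix}1&0&5\\0&1&-3\\0&0&0\end{bmatrix}$$
• (3) A basis for the column space of $A$ is $\left\{\begin{bmatrix}1\\0\\5\end{bmatrix},\begin{bmatrix}0\\1\\-3\end{bmatrix}\right\}$. Indeed,
$$\begin{bmatrix}1\\2\\-1\end{bmatrix}=\begin{bmatrix}1\\0\\5\end{bmatrix}+2\begin{bmatrix}0\\1\\-3\end{bmatrix},\quad\begin{bmatrix}1\\3\\-4\end{bmatrix}=\begin{bmatrix}1\\0\\5\end{bmatrix}+3\begin{bmatrix}0\\1\\-3\end{bmatrix},\quad\begin{bmatrix}0\\2\\-6\end{bmatrix}=2\begin{bmatrix}0\\1\\-3\end{bmatrix}.$$

Theorem 4.18
Let $A$ be an $n\times n$ matrix. The following statements are equivalent.
(a) $|A|\neq 0$ ($A$ is nonsingular). (Ch. 3)
(b) $A$ is invertible. (Ch. 2)
(c) $A$ is row equivalent to $I_n$. (Ch. 2)
(d) The system of equations $AX=B$ has a unique solution. (Ch. 1)
(e) $\operatorname{rank}(A)=n$. (Sec. 4.5)
(f) The column vectors of $A$ form a basis for $\mathbb{R}^n$. (Sec. 4.4)
— (Slide 4-33) Each vector in $V$ can be expressed uniquely as a linear combination of the basis: $AX=x_1A_1+\dots+x_nA_n=B$.

Example 8
$$A=\begin{bmatrix}1&-1&-2\\2&-3&-5\\-1&3&5\end{bmatrix}\approx\begin{bmatrix}1&0&0\\0&1&0\\0&0&1\end{bmatrix}$$
(a) Nonsingular ($|A|=-1\neq 0$).
(b) Invertible, and $A^{-1}=\begin{bmatrix}0&1&1\\5&-3&-1\\-3&2&1\end{bmatrix}$.
(c) Row equivalent to $I_3$.
(d) Unique solution: if $b_1=1$, $b_2=3$, $b_3=-2$, then $x_1=1$, $x_2=-2$, $x_3=1$.
(e) $\operatorname{rank}(A)=3$.
(f) The column vectors of $A$ form a basis for $\mathbb{R}^3$.

Singular Case
• $|A|=0$ and $\mathbf{b}$ in the column space of $A$: infinitely many solutions (the kernel is nontrivial). For example,
$$\begin{bmatrix}1&0&0\\0&1&0\\0&0&0\end{bmatrix}\mathbf{x}=\begin{bmatrix}2\\4\\0\end{bmatrix}:\quad\begin{bmatrix}2\\4\\0\end{bmatrix}=2\begin{bmatrix}1\\0\\0\end{bmatrix}+4\begin{bmatrix}0\\1\\0\end{bmatrix}\ (x_3\text{ arbitrary});$$
$$\begin{bmatrix}1&0&0\\0&1&1\\0&0&0\end{bmatrix}\mathbf{x}=\begin{bmatrix}1\\1\\0\end{bmatrix}:\quad\begin{bmatrix}1\\1\\0\end{bmatrix}=\begin{bmatrix}1\\0\\0\end{bmatrix}+(1-r)\begin{bmatrix}0\\1\\0\end{bmatrix}+r\begin{bmatrix}0\\1\\0\end{bmatrix}.$$
• $|A|=0$ and $\mathbf{b}$ not in the column space of $A$: no solution. For example, $\begin{bmatrix}1&0&0\\0&1&0\\0&0&0\end{bmatrix}\mathbf{x}=\begin{bmatrix}0\\4\\1\end{bmatrix}$.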
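
Several of the equivalent statements in Theorem 4.18 can be checked numerically for Example 8. This Python sketch (the helpers `matmul` and `det3` are ours) confirms $|A|=-1$, that the stated $A^{-1}$ really is the inverse, and that $A^{-1}\mathbf{b}$ gives the unique solution in part (d).

```python
def matmul(X, Y):
    """Product of matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along row 0."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

A = [[1, -1, -2], [2, -3, -5], [-1, 3, 5]]
A_inv = [[0, 1, 1], [5, -3, -1], [-3, 2, 1]]
I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

print(det3(A))                    # -1, so A is nonsingular
print(matmul(A, A_inv) == I3)     # True
b = [[1], [3], [-2]]
print(matmul(A_inv, b))           # [[1], [-2], [1]]
```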

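4.6 Orthonormal Vectors and Projections
Definition: A set of vectors is said to be an orthogonal set if every pair of distinct vectors in the set is orthogonal.
• The set is said to be an orthonormal set if it is orthogonal and each vector is a unit vector.
• Example 1: $\left\{(1,0,0),\ \left(0,\tfrac35,\tfrac45\right),\ \left(0,\tfrac45,-\tfrac35\right)\right\}$ is an orthonormal set.
(1) Orthogonal:
$$(1,0,0)\cdot\left(0,\tfrac35,\tfrac45\right)=0;\quad(1,0,0)\cdot\left(0,\tfrac45,-\tfrac35\right)=0;\quad\left(0,\tfrac35,\tfrac45\right)\cdot\left(0,\tfrac45,-\tfrac35\right)=\tfrac{12}{25}-\tfrac{12}{25}=0.$$
For $n$ vectors one must check $\binom{n}{2}=\frac{n(n-1)}{2}$ pairs: 3 checks for $n=3$, 6 checks for $n=4$.
(2) Unit vectors:
$$\|(1,0,0)\|=\sqrt{1^2+0^2+0^2}=1,\quad\left\|\left(0,\tfrac35,\tfrac45\right)\right\|=\sqrt{0+\tfrac{9}{25}+\tfrac{16}{25}}=1,\quad\left\|\left(0,\tfrac45,-\tfrac35\right)\right\|=\sqrt{0+\tfrac{16}{25}+\tfrac{9}{25}}=1.$$

Theorem 4.19
An orthogonal set of nonzero vectors is linearly independent.
Proof: Let $\{\mathbf{v}_1,\dots,\mathbf{v}_m\}$ be an orthogonal set of nonzero vectors, and suppose $c_1\mathbf{v}_1+c_2\mathbf{v}_2+\dots+c_m\mathbf{v}_m=\mathbf{0}$. Is $c_i=0$? Taking the dot product with $\mathbf{v}_i$,
$$(c_1\mathbf{v}_1+\dots+c_m\mathbf{v}_m)\cdot\mathbf{v}_i=\mathbf{0}\cdot\mathbf{v}_i=0\;\Rightarrow\;c_1\,\mathbf{v}_1\cdot\mathbf{v}_i+\dots+c_m\,\mathbf{v}_m\cdot\mathbf{v}_i=0.$$
• If $j\neq i$, then $\mathbf{v}_j\cdot\mathbf{v}_i=0$ (mutually orthogonal), so $c_i\,\mathbf{v}_i\cdot\mathbf{v}_i=0$.
• Since $\mathbf{v}_i$ is a nonzero vector, $\mathbf{v}_i\cdot\mathbf{v}_i\neq 0$, so $c_i=0$.
• Letting $i=1,\dots,m$, we get $c_1=0,\dots,c_m=0$.

Definition
Orthogonal basis: a basis that is an orthogonal set.
Orthonormal basis: a basis that is an orthonormal set (orthogonal, and every vector has $\|\mathbf{u}\|=1$).
Standard Bases
• $\mathbb{R}^2$: $\{(1,0),(0,1)\}$
• $\mathbb{R}^3$: $\{(1,0,0),(0,1,0),(0,0,1)\}$
• $\mathbb{R}^n$: $\{(1,0,\dots,0),\dots,(0,\dots,0,1)\}$
These are orthonormal bases.
Theorem 4.20: Let $\{\mathbf{u}_1,\dots,\mathbf{u}_n\}$ be an orthonormal basis and $\mathbf{v}$ any vector. Then
$$\mathbf{v}=(\mathbf{v}\cdot\mathbf{u}_1)\mathbf{u}_1+(\mathbf{v}\cdot\mathbf{u}_2)\mathbf{u}_2+\dots+(\mathbf{v}\cdot\mathbf{u}_n)\mathbf{u}_n.$$
Proof: $\mathbf{v}=c_1\mathbf{u}_1+\dots+c_n\mathbf{u}_n$ uniquely, so $\mathbf{v}\cdot\mathbf{u}_i=(c_1\mathbf{u}_1+\dots+c_n\mathbf{u}_n)\cdot\mathbf{u}_i=c_i\,\mathbf{u}_i\cdot\mathbf{u}_i=c_i$.
• No Gauss-Jordan elimination is needed (compare slide 17).

Orthogonal Matrices (1/2)
Definition: An orthogonal matrix is an invertible matrix that has the property $A^{-1}=A^t$, that is, $AA^t=A^tA=I$.
Theorem 4.21: The following statements are equivalent.
— (a) $A$ is orthogonal.
— (b) The column vectors of $A$ form an orthonormal set.
— (c) The row vectors of $A$ form an orthonormal set.
— Proof: we prove (a) $\Leftrightarrow$ (b) only. Let $A=[A_1\ \cdots\ A_n]$. By assumption,
$$A^tA=\begin{bmatrix}A_1^t\\\vdots\\A_n^t\end{bmatrix}[A_1\ \cdots\ A_n]=\begin{bmatrix}A_1^tA_1&\cdots&A_1^tA_n\\\vdots&\ddots&\vdots\\A_n^tA_1&\cdots&A_n^tA_n\end{bmatrix}=I\iff A_i^tA_i=1,\ A_i^tA_j=0\ (i\neq j).$$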
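
Theorem 4.20 says coordinates with respect to an orthonormal basis are just dot products. The Python sketch below uses the basis of Example 1 with exact fractions (the helpers `coordinates` and `rebuild` and the test vector $(2,1,7)$ are our own choices): it first verifies orthonormality, then recovers a vector from its dot products without solving any linear system.

```python
from fractions import Fraction as F

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Orthonormal basis from Example 1.
basis = [(F(1), F(0), F(0)), (F(0), F(3, 5), F(4, 5)), (F(0), F(4, 5), F(-3, 5))]

# Orthonormality: u_i . u_j is 1 when i == j and 0 otherwise.
for i, u in enumerate(basis):
    for j, w in enumerate(basis):
        assert dot(u, w) == (1 if i == j else 0)

def coordinates(v):
    """Theorem 4.20: the coefficient of u_i is simply v . u_i."""
    return [dot(v, u) for u in basis]

def rebuild(coeffs):
    """Form c_1 u_1 + c_2 u_2 + c_3 u_3."""
    return tuple(sum(c * u[k] for c, u in zip(coeffs, basis)) for k in range(3))

v = (2, 1, 7)
c = coordinates(v)
print(c)                 # [Fraction(2, 1), Fraction(31, 5), Fraction(-17, 5)]
print(rebuild(c) == v)   # True
```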

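Orthogonal Matrices (2/2)
Theorem 4.22: If $A$ is an orthogonal matrix, then
— (a) $|A|=\pm 1$;
— (b) $A^{-1}$ is an orthogonal matrix.
Proof:
— (a) By assumption $AA^t=I$. Then $|AA^t|=|I|$, so $|A|\,|A^t|=|A|\,|A|=|A|^2=1$ and $|A|=\pm 1$.
— (b) $A$ orthogonal $\Rightarrow$ the column vectors of $A$ are orthonormal (Thm 4.21 (b)) $\Rightarrow$ the row vectors of $A^t$ are orthonormal. Also $A$ orthogonal $\Rightarrow A^t=A^{-1}$, so the rows of $A^{-1}$ are orthonormal and $A^{-1}$ is orthogonal by Thm 4.21 (c).

Projection of One Vector onto Another Vector
Definition: The projection of a vector $\mathbf{v}$ onto a nonzero vector $\mathbf{u}$ in $\mathbb{R}^n$ is
$$\operatorname{proj}_{\mathbf{u}}\mathbf{v}=\frac{\mathbf{v}\cdot\mathbf{u}}{\mathbf{u}\cdot\mathbf{u}}\,\mathbf{u}.$$
(Figure: $\overrightarrow{OA}$ has length $\|\mathbf{v}\|\cos\theta$, so $\overrightarrow{OA}=\|\mathbf{v}\|\cos\theta\,\dfrac{\mathbf{u}}{\|\mathbf{u}\|}=\|\mathbf{v}\|\dfrac{\mathbf{v}\cdot\mathbf{u}}{\|\mathbf{v}\|\,\|\mathbf{u}\|}\dfrac{\mathbf{u}}{\|\mathbf{u}\|}=\dfrac{\mathbf{v}\cdot\mathbf{u}}{\mathbf{u}\cdot\mathbf{u}}\mathbf{u}$.)
Example: Project $(x,y)$ onto the $x$-axis:
$$\frac{(x,y)\cdot(1,0)}{(1,0)\cdot(1,0)}(1,0)=\frac{x}{1}(1,0)=(x,0);\qquad\text{for }(1,3):\ \frac{(1,3)\cdot(1,0)}{(1,0)\cdot(1,0)}(1,0)=(1,0).$$

Example 3
Determine the projection of the vector $\mathbf{v}=(6,7)$ onto the vector $\mathbf{u}=(1,4)$.
Solution
$$\mathbf{v}\cdot\mathbf{u}=(6,7)\cdot(1,4)=6+28=34,\qquad\mathbf{u}\cdot\mathbf{u}=(1,4)\cdot(1,4)=1+16=17.$$
Thus
$$\operatorname{proj}_{\mathbf{u}}\mathbf{v}=\frac{\mathbf{v}\cdot\mathbf{u}}{\mathbf{u}\cdot\mathbf{u}}\mathbf{u}=\frac{34}{17}(1,4)=(2,8).$$
• Suppose the vector $\mathbf{v}=(6,7)$ represents a force acting on a body located at the origin. Then $\operatorname{proj}_{\mathbf{u}}\mathbf{v}=(2,8)$ is the component of the force in the direction of $\mathbf{u}=(1,4)$.

Theorem 4.23
The Gram-Schmidt Orthogonalization Process:
• Let $\{\mathbf{v}_1,\dots,\mathbf{v}_n\}$ be a basis for a vector space $V$. The set of vectors $\{\mathbf{u}_1,\dots,\mathbf{u}_n\}$ defined as follows is orthogonal:
$$\begin{aligned}\mathbf{u}_1&=\mathbf{v}_1\\\mathbf{u}_2&=\mathbf{v}_2-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_2\\\mathbf{u}_3&=\mathbf{v}_3-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_3-\operatorname{proj}_{\mathbf{u}_2}\mathbf{v}_3\\&\;\;\vdots\\\mathbf{u}_n&=\mathbf{v}_n-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_n-\dots-\operatorname{proj}_{\mathbf{u}_{n-1}}\mathbf{v}_n\end{aligned}$$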
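
The projection formula is one line of code. In this Python sketch (the helper `proj` is ours) exact fractions reproduce Example 3, check that the remainder $\mathbf{v}-\operatorname{proj}_{\mathbf{u}}\mathbf{v}$ is orthogonal to $\mathbf{u}$, and repeat the $x$-axis example.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def proj(v, u):
    """proj_u v = (v . u / u . u) u, for a nonzero vector u."""
    coef = Fraction(dot(v, u), dot(u, u))
    return tuple(coef * x for x in u)

p = proj((6, 7), (1, 4))
print(p)                                  # (Fraction(2, 1), Fraction(8, 1))
residual = tuple(a - b for a, b in zip((6, 7), p))
print(dot(residual, (1, 4)))              # 0: v - proj_u v is orthogonal to u
print(proj((1, 3), (1, 0)))               # (Fraction(1, 1), Fraction(0, 1))
```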

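Example
$\mathbf{v}_1=(1,0,0)$, $\mathbf{v}_2=(2,1,0)$, $\mathbf{v}_3=(3,2,1)$.
(1) $\mathbf{u}_1=\mathbf{v}_1=(1,0,0)$.
(2) $\mathbf{u}_2=\mathbf{v}_2-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_2=(2,1,0)-\dfrac{(2,1,0)\cdot(1,0,0)}{(1,0,0)\cdot(1,0,0)}(1,0,0)=(2,1,0)-(2,0,0)=(0,1,0)$ ($\perp\mathbf{u}_1$).
(3') Subtracting only the projection onto $\mathbf{u}_1$: $\mathbf{v}_3-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_3=(0,2,1)$, which is $\perp\mathbf{u}_1$ but not $\perp\mathbf{u}_2$.
(3) $\mathbf{u}_3=\mathbf{v}_3-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_3-\operatorname{proj}_{\mathbf{u}_2}\mathbf{v}_3=(0,0,1)$ ($\perp\mathbf{u}_1,\mathbf{u}_2$).

Example 4
$\{(1,2,0,3),(4,0,5,8),(8,1,5,6)\}$ is linearly independent in $\mathbb{R}^4$, so it is a basis for a 3-dimensional subspace $V$. Construct an orthonormal basis for $V$.
• Solution: Let $\mathbf{v}_1=(1,2,0,3)$, $\mathbf{v}_2=(4,0,5,8)$, $\mathbf{v}_3=(8,1,5,6)$.
$$\begin{aligned}\mathbf{u}_1&=\mathbf{v}_1=(1,2,0,3)\\\mathbf{u}_2&=\mathbf{v}_2-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_2=(4,0,5,8)-\tfrac{28}{14}(1,2,0,3)=(2,-4,5,2)\\\mathbf{u}_3&=\mathbf{v}_3-\operatorname{proj}_{\mathbf{u}_1}\mathbf{v}_3-\operatorname{proj}_{\mathbf{u}_2}\mathbf{v}_3=(8,1,5,6)-\tfrac{28}{14}(1,2,0,3)-\tfrac{49}{49}(2,-4,5,2)=(4,1,0,-2)\end{aligned}$$
• The set $\{(1,2,0,3),(2,-4,5,2),(4,1,0,-2)\}$ is orthogonal. Check one pair: $(1,2,0,3)\cdot(2,-4,5,2)=2-8+0+6=0$.
• Norms: $\|(1,2,0,3)\|=\sqrt{1^2+2^2+0^2+3^2}=\sqrt{14}$, $\|(2,-4,5,2)\|=7$, $\|(4,1,0,-2)\|=\sqrt{21}$.
• Orthonormal basis:
$$\left\{\left(\tfrac{1}{\sqrt{14}},\tfrac{2}{\sqrt{14}},0,\tfrac{3}{\sqrt{14}}\right),\ \left(\tfrac27,-\tfrac47,\tfrac57,\tfrac27\right),\ \left(\tfrac{4}{\sqrt{21}},\tfrac{1}{\sqrt{21}},0,-\tfrac{2}{\sqrt{21}}\right)\right\}$$

QR-Decomposition (1/2)
$$A=\begin{bmatrix}1&4&8\\2&0&1\\0&5&5\\3&8&6\end{bmatrix}=[\mathbf{v}_1\ \mathbf{v}_2\ \mathbf{v}_3].$$
From Example 4, the orthonormal basis $\{\mathbf{q}_1,\mathbf{q}_2,\mathbf{q}_3\}$ is
$$\mathbf{q}_1=\left(\tfrac{1}{\sqrt{14}},\tfrac{2}{\sqrt{14}},0,\tfrac{3}{\sqrt{14}}\right),\ \mathbf{q}_2=\left(\tfrac27,-\tfrac47,\tfrac57,\tfrac27\right),\ \mathbf{q}_3=\left(\tfrac{4}{\sqrt{21}},\tfrac{1}{\sqrt{21}},0,-\tfrac{2}{\sqrt{21}}\right).$$
Then by Theorem 4.20:
$$\begin{aligned}\mathbf{v}_1&=(\mathbf{v}_1\cdot\mathbf{q}_1)\mathbf{q}_1+(\mathbf{v}_1\cdot\mathbf{q}_2)\mathbf{q}_2+(\mathbf{v}_1\cdot\mathbf{q}_3)\mathbf{q}_3=(\mathbf{v}_1\cdot\mathbf{q}_1)\mathbf{q}_1=\sqrt{14}\,\mathbf{q}_1\\\mathbf{v}_2&=(\mathbf{v}_2\cdot\mathbf{q}_1)\mathbf{q}_1+(\mathbf{v}_2\cdot\mathbf{q}_2)\mathbf{q}_2+(\mathbf{v}_2\cdot\mathbf{q}_3)\mathbf{q}_3=2\sqrt{14}\,\mathbf{q}_1+7\mathbf{q}_2\\\mathbf{v}_3&=(\mathbf{v}_3\cdot\mathbf{q}_1)\mathbf{q}_1+(\mathbf{v}_3\cdot\mathbf{q}_2)\mathbf{q}_2+(\mathbf{v}_3\cdot\mathbf{q}_3)\mathbf{q}_3=2\sqrt{14}\,\mathbf{q}_1+7\mathbf{q}_2+\sqrt{21}\,\mathbf{q}_3\end{aligned}$$

QR-Decomposition (2/2)
$$A=\begin{bmatrix}1&4&8\\2&0&1\\0&5&5\\3&8&6\end{bmatrix}=\begin{bmatrix}\frac{1}{\sqrt{14}}&\frac27&\frac{4}{\sqrt{21}}\\\frac{2}{\sqrt{14}}&-\frac47&\frac{1}{\sqrt{21}}\\0&\frac57&0\\\frac{3}{\sqrt{14}}&\frac27&-\frac{2}{\sqrt{21}}\end{bmatrix}\begin{bmatrix}\sqrt{14}&2\sqrt{14}&2\sqrt{14}\\0&7&7\\0&0&\sqrt{21}\end{bmatrix}=QR$$
Theorem: If $A\in\mathbb{R}^{m\times n}$ has linearly independent column vectors, then $A=QR$, where $Q$ is an $m\times n$ matrix with orthonormal column vectors and $R$ is an $n\times n$ invertible upper triangular matrix.
• One of the top 10 algorithms of the 20th century (eigenvalues are computed in Chapter 5 by the QR algorithm; check the course web site).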
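
Gram-Schmidt and the QR factorization above can be reproduced in a few lines. This Python sketch (our own `gram_schmidt` and `qr` helpers) runs Theorem 4.23 in exact fractions, so the orthogonal vectors match Example 4 exactly, then normalizes in floating point and forms $R$ with entries $R_{ij}=\mathbf{q}_i\cdot\mathbf{a}_j$. It is the classical textbook form; numerical libraries typically use more stable variants (modified Gram-Schmidt or Householder reflections).

```python
import math
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors):
    """Theorem 4.23: u_k = v_k - sum_j proj_{u_j} v_k (orthogonal, not normalized)."""
    us = []
    for v in vectors:
        u = [Fraction(x) for x in v]
        for prev in us:
            coef = dot(v, prev) / dot(prev, prev)   # proj_{prev} v = coef * prev
            u = [a - coef * b for a, b in zip(u, prev)]
        us.append(u)
    return us

def qr(columns):
    """Return (Q as a list of columns q_i, R) with q_i = u_i / ||u_i|| and R[i][j] = q_i . a_j."""
    us = gram_schmidt(columns)
    qs = [[float(x) / math.sqrt(dot(u, u)) for x in u] for u in us]
    R = [[dot(q, a) for a in columns] for q in qs]   # upper triangular up to rounding
    return qs, R

small = gram_schmidt([(1, 0, 0), (2, 1, 0), (3, 2, 1)])   # (1,0,0), (0,1,0), (0,0,1)

cols = [(1, 2, 0, 3), (4, 0, 5, 8), (8, 1, 5, 6)]          # columns of A in Example 4
us = gram_schmidt(cols)                                   # (1,2,0,3), (2,-4,5,2), (4,1,0,-2)
Q, R = qr(cols)
# R is approximately [[sqrt(14), 2 sqrt(14), 2 sqrt(14)], [0, 7, 7], [0, 0, sqrt(21)]]
for row in R:
    print([round(x, 4) for x in row])
```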

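Projection of a Vector onto a Subspace
• Let $W$ be a subspace of $\mathbb{R}^n$.
• Let $\{\mathbf{u}_1,\dots,\mathbf{u}_m\}$ be an orthonormal basis for $W$.
• If $\mathbf{v}$ is a vector in $\mathbb{R}^n$, the projection of $\mathbf{v}$ onto $W$ is denoted $\operatorname{proj}_W\mathbf{v}$ and is defined by
$$\operatorname{proj}_W\mathbf{v}=(\mathbf{v}\cdot\mathbf{u}_1)\mathbf{u}_1+(\mathbf{v}\cdot\mathbf{u}_2)\mathbf{u}_2+\dots+(\mathbf{v}\cdot\mathbf{u}_m)\mathbf{u}_m.$$

Definition: A vector $\mathbf{v}$ is orthogonal to a subspace $W$ if $\mathbf{v}$ is orthogonal to every vector $\mathbf{u}$ in $W$.
— With a basis: $\mathbf{v}\cdot\mathbf{u}=\mathbf{v}\cdot(c_1\mathbf{u}_1+\dots+c_m\mathbf{u}_m)=c_1\,\mathbf{v}\cdot\mathbf{u}_1+\dots+c_m\,\mathbf{v}\cdot\mathbf{u}_m$, so it suffices to check the basis vectors.
• Theorem 4.24: Let $W$ be a subspace of $\mathbb{R}^n$. Every vector $\mathbf{v}$ in $\mathbb{R}^n$ can be written uniquely in the form $\mathbf{v}=\mathbf{w}+\mathbf{w}_\perp$, where $\mathbf{w}$ is in $W$ and $\mathbf{w}_\perp$ is orthogonal to $W$. The vectors $\mathbf{w}$ and $\mathbf{w}_\perp$ are
$$\mathbf{w}=\operatorname{proj}_W\mathbf{v},\qquad\mathbf{w}_\perp=\mathbf{v}-\operatorname{proj}_W\mathbf{v}.$$

Example 5
• Consider the vector $\mathbf{v}=(3,2,6)$ in $\mathbb{R}^3$.
• $W$: the subspace of $\mathbb{R}^3$ of vectors of the form $(a,b,b)$.
• Decompose $\mathbf{v}$ into the sum of a vector that lies in $W$ and a vector orthogonal to $W$.
Solution
• We need an orthonormal basis for $W$.
• Write an arbitrary vector of $W$ as $(a,b,b)=a(1,0,0)+b(0,1,1)$.
• The set $\{(1,0,0),(0,1,1)\}$ spans $W$ and is linearly independent, so it is a basis for $W$.
• It is orthogonal; normalize to get an orthonormal basis:
$$\mathbf{u}_1=(1,0,0),\qquad\mathbf{u}_2=\left(0,\tfrac{1}{\sqrt2},\tfrac{1}{\sqrt2}\right).$$
Then
$$\begin{aligned}\mathbf{w}=\operatorname{proj}_W\mathbf{v}&=(\mathbf{v}\cdot\mathbf{u}_1)\mathbf{u}_1+(\mathbf{v}\cdot\mathbf{u}_2)\mathbf{u}_2\\&=\big((3,2,6)\cdot(1,0,0)\big)(1,0,0)+\left((3,2,6)\cdot\left(0,\tfrac{1}{\sqrt2},\tfrac{1}{\sqrt2}\right)\right)\left(0,\tfrac{1}{\sqrt2},\tfrac{1}{\sqrt2}\right)\\&=(3,0,0)+(0,4,4)=(3,4,4),\end{aligned}$$
$$\mathbf{w}_\perp=\mathbf{v}-\operatorname{proj}_W\mathbf{v}=(3,2,6)-(3,4,4)=(0,-2,2).$$
Orthogonal to $W$: $(0,-2,2)\cdot(1,0,0)=0$ and $(0,-2,2)\cdot(0,1,1)=0$.
• Thus the decomposition of $\mathbf{v}$ is $(3,2,6)=(3,4,4)+(0,-2,2)$.
• Another example: $\mathbf{t}=(3,2,2)\in W$:
$$\operatorname{proj}_W\mathbf{t}=\big((3,2,2)\cdot(1,0,0)\big)(1,0,0)+\left((3,2,2)\cdot\left(0,\tfrac{1}{\sqrt2},\tfrac{1}{\sqrt2}\right)\right)\left(0,\tfrac{1}{\sqrt2},\tfrac{1}{\sqrt2}\right)=(3,0,0)+(0,2,2)=(3,2,2)=\mathbf{t}.$$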
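
For an orthogonal (not necessarily unit) basis $\{\mathbf{w}_1,\dots,\mathbf{w}_m\}$ of $W$, the projection can be written $\sum_i \frac{\mathbf{v}\cdot\mathbf{w}_i}{\mathbf{w}_i\cdot\mathbf{w}_i}\mathbf{w}_i$, which equals the orthonormal formula above after normalizing and avoids the $\sqrt2$ factors. The Python sketch below (the helper `proj_W` is ours) uses that form to redo Example 5 and, with the same helper, the distance computation of Example 6.

```python
import math
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def proj_W(v, orth_basis):
    """Projection onto W = span(orth_basis); the basis must be orthogonal (not necessarily unit)."""
    result = [Fraction(0)] * len(v)
    for w in orth_basis:
        coef = Fraction(dot(v, w), dot(w, w))
        result = [r + coef * x for r, x in zip(result, w)]
    return tuple(result)

W_basis = [(1, 0, 0), (0, 1, 1)]   # orthogonal basis of W = {(a, b, b)}

v = (3, 2, 6)
w = proj_W(v, W_basis)                          # equals (3, 4, 4)
w_perp = tuple(a - b for a, b in zip(v, w))     # equals (0, -2, 2)

# Example 6: distance from x = (4, 1, -7) to W is ||x - proj_W x||.
x = (4, 1, -7)
diff = tuple(a - b for a, b in zip(x, proj_W(x, W_basis)))
dist = math.sqrt(dot(diff, diff))
print(dist)   # about 5.657, i.e. 4 * sqrt(2)
```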

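Example 6
Find the distance of the point $\mathbf{x}=(4,1,-7)$ of $\mathbb{R}^3$ from the subspace $W$ consisting of all vectors of the form $(a,b,b)$.
Solution: From Example 5, $\mathbf{u}_1=(1,0,0)$, $\mathbf{u}_2=\left(0,\tfrac{1}{\sqrt2},\tfrac{1}{\sqrt2}\right)$ is an orthonormal basis for $W$.
$$\operatorname{proj}_W\mathbf{x}=(\mathbf{x}\cdot\mathbf{u}_1)\mathbf{u}_1+(\mathbf{x}\cdot\mathbf{u}_2)\mathbf{u}_2=(4,0,0)+\left(-\tfrac{6}{\sqrt2}\right)\left(0,\tfrac{1}{\sqrt2},\tfrac{1}{\sqrt2}\right)=(4,-3,-3).$$
The distance from $\mathbf{x}$ to $W$ is
$$\|\mathbf{x}-\operatorname{proj}_W\mathbf{x}\|=\|(0,4,-4)\|=\sqrt{32}=4\sqrt2.$$

6.4 Least-Squares Curves (Gauss 1797, Legendre 1806)
• Given a set of data points $(x_1,y_1),\dots,(x_n,y_n)$, find a curve $f(x)=a_0+a_1x+\dots+a_{m-1}x^{m-1}$ that minimizes the sum of squares of the errors. Assume $n\ge m$ (explained later).
$$\min_{\mathbf{a}}f(\mathbf{a})=(a_0+a_1x_1+\dots+a_{m-1}x_1^{m-1}-y_1)^2+\dots+(a_0+a_1x_n+\dots+a_{m-1}x_n^{m-1}-y_n)^2=(A\mathbf{a}-\mathbf{y})^T(A\mathbf{a}-\mathbf{y}),$$
where
$$A=\begin{bmatrix}1&x_1&\cdots&x_1^{m-1}\\\vdots&\vdots&&\vdots\\1&x_n&\cdots&x_n^{m-1}\end{bmatrix},\quad\mathbf{a}=\begin{bmatrix}a_0\\\vdots\\a_{m-1}\end{bmatrix},\quad\mathbf{y}=\begin{bmatrix}y_1\\\vdots\\y_n\end{bmatrix}.$$
$$(A\mathbf{a}-\mathbf{y})^T(A\mathbf{a}-\mathbf{y})=\mathbf{a}^TA^TA\mathbf{a}-\mathbf{a}^TA^T\mathbf{y}-\mathbf{y}^TA\mathbf{a}+\mathbf{y}^T\mathbf{y}$$
— $A$ and $\mathbf{y}$ are given; $\mathbf{a}$ is unknown.
$$\nabla f(\mathbf{a})=2A^TA\mathbf{a}-2A^T\mathbf{y}=\mathbf{0}\quad\text{for a critical point.}$$

Least-Squares Curves
(Figure: $d$ is the vertical difference between a data point and the curve; $(a_0+a_1x_1-y_1)^2=(y_1-a_0-a_1x_1)^2$.)

Minimization of Least-Squares Curves by Calculus
For simplified notation, assume 2 data points $(x_1,y_1)$, $(x_2,y_2)$ and a linear equation $y=a_0+a_1x$. With $M=A^TA$ (so $M^T=M$), $\mathbf{N}=A^T\mathbf{y}$, and $\mathbf{N}^T=(A^T\mathbf{y})^T=\mathbf{y}^TA$:
$$\begin{aligned}f(\mathbf{a})&=\mathbf{a}^TA^TA\mathbf{a}-\mathbf{a}^TA^T\mathbf{y}-\mathbf{y}^TA\mathbf{a}+\mathbf{y}^T\mathbf{y}=\mathbf{a}^TM\mathbf{a}-\mathbf{a}^T\mathbf{N}-\mathbf{N}^T\mathbf{a}+\mathbf{y}^T\mathbf{y}\\&=[a_0\ a_1]\begin{bmatrix}M_{11}&M_{12}\\M_{12}&M_{22}\end{bmatrix}\begin{bmatrix}a_0\\a_1\end{bmatrix}-[a_0\ a_1]\begin{bmatrix}N_1\\N_2\end{bmatrix}-[N_1\ N_2]\begin{bmatrix}a_0\\a_1\end{bmatrix}+\mathbf{y}^T\mathbf{y}\\&=M_{11}a_0^2+2M_{12}a_0a_1+M_{22}a_1^2-2N_1a_0-2N_2a_1+\mathbf{y}^T\mathbf{y}\end{aligned}$$
$$\nabla f(\mathbf{a})=\begin{bmatrix}2M_{11}a_0+2M_{12}a_1-2N_1\\2M_{12}a_0+2M_{22}a_1-2N_2\end{bmatrix}=2\begin{bmatrix}M_{11}&M_{12}\\M_{12}&M_{22}\end{bmatrix}\begin{bmatrix}a_0\\a_1\end{bmatrix}-2\begin{bmatrix}N_1\\N_2\end{bmatrix}=2M\mathbf{a}-2\mathbf{N}=2A^TA\mathbf{a}-2A^T\mathbf{y}.$$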

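Full Column Rank and Inverse
• We want to solve $A^TA\mathbf{a}=A^T\mathbf{y}$ for $\mathbf{a}$.
• Theorem: Consider $A\in\mathbb{R}^{n\times m}$ with $n\ge m$. If $\operatorname{rank}(A)=m$, then $A^TA\in\mathbb{R}^{m\times m}$ is invertible.
— Note that $\operatorname{rank}(A)\le\min(m,n)$.
— If $n<m$, then $\operatorname{rank}(A)\le\min(m,n)=n<m$, so the hypothesis cannot hold.
— If $\operatorname{rank}(A)=m$, $A$ is said to have full column rank.
— Proof: We want to prove that the columns of $A^TA$ are linearly independent, that is, $(A^TA)\mathbf{x}=\mathbf{0}\Rightarrow\mathbf{x}=\mathbf{0}$. (Then the columns form a basis (Thm. 4.11), so $A^TA$ is invertible (Thm. 4.18).)
— Assume $(A^TA)\mathbf{x}=\mathbf{0}$. Then $\mathbf{x}^T(A^TA)\mathbf{x}=0$.
— But $\mathbf{x}^T(A^TA)\mathbf{x}=(A\mathbf{x})^T(A\mathbf{x})=\|A\mathbf{x}\|^2$, so $A\mathbf{x}=\mathbf{0}$.
— We have $A\mathbf{x}=x_1A_1+\dots+x_mA_m=\mathbf{0}$, so every $x_i=0$ because $A$ has full column rank by assumption.

Solution for Least-Squares Curves
• If the matrix $A$ has full column rank, then
$$(A^TA)\mathbf{a}=A^T\mathbf{y}\;\Longrightarrow\;\mathbf{a}=(A^TA)^{-1}A^T\mathbf{y}.$$
— $(A^TA)^{-1}A^T$: the pseudoinverse, $\operatorname{pinv}(A)$.
— $[(A^TA)^{-1}A^T]A=(A^TA)^{-1}A^TA=I$.
— If $A$ is square and invertible, $(A^TA)^{-1}A^T=A^{-1}(A^T)^{-1}A^T=A^{-1}$.
— Residual (approximation error): $\|A\mathbf{a}-\mathbf{y}\|=\|A(A^TA)^{-1}A^T\mathbf{y}-\mathbf{y}\|$.
• Example: $A=\begin{bmatrix}1&2\\1&-3\\2&4\end{bmatrix}$, $A^TA=\begin{bmatrix}6&7\\7&29\end{bmatrix}$, and (Thm 3.5)
$$\operatorname{pinv}(A)=(A^TA)^{-1}A^T=\frac{1}{125}\begin{bmatrix}29&-7\\-7&6\end{bmatrix}\begin{bmatrix}1&1&2\\2&-3&4\end{bmatrix}=\frac{1}{25}\begin{bmatrix}3&10&6\\1&-5&2\end{bmatrix}.$$

Example 4 (1/2)
Find the least-squares parabola for the data points $(1,7)$, $(2,2)$, $(3,1)$, $(4,3)$.
(1) Equation of the parabola: $y=a+bx+cx^2$.
$$\begin{aligned}a+b+c&=7\\a+2b+4c&=2\\a+3b+9c&=1\\a+4b+16c&=3\end{aligned}\qquad A=\begin{bmatrix}1&1&1\\1&2&4\\1&3&9\\1&4&16\end{bmatrix},\quad\mathbf{y}=\begin{bmatrix}7\\2\\1\\3\end{bmatrix}.$$
• The matrix $A$ has full column rank if the $x_i$ are distinct ($x_i\neq x_j$): the top 3 rows form a Vandermonde matrix (Sec. 3.4),
$$\begin{vmatrix}1&1&1\\1&2&4\\1&3&9\end{vmatrix}=(2-1)(3-1)(3-2)=2\neq 0,$$
so it is nonsingular and the row rank is 3; since row rank $=$ column rank, $A$ has column rank 3 (full).

Example 4 (2/2)
• The least-squares solution is
$$[(A^tA)^{-1}A^t]\,\mathbf{y}=\begin{bmatrix}15.25\\-10.05\\1.75\end{bmatrix}.$$
• Least-squares parabola: $y=15.25-10.05x+1.75x^2$.
• Squared residual: $\|\mathbf{y}-A(A^TA)^{-1}A^T\mathbf{y}\|^2=0.05$.
(2) Consider instead the linear equation $y=a+bx$: $a+b=7$, $a+2b=2$, $a+3b=1$, $a+4b=3$, so
$$A=\begin{bmatrix}1&1\\1&2\\1&3\\1&4\end{bmatrix},\quad\mathbf{y}=\begin{bmatrix}7\\2\\1\\3\end{bmatrix},\quad[(A^tA)^{-1}A^t]\,\mathbf{y}=\begin{bmatrix}6.5\\-1.3\end{bmatrix},$$
that is, $y=6.5-1.3x$, with squared residual $12.3$.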
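
Example 4 can be reproduced by forming and solving the normal equations $A^TA\mathbf{a}=A^T\mathbf{y}$ directly. The Python sketch below (our own `solve` and `least_squares_poly` helpers, exact `fractions.Fraction` arithmetic) assumes the $x_i$ are distinct so that $A^TA$ is invertible, as argued above; it returns the coefficients together with the squared residual, so both the parabola and the straight-line fit can be compared.

```python
from fractions import Fraction

def solve(M, b):
    """Solve the square system M a = b by Gauss-Jordan elimination (M assumed invertible)."""
    n = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(bi)] for row, bi in zip(M, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n] for row in aug]

def least_squares_poly(xs, ys, degree):
    """Fit y = a0 + a1 x + ... + a_degree x^degree via A^T A a = A^T y.

    Returns the coefficients and the squared residual ||A a - y||^2.
    """
    A = [[x ** k for k in range(degree + 1)] for x in xs]
    cols = range(degree + 1)
    AtA = [[sum(row[i] * row[j] for row in A) for j in cols] for i in cols]
    Aty = [sum(row[i] * y for row, y in zip(A, ys)) for i in cols]
    a = solve(AtA, Aty)
    residual_sq = sum((sum(c * x ** k for k, c in enumerate(a)) - y) ** 2
                      for x, y in zip(xs, ys))
    return a, residual_sq

xs, ys = [1, 2, 3, 4], [7, 2, 1, 3]
parabola, r2 = least_squares_poly(xs, ys, 2)
line, r1 = least_squares_poly(xs, ys, 1)
print([float(c) for c in parabola], float(r2))  # [15.25, -10.05, 1.75] 0.05
print([float(c) for c in line], float(r1))      # [6.5, -1.3] 12.3
```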

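Example 2 (1/2)
$$\begin{aligned}x+y&=6\\-x+y&=3\\2x+3y&=9\end{aligned}\qquad\begin{bmatrix}1&1&6\\-1&1&3\\2&3&9\end{bmatrix}\approx\begin{bmatrix}1&0&1.5\\0&1&4.5\\0&0&-7.5\end{bmatrix}$$
• Slide 59: $\mathbf{b}$ is not in the column space of $A$, so there is no solution.
• This is an overdetermined problem.
• Try to find a least-squares solution (another application):
$$\min_{x,y}\,(x+y-6)^2+(-x+y-3)^2+(2x+3y-9)^2,\qquad\begin{bmatrix}1&1\\-1&1\\2&3\end{bmatrix}\begin{bmatrix}x\\y\end{bmatrix}\approx\begin{bmatrix}6\\3\\9\end{bmatrix}.$$

Example 2 (2/2)
$A=\begin{bmatrix}1&1\\-1&1\\2&3\end{bmatrix}$, $\mathbf{y}=\begin{bmatrix}6\\3\\9\end{bmatrix}$.
• $\operatorname{rank}(A)=2$ (full column rank), so the least-squares solution is
$$\operatorname{pinv}(A)\,\mathbf{y}=\frac{1}{30}\begin{bmatrix}11&-6\\-6&6\end{bmatrix}\begin{bmatrix}1&-1&2\\1&1&3\end{bmatrix}\begin{bmatrix}6\\3\\9\end{bmatrix}=\frac{1}{30}\begin{bmatrix}5&-17&4\\0&12&6\end{bmatrix}\begin{bmatrix}6\\3\\9\end{bmatrix}=\begin{bmatrix}1/2\\3\end{bmatrix}.$$
• (Least-squares) error: $(0.5+3-6)^2+(-0.5+3-3)^2+(1+9-9)^2=7.5$.
• Compare with other solutions:
— $(2,3)$: error $(2+3-6)^2+(-2+3-3)^2+(4+9-9)^2=21$.
— $(0,3)$: error $(0+3-6)^2+(0+3-3)^2+(0+9-9)^2=9$.

Geometric Interpretation
• $\mathbf{y}$ not in the column space of $A$ $\Rightarrow$ no solution. For example, $A=\begin{bmatrix}1&0\\0&1\\0&0\end{bmatrix}$, $\mathbf{y}=\begin{bmatrix}2\\3\\4\end{bmatrix}$.
• The least-squares solution is $\mathbf{a}=\operatorname{pinv}(A)\,\mathbf{y}=\begin{bmatrix}2\\3\end{bmatrix}$, and $A\mathbf{a}=\begin{bmatrix}2\\3\\0\end{bmatrix}=2\begin{bmatrix}1\\0\\0\end{bmatrix}+3\begin{bmatrix}0\\1\\0\end{bmatrix}$ is the closest we can get to a true solution.
• $A\mathbf{a}$ is the projection of $\mathbf{y}$ onto the column space: $\mathbf{y}=\begin{bmatrix}2\\3\\0\end{bmatrix}+\begin{bmatrix}0\\0\\4\end{bmatrix}$, where $\begin{bmatrix}0\\0\\4\end{bmatrix}$ is orthogonal to $\begin{bmatrix}1\\0\\0\end{bmatrix}$ and $\begin{bmatrix}0\\1\\0\end{bmatrix}$.

Example 7
• Find the projection matrix for the plane $x-2y-z=0$ in $\mathbb{R}^3$.
• Find the projection of the vector $(1,2,3)$ onto this plane.
Solution: Let $W$ be the subspace of vectors that lie in this plane: $W=\{(x,y,z)\}=\{(2y+z,\,y,\,z)\}=\{y(2,1,0)+z(1,0,1)\}$, the space generated by the vectors $(2,1,0)$ and $(1,0,1)$.
• Let $A$ be the matrix having these vectors as columns: $A=\begin{bmatrix}2&1\\1&0\\0&1\end{bmatrix}$.
• (1) Projection matrix: $P=A\,\operatorname{pinv}(A)=A(A^TA)^{-1}A^T=\dfrac16\begin{bmatrix}5&2&1\\2&2&-2\\1&-2&5\end{bmatrix}$.
• (2) Projection of $(1,2,3)$: $P\begin{bmatrix}1\\2\\3\end{bmatrix}=\dfrac16\begin{bmatrix}12\\0\\12\end{bmatrix}=\begin{bmatrix}2\\0\\2\end{bmatrix}$.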
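
Both computations on this page use the pseudoinverse of a matrix with two independent columns, so a $2\times 2$ inverse is all that is needed. The Python sketch below (our own `matmul`, `transpose`, `inv2` and `pinv` helpers, exact fractions) reproduces the least-squares solution of Example 2 and the projection matrix of Example 7, and checks that $P$ is idempotent ($P^2=P$), as a projection must be.

```python
from fractions import Fraction

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def transpose(X):
    return [list(col) for col in zip(*X)]

def inv2(M):
    """Inverse of a 2x2 matrix: [[d, -b], [-c, a]] / (ad - bc)."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

def pinv(A):
    """(A^T A)^{-1} A^T for an n x 2 matrix A of full column rank."""
    At = transpose(A)
    return matmul(inv2(matmul(At, A)), At)

# Example 2: least-squares solution of the overdetermined system.
A2 = [[1, 1], [-1, 1], [2, 3]]
print(matmul(pinv(A2), [[6], [3], [9]]))    # [[Fraction(1, 2)], [Fraction(3, 1)]]

# Example 7: projection matrix P = A pinv(A) for the plane x - 2y - z = 0.
A7 = [[2, 1], [1, 0], [0, 1]]
P = matmul(A7, pinv(A7))
print(matmul(P, P) == P)                    # True
print(matmul(P, [[1], [2], [3]]))           # [[Fraction(2, 1)], [Fraction(0, 1)], [Fraction(2, 1)]]
```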

Face Recognition
Ref: L. Eldén, Matrix Methods in Data Mining and Pattern Recognition, SIAM.
• Matrix $A$: faces stored in the database.
• Column vector $\mathbf{y}$: image of an unknown person.
— Usually, $\mathbf{y}$ is not in the column space of $A$.
• Solve the least-squares problem $\min_{\mathbf{x}}\|A\mathbf{x}-\mathbf{y}\|$.
• If $\|\mathbf{x}-\mathbf{h}_p\|<$ tolerance, then classify the image as person $p$.
— Otherwise, the person is not in the database.

6.3 Fourier Approximations
• History: introduced by Fourier in 1807 for the heat equation.
• Let $f$ be a function in $C[-\pi,\pi]$ ($C$: continuous) with inner product $\langle f,g\rangle=\int_{-\pi}^{\pi}f(x)g(x)\,dx$.
• Orthonormal basis for $T[-\pi,\pi]$ ($T$: trigonometric):
$$\{g_0,\dots,g_{2n}\}=\left\{\tfrac{1}{\sqrt{2\pi}},\ \tfrac{1}{\sqrt\pi}\cos x,\ \tfrac{1}{\sqrt\pi}\sin x,\ \dots,\ \tfrac{1}{\sqrt\pi}\cos nx,\ \tfrac{1}{\sqrt\pi}\sin nx\right\}$$
• Least-squares approximation $g$ of $f$:
$$g(x)=\operatorname{proj}_T f=\langle f,g_0\rangle g_0+\dots+\langle f,g_{2n}\rangle g_{2n}.$$
• As $n$ increases, this approximation becomes increasingly better in the sense that $\|f-g\|$ gets smaller.
• Applications: image, video, and audio signal processing.

Example 2
Find the fourth-order Fourier approximation to $f(x)=x$ over the interval $[-\pi,\pi]$.
Solution: Writing $g(x)=a_0+\sum_{k=1}^{n}(a_k\cos kx+b_k\sin kx)$,
$$a_0=\frac{1}{2\pi}\int_{-\pi}^{\pi}f(x)\,dx=\frac{1}{2\pi}\int_{-\pi}^{\pi}x\,dx=\frac{1}{2\pi}\left[\frac{x^2}{2}\right]_{-\pi}^{\pi}=0,$$
$$a_k=\frac1\pi\int_{-\pi}^{\pi}f(x)\cos kx\,dx=\frac1\pi\int_{-\pi}^{\pi}x\cos kx\,dx=\frac1\pi\left[\frac{x}{k}\sin kx+\frac{1}{k^2}\cos kx\right]_{-\pi}^{\pi}=0,$$
$$b_k=\frac1\pi\int_{-\pi}^{\pi}f(x)\sin kx\,dx=\frac1\pi\int_{-\pi}^{\pi}x\sin kx\,dx=\frac{2(-1)^{k+1}}{k}.$$
• The Fourier approximation of $f$ is $g(x)=\sum_{k=1}^{n}\dfrac{2(-1)^{k+1}}{k}\sin kx$.
• Taking $n=4$, we get the fourth-order approximation
$$g(x)=2\left(\sin x-\frac12\sin 2x+\frac13\sin 3x-\frac14\sin 4x\right).$$

Example (figure): Fourth-order approximation $g(x)=\dfrac{8}{\pi}\left(\sin x+\dfrac13\sin 3x+\dfrac15\sin 5x+\dfrac17\sin 7x\right)$.

Computer Graphics
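
The coefficient formulas in Example 2 can be checked numerically. This Python sketch (the helpers `simpson` and `fourier_coeffs` are ours, and composite Simpson's rule with 2000 subintervals is our choice of quadrature, not something the source prescribes) integrates $f(x)=x$ against $1$, $\cos kx$ and $\sin kx$ over $[-\pi,\pi]$ and compares the results with $a_0=a_k=0$ and $b_k=2(-1)^{k+1}/k$.

```python
import math

def simpson(f, a, b, n=2000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def fourier_coeffs(f, n):
    """a0 = (1/2pi) int f, a_k = (1/pi) int f cos kx, b_k = (1/pi) int f sin kx on [-pi, pi]."""
    a0 = simpson(f, -math.pi, math.pi) / (2 * math.pi)
    a = [simpson(lambda x, k=k: f(x) * math.cos(k * x), -math.pi, math.pi) / math.pi
         for k in range(1, n + 1)]
    b = [simpson(lambda x, k=k: f(x) * math.sin(k * x), -math.pi, math.pi) / math.pi
         for k in range(1, n + 1)]
    return a0, a, b

a0, a, b = fourier_coeffs(lambda x: x, 4)
for k in range(1, 5):
    # numerical b_k next to the closed form 2(-1)^(k+1)/k
    print(k, round(b[k - 1], 6), 2 * (-1) ** (k + 1) / k)
```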

4.7 Kernel, Range, and the Rank/Nullity Theorem
• Definition: Let $U$ and $V$ be vector spaces. Let $\mathbf{u}$ and $\mathbf{v}$ be vectors in $U$ and let $c$ be a scalar. A transformation $T:U\to V$ is said to be linear if
$$T(\mathbf{u}+\mathbf{v})=T(\mathbf{u})+T(\mathbf{v}),\qquad T(c\mathbf{u})=cT(\mathbf{u}).$$
• Example 2: Let $P_n$ be the vector space of real polynomial functions of degree $\le n$. Prove that $T:P_2\to P_1$ defined by $T(ax^2+bx+c)=(a+b)x+c$ is linear.
— Proof: Let $ax^2+bx+c,\ px^2+qx+r\in P_2$.
— (1) Vector addition: $T\big((ax^2+bx+c)+(px^2+qx+r)\big)=(a+p+b+q)x+(c+r)=\big[(a+b)x+c\big]+\big[(p+q)x+r\big]=T(ax^2+bx+c)+T(px^2+qx+r)$.
— (2) Scalar multiplication: let $k$ be any scalar. $T\big(k(ax^2+bx+c)\big)=(ka+kb)x+kc=k\big((a+b)x+c\big)=kT(ax^2+bx+c)$.

Example 3
• $D=\dfrac{d}{dx}$ on $P_n$: $Dx^n=nx^{n-1}$; for example, $D(4x^3+3x^2+2x+1)=12x^2+6x+2$.
• $D$ is linear: for $f,g\in P_n$, $D(f+g)=Df+Dg$ and $D(cf)=c\,Df$.
• Others: integration, $\int(f+g)\,dx=\int f\,dx+\int g\,dx$ and $\int cf\,dx=c\int f\,dx$; the mean value (expectation) of a random variable, $E[f+g]=E[f]+E[g]$ and $E[cf]=cE[f]$.

Definition: Let $T:U\to V$ be a linear transformation.
• The kernel of $T$ is $\ker(T)=\{\mathbf{u}\in U\mid T(\mathbf{u})=\mathbf{0}_V\}$.
• The range of $T$ is $\operatorname{range}(T)=\{T(\mathbf{u}):\mathbf{u}\in U\}$.

Theorem 4.26: Let $T:U\to V$ be a linear transformation.
(a) The kernel of $T$ is a subspace of $U$.
(b) The range of $T$ is a subspace of $V$.
Proof:
(a) If $\mathbf{u}_1,\mathbf{u}_2\in\ker(T)$, that is, $T(\mathbf{u}_1)=T(\mathbf{u}_2)=\mathbf{0}$, then
(1) $T(\mathbf{u}_1+\mathbf{u}_2)=T(\mathbf{u}_1)+T(\mathbf{u}_2)=\mathbf{0}+\mathbf{0}=\mathbf{0}$,
(2) $T(c\mathbf{u}_1)=cT(\mathbf{u}_1)=c\mathbf{0}=\mathbf{0}$.
(b) If $\mathbf{v}_1,\mathbf{v}_2\in\operatorname{range}(T)$, that is, there exist $\mathbf{u}_1,\mathbf{u}_2$ such that $T(\mathbf{u}_1)=\mathbf{v}_1$ and $T(\mathbf{u}_2)=\mathbf{v}_2$, then
(1) $\mathbf{v}_1+\mathbf{v}_2=T(\mathbf{u}_1)+T(\mathbf{u}_2)=T(\mathbf{u}_1+\mathbf{u}_2)\in\operatorname{range}(T)$,
(2) $c\mathbf{v}_1=cT(\mathbf{u}_1)=T(c\mathbf{u}_1)\in\operatorname{range}(T)$.
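
Representing a polynomial $ax^2+bx+c$ by its coefficient triple $(a,b,c)$ turns the map $T$ of Example 2 into a function on tuples. The Python sketch below (this encoding and the helper names are our own) spot-checks both linearity conditions on random inputs; random tests can only find counterexamples, while the proof above is what establishes linearity.

```python
import random

def T(p):
    """p = (a, b, c) encodes a x^2 + b x + c; the result (a + b, c) encodes (a + b) x + c."""
    a, b, c = p
    return (a + b, c)

def add(p, q):
    return tuple(x + y for x, y in zip(p, q))

def scale(k, p):
    return tuple(k * x for x in p)

random.seed(0)
for _ in range(100):
    p = tuple(random.randint(-9, 9) for _ in range(3))
    q = tuple(random.randint(-9, 9) for _ in range(3))
    k = random.randint(-9, 9)
    assert T(add(p, q)) == add(T(p), T(q))      # T(u + v) = T(u) + T(v)
    assert T(scale(k, p)) == scale(k, T(p))     # T(k u) = k T(u)
print("T passed both linearity checks on 100 random inputs")
```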

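Example 4: $T(x,y,z)=(x,y,0)$
• Kernel:
— $T(x,y,z)=(x,y,0)$, and $T(x,y,z)=(0,0,0)$ gives $x=0$, $y=0$.
— Thus $\ker(T)=\{(0,0,z)\}$, all vectors on the $z$-axis.
— Check: $T(0,0,z)=(0,0,0)$.
• $\operatorname{range}(T)=\{(x,y,0)\}$, all vectors that lie in the $xy$-plane.

Theorem 4.27
The range of $T:\mathbb{R}^n\to\mathbb{R}^m$ defined by $T(\mathbf{u})=A\mathbf{u}$ is spanned by the column vectors of $A$. (The dimension of the column space is the rank.)
Proof: Let $\mathbf{v}$ be a vector in the range, so there is a $\mathbf{u}$ such that $T(\mathbf{u})=\mathbf{v}$. Express $\mathbf{u}$ in terms of the standard basis: $\mathbf{u}=u_1\mathbf{e}_1+\dots+u_n\mathbf{e}_n$.
• Thus $\mathbf{v}=T(u_1\mathbf{e}_1+\dots+u_n\mathbf{e}_n)=u_1T(\mathbf{e}_1)+\dots+u_nT(\mathbf{e}_n)$, where
$$T(\mathbf{e}_1)=\begin{bmatrix}a_{11}&a_{12}&\cdots&a_{1n}\\a_{21}&a_{22}&\cdots&a_{2n}\\\vdots&\vdots&&\vdots\\a_{m1}&a_{m2}&\cdots&a_{mn}\end{bmatrix}\begin{bmatrix}1\\0\\\vdots\\0\end{bmatrix}=\begin{bmatrix}a_{11}\\a_{21}\\\vdots\\a_{m1}\end{bmatrix},\quad\dots,\quad T(\mathbf{e}_n)=A\begin{bmatrix}0\\\vdots\\0\\1\end{bmatrix}=\begin{bmatrix}a_{1n}\\a_{2n}\\\vdots\\a_{mn}\end{bmatrix}.$$
• The column vectors of $A$, namely $T(\mathbf{e}_1),\dots,T(\mathbf{e}_n)$, span the range of $T$.

Example 5
$A=\begin{bmatrix}1&2&3\\0&-1&1\\1&1&4\end{bmatrix}$ is a $3\times 3$ matrix, so $A$ defines a linear operator $T:\mathbb{R}^3\to\mathbb{R}^3$, $T(\mathbf{x})=A\mathbf{x}$.
• Kernel: all vectors $\mathbf{x}=(x_1,x_2,x_3)$ in $\mathbb{R}^3$ such that $T(\mathbf{x})=\mathbf{0}$:
$$\begin{bmatrix}1&2&3\\0&-1&1\\1&1&4\end{bmatrix}\begin{bmatrix}x_1\\x_2\\x_3\end{bmatrix}=\begin{bmatrix}0\\0\\0\end{bmatrix}\;\Longrightarrow\;\mathbf{x}=\begin{bmatrix}-5r\\r\\r\end{bmatrix}.$$
$\ker(T)$ is a one-dimensional subspace of $\mathbb{R}^3$ with basis $(-5,1,1)^t$.
• Range: spanned by the column vectors of $A$, i.e., the row vectors of $A^t$:
$$A^t=\begin{bmatrix}1&0&1\\2&-1&1\\3&1&4\end{bmatrix}\approx\begin{bmatrix}1&0&1\\0&-1&-1\\0&1&1\end{bmatrix}\approx\begin{bmatrix}1&0&1\\0&1&1\\0&1&1\end{bmatrix}\approx\begin{bmatrix}1&0&1\\0&1&1\\0&0&0\end{bmatrix}$$
• The basis vectors $(1,0,1)^t$ and $(0,1,1)^t$ span $\operatorname{range}(T)$. Thus $\operatorname{range}(T)=\{a(1,0,1)^t+b(0,1,1)^t\}=\{(a,b,a+b)^t\}$.

Theorem 4.28
Let $T:U\to V$ be a linear transformation. Then
$$\dim\ker(T)+\dim\operatorname{range}(T)=\dim\operatorname{domain}(T).$$
• $\dim\ker(T)$ is called the nullity of $T$. Another way to state this rank/nullity theorem: $\operatorname{nullity}(T)+\operatorname{rank}(T)=\dim\operatorname{domain}(T)$.
Example: $A=\begin{bmatrix}1&0&0&0\\0&1&0&0\\0&0&1&0\end{bmatrix}$, $T(\mathbf{x})=A\mathbf{x}$.
Solution
• The matrix $A$ is in reduced echelon form.
• It has three nonzero rows (equivalently, its first three column vectors are linearly independent), so $\operatorname{rank}(A)=3$ and $\dim\operatorname{range}(T)=3$.
• The domain of $T$ is $\mathbb{R}^4$, so $\dim\operatorname{domain}(T)=4$.
• Therefore $\dim\ker(T)=4-3=1$ (basis $\{(0,0,0,1)^T\}$).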
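
The rank/nullity bookkeeping of this page can be sketched in code: row reduce to get the rank, read off the nullity from Theorem 4.28, and confirm the stated kernel vectors. The Python sketch below uses our own `rref`, `rank` and `matvec` helpers with exact fractions; it also reduces $A^t$ for Example 5 to recover the range basis.

```python
from fractions import Fraction

def rref(rows):
    """Reduced row echelon form of a matrix (list of rows), computed with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        pivot = next((r for r in range(pivot_row, n_rows) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        p = m[pivot_row][col]
        m[pivot_row] = [x / p for x in m[pivot_row]]
        for r in range(n_rows):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
    return m

def rank(rows):
    return sum(1 for row in rref(rows) if any(x != 0 for x in row))

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

A5 = [[1, 2, 3], [0, -1, 1], [1, 1, 4]]          # Example 5
print(rank(A5), 3 - rank(A5))                    # 2 1  (rank, nullity)
print(matvec(A5, [-5, 1, 1]))                    # [0, 0, 0]
A5t = [list(col) for col in zip(*A5)]
# rref(A5t) == [[1, 0, 1], [0, 1, 1], [0, 0, 0]]: range basis (1, 0, 1), (0, 1, 1)

A4 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]  # rank/nullity example
print(rank(A4), 4 - rank(A4))                    # 3 1
print(matvec(A4, [0, 0, 0, 1]))                  # [0, 0, 0]
```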

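4.9 Transformations and Systems of Linear Equations
Consider $A\mathbf{x}=\mathbf{y}$, where $A\in\mathbb{R}^{m\times n}$. Let $T:\mathbb{R}^n\to\mathbb{R}^m$ be the linear transformation defined by $A$, written $T(\mathbf{x})=A\mathbf{x}=\mathbf{y}$.

Example 1 (homogeneous)
$$\begin{aligned}x_1+2x_2+3x_3&=0\\-x_2+x_3&=0\\x_1+x_2+4x_3&=0\end{aligned}$$
Using Gauss-Jordan elimination we get
$$\left[\begin{array}{ccc|c}1&2&3&0\\0&-1&1&0\\1&1&4&0\end{array}\right]\approx\left[\begin{array}{ccc|c}1&2&3&0\\0&1&-1&0\\0&-1&1&0\end{array}\right]\approx\left[\begin{array}{ccc|c}1&0&5&0\\0&1&-1&0\\0&0&0&0\end{array}\right]$$
• Solutions: $(-5r,\,r,\,r)^T$.
• One-dimensional subspace of $\mathbb{R}^3$ with basis $(-5,1,1)^T$.
• Theorem 4.33: The solution set of the homogeneous system $A\mathbf{x}=\mathbf{0}$ is a subspace. Proof: Slide 2-16.

Example 2 (nonhomogeneous, same coefficients as Example 1)
$$\begin{aligned}x_1+2x_2+3x_3&=11\\-x_2+x_3&=-2\\x_1+x_2+4x_3&=9\end{aligned}$$
Using Gauss-Jordan elimination,
$$\left[\begin{array}{ccc|c}1&2&3&11\\0&-1&1&-2\\1&1&4&9\end{array}\right]\approx\left[\begin{array}{ccc|c}1&0&5&7\\0&1&-1&2\\0&0&0&0\end{array}\right]\;\Longrightarrow\;\mathbf{x}=\begin{bmatrix}-5r+7\\r+2\\r\end{bmatrix}.$$
• Solutions: $(-5r+7,\ r+2,\ r)^t=r(-5,1,1)^t+(7,2,0)^t$, that is, arbitrary solution $=$ element of $\ker(A)$ (a solution of $A\mathbf{x}=\mathbf{0}$) $+$ a particular solution of $A\mathbf{x}=\mathbf{y}$ (with $r=0$).
• Geometrically: slide the kernel (the line defined by the vector $(-5,1,1)^t$) in the direction and distance defined by $(7,2,0)^t$.

Theorem 6.13 Nonhomogeneous Equations
Consider $T(\mathbf{x})=A\mathbf{x}=\mathbf{y}$. Let $\mathbf{x}_1$ be a (particular) solution. Every other solution can be written $\mathbf{x}=\mathbf{z}+\mathbf{x}_1$, where $T(\mathbf{z})=\mathbf{0}$.
Proof: $\mathbf{x}_1$ is a solution, so $A\mathbf{x}_1=\mathbf{y}$.
• Let $\mathbf{x}$ be any solution, so $A\mathbf{x}=\mathbf{y}$. Then $A\mathbf{x}=\mathbf{y}=A\mathbf{x}_1$, so $A\mathbf{x}-A\mathbf{x}_1=\mathbf{0}$, $A(\mathbf{x}-\mathbf{x}_1)=\mathbf{0}$, and $T(\mathbf{x}-\mathbf{x}_1)=\mathbf{0}$.
• Thus $\mathbf{x}-\mathbf{x}_1$ is an element of the kernel of $T$; call it $\mathbf{z}$. Then $\mathbf{x}-\mathbf{x}_1=\mathbf{z}$, so $\mathbf{x}=\mathbf{x}_1+\mathbf{z}$.
• Note that the solution is unique if and only if the only such $\mathbf{z}$ is $\mathbf{0}$.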
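
The "particular solution plus kernel" structure of Example 2 is easy to confirm numerically. This Python sketch (the helpers `matvec` and `solution` are ours) checks that $(-5,1,1)$ is sent to $\mathbf{0}$, that $(7,2,0)$ solves $A\mathbf{x}=\mathbf{y}$, that every member of the family $r(-5,1,1)+(7,2,0)$ is a solution, and, as in Theorem 6.13, that the difference of two solutions lies in the kernel.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

A = [[1, 2, 3], [0, -1, 1], [1, 1, 4]]
y = [11, -2, 9]
kernel_vec = [-5, 1, 1]      # basis of ker(A) from Example 1
particular = [7, 2, 0]       # particular solution from Example 2 (r = 0)

def solution(r):
    """Arbitrary solution r(-5, 1, 1) + (7, 2, 0) of A x = y."""
    return [p + r * k for p, k in zip(particular, kernel_vec)]

print(matvec(A, kernel_vec))   # [0, 0, 0]
print(matvec(A, particular))   # [11, -2, 9]
for r in range(-3, 4):
    assert matvec(A, solution(r)) == y

# Theorem 6.13: the difference of two solutions lies in the kernel.
diff = [a - b for a, b in zip(solution(4), solution(1))]
print(diff, matvec(A, diff))   # [-15, 3, 3] [0, 0, 0]
```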

Example 3
Solve using Gauss-Jordan elimination:
$$\begin{aligned}x_1+2x_2+3x_3+x_4&=1\\2x_1+3x_2+2x_3-x_4&=4\\3x_1+5x_2+5x_3&=5\\x_1+x_2-x_3-2x_4&=3\end{aligned}$$
$$\left[\begin{array}{cccc|c}1&2&3&1&1\\2&3&2&-1&4\\3&5&5&0&5\\1&1&-1&-2&3\end{array}\right]\approx\left[\begin{array}{cccc|c}1&2&3&1&1\\0&1&4&3&-2\\0&1&4&3&-2\\0&1&4&3&-2\end{array}\right]\approx\left[\begin{array}{cccc|c}1&0&-5&-5&5\\0&1&4&3&-2\\0&0&0&0&0\\0&0&0&0&0\end{array}\right]$$
• The arbitrary solution is
$$(5r+5s+5,\ -4r-3s-2,\ r,\ s)=\underbrace{r(5,-4,1,0)+s(5,-3,0,1)}_{\text{kernel of the mapping defined by }A}+\underbrace{(5,-2,0,0)}_{\text{particular solution, }r=s=0},$$
an arbitrary solution of $A\mathbf{x}=\mathbf{y}$.

Example 4
Solve the differential equation $\dfrac{d^2y}{dx^2}-9y=2x$.
Solution
• Let $D$ be the operation of taking the derivative: $D^2y-9y=2x$, i.e., $(D^2-9)y=2x$.
• Let $V$ be the vector space of functions that have second derivatives.
• Then $D^2-9$ is a linear transformation of $V$ into itself:
— $(D^2-9)(f+g)=D^2f+D^2g-9f-9g=(D^2-9)(f)+(D^2-9)(g)$;
— $(D^2-9)(cf)=c\,(D^2-9)(f)$.
• Denote it by $T$. The problem becomes $T(y)=2x$: a linear algebra problem!

• Warm-up: consider $dy/dx-9y=0$. Then $\dfrac{dy}{y}=9\,dx$, so $\ln|y|=9x+c$ and $y=Ce^{9x}$ (with $C=\pm e^{c}$).
• $De^{mx}=e^{mx}D(mx)=me^{mx}$.
• Solve $(D^2-9)y=2x$: arbitrary solution $=$ element of the kernel of $D^2-9$ $+$ particular solution of $(D^2-9)y=2x$.
• (1) Kernel: $(D^2-9)e^{mx}=0\Rightarrow(m^2-9)e^{mx}=0\Rightarrow(m-3)(m+3)e^{mx}=0\Rightarrow m=3$ or $m=-3$.
• Basis vectors of the kernel are thus $e^{3x}$ and $e^{-3x}$. An arbitrary vector in the kernel can be written $re^{3x}+se^{-3x}$.
• (2) Particular solution of $(D^2-9)y=2x$: try $y=cx$. Then $Dy=c$ and $D^2y=0$, so $-9cx=2x$ and $c=-2/9$.
• (3) An arbitrary solution to the differential equation is $y=re^{3x}+se^{-3x}-\dfrac{2x}{9}$.
• Another example: $(D^2-9)y=3e^{2x}$.
— Particular solution: $y=ce^{2x}$, so $Dy=2ce^{2x}$ and $D^2y=4ce^{2x}$. Then $4ce^{2x}-9ce^{2x}=3e^{2x}$, so $c=-3/5$.
— An arbitrary solution to the differential equation can be written $y=re^{3x}+se^{-3x}-\dfrac35e^{2x}$.
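
Both examples on this page can be spot-checked. The Python sketch below (all helper names are ours) verifies that every vector of the form $r(5,-4,1,0)+s(5,-3,0,1)+(5,-2,0,0)$ solves Example 3. It then checks the differential-equation solutions of Example 4 numerically, approximating $y''$ with a central difference (step $h=10^{-4}$, an arbitrary choice), so the residuals are small but not exactly zero; the values $r=1.5$, $s=-0.7$ and $r=0.4$, $s=2$ are arbitrary sample constants.

```python
import math

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Example 3: kernel vectors k1, k2 and particular solution p.
A3 = [[1, 2, 3, 1], [2, 3, 2, -1], [3, 5, 5, 0], [1, 1, -1, -2]]
y3 = [1, 4, 5, 3]
k1, k2, p = [5, -4, 1, 0], [5, -3, 0, 1], [5, -2, 0, 0]

def solution3(r, s):
    return [r * a + s * b + c for a, b, c in zip(k1, k2, p)]

# Example 4: numerical check of y'' - 9y = right-hand side.
def second_derivative(f, x, h=1e-4):
    """Central-difference approximation to f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / (h * h)

def ode_solution(r, s):
    """r e^{3x} + s e^{-3x} - 2x/9, claimed to solve y'' - 9y = 2x."""
    return lambda x: r * math.exp(3 * x) + s * math.exp(-3 * x) - 2 * x / 9

def ode_solution_exp(r, s):
    """r e^{3x} + s e^{-3x} - (3/5) e^{2x}, claimed to solve y'' - 9y = 3 e^{2x}."""
    return lambda x: r * math.exp(3 * x) + s * math.exp(-3 * x) - 0.6 * math.exp(2 * x)

def residual(y, rhs, x):
    return second_derivative(y, x) - 9 * y(x) - rhs(x)

y1 = ode_solution(1.5, -0.7)
y2 = ode_solution_exp(0.4, 2.0)
for x in (-1.0, 0.0, 0.5, 1.0):
    # both residuals should be close to 0 (finite-difference error only)
    print(x, residual(y1, lambda t: 2 * t, x), residual(y2, lambda t: 3 * math.exp(2 * t), x))
```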

Demo: Tacoma Bridge