SPEECH RECOGNITION FOR A TRAVEL RESERVATION SYSTEM ...

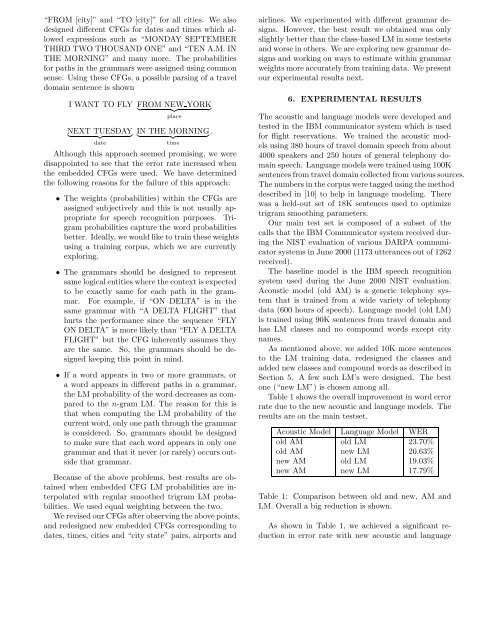

“FROM [city]” and “TO [city]” for all cities. We also designed different CFGs for dates and times which allowed expressions such as “MONDAY SEPTEMBER THIRD TWO THOUSAND ONE” and “TEN A.M. IN THE MORNING” and many more. The probabilities for paths in the grammars were assigned using common sense. Using these CFGs, a possible parsing of a travel domain sentence is shown:

$$\text{I WANT TO FLY}\ \underbrace{\text{FROM NEW YORK}}_{\text{place}}\ \underbrace{\text{NEXT TUESDAY}}_{\text{date}}\ \underbrace{\text{IN THE MORNING}}_{\text{time}}.$$

Although this approach seemed promising, we were disappointed to see that the error rate increased when the embedded CFGs were used. We have determined the following reasons for the failure of this approach:

• The weights (probabilities) within the CFGs are assigned subjectively, and this is not usually appropriate for speech recognition purposes. Trigram probabilities capture the word probabilities better. Ideally, we would like to train these weights using a training corpus, which we are currently exploring.

• The grammars should be designed to represent the same logical entities, where the context is expected to be exactly the same for each path in the grammar. For example, if “ON DELTA” is in the same grammar with “A DELTA FLIGHT”, that hurts the performance, since the sequence “FLY ON DELTA” is more likely than “FLY A DELTA FLIGHT” but the CFG inherently assumes they are the same. So, the grammars should be designed keeping this point in mind.

• If a word appears in two or more grammars, or a word appears in different paths in a grammar, the LM probability of the word decreases as compared to the n-gram LM. The reason for this is that when computing the LM probability of the current word, only one path through the grammar is considered.
So, grammars should be designed to make sure that each word appears in only one grammar and that it never (or rarely) occurs outside that grammar.

Because of the above problems, best results are obtained when embedded CFG LM probabilities are interpolated with regular smoothed trigram LM probabilities. We used equal weighting between the two.

We revised our CFGs after observing the above points, and redesigned new embedded CFGs corresponding to dates, times, cities and “city state” pairs, airports and airlines. We experimented with different grammar designs. However, the best result we obtained was only slightly better than the class-based LM in some test sets and worse in others. We are exploring new grammar designs and working on ways to estimate within-grammar weights more accurately from training data. We present our experimental results next.

6. EXPERIMENTAL RESULTS

The acoustic and language models were developed and tested in the IBM communicator system which is used for flight reservations. We trained the acoustic models using 380 hours of travel domain speech from about 4000 speakers and 250 hours of general telephony domain speech. Language models were trained using 100K sentences from travel domain collected from various sources. The numbers in the corpus were tagged using the method described in [10] to help in language modeling. There was a held-out set of 18K sentences used to optimize trigram smoothing parameters.

Our main test set is composed of a subset of the calls that the IBM Communicator system received during the NIST evaluation of various DARPA communicator systems in June 2000 (1173 utterances out of 1262 received).

The baseline model is the IBM speech recognition system used during the June 2000 NIST evaluation. Acoustic model (old AM) is a generic telephony system that is trained from a wide variety of telephony data (600 hours of speech).
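
For reference, the equal-weight interpolation of embedded CFG LM probabilities with smoothed trigram LM probabilities mentioned at the end of Section 5 can be illustrated with a minimal sketch. The function names (`interpolate_lm`, `sentence_log_prob`), the per-word probability pairs, and the use of simple linear interpolation are assumptions made for illustration; the text only states that the two probabilities were interpolated with equal weighting.

```python
import math


def interpolate_lm(p_cfg: float, p_trigram: float, cfg_weight: float = 0.5) -> float:
    """Linearly interpolate two LM probabilities for the same word.

    cfg_weight=0.5 corresponds to the equal weighting mentioned in the text.
    Linear interpolation itself is an assumption of this sketch.
    """
    if not 0.0 <= cfg_weight <= 1.0:
        raise ValueError("cfg_weight must lie in [0, 1]")
    return cfg_weight * p_cfg + (1.0 - cfg_weight) * p_trigram


def sentence_log_prob(word_probs, cfg_weight: float = 0.5) -> float:
    """Sum log interpolated probabilities over (p_cfg, p_trigram) pairs.

    Assumes the smoothed trigram probability is non-zero for every word,
    so the interpolated value is always positive.
    """
    return sum(
        math.log(interpolate_lm(p_cfg, p_tri, cfg_weight))
        for p_cfg, p_tri in word_probs
    )


# Hypothetical per-word probabilities, not taken from the paper.
example = [(0.02, 0.10), (0.30, 0.25)]
print(sentence_log_prob(example))
```

With this form, a word that receives a low probability from the single grammar path considered still gets support from the trigram estimate, which is one plausible reading of why the interpolated model worked best.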
Language model (old LM) is trained using 90K sentences from travel domain and has LM classes and no compound words except city names.

As mentioned above, we added 10K more sentences to the LM training data, redesigned the classes and added new classes and compound words as described in Section 5. A few such LM’s were designed. The best one (“new LM”) is chosen among all.

Table 1 shows the overall improvement in word error rate due to the new acoustic and language models. The results are on the main test set.

| Acoustic Model | Language Model | WER |
|---|---|---|
| old AM | old LM | 23.70% |
| old AM | new LM | 20.63% |
| new AM | old LM | 19.03% |
| new AM | new LM | 17.79% |

Table 1: Comparison between old and new, AM and LM. Overall a big reduction is shown.

As shown in Table 1, we achieved a significant reduction in error rate with new acoustic and language