GPU Performance Analysis and Optimization - GPU Technology ...



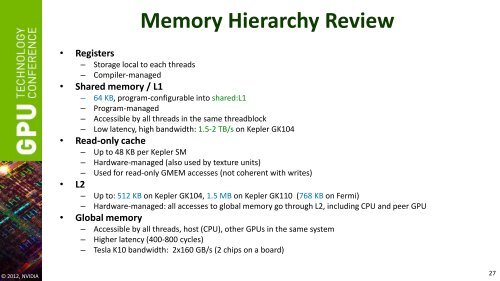

Memory Hierarchy Review

- Registers
  - Storage local to each thread
  - Compiler-managed
- Shared memory / L1
  - 64 KB, program-configurable into shared:L1
  - Program-managed
  - Accessible by all threads in the same threadblock
  - Low latency, high bandwidth: 1.5-2 TB/s on Kepler GK104
- Read-only cache
  - Up to 48 KB per Kepler SM
  - Hardware-managed (also used by texture units)
  - Used for read-only GMEM accesses (not coherent with writes)
- L2
  - Up to 512 KB on Kepler GK104, 1.5 MB on Kepler GK110 (768 KB on Fermi)
  - Hardware-managed: all accesses to global memory go through L2, including CPU and peer GPU
- Global memory
  - Accessible by all threads, host (CPU), other GPUs in the same system
  - Higher latency (400-800 cycles)
  - Tesla K10 bandwidth: 2x160 GB/s (2 chips on a board)

© 2012, NVIDIA
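To make the levels above concrete, here is a minimal CUDA sketch (not taken from the slides) of a per-block sum that touches registers, shared memory, and global memory in turn. The names `blockSum`, `blockSums`, `check`, and `kBlockSize` are hypothetical, chosen only for illustration. The `const ... __restrict__` qualifier is a hint that may let the compiler route loads through the read-only cache on devices of compute capability 3.5 and later; whether it does so is up to the compiler.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical block size for this sketch; the tree reduction assumes a power of two.
constexpr int kBlockSize = 256;
static_assert((kBlockSize & (kBlockSize - 1)) == 0, "kBlockSize must be a power of two");

// Each block writes the sum of its kBlockSize consecutive input elements to out[blockIdx.x].
__global__ void blockSum(const float* __restrict__ in, float* out, int n)
{
    // The shared tile is sized for exactly kBlockSize threads.
    assert(blockDim.x == static_cast<unsigned int>(kBlockSize));

    // Shared memory: program-managed, visible to all threads of this threadblock.
    __shared__ float tile[kBlockSize];

    const int gid = blockIdx.x * kBlockSize + threadIdx.x;

    // 'v' is a per-thread value that the compiler normally keeps in a register.
    // The load itself comes from global memory (via L2, possibly the read-only cache).
    const float v = (gid < n) ? in[gid] : 0.0f;

    tile[threadIdx.x] = v;
    __syncthreads();

    // Tree reduction carried out entirely in shared memory.
    for (int stride = kBlockSize / 2; stride > 0; stride >>= 1) {
        if (threadIdx.x < stride) {
            tile[threadIdx.x] += tile[threadIdx.x + stride];
        }
        __syncthreads();
    }

    // One global-memory write per block.
    if (threadIdx.x == 0) {
        out[blockIdx.x] = tile[0];
    }
}

// Hypothetical helper: abort with a message if a CUDA runtime call failed.
static void check(cudaError_t err, const char* what)
{
    if (err != cudaSuccess) {
        std::fprintf(stderr, "%s failed: %s\n", what, cudaGetErrorString(err));
        std::exit(EXIT_FAILURE);
    }
}

// Host wrapper: copies the input to the device, runs the kernel, returns one sum per block.
std::vector<float> blockSums(const std::vector<float>& host_in)
{
    const int n = static_cast<int>(host_in.size());
    const int blocks = (n + kBlockSize - 1) / kBlockSize;
    std::vector<float> host_out(blocks, 0.0f);
    if (n == 0) {
        return host_out;
    }

    float* d_in = nullptr;
    float* d_out = nullptr;
    check(cudaMalloc(&d_in, n * sizeof(float)), "cudaMalloc(d_in)");
    check(cudaMalloc(&d_out, blocks * sizeof(float)), "cudaMalloc(d_out)");
    check(cudaMemcpy(d_in, host_in.data(), n * sizeof(float), cudaMemcpyHostToDevice),
          "cudaMemcpy host-to-device");

    blockSum<<<blocks, kBlockSize>>>(d_in, d_out, n);
    check(cudaGetLastError(), "kernel launch");

    // This blocking copy also waits for the kernel to finish.
    check(cudaMemcpy(host_out.data(), d_out, blocks * sizeof(float), cudaMemcpyDeviceToHost),
          "cudaMemcpy device-to-host");

    check(cudaFree(d_in), "cudaFree(d_in)");
    check(cudaFree(d_out), "cudaFree(d_out)");
    return host_out;
}
```

Calling `blockSums` on a vector of 512 ones launches two blocks and should return two partial sums of 256 each. Each input element is read from global memory once, and the repeated reduction traffic stays in low-latency shared memory; that placement is the main design choice in the sketch.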