Interaction with co-located haptic feedback in virtual reality

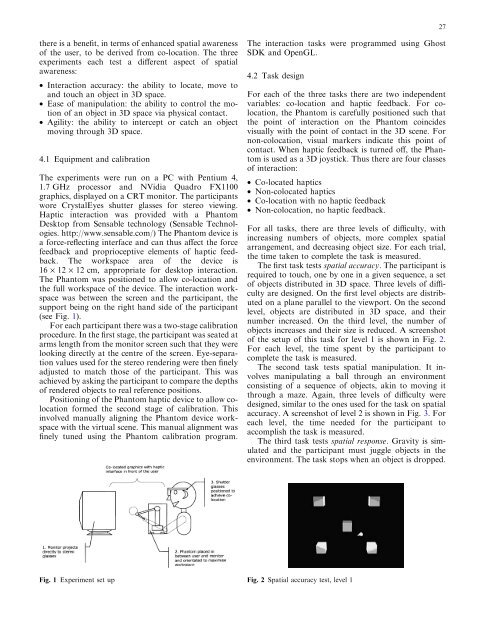

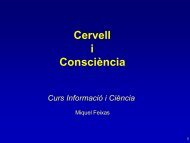

there is a benefit, in terms of enhanced spatial awareness of the user, to be derived from co-location. The three experiments each test a different aspect of spatial awareness:

• Interaction accuracy: the ability to locate, move to and touch an object in 3D space.
• Ease of manipulation: the ability to control the motion of an object in 3D space via physical contact.
• Agility: the ability to intercept or catch an object moving through 3D space.

4.1 Equipment and calibration

The experiments were run on a PC with a 1.7 GHz Pentium 4 processor and NVidia Quadro FX1100 graphics, displayed on a CRT monitor. Participants wore CrystalEyes shutter glasses for stereo viewing. Haptic interaction was provided by a Phantom Desktop from Sensable Technologies (http://www.sensable.com/). The Phantom is a force-reflecting interface and can therefore provide both the force-feedback and the proprioceptive elements of haptic feedback. The workspace of the device is 16 × 12 × 12 cm, which is appropriate for desktop interaction.

The Phantom was positioned to allow co-location while keeping the full workspace of the device available. The interaction workspace lay between the screen and the participant, with the device support on the participant's right-hand side (see Fig. 1).

For each participant there was a two-stage calibration procedure. In the first stage, the participant was seated at arm's length from the monitor, looking directly at the centre of the screen.
Eye-separation values used for the stereo rendering were then finely adjusted to match those of the participant. This was achieved by asking the participant to compare the depths of rendered objects with real reference positions.

Positioning the Phantom haptic device to allow co-location formed the second stage of calibration. This involved manually aligning the Phantom device workspace with the virtual scene; the manual alignment was then fine-tuned using the Phantom calibration program. The interaction tasks were programmed using the Ghost SDK and OpenGL.

4.2 Task design

For each of the three tasks there are two independent variables: co-location and haptic feedback. For co-location, the Phantom is carefully positioned such that the point of interaction on the Phantom coincides visually with the point of contact in the 3D scene. For non-co-location, visual markers indicate this point of contact. When haptic feedback is turned off, the Phantom is used as a 3D joystick.
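The two independent variables can be illustrated with a minimal Python sketch. It assumes that the calibration step yields a rigid device-to-scene transform (rotation `R`, translation `t`, in millimetres), that non-co-location can be modelled as a fixed offset between the hand and the rendered contact point (`NONCOLOC_OFFSET`, a hypothetical value), and that contact forces follow a simple penalty (spring) model against a spherical target with a hypothetical stiffness `STIFFNESS`. All names are illustrative; the paper does not say how its Ghost SDK code computes forces, so this is not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]

# Hypothetical parameters for illustration only (not reported in the paper).
STIFFNESS = 0.5                             # N per mm of penetration
NONCOLOC_OFFSET: Vec3 = (0.0, 0.0, -200.0)  # mm between hand and rendered contact point


@dataclass(frozen=True)
class Calibration:
    """Rigid device-to-scene transform, assumed to result from the manual alignment."""
    R: Mat3  # rotation (orthonormal)
    t: Vec3  # translation, mm


@dataclass(frozen=True)
class Condition:
    colocated: bool
    haptics: bool


def _matvec(m: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def device_to_scene(p_device: Vec3, calib: Calibration, cond: Condition) -> Vec3:
    """Map the stylus tip from device coordinates to scene coordinates."""
    p = tuple(a + b for a, b in zip(_matvec(calib.R, p_device), calib.t))
    if not cond.colocated:
        # Contact point is drawn away from the hand and shown by a visual marker.
        p = tuple(a + b for a, b in zip(p, NONCOLOC_OFFSET))
    return p


def contact_force(p_scene: Vec3, centre: Vec3, radius: float, cond: Condition) -> Vec3:
    """Penalty (spring) force pushing the tip out of a sphere, in scene coordinates."""
    if not cond.haptics:
        return (0.0, 0.0, 0.0)       # Phantom acts as a plain 3D joystick
    d = tuple(a - b for a, b in zip(p_scene, centre))
    dist = math.sqrt(sum(x * x for x in d))
    depth = radius - dist
    if depth <= 0.0 or dist == 0.0:  # no contact, or push direction undefined
        return (0.0, 0.0, 0.0)
    return tuple(STIFFNESS * depth * x / dist for x in d)


def force_to_device(f_scene: Vec3, calib: Calibration) -> Vec3:
    """Rotate a scene-space force back into the device frame (R^-1 = R^T)."""
    r_t = tuple(tuple(calib.R[j][i] for j in range(3)) for i in range(3))
    return _matvec(r_t, f_scene)
```

For example, in the co-located haptic condition with an identity calibration, a tip at the origin and a 10 mm sphere centred at (0, 0, -5) give a penetration depth of 5 mm and hence a force of 0.5 × 5 = 2.5 N along +z. With haptics off, the same configuration returns zero force, so only the visual cue remains.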
Crossing these two variables gives four classes of interaction:

• Co-located haptics
• Non-co-located haptics
• Co-location with no haptic feedback
• Non-co-location, no haptic feedback.

For all tasks there are three levels of difficulty, with increasing numbers of objects, more complex spatial arrangements and decreasing object size. For each trial, the time taken to complete the task is measured.

The first task tests spatial accuracy. The participant is required to touch a set of objects distributed in 3D space, one by one in a given sequence. Three levels of difficulty were designed. On the first level, objects are distributed on a plane parallel to the viewport. On the second level, objects are distributed in 3D space and their number is increased. On the third level, the number of objects increases further and their size is reduced. A screenshot of the setup of this task for level 1 is shown in Fig. 2. For each level, the time the participant takes to complete the task is measured.

The second task tests spatial manipulation. It involves manipulating a ball through an environment consisting of a sequence of objects, akin to moving it through a maze. Again, three levels of difficulty were designed, similar to those used for the spatial accuracy task. A screenshot of level 2 is shown in Fig. 3.
For each level, the time the participant needs to accomplish the task is measured.

The third task tests spatial response. Gravity is simulated and the participant must juggle objects in the environment. The task stops when an object is dropped.

Fig. 1 Experiment set-up
Fig. 2 Spatial accuracy test, level 1
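The juggling task implies a small physics loop. The paper does not describe its simulation, so the following is only a minimal sketch under stated assumptions: balls are spheres under gravity along -y, integrated with semi-implicit Euler at a fixed 1 ms step; the stylus tip is a point whose velocity is estimated by finite differences; a hit reflects the ball's velocity relative to the tip using a hypothetical restitution factor `RESTITUTION`; and a ball counts as dropped once it falls below a hypothetical height `FLOOR_Y`. Following the timing measure used for the other tasks, `run_trial` returns the elapsed time until the first drop, which is an assumed reading of how this task was scored.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical simulation constants (not reported in the paper).
G = 9.81           # m/s^2, gravity acts along -y
DT = 0.001         # s, fixed time step
FLOOR_Y = -0.10    # m, a ball below this height counts as dropped
RESTITUTION = 0.9  # fraction of approach speed kept after a stylus hit


@dataclass
class Ball:
    pos: List[float]
    vel: List[float]
    radius: float


def step(balls: List[Ball], tip: Vec3, tip_vel: Vec3) -> bool:
    """Advance every ball by one step; return False as soon as one is dropped."""
    for b in balls:
        b.vel[1] -= G * DT                   # gravity
        for i in range(3):                   # semi-implicit Euler: new velocity moves the ball
            b.pos[i] += b.vel[i] * DT
        d = [b.pos[i] - tip[i] for i in range(3)]
        dist = math.sqrt(sum(x * x for x in d))
        if dist <= b.radius:                 # stylus tip is touching the ball
            n = [x / dist for x in d] if dist > 0.0 else [0.0, 1.0, 0.0]
            v_rel_n = sum((b.vel[i] - tip_vel[i]) * n[i] for i in range(3))
            if v_rel_n < 0.0:                # approaching: reflect the normal component
                for i in range(3):
                    b.vel[i] -= (1.0 + RESTITUTION) * v_rel_n * n[i]
            for i in range(3):               # push the ball back onto the contact surface
                b.pos[i] = tip[i] + n[i] * b.radius
        if b.pos[1] < FLOOR_Y:
            return False
    return True


def run_trial(balls: List[Ball], stylus: Callable[[float], Vec3],
              max_time: float = 60.0) -> float:
    """Simulate until the first drop; return elapsed time (s), capped at max_time."""
    t, prev_tip = 0.0, stylus(0.0)
    while t < max_time:
        t += DT
        tip = stylus(t)
        tip_vel = tuple((tip[i] - prev_tip[i]) / DT for i in range(3))
        if not step(balls, tip, tip_vel):
            return t
        prev_tip = tip
    return max_time
```

Passing the stylus trajectory as a function of time keeps the sketch testable without hardware; in a live setup, `stylus(t)` would instead return the current tip position reported by the haptic device.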