Beam Weapons

Beam Weapons

Beam Weapons

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

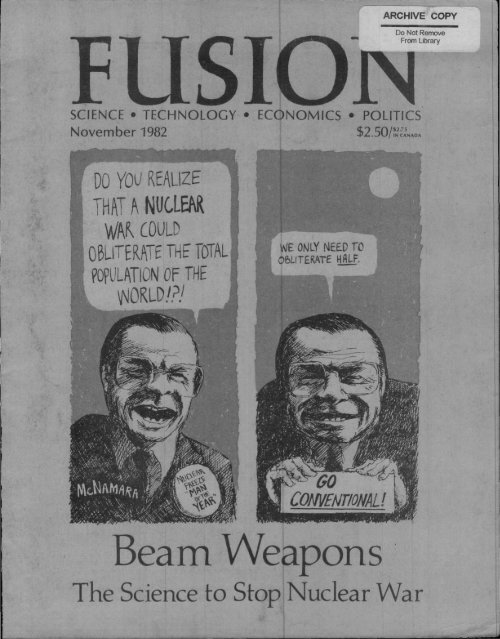

FUSIONSCIENCE • TECHNOLOGY • ECONOMICS • POLITICSNovember 1982<strong>Beam</strong> <strong>Weapons</strong>The Science to Stop Nuclear War

An Open Letter to ReadersAt a time when "nuclear freeze" advocates are using antiwar rhetoric to promote afreeze on nuclear power plants, Fusion is not just a good magazine. It is the onlyscience magazine fighting to continue the American tradition of progress.The printing and mailing of our 1982 issues have been delayed because of our financialdifficulties—difficulties that have been fostered by the same forces who bankroll thenuclear freeze and environmentalist movements.We resumed regular publication in September 1982 with a special format issue ofFusion. This November issue, which contains feature material from the unpublishedMay 1982 issue, is our third special format issue. We plan to continue to publish morethan one issue a month in order to send readers the highlights of all the back issueswe have prepared, plus new materials. How fast we can catch up to our regularschedule and our full 64-page format depends on you.With your financial help, we can win this fight for America, and get Fusion out regularlyto its 200,000 readers.• Join the Fusion Energy Foundation today. Memberships are $75 (individual), $250(sustainer), and $1,000 (corporate).• Send us a contribution to further our research and educational work and publiclectures. Contributions to the FEF are tax-deductible.• Donate subscriptions to your local schools, libraries, and legislators.Paul Gallagher, Executive Director, Fusion Energy Foundation

FUSIONVol. 5, No. 5 November 19822. Editorial<strong>Beam</strong> <strong>Weapons</strong>, the Nuclear Freeze, and the 1982 ElectionsFeaturesT" <strong>Beam</strong> <strong>Weapons</strong>: The Science to Stop Nuclear WarDr. Steven BardwellYes, there is a way to stop nuclear war—by developing directedenergy beam weapons. A crash program to pursue these frontiertechnologies would have a revolutionary impact on society,accelerating the development of fusion energy and bringing usinto the plasma age.3/ Systems Analysis: White Collar GenocideLyndon H. LaRouche, jr.When applied to the planning of whole economies, the method ofsystems analysis leads inescapably to genocide. The opposing Platonictheory of scientific inquiry is the most powerful weapon not only fordestroying Malthusianism, but also for building the technologiesrequired to prevent genocide. Part 2 of this provocative articlediscusses geometry as the "language of vision" and its application toeconomic analysis.The US. NeedsA <strong>Beam</strong> <strong>Weapons</strong> ProgramPresident Ronald ReaganThe White HouseWashington, D.C. 20500Send Your Letter to President Reagan!Dear Mr. President:I urge you to end the threat of nuclear war by committing the United Statesto a crash program to develop directed energy beam weapons. By developingthis ballistic missile defense system we will be able to destroy nuclear missilesin mid-flight, before their warheads have been released. At the same time,beam research will also speed the development of nuclear fusion power, asource of unlimited cheap energy from seawater, and bring us the technologiesof the plasma age.Like NASA's Apollo program, a crash program on the frontiers of science todevelop beam weapons will revitalize the economy, our industries, and ourscience education system.EDITORIAL STAFFEditor-in-ChiefDr. Steven BardwellManaging EditorMarjorie Mazel HechtAssistant Managing EditorLydia Dittler SchulmanFusion Technology EditorCharles B. StevensWashington EditorMarsha FreemanBiology EditorCarol ClearyEnergy EditorWilliam EngdahlArt DirectorAlan YuePhoto EditorCarlos de HoyosAdvertising ManagerAnton ChaitkinCirculation and Subscription ManagerDenise Ham(212)247-8820FUSION is published 4 times a year in 1982 inSeptember, Octpber. Nevember, and Decemberby the Fusion Energy Foundation, 304 West 58thStreet, Fifth Floor, New York, N.Y. 10019. Tel. (212)247-8439. Dedicated to providing accurate andccmprehensive informatipn pn advanced energytechnclpgies and pplicies, FUSION is committedto restoring American scientific and technologicalleadership. FUSION coverage of the frontiers pfscience focuses on the self-developing qualities ofthe physical universe in such areas as plasmaphysics—the basis fpr fusion ppwer— as well asbiolpgy and micrcphysics, and includes groundbreakingstudies of the historical development ofscience and technology.The views of the FEF are stated in the editorials.Opinions expressed in articles are not necessarilythose of the FEF directors or advisory board.Subscriptions by mail are $20 for 10 issues orS38 for 20 issues in the USA; $25 for 10 issues inCanada. Airmail subscriptions to other countriesare $40 for 10 issues.Address ail correspondence to FUSION, FusionEnergy Foundation, P.O. Box 1438, Radio CityStation, New York, N.Y. 10101.Second class ppstage is paid at New York, N.Y.and at additional mailing offices.Note to Libraries and SubscribersTo comply with postal regulations, the issues ofFUSION in Volume 5 are numbered in the orderin which they were printed and mailed. October1982. Vol. 5, No. 4, follows September 1982, Vol.5, NP. 3, which fcllpws December 1981, Vol. 5,Ne. 2.Subscribers who purchased a 10-issue subscriptionwill receive all 10 issues. The FEF expectsto publish ten issues of FUSION in 1983, but onlyfour issues in 1982. Highlights of the six remaining1982 issues—January through August—will be includedin future issues.Copyright 6 1982Fusion Energy FoundationPrinted in the USAAll Rights ReservedISSN 0148-0537 USPS 437-370NAME..STATE.Cover design by Ginny Baier. Front coverillustration by Christopher Sloan.

Editorial<strong>Beam</strong> <strong>Weapons</strong>, the Nuclear Freeze,And the 1982 ElectionsThis is a guest editorial by Lyndon H. LaRouche, jr., afounding member of the Fusion Energy Foundation and amember of its board of directors. LaRouche heads one ofthe major political action committees in the DemocraticParty, the National Democratic Policy Committee(NDPC).The November 1982 congressional elections were, in thewords of a popular idiom, a "Mexican stand-off." The Democratsgained 25 seats in the House of Representatives, again of no trend-setting significance, while the Republicansheld their majority in the Senate. As a result, the real factionalissues in the nation's capital at this moment are notbetween the two major parties, but across party lines.The major fight at this moment is between backers of aKissinger-guided State Department and backers of the ReaganDefense Department's push for the development ofspace-based antimissile beam weapons.Otherwise, the important feature of the election is aprofound discrediting of the right-wing conservative factionsof both major parties, and also a significant, if indecisive,weakening of the left-wing forces of the DemocraticParty. Under the pressure of a new economicdepression, increasing portions of the electorate are lookingback affectionately to the memory of President FranklinD. Roosevelt, and are turning their backs to both rightwingand left-wing varieties of political eccentricities.The <strong>Beam</strong> Weapon IssueAlthough the proposal to develop space-based antimissilebeam weapons is only one among many majorissues dividing forces in Washington, D.C., it is at thepresent moment the single issue upon which the entiretyof near-term U.S. policy directions will turn. A summaryof the history of the beam-weapon policy helps to makethe issue and its connections clearer.The discussions leading to the proposal of beam weaponsbegan during the summer of 1977, through collaborativedeliberations between retired Air Force Intelligencechief Major-General George Keegan and Dr. Steven Bardwellof the Fusion Energy Foundation. Putting their headstogether, Keegan and Bardwell established beyond doubtthat both superpowers had the scientific and technologicalmeans to launch crash programs to develop and deploybeam weapons capable of destroying large parts of thenuclear missile arsenal of the opposing superpower.Although Keegan broke off direct collaboration duringautumn 1977 over the issue of this writer's opposition tothe "Camp David" policy, Keegan's and Bardwell's collaboratorsseparately launched public campaigns for beamweapondevelopment during that autumn period, overhysterical opposition to both from the London InternationalInstitute for Strategic Studies (IISS).During early 1982, this writer composed a comprehensivestrategic policy draft, centered around a detailed proposalfor a U.S. space-based antimissile beam-weaponsdevelopment. This policy draft was circulated in prepublishedform to key military and other circles, and laterpublished with wide circulation as a policy study issuedby the NDPC. A concerted effort of support for this policyproposal was launched during April-May 1982, and knowledgeand support for the NDPC proposal spread.As Dr. Edward Teller reported during an Oct. 26 publicaddress in Washington, D.C., some of his "younger friends"won him over to becoming a spokesman for this policy.Teller has adopted the full range of proposed features ofthe policy. Defense Secretary Caspar Weinberger has publiclysupported, at the very least, the military hardwarefeatures and implications of the same policy.Currently, opposition to the policy comes chiefly fromthe allied forces of Henry A. Kissinger and AFL-CIO PresidentLane Kirkland, both supported by the "conventionalweapons" build-up liberal faction of Governor Averell Harriman,Senator Edward Kennedy, and Senator Gary Hart.In the layman's terms, the NDPC's beam-weapons policyhas the following leading points:(1) That the only possible means for ending the age ofnuclear terror is the development of technologies throughwhich nuclear missiles can be destroyed with more than99 percent effectiveness in mid-flight. Without beam weapons,under present or foreseeable political conditions, neithersuperpower would be willing to reduce nuclear capabilitybelow its estimate of assured minimal capabilityfor total physical destruction of the home-base of the opposingpower.(2) That the science and technology for such weaponssystemsdeployment exist at the established or earlypotentialcapacity of both superpowers.(3) That a "crash effort" to develop and deploy suchantimissile defenses would incur no net cost to the U.S.economy, since the civilian-technological by-products ofthe development effort would stimulate a high-technologyeconomic boom in the United States.(4) That the only foreseeable trigger for actual thermonuclearwar between the two superpowers now in sightwould be a combination of "conventional wars" amongregions of the developing sector and a significant weakeningof the relative strategic power of one of the twosuperpowers. A weakened and threatened superpower,either the United States or the Soviet Union, would fall2 November 1982 FUSION

ack on the last resort of its thermonuclear arsenal, usingthermonuclear blackmail to correct the imbalance. Thus,the savage weakening of the West through the presentworld economic depression, combined with regional conventionalwarfare in the developing sector, makes the otherwiseunthinkable thermonuclear war an increasing possibilityfor the years immediately ahead.(5) That the agenda of arms-negotiations between Washingtonand Moscow must be scrapped and replaced witha new agenda based on a policy of development of defensivebeam weapons to the end of ending the age ofthermonuclear terror (the policy of mutually assured destruction—MAD}.(6) That this qualitative change in military technologywill, by itself, merely postpone the danger of thermonuclearwar. We must include a feature which carries usbeyond mere war postponement into circumstances ofdurable peace.(7) That the basis for durable peace, as Teller emphasizedin his own choice of terms, is a U.S. commitment tohigh-technology economic development of the developingnations, seeking to win the Soviet Union to cooperatingin this effort on behalf of the "common aims of mankind."The additional special feature of the NDPC-policy outlineis that it specifies that the research and development forthis be civilian-based, rather than locked away in secretmilitary projects. The NDPC has proposed that the UnitedStates launch several civilian-economy crash programs toperfect the technology used in antimissile beam weapons.For example: NASA should be given the assignment forkeystone tasks, including the development of manned Earthorbitingspace laboratories, and a manned Mars landing,as well as manned Moon stations. Such civilian researchwill develop all the technology required to be properlyrepackaged as space-based beam-weapons systems.An intensive schedule of speaking engagements by theFEF's Dr. Steven Bardwell substantially weakened the causeof the "nuclear freeze" movement in California during thetwo weeks immediately preceding the election. Bardwell'sspeaking-tour had two significant kinds of effects. AlthoughBardwell was one of the designers of the beamweaponsproject, he has done his work outside the boundsof secret research. Therefore, Bardwell is free to offer thekind of technical-scientific clarifications of beam-weaponsprinciples which Teller is not presently legally free to revealpublicly. Second, Dr. Bardwell succeeded in winning asignificant number of university students and some specialistsaway from the "nuclear freeze" cause, by convincingthem that beam-weapon deployment is the effectiveapproach to ending the age of thermonuclear terror.The General Line-UpAt the highest level of U.S. circles, the practical divisionof forces is between the supporters and adversaries of the"New Yalta" policy of Britain's Lord Carrington. Carrington,'along-standing controller of Henry A. Kissinger, is atthe center of an Anglo-Soviet game intended to break Europeaway from the United States, and to kick U.S. influenceout of the Middle East and other parts of the world.The principal bastion of pro-Carrington policies in theU.S. government is the State Department. Kissinger alliesin the State Department and ultra-liberals in the DemocraticParty are the principal backers of neo-Malthusianpolicies concerning population reduction, technology, andeconomic policy. This faction opposes beam-weapons development,and proposes to develop a reduced U.S. conventionalmilitary establishment, designed to conduct regionalwars against nations in the developing sector.This division of forces along policy lines overlaps a seconddivision within U.S. leading circles. This second issuecenters around the publicized case of alleged Soviet spyGeoffrey Arthur Prime, alleged to have delivered detailedNATO plans and U.S.-NATO codes to Moscow. British refusalto inform the U.S. government of the massive leakof U.S. secrets by Britain's secret services, and massiveother indications of Anglo-Soviet collaboration against theUnited States, have infuriated large parts of leading military,intelligence, and other circuits in and around Washington,D.C.Informed sources indicate that the Prime affair is generallyviewed as a British smoke-screen, a diversionary operation,covering up a much more serious business. Thefingers point in the direction of Lord Carrington's "NewYalta" package, viewed as "treasonous" by some critics,and also repeated charges to the effect that Kissinger wasa Soviet intelligence asset during the period of his postwarservice in the Oberammergau Center in occupied Germany:the so-called Odra Cell affair.Although the general U.S. public is only dimly aware ofsuch issues up to the present moment, the lines of crosspartydivisions in and around Washington, D.C, intersectan eruption of rage against the effect of the policies ofFederal Reserve Chairman Paul Volcker among the populationgenerally. The recent elections reflect this rage onlyindirectly. Except in isolated cases, the percentage of theelectorate participating in the elections was typical for amidterm election: approximately 34 percent. Also typical,the mobilization of certain sections of the electorate aroundspecial issues, such as the "nuclear freeze" referendum,tilted the results of the elections such that more militantlyorganized minority views within the electorate affected theoverall vote in such a way that the result of votes cast doesnot efficiently represent the moods in the population as awhole.In this election, the voters voted less frequently for candidatesthan against candidates. With relatively few exceptions,voters voted for candidates not because they likethose candidates, but because they wished to destroy thepolitical career of the opponent. The voters are not to beblamed for this; with few exceptions, they had no candidateor party policy worth voting for.At the top, and among the electorate, the politicians ofthe United States are wobbling on a political knife's edge.Both the government and the electorate could easily fallto one side or the other, to the side of beam-weaponssupporters, or to the side of Kissinger's friends. WhetherPresident Reagan capitulates to Kissinger and Kirkland, oroverrides Kissinger's State Department-centered backers,will probably determine the way the United States and theworld go during the months immediately ahead.FUSION November 1982 3

<strong>Beam</strong> <strong>Weapons</strong>: The Scienceby Dr. Steven BardwellFor years the threat of nuclear warhas hung over the heads of Americans,the fear that by accident or designsomeone would press a red button andsend a nuclear-armed intercontinentalballistic missile (ICBM) to turn U.S.cities into infernos. Now, after 30 yearsof living with this horrible possibility,there is a definite means to ensure thatnuclear war will never happen: thetechnology of directed energy beamweapons.We could have the first generationof beam weapons within 5 years—ifthe United States put a priority onbeam research. Such beam weaponswould be able to find, track, and destroya nuclear-armed ICBM, preventingits explosion. And in another10 to 15 years, we could develop asecond generation of space-basedbeam weapons, giving even greaterprotection with a capacity to destroy10,000 ICBMs at once.Such beam weapons would makenuclear war obsolete. <strong>Beam</strong> weaponsdo not simply hit a missile silo or preventa nuclear missile from beinglaunched; they ensure that the nuclearwarhead is destroyed in the stratosphere.There is no nuclear explosion,no nuclear holocaust.The news media have portrayed thebeam weapon as a "Buck Rogers" fantasyor as a "third generation nuclearweapon" that will "militarize" space by putting bombs there.But beam weapons are neither wonder weapons nor nuclearweapons. Directed energy beams can be of intenseelectromagnetic waves of the same wavelength (laserbeams), of subatomic particles (electron beams, protonbeams, or neutral particle beams), or microwave and plasmabeams—all of which travel at or near the speed of light.The development of such beams would represent a qualitativeleap in our understanding of physical science andtechnology, similar to the leap the world made with theuse of nuclear fission.For this reason, Dr. Edward Teller, who is often calledthe "father" of the hydrogen bomb, has termed the beamweapon the most important military development sincethe atom bomb. Whether or not the United States willembark on a new "Manhattan Project" effort to developdirected energy beam weapons depends on the successof Teller's ongoing public and private interventions into4 November 1982 FUSIONSandia National LaboratoriesThe PBFA 1, a particle beam accelerator used for electron beam production andfocusing experiments in the fusion research program at Sandia National Laboratoriesin Albuquerque, N.M.military policymaking, along with the initiatives of DemocraticParty leader Lyndon H. LaRouche and the FusionEnergy Foundation, to shift this nation's defense policyaway from the absurd concepts of mutually assured destruction(MAD) and deterrence, authored by RobertMcNamara and the Rand Corporation.A new Manhattan Project, ironically, would for the firsttime in 30 years free this nation from being held hostageto the threat of nuclear holocaust. More important, asLaRouche, the Fusion Energy Foundation, and, recently,Teller have stressed, a brute force research effort in beamtechnologies would have a revolutionary impact in acceleratingthe development of nuclear fusion for energy production,the development of plasma torches for materialsprocessing and mining, and a host of other technologies.Like the NASA Apollo program in the 1960s, an all-outresearch program in this frontier science area would spurindustrial productivity and revive the U.S. economy, at the

to Stop Nuclear Warsame time making the industrialization and developmentof the underdeveloped sector a reality.The development of a beam weapon will open up a newchapter in man's history: the age of plasma technologies.These new technologies will have a more revolutionaryimpact on society than the introduction of electricity had100 years ago.The real source of the revolutionary impact of thesetechnologies is the scientific challenge they present. Thedevelopment of a beam weapon would require the resolutionof the most profound problems of theoretical physicsof the past several centuries.How a <strong>Beam</strong> Weapon WorksA beam weapon system would have to meet the samerequirements as any other conventional defense systemagainst ballistic missiles. The Los Alamos National Laboratory's1980 report on ballistic missile defense specifiedthe problems to solve as follows: (1) early warning thathostile ICBMs have been launched; (2) detection and assessmentof the threat; (3) derivation of trajectories andprediction of targets; (4) discrimination of warheads, reentryvehicles, and decoys; (5) targeting the interceptor (thebeam); (6) guidance of the beam; and (7) destruction ofthe target.Over the past 15 years, both the United States and theSoviet Union have perfected the early warning technologyusing satellites, and both countries routinely monitor allmissile launchings. The more refined assessments are nowaccomplished using ground-based radar, but there are severalnew technologies that will greatly enhance the quickdetermination of where a missile is coming from, how fastit is going, and what its target is, and also discriminatebetween those missiles meant to hit their mark and thedecoys.The Los Alamos group has stated that the best way ofusing these new technologies for the job is to launch rocketborneprobes into trajectories above the atmosphereequipped with (1) a long wavelength infrared sensor technologythat can detect fuel tanks in the hostile missile atdistances of 3,500 miles, and (2) computer and communicationstechnologies that can handle the data for as manyas 20,000 targets in the infrared telescope's field of view.Once the necessary information is processed, of course,the beam weapon must be aimed and must hit and destroyits target, as well as assess whether another shot is necessary.The technologies needed here are completely new,requiring unique solutions: Aiming a beam weapon at atarget 3,500 miles away is like hitting an image about .00003degrees wide—the same as a piece of thread seen at 100meters—while it is moving at 20,000 feet per second!It is generally agreed that the aiming and tracking technologiesare within our scientific grasp today. What is requiredis concentrated work on the problem using existingoptical systems (there are now cameras on civilian satellitesthat can point to a region of the sky with the accuracyrequired by a beam weapon) and fourth-generation gyroscopesnow on the drawing boards.The more difficult task will be powering the beam to thetarget with energy enough to destroy the target and energyof a sort that can be absorbed by the target so that it willbe disabled.These three problems—power, energy, and absorptionby the target—are solved differently by each of the fourtypes of beam weapons proposed: laser beams, particlebeams, and microwave and plasma beams. However, allof them appear capable in principle of generating the requiredpower and energy in a form efficiently absorbedby the missile.The timetable is as follows: Within five years we couldhave a hybrid beam weapon system with an on-groundlaser and a mirror in space (see figure). This would offercomplete protection against an accidental ICBM launch oran attack by a runaway third power. In another ten years,a second-generation beam system could give us substantialprotection; and in fifteen years, more advanced and muchmore attractive possibilities, like the X-ray laser, could provideus with defense against all-out nuclear attacks.Laser <strong>Beam</strong> <strong>Weapons</strong>Laser beams are perhaps the most familiar of the beamtechnologies. A laser beam consists of a beam of veryintense, single wavelength electromagnetic waves, eitherlight or high energy X-rays. Because the wavelengths oflight are coherent—all the same frequency and phase—the beam of light can be focused very precisely.There are four different types of lasers considered, allbeing researched at U.S. national laboratories both forweapons and fusion energy applications:(1) In a gas laser, such as carbon dioxide, a burning gasis suddenly compressed and the resulting energy distributionis stimulated to emit single frequency light at highenergies. Huge gas lasers are now being used here and inJapan in the nuclear fusion effort.(2) A chemical laser uses a gaseous medium in which achemical reaction is induced, the products of which thenemit laser light. The Soviet Union last year in tests usedsuch a laser to down a ballistic missile.(3) An electron discharge laser uses the replaceable energyfrom an electron beam to create the source for laserlight. This is the most attractive laser for space use becauseits energy source is not a chemical fuel that is used up butrather the electricity that drives the electron beam.(4) There are two kinds of more speculative lasers thathave not been perfected technologically but that promisethe advantages of energy density and flexibility: the X-raylaser and the free-electron laser.The X-ray laser, tested in the United States in 1981 andopenly discussed in the literature last year, uses the X-radiation produced by a small nuclear explosive to "pump"FUSION November 1982 5

ods of a heavy metal medium that then releases its storedenergy in a very concentrated, short pulse of X-rays.The X-ray laser has the capability of such high powersand such efficient missile destruction (because the targetefficiently absorbs the X-rays) that it is widely recognizedas the most promising long-range laser for ballistic missiledefense based in space. Although just a single pulse, theX-ray laser delivers thousands of times more energy perpulse than conventional lasers." Given the state of technological development of the opticaland power systems, there is not a competent scientistwho doubts that a laser weapon capable of destroying anICBM can be built. It is critical to understand that the mostimportant objections to beam weapons, especially laserweapons, are political and military, not scientific.Particle <strong>Beam</strong> <strong>Weapons</strong>Particle beams, like lasers, deliver energy in a highlycontrolled pulse traveling at near the speed of light. Butinstead of a pulse of intense electromagnetic radiation, aparticle beam is made up of subatomic particles (specificallyelectrons or protons), neutral atoms (usually hydrogen),or macroscopic particles (usually magnetized) thatare accelerated to high speeds.The destructive power of a particle beam is the intenseshock wave that it creates in the target, which is like asmall, very powerful hammer slamming down on the target.All three types of beams have similar acceleration mechanisms,although the engineering is different: A magneticwave generated by radiowaves or a set of magnetic coilsis used to "push along" the particle. The magnetic wavestores energy from some electrical power source and transfersthis energy to the particles.The generation of electron beams is similar to the oldidea of electron acceleration used in a vacuum tube. Electronbeams are now routinely generated in the range ofmillions of electron volts, millions of amperes of current,and power densities inside the beam of trillions of wattsper square centimeter.What has continued to surprise scientists researchingelectron beams for military and civilian energy applicationsis that an intense electron beam is not actually a beam ofindividual electrons, but rather a complex structure of electronsand magnetic field. In electron beams of the orderrequired for a beam weapon, the electrons actually increasetheir intensity by forming into a tightly bundledsheaf of separate beams in a vortex structure. These structuredbeams, which propagate together inside a protectiveshell of their own magnetic fields, can then carry highercurrents and more energy longer distances at greater powerlevels.Proton beams have been a subject of intense research,especially in the Soviet Union, for the past 30 years. Theseuse an electron beam as a "seed" and then are acceleratedin their own right. One of the newer technologies is a typeof collective acceleration that uses an electromagnetic interactionto accelerate the protons to the same velocity asthe electron beam "seed." Because the protons weigh almost2,000 times as much as the electrons, having the samevelocity means that they have 2,000 times greater energy.Again, these proton beams use their self-organizing capacityto "keep up" with the electron beam seed in theaccelerator. The result is a very high quality beam withuniform energy density and uniform energy.Neutral particles, because they are not charged, as arethe electrons and protons, eliminate problem areas suchas deflection in the atmosphere and electric forces betweenthe charged particles and the background atmospherethat can degrade the efficiency and controllabilityof the beam. The technology for accelerating neutral beamswas developed in the fusion program, where high densityneutral beams are used as auxiliary heating for magneticfusion devices. Because the technologies are not availableto directly accelerate neutral atoms, the atoms (usually ofhydrogen) are first ionized and then electrically or collectivelyaccelerated. These accelerated particles are thenpassed through a dilute background gas and stripped oftheir charge, or neutralized.By spring 1983, U.S. researchers will have produced abeam of protons at an energy of 2.5 million electron volts,traveling at 99 percent of the speed of light, as the firststep in the large-scale testing of particle beam weapons.The use of macroscopic particles accelerated in a railgun—a magnetic track much like a linear induction motor—hasbeen studied for applications that range fromspace propulsion, magnetically levitated trains, inertialconfinement fusion energy, to weapons. These devices usea magnetic field to push particles (or whatever object isbeing propelled) down a track in the same way that theexhaust gases from a conventional shell push a bullet downthe barrel of a rifle. Replacing the exhaust gases is a magneticfield capable of generating much higher pressuresand therefore, much higher velocities (100 kilometers persecond).Although control and targeting would be more difficultwith a macroscopic particle beam, its unparalleled powerdensities—because of the large mass of the particle—makeit attractive as a beam weapon. According to U.S. weaponsresearchers, the Soviet Union has a large program devotedto the development of macroparticle beam anti-aircraft andantiballistic missile weapons, as well as anti-armor weaponsand antiship weapons. There is no known armor thatcan withstand even a small projectile moving at the velocitiesattainable for macroscopic particle beams.Microwave and Plasma <strong>Beam</strong>sMicrowave and plasma beams have only been discussedin the United States in the last two years, although theSoviet Union has had a large program in both areas andis estimated to be about two to three years ahead in theproduction and propagation of microwaves and in the understandingof the interaction of intense microwaves withmatter.Intense, directional microwaves are generated whenelectron beams are propagated at or near the speed oflight through a plasma. These microwave sources provideintensities many orders of magnitude greater than thoseassociated with radar; and such beams, if focused, coulddestroy delicate electronic equipment in a target.Plasma weapons offer even more of a challenge to sci-6 November 1982 FUSION

The two modes of deploying beam weapons are shown here. The ground-based beam weapon (r) is simpler and canbe developed in five years using high energy chemical lasers. A relay mirror in orbit around the earth provides aimingand tracking for the beam weapon. The longer-term space-based beam weapon design (I) shows a beam weaponmounted directly on a satellite orbiting the earth.entists, and operate very differently from other beams. Aplasma beam consists of the highest energy dense formof matter, a gas so hot that the electrons and nuclei havebeen separated. The "gas" is made up of electrically chargedparticles and the electric and magnetic fields they generate.This so-called fourth state of matter quite naturally formsinto complex structures of particles and magnetic fields,which are spontaneously created and stable. One of these,shaped like a donut, is called a plasmoid and is the mostfrequent form of spontaneous plasma-field structure. Itoccurs naturally in the form of ball lightning, and has beenreproduced on a small scale in the laboratory.Plasmoids are contained by a toroidal magnetic field thatspirals around the donut and reconnects with itself. Thismagnetic field simultaneously confines and insulates theplasmoid. Contrary to other beam weapons, for which theatmosphere hinders the guidance and propagation of thebeam, the plasma beam could not exist without it; it requiressomething to "push against" to hold in the plasma.These closed field plasma blobs are extremely stable giventheir energy density, with a lifetime measured in seconds.The exact physical mechanism involved in such closedfield structures is the subject of heated debate here (someU.S. scientists even doubt the existence of such structures).Nevertheless, the Soviet Union has had a majorresearch program in plasmoids since the middle 1950s,when the first plasmoids were produced at Lawrence LivermoreNational Laboratory in California. The first publicindication of serious interest in a high energy plasma beamin U.S. military literature appeared in an April 1982 articlethat discussed the applications of a "collective acceleratorbased on magnetically confined plasma rings."Such rings would be an almost ideal plasma weapon:They carry high energies, are stable, and can be acceleratedto very high velocities. However, how such beams couldbe guided and targeted is still a matter of speculation.The Science of the <strong>Beam</strong> WeaponAn engineering analysis shows that beam weapons forballistic missile defense can be built. The problems of detection,discrimination, target acquisition, beam generation,and target destruction are all within our scientific andtechnological capabilities. Even for a chemical laser beamweapon, which is not "off the shelf" technology, there isno question that such a weapon could be built in the nextfive years with technologies available today. Despite thisengineering certainty, the beam weapon confronts, us witha host of fundamental scientific questions when it comesto a detailed understanding of the scientific basis of beamgeneration and propagation.The fundamental scientific problems raised by the physicalphenomena occurring in the energy-dense regimesnecessary for beam weapons require working from theperspective of the German classical school of mathematicalphysics. This is not the method of analysis used by Westernscientists. In fact, the tradition of Leibniz, Gauss, Weber,Riemann, and their successors, from which all the majordiscoveries of the past period have been derived, havebeen attacked with increasing success by the British empiricistor Newtonian school.There are two main areas central to the question of thescience of the beam weapon where the Newtonian approachcannot possibly explain the phenomena actuallyobserved in experiments with high energy plasma physics.First is the question of energy. While conventional Newtonianor Maxwellian physics defines energy as an inter-FUSION November 1982 7

nally measurable, conserved, scalar quantity, this view isuntenable given the role of energy in the evolutionaryproperties of various beam weapon systems (for example,the self-organizing acceleration of proton beams).Second is the question of the direction of evolution inphysical systems. The fundamental assumptions of modernphysics insist that changes with time in a physical systemare the result of a summation of microscopic dynamics. Inother words, particle-by-particle interactions supposedly"add up" to the global behavior of a physical system. Inthis view, the quality of the global evolution is statisticaland random.The behavior of high energy plasmas overthrows thispremise. <strong>Beam</strong> weapon experiments, in fact, present strikingevidence of a kind of global causality that directs themicroscopic evolution of a physical system but is not reducibleto microscopic interactions. There is a well-documented,spontaneous self-organizing behavior tendingtoward large-scale structure and more order.These two opposing methods—Leibniz versus Newton—have been at the center of an intellectual and policy fightfor 200 years, pitting the followers of the Newtonian atomistictradition against the followers of the Leibnizian school.This is not merely an academic question today. In thiscountry, the information that is classified in both fusionenergy research and beam weapons research is the resultsof Riemannian physics that threaten the intellectual hegemonyof the Newtonian ideas. At the same time, themainstream of Soviet science is in the Riemann-Leibniztradition, which explains the lead the Soviets have in physicalresearch on relativistic beam weapons. If the UnitedStates is to develop beam weapons—as the Soviets arenow doing—the Riemannian tradition will have to prevailin U.S. science.The critics of beam weapons who challenge the verypossibility of developing this technology do so on the basisof their Newtonian physics. How can beams propagatethrough the atmosphere without losing so much energydensity that they will be unable to destroy the target? thecritics ask. The answer is in the actual behavior of theplasma itself, which is not describable by means of conventionalparticle-based plasma physics.The basic point of physical science is that a plasma isnot in any real sense made up of charged particles andfields. To define a plasma in this way is much like sayinga human being can be understood by studying the resultof combining billions of single cells and the fluids aroundthem. Rigorously, the problem a Newtonian faces whenconfronting these phenomena is that the causality for themacroscopic behavior is not contained at the microscopicparts of the system. The microscopic entities exist, butthey are not primary; they are determined by the sameglobal causality that shapes the general evolution of thesystem.The Plasma AgeThe development of beam weapons will not only freehumanity from the insanity of having no defense againstnuclear war; it will bring mankind out of the atomic ageand into the plasma age, where we will be able to mastertechnologies using the highest energy form of matter knowntoday.Mastery of a plasma would put at our command (1) anenergy source—nuclear fusion—that has an unlimited fuelsupply taken from seawater and is cheap, clean, and inherentlysafe; (2) access to a supply of raw materials thatwould be virtually inexhaustible through the technologyof a fusion torch, which is capable of refining the lowestgrade ores economically; and (3) new materials processingtechnologies that allow the creation of nuclear-tailoredmaterials (isotope separation on a large scale), the degradationof radioactive wastes, and the ultimate recyclingof wastes, using the plasma torch.This is not science fiction, but what we can do in thenext few years—if we win the policy fight for an Apollostyleprogram to develop beam technologies.Real National Security<strong>Beam</strong> weapons are inherently defensive technologies.The most important military consequence of this fact isthat the national security of a country is not substantiallyaffected if its putative adversary obtains these technologies.This, as Teller and the FEF have pointed out, removesany rationale behind the Schlesinger-authored securityclassification of the basic scientific research in the laserfusion program and in other beam-related areas. Such classificationhas hindered not helped the advancement ofbeam weapon research and scientific research in general.For the layman trying to understand the military policyfight and how it intersects with the nuclear freeze movement,the important thing to keep in mind is the relationshipof economic growth to national security. McNamara,Schlesinger, General Maxwell Taylor, and other freezeleaders advocate a postindustrial society, a curb on advancedtechnology, and new wars fought with conventionalweapons to depopulate the Third World.The traditional American military strategy of "winningthe peace," nation-building domestically and abroad, hasbeen the very opposite. This was the tradition in whichGeneral MacArthur rebuilt Japan, in which PresidentEisenhower proposed the Atoms for Peace program, andin which Admiral Rickover forged the Nuclear Navy andthe civilian nuclear program as well.The only way for the United States to ensure its nationalsecurity is through the kind of rapid economic developmentthat would result from a new Apollo program for thefrontier science area of beam weapons. Real national securityrests on economic growth, technological development,and human advancement that simultaneously providea strong military and the objective self-interest thatmake war unlikely, if not unnecessary. A country producingnew resources (rather than fighting for old ones), educatingand training its citizens (rather than being plaguedby unemployment or apparent overpopulation), and providinga hopeful future for its people, is a country withreal national security.Steven Bardwell, a plasma physicist, is editor-in-chief ofFusion. This article was adapted from his comprehensivereport on beam weapons available from the FEF at $250.8 November 1982 FUSION

SYSTEMSANALYSISWhite Collar Genocideby Lyndon H. LaRouche, jr.Part 2

EDITOR'S NOTE"To replace Nazi-smelling eugenics, a new pseudosciencehas been cooked up, and promoted as the putative'scientific' basis for genocidal policies such as the Carteradministration's Ciobal 2000 and Global Futures. ThatMalthusian pseudoscience in currency today is called'systems analysis.' "The proof of this thesis, that systems analysis is nothingbut a rationale for policies leading to genocide, is thesubject of part 1 of this provocative article by FEF boardmember Lyndon H. LaRouche, Jr. (see October 1982Fusion, p. 3). Systems analysis, LaRouche demonstrates,as a "linear equilibrium model," is a doctrine of genocidebecause it ignores the role of technological innovation ineconomies and thereby precludes those very policiesessential not only to support population growth but topermit the maintenance of even a fixed level of population.Under present world economic conditions, systemsanalysis is leading to mass-murder on a scale two ordersof magnitude greater than that perpetrated by the Austrianhippie Adolf Hitler.After noting that this evil methodology infects circles inboth the East and West—including the State Departmentauthors of the Global 2000 Report, the Draper Fund/Population Crisis Committee, the Aspen Institute, andother neo-Malthusian institutions based in the West; thePeking-allied Pol Pot regime that murdered 40 percent ofthe population of Kampuchea between 1975 and 1978;and the Gvishiani faction in the Soviet bureaucracy linkedto the Vienna International Institute for Applied SystemsAnalysis—LaRouche traces the origin of modern systemsanalysis to the immoral "hedonistic calculus" of utilitarianphilosopher Jeremy Bentham. Bentham, and followinghim the British economists John Stuart Mill and WilliamJevons, founders of the modern doctrine of marginalutility, rejected the possibility of founding a universalscience of economics and instead maintained that economicprocesses are determined by the aggregation ofmarginal pleasure and pain experienced by individualbuyers and sellers.This pseudoscientific theory was continued by AlfredMarshall and J. M. Keynes and finally appeared in its mostradical form in the modern doctrine and practice ofsystems analysis, typified by the influential Theory ofGames and Economic Behavior coauthored by the mathematicianJohn von Neumann and the Vienna neopositivistOskar Morgenstern. Like all econometrics today, Theoryof Games degrades economies to mechanical sorts oflinear equilibrium models, which are axiomatically entropicprocesses; it considers nothing bearing on thosecrucial processes by which the productivity of labor risesor falls. As a policy-making tool, applied to "macroeconomics," systems analysis leads inescapably to economicdevolution (depression).There has been an equally continuous tradition ofeconomic science opposing the marginal utility/systemsanalysis disease: the Platonic theory of scientific inquiryand practice, based on uncovering the negentropic qualityof natural law. This theory is the most powerful weaponnot only for destroying Malthusianism but also for buildingthe technologies required to prevent genocide.In part 1 of this article, LaRouche shows the coherenceof the Platonic scientific tradition, leading up to his owndiscoveries as an economic scientist: Kepler's proof, in hisworks founding modern mathematical physics, that theIndicted Nazi leaders in the dock at the Nuremberg Tribunal October 1946, await sentencing for their crimes againsthumanity. In the first row of the dock (from left) are Goering, Hess, and von Ribbentrop.10 November 1982 FUSION

universe is fundamentally negentropic, not entropic;Leibniz's insight (which grew out of hlis training in bothGerman cameralism and French mercantilism) into thebroad implications of the heat-powered machine—"bywhich one man can accomplish the work of a hundredothers"—that successive rises in productivity, grounded inadvancing technology, is the general principle of humanexistence; the unifying thesis of the anti-free trade AmericanSystem economics of Alexander Hamilton, HenryCarey, and other patriots that value is located in "artificiallabor," human improvements in raw nature; the rigorousproof by Riemannian physics (Bernhard Riemann, GeorgCantor, and their tradition in German 19th century science)that the Second Law of Thermodynamics, the socalledlaw of entropy, is a groundless fiction; and thenegentropic outlook underlying the Judeo-Christian tradition,exemplified by the consubstantiality doctrine ofApostolic Christianity. LaRouche shows that far from beinga merely theological issue of no importance to science,Apostolic Christianity's insistence on the universe as aprocess of lawfully ordered continuing creation is tracedin literature to Plato's notion of the hypothesis of thehigher hypothesis, which was the fundamental principle(often explicitly so) guiding the work of Riemann, Cantor,and every other major discoverer in the work of continentalscience.LaRouche then establishes the notion of potential relativepopulation-density as the central principle of Platoniceconomic science: "We must interpret all actual andproposed changes in society's productive behavior by thesingle parameter: Do such changes increase or fail toincrease society's potential relative population-density?"Taking the example of resource exploitation, he demonstrateshow this parameter functions as a measure of asociety's progress: If a society remains within a relativelyfixed level of technology, the variety of natural resourcesavailable to it is delimited, and the cost of exploiting theseresources—the portion of the labor force that must beallotted to exploitation—must tend to rise through apparentdepletion of the richest grades of ore. Less is producedper capita of the work force and per capita for the entirepopulation. The relative potential population-density ofthe society falls accordingly. "Even the maintenance of afixed level of population requires a constant change inhuman behavior, constant advances in the general levelof technology of practice," LaRouche concludes.From there, LaRouche argues that man's successfulmastery of the universe, as measured by successive increasesin potential relative population-density, is the basisof all science. Science is emphatically not a collection ofisolated scientific experiments.LaRouche's final point in part 1 concerns the notion ofenergy. Although advances in technology appear to correlatewith increases in the energy transmitted per capitaof the population, current notions of energy, as measuredin scalar units such as calories, joules, watts, and so forth,are inadequate to mathematically describe the causa)connection between the increasing energy density ofproduction processes and rate of increase of productivity.Geometry As the "Language of Vision'The best approach to succinct statement of the issuesinvolved in study of the "energy" problem is found byreferencing Kepler's three major published writings. 1 Admittedly,this writer did not have competent command ofKepler's writings, or of many other materials now in hispossession, back in 1952. His principal methodologicalguides were a hatred of the mechanistic method, and apositive knowledge adduced from a few works of Leibniz 2plus the fruit of his own de novo refutation of ImmanuelKant. However, the conclusions which this writer reachedin 1952, and which continued to be his independentreference-points-in-chief into the 1960s, have proven, notaccidentally, to be congruent with the superior quality ofargument to be developed directly with reference to thework of Kepler and other resources added to the writer'srepertoire at various points over the past two decades.It were better to employ the improved form of argumentbrought directly in reach by resources not at the writer'soriginal disposal, than to burden the reader with the morelaborious approach the writer actually employed to developthe views described here.One must appreciate the writer's anger at the wastedyears occupied in accomplishing something which hemight have more quickly accomplished and better, hadthe educational institutions to which he was exposed notbeen degenerated to the point their- decay had alreadyreached during his childhood and youth—long before the"new math" began destroying students' scientific potentials.He is therefore resolved that new generations ofchildren and youth shall not be obliged to suffer the samecostly deprivation, that the precious sources which everystudent ought to have available hereafter be available tothem all. If there is any complaint to the effect that thewriter is presently employing an argument considerablyimproved over that he actually employed to reach thesesame conclusions, the reader should not blame the writerfor that, or regard it as in any way "intellectual dishonesty"that he honors a right method superior to the morelaborious course he actually employed. Educate our studentsproperly and such discrepancies need not arise inthe future.In respect to mathematical knowledge, the central issueof scientific work has been the question whether or notthe universe as we see it is an adequate representation ofthe universe as it is.If we see the answer to the question we have justidentified rightly, we are led by rigorous steps to thediscovery that there is no ontologically axiomatic "energy"in the universe, but that the phenomena of energy are adetermined aspect of a more fundamental principle ofour universe: negentropy.This correction in thermodynamics, from the vantagepoint of Riemannian physics, is indispensable for solvingthe crucial problem of economic science. It leads us to asuccessful determination of the equivalence between an"injection" of negentropy into the productive process,and a correlated increase in the negentropy of the pro-FUSION November 1982 11

"Proceeding from the fact that visible space ischaracterized by the case of five uniquely definedPlatonic solids, Kepler proved conclusively, with aid ofsolar observations, that visible space is not adequaterepresentation of physical space."ductive process, a negentropy measurable in terms of thework of increasing potential relative population-density.The reader will soon discover why it has been necessaryto identify the range of issues we have identified so far, tobring the report up to this point of elaboration.Proceeding from the fact that visible space is characterizedby the case of five, uniquely defined Platonic solids,Kepler proved conclusively, with aid of solar observations,that visible space is not an adequate representation ofphysical space (Figure 1). The fact that only five regularpolyhedral solids can be constructed in Euclidean space—the space of vision—proves conclusively that Euclideanspace itself is shaped by something external to it.This was not new with Kepler. Plato understood thissame point in exactly the terms we argue it here, inreporting that man does not see reality directly, but onlythe shadows of reality, as if viewing such shadows in acave. Saint Paul writes that we see as in a mirror, darkly.Plato's scientific knowledge was enormous, in fact, aknowledge for which he was significantly indebted to theleading scientific institution of that period, the Cyrenaictemple of Amon, at which the uniqueness of the fivesolids was proven during Plato's lifetime. It is Plato's reportof those solids and of their significance for physics whichresulted in their designation as the "five Platonic solids."The principal stimulus for the revival of this Platonicapproach to physics in relatively modern times was thescientific work of Cardinal Nicholas of Cusa, includingCusa's anticipation of modern topology in such locationsas his "Sphere Play," and Cusa's general emphasis on acritical examination of the work of Archimedes.The generation of Cusa's Italian successors, especiallythe circle associated with Leonardo da Vinci, and thatgeneration's successors in the School of Raphael, hadextensively explored all of the questions on which Keplerfocused his own work. The Divina Proportione of LucaPacioli, a collaborator of Leonardo, and of Pacioli's studentAlbrecht Durer, as well as Giordano Bruno, are the immediatesources most strongly influencing Kepler in thedirection of work he undertook. 3Also probably influential on Kepler was the work onwell-tempered harmony by Bishop Zarlino, the latter alsothe proper ancestor of Bach, the late Mozart and, mostexplicitly, the late work of Beethoven. Although the workof al-Farrabi was key to pre-14th-century developments inmusic in Europe, and Leonardo is also influential on thismatter, the agreement between Kepler and Zarlino oncrucial points is most striking, perhaps conclusive, evidenceof such an explicit indebtedness.It was well developed before Kepler that principles ofaction in living systems, and other phenomena as well,were coherent with the so-called divine proportion, thegolden mean. Kepler, who stressed this point, noted thecentral position of the golden mean relationship in theuniqueness of the five Platonic solids, as had his predecessors.4 To test whether or not this predominance was infact a lawful ordering of the universe, Kepler attacked themost conclusive body of evidence available to test thishypothesis: the solar orbits.He proved that the orbits, including their variations inorbital velocity, were fully subsumed by the same principlesof geometry which prescribed the well-tempered, 12-tone octave scale as the only lawfully determined tonalvalues and relationships within music. Although Keplermerely approximated values later determined more exactlyby Riemann's comprehensive solution to ellipticalfunctions, this element of approximation in Kepler's mathematicshas proven to be no source of defect in the proofas a whole, as a proof of the hypothesis. Today, Kepler'smethod is eerily superior in quality as well as quantity to12 November 1982 FUSION

any alternative method for describing the orbits of planetsand moons. 5The proportions of the spiral nebulas are properly takenas complementary proof of Kepler's hypothesis, relationshipscoherent with the same principle of the five Platonicsolids Kepler employed for the solar Orbits. The fact thatthe principal asteroids, despite wildly different orbitalpaths, all "sing" in the same tone, is also collateral reenforcementof the notion of uniqueness for Kepler'sproof. 6As Leibniz stressed the point against Descartes/ Kepler'sproof subsumes the necessary conclusion that real physicaltransformations occur not as straight-line action in visiblespace, but in a manner implied by the Archimedean spiral,as the Riemannian theory of a complex variable elaboratesthis for physics today.What, then, is the higher order of physical space, whichshapes the characteristic form of transformations seen invisible space? This, since Kepler's proof, has been thecentral question of scientific work. In brief: How do wedefine a higher continuous manifold, such that the characteristicform of action is vortical (helical), such thatprojections of that higher space into the visible domainnecessarily determine all of the characteristic features ofthe visible domain?This is the problem which Riemann essentially solved,beginning with the statement of his program as early asthe 1854 habilitation dissertation. There are omissions inRiemann's program, as he himself located such a deficiencyin respect to the concluding subsection of his 1854dissertation. These deficiencies, which bear on the determinationof number relationship in a Riemannian form ofcontinuous manifold, are implicitly overcome by the 1871-1883 work of Georg Cantor. For related reasons, it was notaccidental that this writer discovered the significance ofthe 1854 dissertation for himself from the vantage point ofCantor's notion of the ordering of transfinites. ThisCantor-Riemann method for analysis of higher-order continuousmanifolds supplies, and uniquely so, the basis forsolving the indicated problem of economic analysis.We now elaborate the principal conceptual steps bywhich the meaning of the 1854 habilitation dissertation isto be correctly understood.Visible space, with its apparent objects floating aboutand sometimes bumping one another, is what is describedas a discrete manifold. Our problem is that of defining acontinuous manifold, in which individual objects (quaobjects) have no self-evident existence, but only a determinedexistence. The projection of the events occurringin this continuous manifold must account fully for all ofthe visible events of the discrete manifold.The objects of visible space are not real objects, at leastnot in the sense naive opinion mistakenly assumes themto be ontologically self-evident. They are shadows ofreality, and are real as shadows. Shadows do not efficientlyact directly on one another to cause the movementsreflected as movements of shadows. They do not efficientlybump, nor do they act upon another efficiently ata distance. There is no efficient causality contained adequatelywithin visible space as such.Efficient action, including our own efficient actions,occurs "out of sight"—as the movement of ducks swimmingon the surface of the water occurs beneath thesurface. So, in attempting to comprehend efficient physicalspace, we attempt to look beyond the "surface." Weimagine the aspect of physical space which we see asbroadly analogous to the projection of action in threecoordinatespace and time upon two-coordinate spaceand time. We do not imagine that the higher-order spacewe infer "looks like" the images we construct to representit. Rather, we use the analogies of projection of threecoordinatespace upon two-coordinate and one-coordinatespace as a "language of vision," and through refinementof use of that language, through observation andexperiment, we make that language rigorous.This heurism of "language of vision" we know to bevalid, and uniquely so. If we know, as we do, that theordering of events in visible space is geometrically rational,then reality, the projection of a continuous manifold upona discrete manifold, is coherent with the rationality itprojects. Therefore, we are permitted, on principle, toemploy images which we construct in terms agreeable toour developed visual-conceptual powers, to representvisually thus a world we in fact cannot see. Our choicesof imagery in this are limited to those options which aresufficient and necessary to describe a continuous manifoldconsistent with projections into a discrete manifold correspondingto the shadow-reality of our visible space.The second of the two special problems is that ofdefining a continuous manifold which has an intrinsicmetrical quality of action. Although the metrical qualitiesof a continuous manifold must be qualitatively differentfrom those of a discrete manifold, there must be somemetrical feature of the continuous manifold which ac-FUSION November 1982 13

counts for a properly adduced, characteristic metricalfeature of our discrete manifold. This latter pair of problemsof projections is the crux of Riemann's 1854 habitationdissertation.There is only one kind of continuous manifold whichhas both the needed, inherent metrical qualities, andwhich subsumes efficiency of local existence under thatmetrical quality. This kind of manifold is one in which theexisting order, denotable arbitrarily by n, is passing overto a higher-order configuration, denotable by n + 1.The most appropriate term in the mathematics lexiconto describe the characteristic action of such an n into n+1manifold is negentropy. What appears to us as rigorouslydefined negentropic phenomena in terms of the visiblemanifold, is a projection of the characteristic, universalnegentropy of the continuous manifold. .For the purposes of this report, it is sufficient to directour attention only to the most crucial features of thisprojective correspondence.Our central problem is this.' Although we know thecontinuous manifold to be in projective correspondenceto our vision of the discrete manifold, there are characteristicdifferences between continuous manifolds anddiscrete manifolds, such that one cannot simply carry overcertain kinds of secondary phenomena of the one to theother.In a continuous manifold, for example, there can beobjects (singularities), but no ontologically self-evidentobjects of the sort which naive common sense imaginesitself to recognize in visible space. Similarly, there is noaction at a distance among objects in a continous manifold.There are two leading additional considerations mostcrucial to defining the proper selection of continuousmanifold.Although the objects of visible space are merely shadowsof reality, the shadows each correspond to an existencewhich is itself causally efficient. This is an impossiblecondition within a continuous manifold of a fixed order.That is the gist of Riemannian physics.This leaves us with an important problem to be resolved,a different sort of problem than we were obliged toemphasize on the pathway to Riemann's 1854 dissertation.First, we were obliged to specify the conditions a continuousmanifold must satisfy. Having defined those conditions,we must next concentrate efforts on developingmethods for representing the conceptions we havereached in that fashion. Although one can develop geometricimages of the transition from a specific n-manifoldto a successor n + 7-manifold rather directly, how do wegeneralize all such transformations, in the sense of generalizationassociated with geometrical mathematics as awhole? What we require, in order to represent such largerconceptions within our language of vision, is a methodfor defining coordinates in a three-space (visualizableimage of space), such that conical-helical action (for example)in a three-space of such coordinates might subsumeimplicitly the general form of successive transformations.To this purpose, Cantor's notion of the orderingsof transfinites is indispensable.14 November 1982 FUSION

Figure 2KEPLER'S PLANETARY GEOMETRYKepler developed his "Three Laws of Planetary Motion,"upon which modern astronomy is based, outof his certainty that the universe was coherent andthe Platonic insight that the laws of physics are thesame on the Earth as in the heavens. His first lawsays that a planet sweeps out equal areas in equaltime, no matter how far it is from the Sun. Thesecond law states that the orbits of the planets areellipses, with the Sun at one focus of the ellipse.Kepler's third law, which he considered to be his"great law," says that the ratio of the cube of thedistance of a planet from the Sun to the square ofthe time it takes the planet to go around the Sun isthe same for every planet.Kepler insisted that the existence of the solarsystem in its present form must also be explained. Inhis years' long search to find a lawful principle toexplain why the planets were spaced from the Sunin the distances that we empirically know them tobe, Kepler initially determined that these distancescould be derived by considering the planets to travelon orbits around spheres whose distance, one fromthe other, is determined by placing a three-dimensional,regular solid figure between each sphere, asshown in the model here. First, Kepler inscribed acube inside a globe, representing the orbit of thefarthermost known planet, Saturn. Then he put anotherglobe inside the cube, representing the orbitof Jupiter. Then between the globes representingJupiter and Mars, he put a tetrahedron. Around theEarth, Kepler placed a dodecahedron; inside theglobe representing the Earth, he placed an icosahedron;and between Venus and Mercury, he putan octahedron.As the accompanying table shows, Kepler was ableto account for the six known planets and theirdistances with this construction. Later he rejectedthe specific construction using the Platonic solids,but he continued to point to it as an example of hisgeometrical method.AVERAGE MEAN DISTANCES OFPLANETS FROM THE SUN(measured in astronomical units, A.U.; the distanceof Earth from the Sun equals 1 A.Li.—actually 92.9million miles)Kepler'sModernPlanet Polyhedrons AstronomyMercury .400 .387Venus .738 .723Earth 1 1Mars 1.432 1.524Jupiter 4.881 5.203Saturn 9.726 9.539For spaces of orders n, n + 1, n+2, ... we are able inthe highest practical importance. Yet, a preliminary theayseach case (actually or at least implicitly) to define arraysoretical step toward that undertaking is far more impor-of characteristic relationships peculiar to that order of tant. The first, indispensable step, without which thespace. Yet, each such array for one space must be different ;ntfrom the array proper to another. Insofar as we can treat =atsequences of arrays, arrays each distinguished by a fixedorder of space, as an ordered sequence, our problem ofrepresentation becomes implicitly solvable. The changes ;esin the array denoted by n to yield the array denoted byn + 1, are treated as the characteristic feature of the transformationfrom n to n + 1. The characteristic feature ofismasterdetailed elaboration cannot begin to be developed, is toa clear conception of this generalization as such,There are, in the best judgment of the matter we havebeen able to assemble so far, two elementary problemswhich tend to prevent educated mathematicians fromaccepting the combined work of Riemann and Cantor onits own terms.First, in order of commonplaceness, is the prevailing"extension" common to successive such characteristics ics notion of number as such. Leopold Kronecker's famous,implies the notion of ordering principle we require.To elaborate a mathematical structure for this work is ofand mind-disabling dictum, "God made the integers," isexemplary of this problem. In fact, the evidence is over-FUSION November 1982 15

Figure 3THE INADEQUACY OF PYTHAGOREAN DISTANCEAS A METRIC FOR PHYSICAL SPACEIn Euclidean-Cartesian two-dimensional space, themagnitude of displacement ds of a point is determinedin terms of the changes of its coordinates dx,and dx, by the Pythagorean formula ds 2 = dx, 2 + dx, 2 ,or ds = v 1 dx, 2 + dx 2 2 . (More familiarly, this is h 2 = a 2+ b 2 and h = %• a 2 + b 2 , where h is the hypotenuseand a and b are the sides of a triangle).In the generalization of Euclidean-Cartesian spaceto any number n, of dimensions, the correspondingformula is ds = J dx, 2 + dx 2 2 +. . + dx 2 n . Anotherway of writing this isVnn£ dx 2 , where £1-1 wrepresents the summation of all numbers from 1 asthe index, i, to n.This notion of magnitude of linear displacementas the model of the general notion of metric hasbecome a major stumbling block for the furtherdevelopment of physics and economics, because itexcludes the possibility of negentropic developmentin the universe. Suppose, however, that instead ofmeasuring the internal geometry of a given manifoldwith such a displacement, we look at the change inthe magnitude of this displacement as the manifoldincreases its number of degrees of freedom, ordimensionality. If we call ds, the displacement forthe first space, and ds 2 the displacement for thesecond, then a change in the displacement, d(ds) ord 2 s can be heuristically written:jn/n+fd 2 s=d 1 s-d 2 s=-^2: dXi 2 --l/Z dx; 2 .This formula expresses the magnitude of thechange in metric effected by a change in the orderof the space from n to n + 1 dimensions.whelming, that only what we term "complex numbers"have any primary sort of existence as number in respectto the metrical characteristic of our universe. The notionthat the "simplest object" must be the most elementaryis the broader fallacy underlying this.Second, topology is burdened by repetition of thenonsense-assertion that there are no metrical features totopology as such. This is a tricky problem, since thedevelopment of topology has depended upon reexamininggeometry freed of the burden of those metrical assumptionswe associate with the visible manifold. Suchmetrics had to be discarded, in order to develop topologyto the point at which the metrical features of a continuousmanifold could be rigorously defined in such a way thatwe could be certain we had not carried over some exogeneousassumption borrowed from our naive view of thediscrete manifold.Plainly, many students of topology have forgotten whyLeibniz undertook analysis situs in the first place. In orderto adduce the characteristics of a continuous manifold interms of characteristic transformations in a visible, discretemanifold, it was indispensable to discard from geometryof visible space only those features which were peculiarbv their nature to a discrete manifold. We assume forproperly obvious reasons, that those features of geometry• which are not idiosyncrasies of discrete manifolds are thequalities carried over by projection from the continuousto the discrete. The end-object of this process of searchingwhich is called topology, was properly to discover themetrical characteristics intrinsic to the continuous manifold.The topologist is too often like the fellow who took ajourney to another city to fetch his bride, but, midjourney,became so fascinated with this excursion itself, that hebegan walking around aimlessly in one direction, thenanother, having forgotten what the purpose of the departurehad been.What we require is a characteristic metrical feature ofa negentropic continuous manifold, which informs us howwe must interpret the distance-function of phase spacetransformations in a discrete manifold, to the effect thatthis proper interpretation of phase spaces yields a characteristic,metrical feature of the discrete manifold, whichis in projective agreement with the corresponding metricalfeature of action in the negentropic continuous manifold.The simple Pythagorean expression,ds = i Z (dx,) 2will not do (Figure 3). We require a measurement of d 2 sfor the case that the manifold is undergoing transformationfrom order n to n + 1.Such a correct approach to the metrical features of thediscrete manifold defines this value, d 2 s, as the uniquemeasure of incremental work. This work must be definedin respect to the work of increasing the potential relativepopulation-density of society through technological ad-16 November 1982 FUSION

Figure 4THE LOGARITHMIC (SELF-SIMILAR)SPIRAL ON A CONEThe conical form of the logarithmic spiral is generatedas the trajectory of a moving point on a cone, whoseangle a of ascent or descent, relative to the apex of thecone, remains constant (a). This curve has the characteristicproperty that each successive "loop," differsfrom the preceeding loop only in scale, with a constantof proportionality that depends only on the angle ofascent or descent chosen. Thus, in a "base 2" spiral, (b)each successive loop moving away from the apex ofthe cone, doubles the distance from the apex.An easily constructed instrument for generating suchspirals will be described in a forthcoming Fusion article.A rough approximation can be obtained by folding aquarter-circle as shown in (c). A circle, AB, is drawnwith radius half that of the original circle. Then, astraight line is drawn from the point D to B (this willform an approximation of the first loop of the spiral).Next, a circle with half the radius of circle AB is drawn,EF. The corresponding straight line, AF, gives an approximationto the second loop of the spiral. Thisprocess can be continued to generate a conical spiralwith almost constant pitch. d) Model of a cone constructed of clear acetatevancement. The negentropy of the system is the work ofthe system.For related reaons, Riemann's 1859 hypothesis, settingforth the determination of acoustical shock waves underexperimental conditions satisfying his "infinite cylinder"case, is the prototype of all valid scientific experiments,insofar as any experiments can be said to have authoritativebearing on knowledge of the lawful ordering of ouruniverse. It is for this reason that the 1859 paper has beenthe model for crucial experimental treatment of relativisticphenomena in a broad assortment of cases outside ofaerodynamics."Physical Topology"That we be neither misunderstood nor give premise formisinterpretation of what we are about to say, we stipulateat the outset that the imagery we now introduce is achoice of pedagogical device—if it pleases you to call itsuch, a "heurism."This writer does not assert that this is necessarily themost appropriate heurism which might be cooked up. Heinsists merely that it is the pedagogical imagery whichpresently appears the best compromise between the capacitiesof students and his own mental image of thematerial to be presented. Additionally, it contains no erroras an image with respect to the points to be presentedwith aid of such a representation.IImagine, first, a hollow sphere. You, the observer, aresituated such that your eyes are looking into the spherefrom a point we shall designate the "north pole" of thatbody. This sphere's south pole is sitting upon a flat surface.As you, the observer, attempt to view the images projectedon that flat surface outside the sphere, what you are ableto see is a shadow of those images. The shadow is thatFUSION November 1982 17

Figure 5CONICAL SPIRALS ANDTHE WELL-TEMPERED SCALEA striking example of the power of the self-similarconical spiral is its use for the construction of thewell-tempered scale. If we project a "base 2" spiral(one whose inclination is such that a 360° loop ofthe spiral going up to the apex halves the distanceto the apex), on the plane of its base perpendicularly(see a), we will create a flat exponential spiral. Bydividing the circle of the base into 12 equal sections(see b), the intersections of these 12 radii with theflat spiral determine a sequence of distances fromthe center of the circle to the spiral. These increasingdistances are the lengths of the vibrating strings(assuming constant tension) that will produce the 12Model by Dorothea Bunnell; photo Carlos de Hoyostones of a well-tempered scale. If we call the longestdistance the note C, the succeeding lengths movinginward on the spiral will correspond to D, D#, E, F,f#, and so on, as shown. Pairs of points correspondingto the same interval (for example, C and E withB and D, the major third) subtend the same angle atthe center of the circle. In this representation, intervalscorrespond to angles and transposition fromone tone to another to rotation through some angle.The strings on the model shown in c can beplucked to play the well-tempered scale.defined by a line of sight drawn through the surface ofthe sphere and to the points of the image on the flatsurface. You see the projection of that image on theinterior surface of the sphere.Now, to extend the description of this pedagogicalimagery to include other considerations: The images onthe plane surface are themselves projected images. Theyare the composite image of—in first approximation—severaldistinct cones, whose central axis may, in the simplestcase, intersect at some common point on the surface.The reader might, for example, construct a logarithmicspiral on a cone (Figure 4). Divide a circle into four equalparts, by means of two perpendicular diameters. In onequadrant, mark off inscribed quarter-circles by halving theradius successively. Now construct diagonal lines betweenthe arcs, in the obvious way, and then cut out and foldto-jointhe quarter-circle to form a cone. 8 This is anexcellent exercise for the amateur mathematician, whohaving completed this construction, should referenceJacob Steiner's program for geometry instruction as toprinciples of geometric proof, and should also comparethe result of this construction with Leonhard Euler's determinationof the "natural logarithmic" value.Having constructed the cone in this manner, imaginethat the spiral (helix, vortex) so portrayed had beengenerated by continuous, homogeneous action, startingfrom the apex of the cone. This, of course, requires afunction in terms of two variables. The first variable is adistance of displacement along the midline-axis of thecone, from the apex of the cone toward the base. The1« November 1982 FUSION