Boosted Regression (Boosting): An introductory tutorial and a Stata ...

Boosted Regression (Boosting): An introductory tutorial and a Stata ...

Boosted Regression (Boosting): An introductory tutorial and a Stata ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

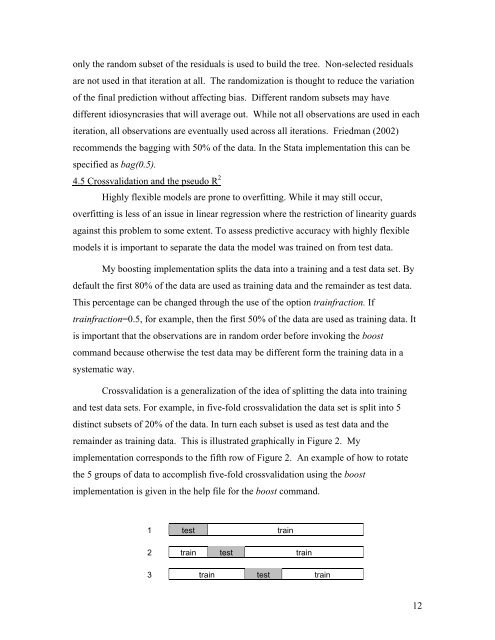

only the r<strong>and</strong>om subset of the residuals is used to build the tree. Non-selected residualsare not used in that iteration at all. The r<strong>and</strong>omization is thought to reduce the variationof the final prediction without affecting bias. Different r<strong>and</strong>om subsets may havedifferent idiosyncrasies that will average out. While not all observations are used in eachiteration, all observations are eventually used across all iterations. Friedman (2002)recommends the bagging with 50% of the data. In the <strong>Stata</strong> implementation this can bespecified as bag(0.5).4.5 Crossvalidation <strong>and</strong> the pseudo R 2Highly flexible models are prone to overfitting. While it may still occur,overfitting is less of an issue in linear regression where the restriction of linearity guardsagainst this problem to some extent. To assess predictive accuracy with highly flexiblemodels it is important to separate the data the model was trained on from test data.My boosting implementation splits the data into a training <strong>and</strong> a test data set. Bydefault the first 80% of the data are used as training data <strong>and</strong> the remainder as test data.This percentage can be changed through the use of the option trainfraction. Iftrainfraction=0.5, for example, then the first 50% of the data are used as training data. Itis important that the observations are in r<strong>and</strong>om order before invoking the boostcomm<strong>and</strong> because otherwise the test data may be different form the training data in asystematic way.Crossvalidation is a generalization of the idea of splitting the data into training<strong>and</strong> test data sets. For example, in five-fold crossvalidation the data set is split into 5distinct subsets of 20% of the data. In turn each subset is used as test data <strong>and</strong> theremainder as training data. This is illustrated graphically in Figure 2. Myimplementation corresponds to the fifth row of Figure 2. <strong>An</strong> example of how to rotatethe 5 groups of data to accomplish five-fold crossvalidation using the boostimplementation is given in the help file for the boost comm<strong>and</strong>.1 test train2 train test train3 train test train12