- Page 2 and 3:

Foundations of Python Network Progr

- Page 4 and 5:

To the Python community for creatin

- Page 6 and 7:

Contents ■Contents at a Glance ..

- Page 8 and 9:

■ CONTENTS Asking getaddrinfo() W

- Page 10 and 11:

■ CONTENTS Using Message Queues f

- Page 12 and 13:

■ CONTENTS Parsing Dates ........

- Page 14 and 15:

■ CONTENTS Telnet ...............

- Page 16 and 17:

About the Authors ■ Brandon Craig

- Page 18 and 19:

Acknowledgements This book owes its

- Page 20 and 21:

■ INTRODUCTION If you do know som

- Page 22 and 23:

C H A P T E R 1 ■ ■ ■ Introdu

- Page 24 and 25:

CHAPTER 1 ■ INTRODUCTION TO CLIEN

- Page 26 and 27:

CHAPTER 1 ■ INTRODUCTION TO CLIEN

- Page 28 and 29:

CHAPTER 1 ■ INTRODUCTION TO CLIEN

- Page 30 and 31:

CHAPTER 1 ■ INTRODUCTION TO CLIEN

- Page 32 and 33:

CHAPTER 1 ■ INTRODUCTION TO CLIEN

- Page 34 and 35:

CHAPTER 1 ■ INTRODUCTION TO CLIEN

- Page 36 and 37:

C H A P T E R 2 ■ ■ ■ UDP The

- Page 38 and 39:

CHAPTER 2 ■ UDP server with SSH.

- Page 40 and 41:

CHAPTER 2 ■ UDP them anywhere in

- Page 42 and 43:

CHAPTER 2 ■ UDP command-line argu

- Page 44 and 45:

CHAPTER 2 ■ UDP » » » » raise

- Page 46 and 47:

CHAPTER 2 ■ UDP world itself give

- Page 48 and 49:

CHAPTER 2 ■ UDP socket that is no

- Page 50 and 51:

CHAPTER 2 ■ UDP So binding to an

- Page 52 and 53:

CHAPTER 2 ■ UDP s.connect((hostna

- Page 54 and 55:

CHAPTER 2 ■ UDP else: » print >>

- Page 56 and 57:

C H A P T E R 3 ■ ■ ■ TCP The

- Page 58 and 59:

CHAPTER 3 ■ TCP situation), and t

- Page 60 and 61:

CHAPTER 3 ■ TCP » reply = recv_a

- Page 62 and 63:

CHAPTER 3 ■ TCP guess when the in

- Page 64 and 65:

CHAPTER 3 ■ TCP the system has no

- Page 66 and 67:

CHAPTER 3 ■ TCP » » » print '\

- Page 68 and 69:

CHAPTER 3 ■ TCP $ python tcp_dead

- Page 70 and 71:

CHAPTER 3 ■ TCP Using TCP Streams

- Page 72 and 73:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 74 and 75:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 76 and 77:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 78 and 79:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 80 and 81:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 82 and 83:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 84 and 85:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 86 and 87:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 88 and 89:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 90 and 91:

CHAPTER 4 ■ SOCKET NAMES AND DNS

- Page 92 and 93:

CHAPTER 5 ■ NETWORK DATA AND NETW

- Page 94 and 95:

CHAPTER 5 ■ NETWORK DATA AND NETW

- Page 96 and 97:

CHAPTER 5 ■ NETWORK DATA AND NETW

- Page 98 and 99:

CHAPTER 5 ■ NETWORK DATA AND NETW

- Page 100 and 101:

CHAPTER 5 ■ NETWORK DATA AND NETW

- Page 102 and 103:

CHAPTER 5 ■ NETWORK DATA AND NETW

- Page 104 and 105:

CHAPTER 5 ■ NETWORK DATA AND NETW

- Page 106 and 107:

C H A P T E R 6 ■ ■ ■ TLS and

- Page 108 and 109:

CHAPTER 6 ■ TLS AND SSL systems a

- Page 110 and 111:

CHAPTER 6 ■ TLS AND SSL • He wi

- Page 112 and 113:

CHAPTER 6 ■ TLS AND SSL discussio

- Page 114 and 115:

CHAPTER 6 ■ TLS AND SSL • The s

- Page 116 and 117:

CHAPTER 6 ■ TLS AND SSL The Links

- Page 118 and 119:

C H A P T E R 7 ■ ■ ■ Server

- Page 120 and 121:

CHAPTER 7 ■ SERVER ARCHITECTURE P

- Page 122 and 123:

CHAPTER 7 ■ SERVER ARCHITECTURE

- Page 124 and 125:

CHAPTER 7 ■ SERVER ARCHITECTURE N

- Page 126 and 127:

CHAPTER 7 ■ SERVER ARCHITECTURE L

- Page 128 and 129:

CHAPTER 7 ■ SERVER ARCHITECTURE F

- Page 130 and 131:

CHAPTER 7 ■ SERVER ARCHITECTURE

- Page 132 and 133:

CHAPTER 7 ■ SERVER ARCHITECTURE p

- Page 134 and 135:

CHAPTER 7 ■ SERVER ARCHITECTURE N

- Page 136 and 137:

CHAPTER 7 ■ SERVER ARCHITECTURE L

- Page 138 and 139:

CHAPTER 7 ■ SERVER ARCHITECTURE

- Page 140 and 141:

CHAPTER 7 ■ SERVER ARCHITECTURE s

- Page 142 and 143:

CHAPTER 7 ■ SERVER ARCHITECTURE c

- Page 144 and 145:

C H A P T E R 8 ■ ■ ■ Caches,

- Page 146 and 147:

CHAPTER 8 ■ CACHES, MESSAGE QUEUE

- Page 148 and 149:

CHAPTER 8 ■ CACHES, MESSAGE QUEUE

- Page 150 and 151:

CHAPTER 8 ■ CACHES, MESSAGE QUEUE

- Page 152 and 153:

CHAPTER 8 ■ CACHES, MESSAGE QUEUE

- Page 154 and 155:

CHAPTER 8 ■ CACHES, MESSAGE QUEUE

- Page 156 and 157:

C H A P T E R 9 ■ ■ ■ HTTP Th

- Page 158 and 159:

CHAPTER 9 ■ HTTP Here, the URL sp

- Page 160 and 161:

CHAPTER 9 ■ HTTP Relative URLs Ve

- Page 162 and 163:

CHAPTER 9 ■ HTTP From now on, I a

- Page 164 and 165:

CHAPTER 9 ■ HTTP • 303 See Othe

- Page 166 and 167:

CHAPTER 9 ■ HTTP You cannot tell

- Page 168 and 169:

CHAPTER 9 ■ HTTP Instead of stuff

- Page 170 and 171:

CHAPTER 9 ■ HTTP POST And APIs Al

- Page 172 and 173:

CHAPTER 9 ■ HTTP Content Type Neg

- Page 174 and 175:

CHAPTER 9 ■ HTTP HTTP Caching Man

- Page 176 and 177:

CHAPTER 9 ■ HTTP If the connectio

- Page 178 and 179:

CHAPTER 9 ■ HTTP >>> import cooki

- Page 180 and 181:

CHAPTER 9 ■ HTTP So the technique

- Page 182 and 183:

C H A P T E R 10 ■ ■ ■ Screen

- Page 184 and 185:

CHAPTER 10 ■ SCREEN SCRAPING Figu

- Page 186 and 187:

CHAPTER 10 ■ SCREEN SCRAPING cont

- Page 188 and 189:

CHAPTER 10 ■ SCREEN SCRAPING Thir

- Page 190 and 191:

CHAPTER 10 ■ SCREEN SCRAPING Ther

- Page 192 and 193:

CHAPTER 10 ■ SCREEN SCRAPING Beau

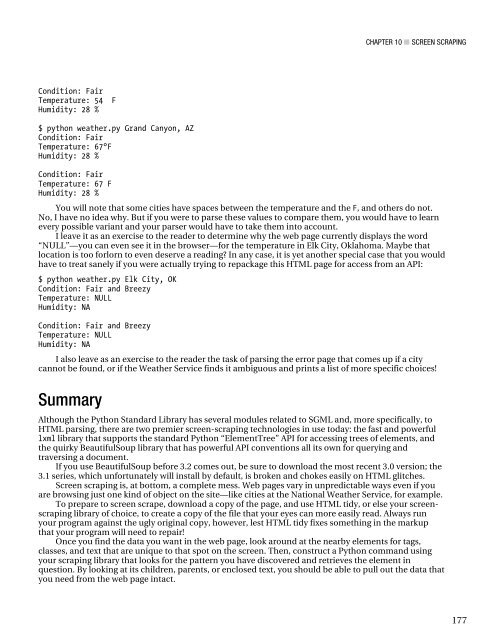

- Page 194 and 195:

CHAPTER 10 ■ SCREEN SCRAPING If y

- Page 198 and 199:

C H A P T E R 11 ■ ■ ■ Web Ap

- Page 200 and 201:

CHAPTER 11 ■ WEB APPLICATIONS Thi

- Page 202 and 203:

CHAPTER 11 ■ WEB APPLICATIONS But

- Page 204 and 205:

CHAPTER 11 ■ WEB APPLICATIONS the

- Page 206 and 207:

CHAPTER 11 ■ WEB APPLICATIONS •

- Page 208 and 209:

CHAPTER 11 ■ WEB APPLICATIONS hig

- Page 210 and 211:

CHAPTER 11 ■ WEB APPLICATIONS The

- Page 212 and 213:

CHAPTER 11 ■ WEB APPLICATIONS the

- Page 214 and 215:

CHAPTER 11 ■ WEB APPLICATIONS The

- Page 216 and 217:

C H A P T E R 12 ■ ■ ■ E-mail

- Page 218 and 219:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 220 and 221:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 222 and 223:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 224 and 225:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 226 and 227:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 228 and 229:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 230 and 231:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 232 and 233:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 234 and 235:

CHAPTER 12 ■ E-MAIL COMPOSITION A

- Page 236 and 237:

C H A P T E R 13 ■ ■ ■ SMTP A

- Page 238 and 239:

CHAPTER 13 ■ SMTP anyway. Outgoin

- Page 240 and 241:

CHAPTER 13 ■ SMTP How SMTP Is Use

- Page 242 and 243:

CHAPTER 13 ■ SMTP This mechanism

- Page 244 and 245:

CHAPTER 13 ■ SMTP s = smtplib.SMT

- Page 246 and 247:

CHAPTER 13 ■ SMTP ETRN STARTTLS X

- Page 248 and 249:

CHAPTER 13 ■ SMTP » s = smtplib.

- Page 250 and 251:

CHAPTER 13 ■ SMTP exchange mail o

- Page 252 and 253:

CHAPTER 13 ■ SMTP username = sys.

- Page 254 and 255:

C H A P T E R 14 ■ ■ ■ POP PO

- Page 256 and 257:

CHAPTER 14 ■ POP ■ Caution! Whi

- Page 258 and 259:

CHAPTER 14 ■ POP finally: » p.qu

- Page 260 and 261:

CHAPTER 14 ■ POP Subject: Backup

- Page 262 and 263:

CHAPTER 15 ■ IMAP THE IMAP PROTOC

- Page 264 and 265:

CHAPTER 15 ■ IMAP '(\\HasNoChildr

- Page 266 and 267:

CHAPTER 15 ■ IMAP Examining Folde

- Page 268 and 269:

CHAPTER 15 ■ IMAP Listing 15-5. D

- Page 270 and 271:

CHAPTER 15 ■ IMAP key that IMAP h

- Page 272 and 273:

CHAPTER 15 ■ IMAP » » print def

- Page 274 and 275:

CHAPTER 15 ■ IMAP » From: Brando

- Page 276 and 277:

CHAPTER 15 ■ IMAP • \Flagged: T

- Page 278 and 279:

CHAPTER 15 ■ IMAP An IMAP message

- Page 280 and 281:

CHAPTER 15 ■ IMAP display or summ

- Page 282 and 283:

CHAPTER 16 ■ TELNET AND SSH cloud

- Page 284 and 285:

CHAPTER 16 ■ TELNET AND SSH Unix

- Page 286 and 287:

CHAPTER 16 ■ TELNET AND SSH Do yo

- Page 288 and 289:

CHAPTER 16 ■ TELNET AND SSH As we

- Page 290 and 291:

CHAPTER 16 ■ TELNET AND SSH tabif

- Page 292 and 293:

CHAPTER 16 ■ TELNET AND SSH repla

- Page 294 and 295:

CHAPTER 16 ■ TELNET AND SSH Listi

- Page 296 and 297:

CHAPTER 16 ■ TELNET AND SSH def p

- Page 298 and 299:

CHAPTER 16 ■ TELNET AND SSH We wi

- Page 300 and 301:

CHAPTER 16 ■ TELNET AND SSH • p

- Page 302 and 303:

CHAPTER 16 ■ TELNET AND SSH You w

- Page 304 and 305:

CHAPTER 16 ■ TELNET AND SSH » »

- Page 306 and 307:

CHAPTER 16 ■ TELNET AND SSH Listi

- Page 308 and 309:

CHAPTER 16 ■ TELNET AND SSH Summa

- Page 310 and 311:

CHAPTER 17 ■ FTP The biggest prob

- Page 312 and 313:

CHAPTER 17 ■ FTP f.login() print

- Page 314 and 315:

CHAPTER 17 ■ FTP if os.path.exist

- Page 316 and 317:

CHAPTER 17 ■ FTP f = FTP(host) f.

- Page 318 and 319:

CHAPTER 17 ■ FTP Windows servers

- Page 320 and 321:

CHAPTER 17 ■ FTP » try: » » f.

- Page 322 and 323:

C H A P T E R 18 ■ ■ ■ RPC Re

- Page 324 and 325:

CHAPTER 18 ■ RPC sort of proxy ex

- Page 326 and 327:

CHAPTER 18 ■ RPC The SimpleXMLRPC

- Page 328 and 329:

CHAPTER 18 ■ RPC Traceback (most

- Page 330 and 331:

CHAPTER 18 ■ RPC 8.0 If this

- Page 332 and 333:

CHAPTER 18 ■ RPC Note that the po

- Page 334 and 335:

CHAPTER 18 ■ RPC up being, simply

- Page 336 and 337:

CHAPTER 18 ■ RPC such as Python i

- Page 338 and 339:

CHAPTER 18 ■ RPC • Google Proto

- Page 340 and 341:

■ INDEX mod_python, 194 Qpid, 131

- Page 342 and 343:

■ INDEX Common Gateway Interface.

- Page 344 and 345:

■ INDEX international characters

- Page 346 and 347:

■ INDEX front-end web servers, 17

- Page 348 and 349:

■ INDEX deleting folders, 260 del

- Page 350 and 351:

■ INDEX mechanize, 138, 163 Memca

- Page 352 and 353:

■ INDEX pausing terminal output,

- Page 354 and 355:

■ INDEX resources. See also RFCs

- Page 356 and 357:

■ INDEX shutdown(), 48 shutting d

- Page 358 and 359:

■ INDEX terminals, 270-74 bufferi

- Page 360 and 361:

■ INDEX ■ V validating cached r