Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

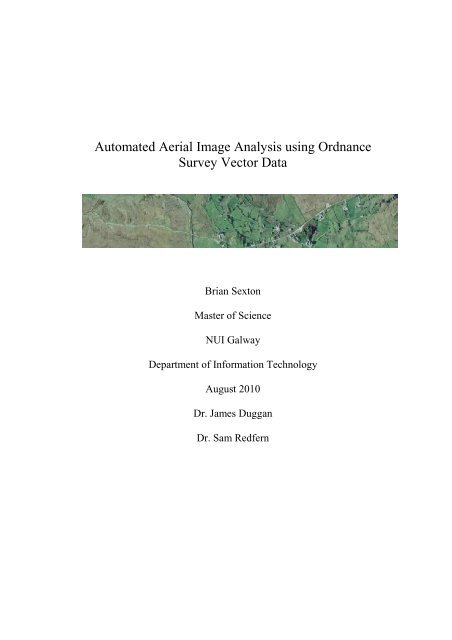

Automated Aerial Image Analysis using Ordnance Survey Vector Data

Brian Sexton

Master of Science

NUI Galway

Department of Information Technology

August 2010

Dr. James Duggan

Dr. Sam Redfern

Certificate of Authorship


Contents

Certificate of Authorship

Contents

List of Tables

List of Figures

Abstract

1 Project Outline

1.1 Project Overview

1.2 General Introduction and Background

2 Stepping through the Algorithm

2.1 Initial Inputs

2.2 Area Extraction

2.3 Spectral Value Comparison

2.4 Confirmation

3 Sampling for the Baseline Image Key

3.1 Roads

3.2 Water

3.3 Marsh

3.4 Coniferous Forestry

3.5 Mixed Forestry

3.6 Track

3.7 Shade

3.8 Roof Areas

3.9 Pasture

3.10 Rough Pasture

4 Testing

4.1 Pasture Test

4.2 Rough Pasture Test

4.3 Marsh Test

4.4 Bog Test

4.5 Conclusion

5 Literature Review

5.1 Spectral and image considerations for the thesis

5.2 Vector and polygon based studies of aerial photography

6 References

List of Tables

Table 1: Road sample values

Table 2: Road test sample value 1

Table 3: Road test sample value 2

Table 4: Road test sample value 3

Table 5: Water sample values

Table 6: Water test sample values

Table 7: Marsh sample values

Table 8: Marsh test sample values

Table 9: Coniferous forestry sample values

Table 10: Coniferous forestry test sample values

Table 11: Mixed forestry sample values

Table 12: Mixed forestry test sample values

Table 13: Track sample values

Table 14: Track test sample values

Table 15: Shade sample values

Table 16: Shade test sample value 1

Table 17: Shade test sample value 2

Table 18: Shade test sample value 3

Table 19: Roof pixel sample values

Table 20: Roof test sample value 1

Table 21: Roof test sample value 2

Table 22: Roof test sample value 3

Table 23: Pasture sample values

Table 24: Pasture test sample values

Table 25: Rough pasture sample values

Table 26: Rough pasture test sample values

List of Figures

Figure 1: Aerial view of sample area

Figure 2: Road area and surrounding detail

Figure 3: Road area and vector data

Figure 4: Typical Water Area Image

Figure 5: Water Area Image Modification

Figure 6: Sample area as a mosaic of polygons

Figure 7: Typical Marsh Area Image

Figure 8: Typical Mixed Forestry Area Image

Figure 9: Typical Track Area Image

Figure 10: Typical Shade Area Image

Figure 11: Histogram for Shade and Pasture

Figure 12: Typical Roof Value Area Image

Figure 13: Distribution of Buildings/Roofs in the Sample

Figure 14: Blue colour band pixel count for study area

Figure 15: Typical Pasture Area Image

Figure 16: Typical Rough Pasture Area Image

Figure 17: Creating the ASCII file

Figure 18: Aerial view of pasture test 1

Figure 19: Red colour band for pasture test 1

Figure 20: Green colour band for pasture test 1

Figure 21: Aerial view of pasture test 2

Figure 22: Red colour band for pasture test 2

Figure 23: Green colour band for pasture test 2

Figure 24: Aerial view of pasture test 3

Figure 25: Red colour band for pasture test 3

Figure 26: Green colour band for pasture test 3

Figure 27: Vector data for pasture test 4

Figure 28: Aerial view of pasture test 4

Figure 29: Red colour band for pasture test 4

Figure 30: Green colour band for pasture test 4

Figure 31: Vector data for rough pasture test 1

Figure 32: Aerial view of rough pasture test 1

Figure 33: Red colour band for rough pasture test 1

Figure 34: Green colour band for rough pasture test 1

Figure 35: Aerial view of rough pasture test 2

Figure 36: Red colour band for rough pasture test 2

Figure 37: Green colour band for rough pasture test 2

Figure 38: Aerial view of rough pasture test 3

Figure 39: Red colour band for rough pasture test 3

Figure 40: Green colour band for rough pasture test 3

Figure 41: Vector data for rough pasture test 4

Figure 42: Aerial view of rough pasture test 4

Figure 43: Red colour band for rough pasture test 4

Figure 44: Green colour band for rough pasture test 4

Figure 45: Vector data for marsh test 1

Figure 46: Aerial view of marsh test 1

Figure 47: Red colour band for marsh test 1

Figure 48: Green colour band marsh test 1

Figure 49: Blue colour band for marsh test 1

Figure 50: Aerial view of marsh test 2

Figure 51: Red colour band for marsh test 2

Figure 52: Green colour band marsh test 2

Figure 53: Blue colour band for marsh test 2

Figure 54: Aerial view of marsh test 3

Figure 55: Red colour band for marsh test 3

Figure 56: Blue colour band for marsh test 3

Figure 57: Aerial view of marsh test 4

Figure 58: Red colour band for marsh test 4

Figure 59: Blue colour band for marsh test 4

Figure 60: Vector data for bog test 1

Figure 61: Aerial view for bog test 1

Figure 62: Red colour band for bog test 1

Figure 63: Green colour band for bog test 1

Figure 64: Blue colour band for bog test 1

Figure 65: Vector data for bog test 2

Figure 66: Aerial view for bog test 2

Figure 67: Red colour band for bog test 2

Figure 68: Green colour band for bog test 2

Figure 69: Blue colour band for bog test 2

Figure 70: Aerial view for bog test 3

Figure 71: Red colour band for bog test 3

Figure 72: Green colour band for bog test 3

Figure 73: Blue colour band for bog test 3

Figure 74: Aerial view for bog test 4

Figure 75: Red colour band for bog test 4

Figure 76: Green colour band for bog test 4

Figure 77: Blue colour band for bog test 4


Abstract

This study sets out an algorithm for the automatic analysis of controlled (flattened) aerial photography using Ordnance Survey vector data. The vector data is used to clip the aerial image into a set of small area polygons, which are then analyzed for their spectral properties and classified according to the result. The study tests sections of aerial photography from a sample area in County Galway for specific spectral properties in order to identify the type of ground cover. This was achieved using an image key of spectral properties developed during the study, a process referred to as training the image key. A testing section shows that it is possible to derive information about the land use type of these areas from the range of values returned by a pixel count of spectral properties within a small area polygon.

The study uses several open source software frameworks to complete the experiment, most notably the MATLAB-based Mirone application, but the approach can be extended to any software capable of handling irregular polygons in a projection system. The body of the study is set out in three chapters: the first details the process, the second the sampling for unique values and the third the testing that took place. Chapters 3 and 4 are subdivided into sections describing the research on specific land use types and their spectral signatures.

Dividing an aerial image into a mosaic of (relatively) homogeneous areas, e.g. pasture, forestry and marsh, increases the accuracy of automated analysis. This is the first time that a spectral analysis has been attempted using Ordnance Survey Ireland small area polygons to clip the image. The approach is of interest to researchers, planners, developers and others looking to simplify and automate this type of search over a large region.

Note: Contact brian.sexton@osi.ie for a set of sample files for training the image key.


1 Project Outline

1.1 Project Overview

The following study is an attempt to devise an automatic method of analyzing aerial photography based on vector data. It presents an algorithm and calibration data for anyone seeking to search aerial imagery for a particular spectral signature. The premise on which the work was undertaken was that, given the small area polygons and coding data present in Ordnance Survey data, it should be possible to cut a controlled aerial photograph into a mosaic of sections and automatically identify the type of ground cover.

The goal of the study was to identify a series of steps by which this could be achieved. These steps are intended as a template either for a standalone application that could run searches over large geographical areas, or for achieving the result over smaller areas using existing open source software libraries. This document is aimed at people seeking to develop a generic tool for the spectral analysis of aerial photography, or at anyone looking to search the Irish landscape for features that exhibit a distinct spectral value (crop disease, impermeable surface area, flooding, etc.). The study differs from other methods of automatic image processing in that it takes existing analysis (in the form of Ordnance Survey vector data) and uses it to divide the spectral data into manageable sections. This summary presents a chronological overview of the work completed and outlines the process used.

The basis of this study is the clipping of raster data for spectral analysis, and the intention is to demonstrate that this makes image analysis easier. Traditional approaches can refine the analysis itself to reveal more about the region of interest than is attempted here. For example, a lidar (aerial laser imaging) survey could provide researchers with data relating to the height of a tree canopy or the depth of peat in a bog. This study, while it does identify specific sets of values relating to land use types, focuses on establishing an easily replicated process for automatically obtaining data from aerial imagery.


The process was designed so that it can be coded into a standalone application, and it has been tested using open source software. In this way the study aims to reduce what can be an expensive and time-consuming task to a series of steps that someone without a high level of training in either mapping or computer science could run.

One of the difficulties in attempting to extract data from imagery is identifying target areas within a region of interest. This is compounded by the nature of the values returned by an aerial image: clusters of pixels with similar values are often not bounded by clean borders and often display a gradual gradient of values where they merge with another cluster. In other words, to determine the true values on the ground automatically, a program needs to know the extent of the set of data that the clusters sit in. One analogy is the tables of a relational database: by dividing the total set of pixels for a region of interest into the discrete parcels of land reflected in the photograph, the program gains a database of tables that it can query for specific values. This study takes the vector data and uses it to create a mosaic of separate pixel groups for analysis. This in itself, however, still leaves a huge body of data to be analyzed. To improve the analysis further, the parcels within the mosaic are classified according to known values taken from the vector coding, and these known values are then used to train an image key, which in turn allows the remaining parcels to be analyzed. It is this clipping process that is at the core of this study: it provides a means of accessing the raster data that readers can easily replicate and automate for their own purposes.
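The relational-database analogy can be made concrete with a minimal sketch. It assumes the clipping step has already produced a label grid in which each pixel carries the identifier of the small area polygon it falls in (0 for pixels outside every polygon). The function names `group_by_parcel` and `parcel_means` and the tiny example grids are hypothetical, for illustration only.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue) values, 0-255


def group_by_parcel(labels: List[List[int]],
                    image: List[List[Pixel]]) -> Dict[int, List[Pixel]]:
    """Collect the pixels of each parcel into its own 'table', keyed by parcel ID.

    Pixels labelled 0 lie outside every polygon and are skipped.
    """
    tables: Dict[int, List[Pixel]] = defaultdict(list)
    for label_row, pixel_row in zip(labels, image):
        for parcel_id, pixel in zip(label_row, pixel_row):
            if parcel_id != 0:
                tables[parcel_id].append(pixel)
    return dict(tables)


def parcel_means(tables: Dict[int, List[Pixel]]) -> Dict[int, Tuple[float, ...]]:
    """'Query' each table for its mean value in the red, green and blue bands."""
    means = {}
    for parcel_id, pixels in tables.items():
        n = len(pixels)
        means[parcel_id] = tuple(sum(p[band] for p in pixels) / n for band in range(3))
    return means


if __name__ == "__main__":
    # Hypothetical 3 x 3 label grid (two parcels) and matching RGB pixels.
    labels = [[1, 1, 2],
              [1, 2, 2],
              [0, 2, 2]]
    image = [[(90, 120, 60), (94, 124, 62), (30, 40, 35)],
             [(92, 122, 64), (28, 42, 33), (32, 38, 37)],
             [(0, 0, 0), (30, 40, 35), (30, 40, 35)]]
    print(parcel_means(group_by_parcel(labels, image)))
```

Once every parcel is its own "table", any per-parcel statistic (mean, histogram, percentage in a range) becomes a simple query over that table rather than a search across the whole image.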

Vector data has been used to target and control aerial image analysis in previous studies with some success. These studies often involve additional user input to refine the region of interest so that automated analysis techniques such as multivariate analysis of variance can be applied to the image. Refining the region in this way requires a level of technical expertise that could make a spectral analysis of aerial imagery too time-consuming for many users. An example is the 2007 assessment of impermeable surface area by Yuyu Zhou and Y.Q. Wang (Zhou & Wang, 2007). The authors determined that segmentation would be the most important part of the study and applied a multiple-agent segmentation and classification algorithm. This involved importing transportation data to create buffers along the major roads, at varying distances from the centre, to divide the imagery. This process allowed the authors to determine the nature of ground cover with a high degree of accuracy, as revealed by random point sampling. A description of similar studies and methods is contained in the literature review at the end of this paper. The preparation needed to determine an appropriate extent for the image segments in such studies can be difficult to automate. For example, the study by Zhou and Wang (2007) depended on knowing the availability and accuracy of transport data and how to use it to segment the photography. An alternative is to use vector data of a known accuracy for the image segmentation. This approach depends on the availability of such vector data, which confines the focus of this study to an Irish context.

One benefit of an algorithm that automatically segments an aerial image into small area parcels is that it creates a platform on which further image analysis can take place. This study takes a set of ASCII coordinates from the vector data and uses them to clip the imagery. The resulting parcels are then classified according to their land use type. A user could then use these classifications to target specific sets for interpretation. For example, the type of growth present in marsh areas could be determined by taking the set of polygons returned as marsh and identifying the percentage of each polygon's pixel count that corresponds to the expected value for that growth.
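As a sketch of that follow-on query, the function below reports what percentage of a clipped polygon's pixels fall inside an expected value range for one colour band. The function name, the range bounds and the sample values are hypothetical placeholders; in practice the bounds would come from the trained image key.

```python
from typing import Iterable


def percent_in_range(values: Iterable[int], low: int, high: int) -> float:
    """Return the percentage of pixel values v with low <= v <= high.

    `values` holds one colour band's pixel values for a single clipped polygon.
    """
    values = list(values)
    if not values:
        raise ValueError("polygon contains no pixels")
    hits = sum(1 for v in values if low <= v <= high)
    return 100.0 * hits / len(values)


if __name__ == "__main__":
    # Hypothetical green-band values for one marsh polygon and a hypothetical
    # expected range for a particular type of growth.
    green_band = [61, 64, 70, 72, 75, 88, 90, 102, 45, 66]
    print(f"{percent_in_range(green_band, 60, 80):.1f}% of pixels in range")
```

Running the same function over every polygon in the marsh set would give a per-polygon coverage figure for the growth of interest.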

There are two prerequisite data sets for running this type of analysis, and a section from each (just west of Oughterard, Co. Galway) was used for this study. The first requirement is digital vector data from the Ordnance Survey and the second is colour (RGB) photography stored in GeoTiff format and projected using Irish Transverse Mercator (to match the vector data). Imagery supplied in other projections can be re-projected automatically using GDAL tools such as gdalwarp (GDAL, n.d.), but this was not used in this study. The software requirements are:

• Something which can manipulate and interrogate vector data files.

• Something capable of handling irregular polygons within a coordinate system.

• Software capable of analyzing pixel values.

In this study a commercial package called Radius Vision was used to export the coordinate set for each small area polygon. The image polygons were processed using Mirone, a MATLAB-based framework developed at the University of the Algarve by Joaquim Luis (Mirone, 2009). The histogram values for the segmented image sections were obtained using PCI Geomatics' Geomatica package. For each polygon tested in the study the following steps were taken, and these form the basis of the proposed algorithm:

• Extract the point data surrounding the polygon(s) within the region of interest.

• Import the point data into a software procedure to clip the aerial imagery and save the segmented image in GeoTiff format.

• Run a histogram analysis for the image segment.

• Run comparison procedures to classify the segment.
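The four steps can be sketched end to end in plain Python. This is not the Radius Vision, Mirone and Geomatica workflow used in the study: the sketch swaps in a standard even-odd (ray casting) point-in-polygon test to clip a small in-memory colour band, and the key ranges, function names and data are hypothetical. Polygon vertices are assumed to be already expressed in pixel (column, row) coordinates.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def inside(pt: Point, polygon: List[Point]) -> bool:
    """Even-odd (ray casting) test: is pt inside the polygon?"""
    x, y = pt
    result = False
    j = len(polygon) - 1
    for i in range(len(polygon)):
        xi, yi = polygon[i]
        xj, yj = polygon[j]
        # Does edge (j -> i) cross the horizontal ray running right from pt?
        if (yi > y) != (yj > y):
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                result = not result
        j = i
    return result


def clip(band: List[List[int]], polygon: List[Point]) -> List[int]:
    """Step 2: keep the values of pixels whose centres fall inside the polygon."""
    return [value
            for row, row_values in enumerate(band)
            for col, value in enumerate(row_values)
            if inside((col + 0.5, row + 0.5), polygon)]


def histogram(values: List[int]) -> Counter:
    """Step 3: pixel count for each value."""
    return Counter(values)


def classify(values: List[int], key: Dict[str, Tuple[float, float]]) -> Optional[str]:
    """Step 4: first land use whose expected mean range contains the segment's mean."""
    mean = sum(values) / len(values)
    for land_use, (low, high) in key.items():
        if low <= mean <= high:
            return land_use
    return None


if __name__ == "__main__":
    # Step 1 (assumed done): polygon vertices already converted to pixel coordinates.
    polygon = [(0.0, 0.0), (3.0, 0.0), (3.0, 2.0), (0.0, 2.0)]
    red_band = [[90, 92, 91, 20],
                [93, 90, 92, 22],
                [25, 24, 23, 21]]
    segment = clip(red_band, polygon)
    key = {"water": (10.0, 40.0), "pasture": (80.0, 100.0)}  # hypothetical ranges
    print(histogram(segment).most_common(2), classify(segment, key))
```

Classifying on the mean alone is the simplest possible comparison procedure; the same structure accepts richer tests (ratios to a control, percentage in range, histogram shape) in place of `classify`.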

The point data mentioned above refers to controlled data points that indicate fixed x and y positions on the ground and can be used to analyze the imagery. Attached to those points are vectors and coding which, for much of the image, indicate the type of land use present, e.g. forestry, water, buildings. The comparison procedures mentioned above use values obtained during a sampling process conducted in the early part of this study. This sampling first took sections from known polygons and recorded their spectral values in order to calibrate an image key. Samples were then taken for parcels of types not coded in the vector data, and the percentage variance between the two sets was recorded.

The sampling for the study covered ten separate types of land use. Five of these types were identified from the vector data, while the remaining types were identified using the techniques developed in this study. Between three and ten separate sections of the image were extracted for each area type. The samples were uniform, clear examples of each type of terrain, free of biasing factors such as shade or overhanging vegetation, so as to obtain clean baseline data. The samples were extracted as GeoTiff images and analyzed for their spectral qualities. A full description and tables for each sample are contained in chapter 3, which is divided into sections according to land use type for easy reference.
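A minimal sketch of turning the baseline samples into image key entries: for each land use type it pools the pixel values of the clean samples for one colour band and records the mean, the standard deviation and a working range of mean ± k standard deviations. The choice of k = 2, the function name and the sample values are assumptions for illustration, not figures from the study.

```python
import statistics
from typing import Dict, List, Tuple


def build_key(samples: Dict[str, List[List[int]]],
              k: float = 2.0) -> Dict[str, Tuple[float, float, float, float]]:
    """Build (mean, stdev, low, high) for each land use from its sample segments.

    `samples` maps a land use name to a list of sample segments, each a list of
    pixel values for one colour band. Values from all segments are pooled, and
    at least two values are needed per land use for the standard deviation.
    """
    key = {}
    for land_use, segments in samples.items():
        pooled = [v for segment in segments for v in segment]
        mean = statistics.mean(pooled)
        sd = statistics.stdev(pooled)  # sample standard deviation
        key[land_use] = (mean, sd, mean - k * sd, mean + k * sd)
    return key


if __name__ == "__main__":
    # Hypothetical red-band values from two clean samples per land use.
    red_samples = {
        "water": [[20, 22, 24], [22, 20, 24]],
        "pasture": [[88, 92, 90], [90, 86, 94]],
    }
    for land_use, (mean, sd, low, high) in build_key(red_samples).items():
        print(f"{land_use}: mean={mean:.1f} sd={sd:.2f} range=({low:.1f}, {high:.1f})")
```

Recording the standard deviation alongside the mean also captures the observation that mixed forestry and rough pasture vary more than uniform cover: their ranges simply come out wider.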

In general terms the results were as might be expected: large bodies of water (e.g. river, lake) produced a clearly identifiable signature, while mixed forestry and rough pasture areas had a higher standard deviation than more uniform cover. The areas sampled fell into the following categories: roofs, roads, water, marsh, coniferous forestry, mixed forestry, rough pasture, pasture, track and shade. Although shade is not a distinct area type, values for shade (manually identified from the imagery and clipped for analysis) were used to calibrate the image key so that shade could be recognised when found in polygons identified from the vector data. Similarly, the values taken as representative of the spectral qualities of roadways did not include the overhanging tree cover that is present in polygons extracted from the ground-revised vector data.

The aim of this part of the study was to identify a series of proportional values that could indicate the presence of a land use type in an unknown polygon. For example, the mean red and green pixel values for known areas of water were found to be 30% and 45%, respectively, of those for pasture, a relationship that an automatic search could use to flag an area being used as pasture. This sampling was not intended to be comprehensive in terms of creating a key for every possible automated image search, but was undertaken to demonstrate the potential of automated image processing based on segmenting the images using small area polygons.
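The proportional comparison can be sketched as follows. The 0.30 and 0.45 ratios are the water-to-pasture figures quoted above; the tolerance, the function name and the example means are hypothetical.

```python
from typing import Dict

# Ratio of water's mean pixel value to pasture's, per band (figures from the text).
WATER_TO_PASTURE = {"red": 0.30, "green": 0.45}


def looks_like_pasture(polygon_means: Dict[str, float],
                       water_means: Dict[str, float],
                       tolerance: float = 0.05) -> bool:
    """Flag a polygon as pasture if, in every band, water's mean divided by the
    polygon's mean lies within `tolerance` of the expected ratio."""
    for band, expected in WATER_TO_PASTURE.items():
        observed = water_means[band] / polygon_means[band]
        if abs(observed - expected) > tolerance:
            return False
    return True


if __name__ == "__main__":
    water = {"red": 27.0, "green": 45.0}        # hypothetical control means
    candidate = {"red": 90.0, "green": 100.0}   # hypothetical polygon means
    print(looks_like_pasture(candidate, water))
```

Expressing the test as a ratio to a control surface (water) in the same photograph, rather than as absolute pixel values, is intended to make it less sensitive to overall differences in brightness between images; how well that holds would need to be confirmed by testing.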

One surprising result from this sampling concerned the values returned for roof polygons. These polygons contained two identifiable ranges of values associated with the pitch of the roof, where the angle of the light created shade on one side; this might facilitate a process for determining the angle of the pitch. At the beginning of the study I had believed that these roof values would provide enough of a control to calibrate most of the image. However, the variation in values from shade to light on the angled surfaces made roof values an unreliable source of control for the study. Of the samples with known values, the most useful in providing a consistent control on which to base comparative procedures were water, roads and coniferous forestry. Of the unknown types (those that cannot be identified automatically from the vector data), pasture and bog had the most distinct sets of values. The next phase of the study involved testing the algorithm against these identified spectral values to see whether the irregular polygons (with internal distorting factors) matched the range expected from the sampling.

The testing process followed the outline of the algorithm. Polygons were extracted from the vector data as sets of coordinate points saved in an ASCII file. These points were used to create a clipping path to cut the relevant section from the aerial image, which was then saved in GeoTiff format. This file was analyzed for its spectral content, and the resulting range of pixel values was compared with the range expected for the land use type.
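Cutting the image with a clipping path requires the polygon's ground coordinates (Irish Transverse Mercator eastings and northings) to be mapped onto pixel positions. A GeoTiff carries an affine geotransform for this; the sketch below inverts a north-up geotransform using the six-element ordering GDAL uses (origin x, pixel width, row rotation, origin y, column rotation, pixel height). The ASCII layout (one "easting northing" pair per line), the function names and the example values are assumptions, since the format of the exported file is not specified here.

```python
from typing import List, Sequence, Tuple


def read_ascii_coords(text: str) -> List[Tuple[float, float]]:
    """Parse one 'easting northing' pair per line (assumed file layout)."""
    coords = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            coords.append((float(parts[0]), float(parts[1])))
    return coords


def ground_to_pixel(easting: float, northing: float,
                    gt: Sequence[float]) -> Tuple[float, float]:
    """Invert a north-up geotransform (no rotation terms) to (column, row)."""
    origin_x, pixel_w, rot_x, origin_y, rot_y, pixel_h = gt
    if rot_x != 0 or rot_y != 0:
        raise ValueError("rotated geotransforms are not handled in this sketch")
    col = (easting - origin_x) / pixel_w
    row = (northing - origin_y) / pixel_h  # pixel_h is negative for north-up images
    return col, row


if __name__ == "__main__":
    # Hypothetical 0.25 m imagery whose top-left corner is at E 500000, N 740000.
    gt = (500000.0, 0.25, 0.0, 740000.0, 0.0, -0.25)
    ascii_file = "500010.0 739990.0\n500020.0 739990.0\n500020.0 739980.0\n"
    print([ground_to_pixel(e, n, gt) for e, n in read_ascii_coords(ascii_file)])
```

The resulting (column, row) vertices are exactly the pixel-space polygon that a point-in-polygon clip needs.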

The testing focused on sets of polygons of known land use types that are not coded to the vector data (pasture, marsh, bog and rough pasture), drawn from three typical areas. It should be noted that this testing represented an execution of the algorithm whose level of automation can be improved once the vector data is made available in GML format. GML allows the coordinate sets for multiple polygons to be extracted in a single file, and this format is expected to become available within the next two years.
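As an illustration of why GML would help, the sketch below reads several polygons from one file using Python's standard XML parser. It assumes GML 3 style geometry (gml:Polygon, gml:exterior, gml:LinearRing, gml:posList) with two-dimensional coordinates. The actual structure of the Ordnance Survey GML product is not described here, so the sample document and the function name are hypothetical.

```python
import xml.etree.ElementTree as ET

GML = "{http://www.opengis.net/gml}"

def polygons_from_gml(xml_text):
    """Return {gml:id: [(x, y), ...]} for every gml:Polygon exterior ring."""
    polygons = {}
    for poly in ET.fromstring(xml_text).iter(GML + "Polygon"):
        pos_list = poly.find(f"{GML}exterior/{GML}LinearRing/{GML}posList")
        if pos_list is None or not pos_list.text:
            continue
        values = [float(v) for v in pos_list.text.split()]
        # assumes 2D coordinates (srsDimension="2"): x y x y ...
        polygons[poly.get(GML + "id")] = list(zip(values[0::2], values[1::2]))
    return polygons

# Hypothetical two-polygon file standing in for a real GML extract
SAMPLE = """<FeatureCollection xmlns:gml="http://www.opengis.net/gml">
  <gml:Polygon gml:id="P1"><gml:exterior><gml:LinearRing>
    <gml:posList>500000 700000 500002 700000 500002 699998 500000 700000</gml:posList>
  </gml:LinearRing></gml:exterior></gml:Polygon>
  <gml:Polygon gml:id="P2"><gml:exterior><gml:LinearRing>
    <gml:posList>500010 700000 500014 700000 500014 699996 500010 700000</gml:posList>
  </gml:LinearRing></gml:exterior></gml:Polygon>
</FeatureCollection>"""

for pid, ring in polygons_from_gml(SAMPLE).items():
    print(pid, len(ring), "vertices")
```

Each ring returned here could be passed straight to the clipping step, removing the need to export one ASCII file per polygon.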

The areas analyzed were polygons containing marsh, bog, pasture and rough pasture. Of these, pasture and bog produced the most distinctive spectral traits and matched the expected values, allowing a comparative procedure to classify them automatically. The marsh and rough pasture samples both showed high deviation from the mean pixel value across the red and green colour bands, with similar ranges of values. However, they can be distinguished by a trough, present in all of the red colour band values for rough pasture, between the values corresponding to shade and those corresponding to vegetation. A full description of testing can be found in Chapter 4 of this study.
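The trough that separates rough pasture from marsh can be tested for mechanically. The following sketch bins the red band values of a polygon into an 8-bit histogram and reports whether two peaks are separated by a valley. It assumes the polygon has already been flagged as marsh or rough pasture because of its high deviation. The bin width, depth ratio and minimum peak share are hypothetical values that would need calibrating against the sampling in Chapter 3.

```python
def histogram(values, bin_width=8, max_value=255):
    """Count 8-bit pixel values into fixed-width bins."""
    bins = [0] * (max_value // bin_width + 1)
    for v in values:
        bins[v // bin_width] += 1
    return bins

def has_trough(bins, depth_ratio=0.5, min_peak_share=0.1):
    """True if the histogram has two peaks separated by a clear valley.

    The valley between the highest bin and a second candidate peak must fall
    below depth_ratio times the second peak, and the second peak must hold at
    least min_peak_share of all pixels. Both thresholds are hypothetical.
    """
    total = sum(bins)
    main = max(range(len(bins)), key=bins.__getitem__)
    for j, height in enumerate(bins):
        if abs(j - main) < 2 or height < min_peak_share * total:
            continue
        lo, hi = sorted((main, j))
        if min(bins[lo + 1:hi]) < depth_ratio * height:
            return True
    return False

def marsh_or_rough_pasture(red_values):
    """Split a high-deviation polygon using the red-band trough."""
    return "rough pasture" if has_trough(histogram(red_values)) else "marsh"

# Usage: shade and vegetation clusters with a gap, then a single broad cluster
print(marsh_or_rough_pasture([40] * 100 + [120] * 100))
print(marsh_or_rough_pasture(list(range(60, 92)) * 5))
```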


The results of this study point to the value of accessing the spectral values contained in aerial imagery through Ordnance Survey vector data. In almost every land use tested, the polygons returned a consistent pixel count for the type. These results must be qualified: the values are based on an analysis of the red, green and blue colour bands (so the method is restricted to colour imagery), and the process relies on the vector data. It does, however, present a relatively simple means of completing an analysis of aerial imagery, which in turn opens up the possibility of coding a standalone application for analyzing and comparing polygons within a region of interest. The procedure takes the form of a series of loops designed to eliminate known values. Once the known values, followed by the derived values, have been eliminated, the user is left with a relatively small set of polygons to examine and can apply a key which has been further trained for the specific study. The thesis begins with a description of the background to the study, followed by three chapters.
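The series of elimination loops can be sketched as follows. This is an illustration under stated assumptions, not the thesis's implementation. Each polygon is assumed to carry its vector-data land use code (or none) plus precomputed band statistics. The known codes, the derived classes and their green-band thresholds are all made up for the example.

```python
KNOWN_CODES = {"water", "road", "building", "coniferous forestry"}  # hypothetical codes

def eliminate(polygons, derived_tests, known_codes=KNOWN_CODES):
    """Run the elimination loops; return (classified, remaining).

    polygons: {polygon_id: {"code": str or None, "stats": dict}}
    derived_tests: [(label, predicate)] applied in order to uncoded polygons.
    """
    classified, remaining = {}, {}
    # Loop 1: land use already coded to the vector data.
    for pid, poly in polygons.items():
        if poly["code"] in known_codes:
            classified[pid] = poly["code"]
        else:
            remaining[pid] = poly
    # Loops 2..n: one pass per derived class (e.g. pasture, then bog).
    for label, test in derived_tests:
        for pid in list(remaining):
            if test(remaining[pid]["stats"]):
                classified[pid] = label
                del remaining[pid]
    return classified, remaining

# Usage with made-up green-band means and thresholds
parcels = {
    "A": {"code": "water", "stats": {}},
    "B": {"code": None, "stats": {"green_mean": 120}},
    "C": {"code": None, "stats": {"green_mean": 70}},
    "D": {"code": None, "stats": {"green_mean": 200}},
}
tests = [("pasture", lambda s: 100 <= s["green_mean"] <= 140),
         ("bog", lambda s: 60 <= s["green_mean"] < 90)]
done, left = eliminate(parcels, tests)
print(done, "still to examine:", sorted(left))
```

Only the polygons left in the second dictionary would then be passed to the user's specially trained key.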


1.2 General Introduction and Background

An overview of the work of this study can be found in the executive summary; this section is intended to provide background information and explain some of the terms used. The study was written so that a reader can go directly to the part relevant to them and then make use of any techniques identified. For example, if your intention is to identify the percentage of bog in your region of interest, read the sampling section on bog, followed by the testing, and then read how to apply the algorithm (Chapter 2).

It may help if I first explain my interest in and motivation for this work. For the past decade I have been involved in the photogrammetric capture of the vector data used in this study. I know that this type of surveying is difficult and can be extremely tedious, but I believe it presents a template for the automatic capture of additional data from aerial imagery. A brief description of the nature of this surveying can be found at the end of this section. I believe it should be possible, with a robust key of spectral data for known polygons (parcels of surface area enclosed by controlled boundaries such as walls, fences and roads), to search the data automatically for specific values. In other words, someone with little knowledge of mapping or software could select a region of interest and search for a particular value, taken either from a selection of the imagery or from coordinates imported from a portable GPS device. This could take the form of a standalone application or could run through various freely available software packages. This study focuses on the use of open source software but suggests areas where a specialized tool could be developed. In general terms, searching aerial imagery for the information it contains (such as the spread of crop disease or the extent of impermeable surface area) requires specialized tools and knowledge, and can be a time-consuming process. This study is an attempt to automate that kind of search using small area polygons, an approach which is unique.
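A search of this kind reduces to comparing each polygon's statistics with a target taken from a sample. The minimal sketch below assumes the target is a selection of pixels from the imagery; coordinates from a GPS device would first be used to cut such a sample. It summarizes the selection as a mean RGB value and ranks polygons by Euclidean distance in RGB space. The distance measure, the tolerance and the names are illustrative choices, not part of the study's key.

```python
import math

def signature(pixels):
    """Mean (R, G, B) of a sample selection of pixels."""
    n = len(pixels)
    return tuple(sum(p[band] for p in pixels) / n for band in range(3))

def search(polygon_means, target, tolerance):
    """Polygon ids whose mean colour lies within `tolerance` of target, nearest first.

    Distance is Euclidean in RGB space; this is an illustrative choice.
    """
    hits = sorted((math.dist(mean, target), pid)
                  for pid, mean in polygon_means.items())
    return [pid for d, pid in hits if d <= tolerance]

# Usage: a sample picked from the imagery, searched across a region of interest
target = signature([(100, 150, 80), (110, 160, 90)])
region = {"P1": (106, 154, 86), "P2": (40, 60, 200), "P3": (100, 150, 80)}
print(search(region, target, tolerance=10))
```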

The study itself involves a mixture of computer science and mapping. As more and more of the surface of the earth is digitally captured and analyzed, these two fields will by necessity start to merge. This study looks at one small aspect of mapping and at how automated software could be used to increase the amount of information available to a user. The premise of the study is that, given enough previously captured and accurate data, it is possible to read the landscape automatically. In short, I hope to take point and line data, slice sections from aerial photography, and run a spectral analysis on them. The focus of the study is on the methodology, so that a means of completing automated updating is identified. By updating I mean providing information on the percentages of ground cover within a small area polygon. The task of physically capturing new structures, roads and height values (even when considering lidar) will probably always require a human eye to interpret the data to some degree, for example to judge whether a structure is temporary or whether road works are underway.

It might be useful at this point to introduce some more background to this study. The island of Ireland was fully digitally mapped in 2005, and a new database for this data has recently been introduced which allows small area polygons to retain unique identifiers linked to the surrounding geometry and features. The mapping is on an update cycle, but for the most part it can be assumed that these polygons will remain constant (with the rate of change even lower following the building boom of the last decade). This opens up the opportunity to revisit the sections of surface area represented by the area polygons over successive runs of aerial photography and extract land use change data.
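Because the polygon identifiers persist between update cycles, change detection can be keyed on them. The sketch below compares per-polygon mean colours from two runs of photography. The data layout, the threshold and the idea of flagging identifiers that appear in only one run are assumptions made for illustration.

```python
def land_use_change(run_a, run_b, threshold):
    """Compare per-polygon mean colours from two flights.

    run_a, run_b: {polygon_id: (mean_r, mean_g, mean_b)}
    Returns ({id: largest band shift} above threshold, ids present in only one run).
    """
    changed = {}
    for pid in run_a.keys() & run_b.keys():
        shift = max(abs(a - b) for a, b in zip(run_a[pid], run_b[pid]))
        if shift > threshold:
            changed[pid] = shift
    # ids found in only one run may indicate updated geometry; check these by eye
    unmatched = sorted(run_a.keys() ^ run_b.keys())
    return changed, unmatched

# Usage with made-up values for parcels flown in two different years
year1 = {"1001": (90, 130, 70), "1002": (60, 70, 50), "1003": (80, 80, 80)}
year2 = {"1001": (92, 128, 71), "1002": (150, 150, 150), "1004": (85, 85, 85)}
print(land_use_change(year1, year2, threshold=20))
```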

I mentioned earlier that the focus of the study is on establishing a methodology; this is because the motivation for assessing land use change varies according to the user. For example, someone considering the potential for flooding within an area might want to look at the water courses and new housing developments to determine the amount of impermeable surface area (paving, patios etc.) over the course of their study. Doing this physically, using either a photogrammetry tool such as SOCET SET or field GPS, would be an onerous task. The aim of this thesis is to provide a method for doing this automatically. It might seem an obvious point, but the more information available to a process when it begins examining an area, the higher the chance of useful data being returned. This is where this study differs from previous attempts at automated data capture. The suggested process uses a large amount of previously captured and verified data to aid the algorithm. By this I mean that most sections of the aerial imagery are extracted based on definite boundaries such as walls, streams and buildings. Internal polygons within the target area, such as water, buildings, roads and forestry, are also identified and used to aid the search. In this way the study is entirely dependent on previously surveyed data. This has not been attempted before with Irish data, and I did not find any similar study from overseas over the course of my research. I hope to show that this is possible to do and to implement using sample software.

The software used in the study comes from several open source projects and from one commercial vector data manipulation package (Radius). These are all packages which could be considered generic tools. This is not a comment on their capabilities or any slight on the people who develop them; it means only that the functions being accessed are common to several similar software packages. For example, the ASCII coordinate files created using Radius could equally have been produced using ArcView or Microstation (among others). The intention was to keep the algorithm as flexible as possible so that users could adapt it to their available resources.

Some of the primary software tools used in this study come from GDAL (the Geospatial Data Abstraction Library). In particular, its support for raster geospatial data formats is used to manipulate the GeoTiff files containing the aerial imagery used in the study. GDAL is a project of the Open Source Geospatial Foundation (OSGeo), a non-profit, non-governmental organization set up to support the development of open source geospatial software. The foundation also supports projects such as GeoTools, GRASS GIS, Mapbender, MapGuide Open Source and MapServer, among others. In this study the GDAL library is accessed through another open source package known as OpenEV, which presents the GDAL library within an application for displaying and analyzing the data. As with GDAL, it is implemented in C but can also be manipulated with Python. In this study the processes are run on a Windows platform, and OpenEV is used as a means of accessing the raster data in the GeoTiff imagery. GDAL is needed to open the GeoTiff using the appropriate ITM coordinates for the point set of the search area.

This choice of access to the raster data should not suggest that GDAL and OpenEV are unique; similar software exists that could also have been used in the study. One example is the ImageTool open source package, developed in the 1990s in the Department of Dental Diagnostic Science at the University of Texas and written in C++. GDAL and OpenEV were preferred because, given the larger body of work they contain, the data they return could more easily be broken down into histogram and statistical data. One of the most important software considerations was flexibility (and extensibility), as ideally the users of any suggested methodology would modify the process to suit their particular study. As mentioned above, the preferred option would have been a top-down processing tool tailored to the methodology, which could accept methods from other libraries as plug-ins or additional functions. For this study, OpenEV is used in place of such a tool.

Outside of the basic software, two other core components exist: the data relating to the specific polygons being extracted, and the key representing the colour values being studied. As with most aerial image analysis studies, defining and validating this key forms the major part of the work involved. The process is aided by the availability of data which could be classed as controlled, that is, knowledge of areas of roof and water, which can be used to reference the other values in terms of their deviation from these known values.
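Referencing values against control polygons can be as simple as subtracting a control mean from each polygon's mean, band by band. This lets polygons from differently exposed images be compared with one another. The sketch assumes per-band means have already been computed, for example with water polygons as the control, since the sampling found water more consistent than roofs. The function names are hypothetical.

```python
def control_reference(control_means):
    """Average per-band mean over control polygons such as water."""
    n = len(control_means)
    return tuple(sum(m[band] for m in control_means) / n for band in range(3))

def relative_to_control(polygon_means, reference):
    """Express each polygon's (R, G, B) mean as an offset from the control."""
    return {pid: tuple(v - r for v, r in zip(mean, reference))
            for pid, mean in polygon_means.items()}

# Usage: two water polygons act as the control for one unknown parcel
ref = control_reference([(30, 40, 50), (34, 44, 54)])
print(relative_to_control({"P": (100, 120, 80)}, ref))
```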

As mentioned at the start of this general introduction, a commercial geographic software package was used to extract the coordinates from the vector data (a vital first step in the algorithm). This particular software was not chosen for any specific capabilities; I already had a licence and wanted to focus on proving the premise of the study rather than on executing the function in a specially tailored package. A number of commercially available image processing packages are relevant to this study. One of these, SOCET SET, could potentially assist the study. This is a photogrammetry package developed by BAE Systems for working with aerial photography. It allows the user to capture three-dimensional data points from overlapping aerial imagery, using triangulation based on the positions of the cameras when the photographs were taken. As much of the data used to extract the sections of aerial photography analyzed in this study was captured using this process, the analysis could potentially be completed at this point in data capture. I am referring here to map production, and the stage at which line and point data are taken from remote imagery. At this stage it is possible that an add-on process linked to the photogrammetric software would allow the user to analyze a polygon at the moment it is fully captured and coded. The area marker and associated polylines could then be given added data of value to the end user (spectral content of the polygon, percentage land cover, rough pasture, impermeable surface area etc.). I decided against investigating this further for two reasons. Firstly, it would have involved a difficult and time-consuming collaboration with the software provider. Secondly, and more importantly, this country has been fully digitally mapped and photogrammetric work is now confined to updates only. This means that applying any algorithm at the photogrammetric/data capture stage would be confined to small, mostly urban areas.

It is difficult to discuss the manipulation of spatial data without reference to the ArcGIS package of software created by ESRI, which is widely used in both commercial and educational settings for interpreting spatial data. In terms of this study, the ArcView package within ArcGIS could have been used for image processing once the input files were converted to shapefile format. The need to obtain a licence for the software (outside of trial or educational versions) precluded the use of this package. This does not mean that the software would not have been a useful tool for manipulating the imagery in the study, only that it was not practical at the time of the study.

The value of this study lies in allowing new data to be obtained and added to that originally obtained from an analysis of aerial imagery. While the study identifies one means of doing this in terms of software and operating platform (i.e. executing the process using parts of the GDAL library and ASCII input files), there are potentially numerous other software processes that could apply. The algorithm, however, is intended to remain as independent of software considerations as possible, being constrained only by the quality of the remote imagery and the accuracy of the captured data points and associated coding.

Looking briefly at some of the other commercially available desktop GIS software, it is intended that the suggested methodologies could be applied to these products. However, because of the effort involved in learning to use the software and the associated licensing issues, this application of the study was not explored. These products include AutoDesk, Microstation, the ESRI ArcView product mentioned in the previous paragraph, IDRISI and MapInfo, among others such as the 1Spatial Radius platform used to edit the geometric input data in this study. All of these products are useful for updating and editing, that is to say, for dealing with change. This study works with read-only data and could be described as a way of interpreting already captured data. One result is that the functions required to store and update changed polygons and data values are not needed in the proposed algorithm. The ability to connect the statistical data to the unique identifier of each polygon should be enough to allow the statistics to be stored as an attribute by the spatial database management system. Outside of analysis, the GIS software requirements relate only to coordinate transformation. As a result, while storage (of the statistics) remains a consideration, creating, editing or updating geometry (moving points etc.) does not form part of the requirements for the study.
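Storing results as attributes keyed on the polygon's unique identifier needs nothing more than a table. In the sketch below, Python's built-in sqlite3 module stands in for the spatial database management system (such as Oracle Spatial). The table name and columns are hypothetical, and the vector geometry itself is never modified.

```python
import sqlite3

def store_statistics(conn, stats_by_id):
    """Attach per-band statistics to polygon ids without touching the geometry."""
    conn.execute("""CREATE TABLE IF NOT EXISTS polygon_stats (
                        polygon_id TEXT NOT NULL,
                        band TEXT NOT NULL,
                        mean REAL,
                        stdev REAL,
                        PRIMARY KEY (polygon_id, band))""")
    rows = [(pid, band, s["mean"], s["stdev"])
            for pid, bands in stats_by_id.items()
            for band, s in bands.items()]
    # re-running the analysis overwrites the previous figures for that id and band
    conn.executemany("INSERT OR REPLACE INTO polygon_stats VALUES (?, ?, ?, ?)", rows)
    conn.commit()

# Usage with an in-memory database and made-up statistics
conn = sqlite3.connect(":memory:")
store_statistics(conn, {"1001": {"red": {"mean": 95.0, "stdev": 12.5},
                                 "green": {"mean": 130.0, "stdev": 9.0}}})
print(conn.execute("SELECT * FROM polygon_stats ORDER BY band").fetchall())
```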

Even though a specific analysis tool (in the form of a standalone executable) is not presented in this study, it is possible to create one and add to the existing body of open source work. OpenEV, for example, allows newly created functions to be added using Python. This programming language allows a user to interface with GIS applications written in C and has the potential to be a flexible means of accessing C libraries (e.g. accessing GDAL from OpenEV). It has been used to build software such as GeoDjango, Thuban, OpenEV, pyTerra and AVPython. The language itself is not used in this thesis, as it would have added another layer to the process, but it provides a possible means of packaging an extended experiment.

One of the advantages of using Python to prepare a GIS application is that the assignment of a variable does not have to declare whether it holds a string, number, list etc. Variable names are, however, case sensitive, and follow the ESRI convention of combining lower and upper case, beginning with lower case. An abbreviation comes at the beginning of the name, and the descriptive part follows, beginning with an upper case letter (e.g. htElev). In addition to modules such as math and string, Python also has several geoprocessing modules. One of these, arcgisscripting, gives access to all the ArcToolbox tools. It should be noted that the geoprocessing object is accessed differently depending on the version of ArcGIS being used. Another package, gdal (which wraps the Geospatial Data Abstraction Library used in this thesis), allows this library to be manipulated from Python; the module connects to the underlying GDAL code (written in C and C++) through an interface generated by SWIG. An example of Python in use in GIS is its application alongside ArcGIS. ArcGIS was built using hundreds of ArcObjects such as "featureClass", "symbol" and "field", each of which has properties and methods accessed by Python using dot notation. An example of this notation is the assignment tr = arcgisscripting.create().

Another possible method for implementing the process suggested in this study is the .NET platform. In particular, VB.NET provides a programming language that can be used to access GeoMedia, a .NET-oriented group of geographic software packages provided by Intergraph. This software allows the user to interact with ESRI shapefiles and with spatial databases created using Oracle Spatial. It also allows developed tools to tie in with graphical editing platforms such as AutoCAD and Microstation, which would allow both images and associated geospatial data to be interpreted. Given an appropriate licence, the developer could also access specific ancillary products for database management (for Oracle-based databases) and others ranging from map production to 3D modeling. VB.NET was not used in this thesis because of the licensing issues that might have been involved.

This thesis suggests procedures which lend themselves to C, as they involve repeated loops of processor-heavy steps, from the pixel analysis to the classification of the polygons identified by the user within the region of interest. In general terms, of the programming languages used in GIS development (and aerial image analysis), C is the most widely used to interpret geographic information. Many libraries such as MITAB and Shapelib use C as a means of accessing geographical data. One of the advantages of C is that processor-heavy functions, such as analyzing pixels in order to categorize them into shades and variations from a mean, are best implemented in this language. This is probably most evident from the fact that most of the open source projects, such as ImageTool and GDAL, have been written in C or C++. This thesis makes use of a small part of these libraries and so in turn uses C. This is not to suggest that C should necessarily be the default programming language for this type of study, but that, in order to make use of the available body of knowledge, previous studies can probably be best extended using C. It is important to note that the main problems encountered when analyzing aerial data and imagery are those of coordinating the imagery so that it can be referenced and analyzed properly. The three main coordinate systems used for this are geographic, projected and pixel.

This thesis uses a mixture of pixel and geographic coordinates. The fact that both are needed for the relatively simple cutting and analysis of image segments demonstrates the importance, in GIS programming, of being able to transform coordinates. Geographic coordinates refer to latitude and longitude, projected coordinates refer to a flat two-dimensional coordinate structure (such as ITM eastings and northings), and pixel coordinates refer to the column and row of a pixel within the image. The C programming language, through the available libraries, provides an accurate means of executing these coordinate transformations.

Note: Other options are available, such as the modules in .NET, and coordinate transformation can be achieved in any programming language once the correct mathematical functions are accessed.
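The pixel-to-projected step can be written down explicitly. GDAL describes this relationship with a six-value geotransform GT, where X = GT[0] + col * GT[1] + row * GT[2] and Y = GT[3] + col * GT[4] + row * GT[5]. The sketch below applies that relationship and inverts it in plain Python. The tile values are made up. Converting between ITM and latitude/longitude would additionally need a projection library such as PROJ, which is not shown.

```python
def pixel_to_world(gt, col, row):
    """Pixel (column, row) to projected (e.g. ITM) coordinates."""
    return (gt[0] + col * gt[1] + row * gt[2],
            gt[3] + col * gt[4] + row * gt[5])

def world_to_pixel(gt, x, y):
    """Projected coordinates back to pixel (column, row) by inverting the 2x2 part."""
    det = gt[1] * gt[5] - gt[2] * gt[4]
    if det == 0:
        raise ValueError("geotransform is not invertible")
    dx, dy = x - gt[0], y - gt[3]
    return ((gt[5] * dx - gt[2] * dy) / det,
            (-gt[4] * dx + gt[1] * dy) / det)

# Usage: north-up tile with 25 cm pixels whose top-left corner is at
# ITM (500000, 700000); the values are made up for illustration
gt = (500000.0, 0.25, 0.0, 700000.0, 0.0, -0.25)
x, y = pixel_to_world(gt, 400, 200)
print(x, y, world_to_pixel(gt, x, y))
```

The rotation terms GT[2] and GT[4] are zero for north-up imagery, but keeping them in the inverse means the same functions also work for rotated rasters.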

Another factor which needed to be considered while reviewing programming languages for this thesis was the limitation imposed by the programming experience of the author. Ideally, the preferred solution would be a processing algorithm written in C with a Visual Basic front end, which could also tie into OSI metadata. This could then be extended to allow a user to zoom in on a map window, identify a subject area, review the available photography and target a selected study area. This is possible using existing systems but falls outside what could reasonably be achieved with the resources available for the study. The main purpose of the study is to determine whether the suggested methodology is applicable and whether useful data can be returned from this type of study. The degree of success indicates the feasibility of tailoring an application.

Further developing the already well-researched methods of analyzing imagery, in terms of aggregating the pixels and deriving statistical data from a selected tile of aerial photography, would bring limited benefit, because a vast body of knowledge dealing with the subject is already available. In particular I am referring to work such as ImageTool, which, as noted earlier, was developed at the University of Texas, is written in C++ and is open source. It (along with several other open source image processing projects) effectively interprets imagery in terms of its spectral content. As suggested earlier, one of the most important aspects of viewing and studying the surface of the earth remotely is the way it is projected, that is, projecting it into a format which can give valuable information to the user, and this is where a large part of this study will focus. The study takes a captured and referenced coordinate grouping (set of data points) and uses it to analyze sections of the earth. These coordinate groupings are definite points along fixed boundaries which form physical barriers such as walls, streams, buildings and roads. They could probably be better explained in terms of a bull in a field: in general terms, any polygon which would prevent the bull from escaping forms a parcel, which is then analyzed. This means that the study areas are bounded by a series of fixed vectors/polylines which are unlikely to change over time, allowing the suggested algorithm to be run over successive years of data capture. With a standard mean and control key for spectral values, it should be possible to gain an insight into changes in land use in the specific semi-urban areas looked at in the study.

The study areas could possibly be extended to rural areas over time. The study does not extend to these areas because it would then have to account for a much larger study area and less well-defined boundaries. This would probably only be done effectively using tried and tested values derived from more fixed polygons (i.e. without fuzzy data or hazy boundaries where pixels grade into one another and man-made features such as fences and walls are absent).


The start of this study contains a glossary of terms, which are probably familiar to most readers, and the next three chapters refer to some terms which are specific to this type of analysis. The first term is aerial imagery, which refers to all the spectral data obtained during the study. When described as aerial imagery or raster polygons, the reference is to an aerial image corrected for distortions such as slopes so that it corresponds to the vector mapping. The second recurring term is the vector data, which refers to Ordnance Survey data captured through a mix of photogrammetry and field surveying. Although most readers are probably familiar with the TIFF file format, it is worth noting that the input photography files for the study are in the GeoTIFF format. This format complies with the TIFF 6.0 standard, which gives the input data the flexibility to be opened in a wide range of programs and allowed the imagery to be viewed outside the study software as the work was undertaken. The key metadata components of this file format for this study are the georeferencing coordinates, which allow the sections being analyzed to be located. This format is also recognized by the GDAL library used in the study. The projection used in all the files in the study is ITM. This is not vital to the success of the algorithm, but using an additional projection would require a transform function wherever the datasets intersect. The following three chapters form a chronological record of the study, starting with the suggested algorithm (Chapter 2), followed by the sampling necessary as the basis of the procedure (Chapter 3) and finishing with a test on known polygons for specific search values (Chapter 4).


2 Stepping through the Algorithm

This thesis introduces a method for analyzing aerial imagery that can be translated into a procedure and run automatically. The operation is specific to two types of data:

• Digital vector files from the Ordnance Survey.
• Controlled aerial photography stored as GeoTiff files.

Both of these data sources use the ITM projection and are referenced during the study. The premise of the study is that it is possible to automatically capture additional information about area polygons from aerial photography, using previously captured vector polygons as a guide. It is an attempt to fill in the blanks in terms of polygon attributes not included in the photogrammetry from which the vector data was derived. The primary focus is not to obtain an accurate list of all polygons from the sample data but to identify a verifiable method for doing so automatically. As the focus is on the identification of methods, the process outlined can be extended to searches for specific spectral qualities; in other words, someone searching for a particular crop type might employ the algorithm here but add a target set of data specific to their work. In short, what follows is an attempt to take the two data sets mentioned above (photography and vector data), combine them and return a new set of information derived from both. The process does not merge the data sources but uses the vector data (a large portion of which was derived from the photography) as a reference to cut segments from the imagery and treat these segments as smaller, manageable pixel collections for analysis. This process is helped by the fact that the content of many of these polygons is known and has been coded to the vector data.

The sampling part of the thesis attempted to identify specific spectral qualities of these known polygons that could then be used as a reference for the unknown areas. This had a reasonable level of success, with some polygon types making a more useful reference than others; these are described in the sampling section of this study. Automated aerial image analysis generally focuses on determining the values of the imagery from scratch (for an example of this type of work, see Thomas Knudsen's 2005 study on aerial image analysis). What is unique about this study is that it uses previously captured data as a basis for further image interpretation. Based on research into the data and contact with Ordnance Survey, this has not been attempted before for Irish digital spatial data. All of the difficult remote sensing is complete (via the vector mapping) before this analysis begins: control points, physical boundaries and closed polygons have all been identified. This study presents a method for developing software that extends the work already completed and helps users identify specific traits in what would otherwise be an impossibly large store of imagery for the human eye to analyze (without using a team of trained analysts). The algorithm proposed here is essentially a way of looking for spectral values in small area polygons and comparing them to known values.
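The core comparison, summarizing the pixels of one small polygon and checking them against known values, might look like the following sketch. It assumes the pixels arrive as (R, G, B) tuples already clipped to the polygon and that the summary is the per-band mean and population standard deviation. The reference means and the tolerance of 10 are invented for illustration and are not values measured in this study.

```python
from statistics import mean, pstdev


def band_summary(pixels):
    """Per-band (mean, population standard deviation) for (R, G, B) pixels."""
    bands = list(zip(*pixels))  # -> (reds, greens, blues)
    return [(mean(b), pstdev(b)) for b in bands]


def matches_known(summary, known_means, tolerance):
    """True if every band mean lies within `tolerance` of the known mean."""
    return all(abs(m - k) <= tolerance for (m, _), k in zip(summary, known_means))


# Hypothetical pixels clipped from one field polygon
pixels = [(92, 128, 70), (95, 131, 72), (90, 126, 69), (93, 129, 73)]
summary = band_summary(pixels)

# Hypothetical reference means for a known cover type (illustrative only)
known_pasture = (94, 130, 71)
print(matches_known(summary, known_pasture, tolerance=10))  # True
```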

The process outlined is for people intending to scan aerial imagery of Ireland for specific spectral properties, and is intended as an additional facility for users of aerial photography. At present it is possible to conduct this kind of research using the photography and a GIS tool; this algorithm is intended to make the process accessible to users who lack the time, the resources or the software licences to do so. It can be used to identify specific land use types outside those currently captured by Ordnance Survey digital mapping, and can serve as an add-on tool for anyone using that type of data. The purpose in compiling the data and researching software for the study was to outline a method, so how the method is applied depends on the user. In this study much emphasis was placed on the identification of pasture, because it is the major form of land use in rural and peri-urban areas and identifying it correctly narrows the search for other types of cover to a relatively small number of polygons. In a similar way, someone could take the same steps (identifying unique statistical properties of the pixel count in the types of area being studied) and add them to the algorithm. This would involve adding the new search to the cycle of flagged polygons at the end of the third part of the search execution.
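Adding a new search to the cycle of flagged polygons could be as simple as appending a test to an ordered list. The sketch below assumes each flagged polygon has already been reduced to a dictionary of band statistics. The pasture rule, the user-supplied crop rule, all thresholds and the names `SEARCHES` and `run_searches` are hypothetical.

```python
def looks_like_pasture(stats):
    # Hypothetical rule: green dominant and low variation (illustrative thresholds)
    return stats["g_mean"] > stats["r_mean"] + 20 and stats["g_std"] < 8


def looks_like_target_crop(stats):
    # Hypothetical user-defined search appended to the cycle
    return stats["r_mean"] > 120 and stats["g_std"] < 12


# Searches are tried in order; pasture first, as it is the most common cover.
SEARCHES = [("pasture", looks_like_pasture), ("target crop", looks_like_target_crop)]


def run_searches(flagged, searches):
    """Assign the first matching label to each flagged polygon.

    Returns (labels, still_flagged); polygons that no search matched stay flagged.
    """
    labels, still_flagged = {}, []
    for poly_id, stats in flagged.items():
        for label, test in searches:
            if test(stats):
                labels[poly_id] = label
                break
        else:
            still_flagged.append(poly_id)
    return labels, still_flagged


flagged = {
    "P1": {"r_mean": 90, "g_mean": 130, "g_std": 5},
    "P2": {"r_mean": 140, "g_mean": 135, "g_std": 9},
    "P3": {"r_mean": 100, "g_mean": 105, "g_std": 25},
}
labels, remaining = run_searches(flagged, SEARCHES)
print(labels)     # {'P1': 'pasture', 'P2': 'target crop'}
print(remaining)  # ['P3']
```

Ordering the list puts the most common cover first, so later, more specialised searches only see the polygons that pasture did not claim.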


The process can also be coded into a standalone application or as an extension of existing software for a specific use (such as searching for crop disease). One example is the Python-based raster viewer OpenEV: a user analyzing aerial photography with the package could extend its functionality to analyze statistical data using the GDAL library. A user concerned with a specific set of spectral values, or wanting to confine the research to a specific polygon type within the image, could use the methods set out here to make the statistical function return target data only (as opposed to applying the histogram function to the whole image). In broad terms this study is for users of aerial raster imagery, and the results of the sampling are based on samples of Irish data. It may be possible to carry out similar studies for other regions, but small, well-defined polygon types with clear boundaries that remain consistent over long periods of time are a vital part of the analysis. This is probably a result of relatively small property divisions and rigorous maintenance of boundaries over hundreds of years, and may be unique to Ireland. In short, the study looks at a possible coded routine to analyze the Irish landscape using all the available data.
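Confining the statistics to one polygon type, rather than taking the histogram of the whole image, can be sketched as follows. In OpenEV the band values might be read through GDAL (for example with `ReadAsArray()`); here, to keep the sketch self-contained, the band is a plain list of rows. The per-pixel grid of polygon codes is an assumed input (a rasterized copy of the vector polygons' codes), and the code values and bin width are hypothetical.

```python
from collections import Counter


def histogram(values, bin_width):
    """Count values into bins of `bin_width`, keyed by each bin's lower bound."""
    return Counter((v // bin_width) * bin_width for v in values)


def target_histogram(band, codes, target_code, bin_width=16):
    """Histogram of only those pixels whose polygon code equals target_code."""
    values = [
        value
        for band_row, code_row in zip(band, codes)
        for value, code in zip(band_row, code_row)
        if code == target_code
    ]
    return histogram(values, bin_width)


band = [
    [30, 32, 140, 142],
    [31, 33, 138, 150],
]
# Hypothetical codes: 1 = water polygon, 2 = field polygon
codes = [
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
whole = histogram([v for row in band for v in row], 16)
fields_only = target_histogram(band, codes, target_code=2)
print(sorted(whole.items()))        # [(16, 2), (32, 2), (128, 3), (144, 1)]
print(sorted(fields_only.items()))  # [(128, 3), (144, 1)]
```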

As mentioned in the previous paragraph, this study is intended for users of aerial photography. The prerequisites are that the photography is controlled and has its projection embedded in the file, and that users have access to Ordnance Survey vector data. Beyond these conditions, the algorithm is intended as much for users without a strong background in information technology as for those with a good knowledge of code who could easily convert the proposed steps into routines. The open source software described in the study has a user interface familiar from any GIS package (standard toolbars, zoom, measurement etc.), and it is possible to run the algorithm without altering any of the steps. Ideally, however, the steps would be converted into an add-on to software already in use (ArcView, MicroStation etc.) so that the user can quickly run through large amounts of data. In this way the routine is designed for anyone interested in targeting specific properties of Irish topography that can be defined in terms of their spectral values, in fields ranging from forestry and agriculture to urban planning. The limitations of the study lie in the quality of the imagery, and it was shown that some potential applications of spectral analysis would not return accurate results. A study of sediment levels in drains or canals, for example, could not be developed using the methods outlined here because of the difficulty of obtaining a large enough sample to train the image key.
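The requirement that the photography be controlled, with its projection embedded, matters because it is what lets polygon vertices from the vector data be turned into pixel positions. A minimal sketch, assuming a north-up image and the six-coefficient affine geotransform layout used by GDAL (origin x, pixel width, row rotation, origin y, column rotation, pixel height). The example coefficients and boundary points are hypothetical, and rotated images are deliberately not handled.

```python
def world_to_pixel(x, y, geotransform):
    """Convert map coordinates to (col, row) for a north-up image.

    geotransform follows the GDAL six-value layout:
    (origin_x, pixel_width, 0, origin_y, 0, pixel_height), with pixel_height
    negative because rows count downwards from the top edge.
    """
    origin_x, pixel_width, rot_x, origin_y, rot_y, pixel_height = geotransform
    if rot_x or rot_y:
        raise ValueError("rotated images are not handled in this sketch")
    col = (x - origin_x) / pixel_width
    row = (y - origin_y) / pixel_height
    return col, row


# Hypothetical 0.25 m orthophoto whose top-left corner is at (530000, 735000)
gt = (530000.0, 0.25, 0.0, 735000.0, 0.0, -0.25)
boundary = [(530010.0, 734990.0), (530020.0, 734990.0), (530020.0, 734980.0)]
print([world_to_pixel(x, y, gt) for x, y in boundary])
# [(40.0, 40.0), (80.0, 40.0), (80.0, 80.0)]
```

Without an embedded projection and geotransform there is no reliable way to make this conversion, which is why uncontrolled imagery falls outside the method.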

The method outlined works on small area polygons, so it can be applied to land use types across most of the country, with the exception of fully urban areas and remote mountain areas (where the small land divisions are not found). It should be noted that the target areas described refer to peri-urban data. This is because fully urban areas are covered by large-scale 1:1000 mapping, and spectral analysis would not improve on the available data (outside of highly specialized heat radiation studies etc., which are not the intended use of this method). The method could also be used by someone seeking to trace patterns in land use over recent decades. Such a study would be confined to RGB photography, because a comparative analysis of the pixel count properties in each colour band is a requirement of the process. Once the proposed key is calibrated for a particular run of photography, the algorithm could be set to run over a specified region of interest across the period when this type of photography was available.
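Once each run of photography has been classified with its own calibrated key, tracing land use over the decades reduces to comparing the label each polygon received in successive runs. The sketch assumes those per-run classifications already exist as dictionaries keyed by polygon identifier; the identifiers, years and labels are hypothetical.

```python
def land_use_changes(runs):
    """List (polygon, earlier_year, later_year, old_label, new_label) changes.

    runs: dict mapping year -> {polygon_id: label}. Only polygons classified
    in both of two consecutive runs are compared.
    """
    years = sorted(runs)
    changes = []
    for earlier, later in zip(years, years[1:]):
        before, after = runs[earlier], runs[later]
        for poly_id in sorted(before.keys() & after.keys()):
            if before[poly_id] != after[poly_id]:
                changes.append((poly_id, earlier, later, before[poly_id], after[poly_id]))
    return changes


runs = {
    1995: {"P1": "pasture", "P2": "pasture", "P3": "forestry"},
    2000: {"P1": "pasture", "P2": "tillage", "P3": "forestry"},
    2005: {"P1": "built", "P2": "tillage"},
}
for change in land_use_changes(runs):
    print(change)
# ('P2', 1995, 2000, 'pasture', 'tillage')
# ('P1', 2000, 2005, 'pasture', 'built')
```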

This differs from previous studies of automatic aerial photography analysis in two ways. Firstly, the focus is specific to Irish Ordnance Survey data and concentrates on making use of the codes and known values that can be extracted from it. Secondly, the study uses small area polygons to confine the spectral analysis of the imagery to relatively small sample areas, which reduces the difficulty posed by the variation in pixel values found in large samples. In this respect the process is unusual, as it takes the small area polygons as a guide and cuts the matching areas from the raster aerial imagery. This allows automatic decisions to be made about the level of standard deviation present in each sample. In many ways this simplifies image interpretation, because most of the difficult image control work is already complete and the software can focus on variations specific to the ground cover being studied. While this limits the extension of the work to other (general small-scale) datasets, it does outline a method for automating the search of imagery. Over the course of the last two years of this Master's study I have learned how users can interrogate and manipulate data in large databases.
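Why small sample areas help, and how they permit an automatic decision on standard deviation, can be shown numerically. Pooling the pixels of two uniform fields of different cover gives a much larger standard deviation than either field alone, so a threshold on the standard deviation separates homogeneous polygons from mixed ones. The pixel values and the threshold of 10 below are hypothetical.

```python
from statistics import pstdev

UNIFORM_STD_LIMIT = 10  # hypothetical threshold, to be tuned per run of photography


def is_uniform(values, limit=UNIFORM_STD_LIMIT):
    """True if a polygon's pixel values vary little (a single cover type is likely)."""
    return pstdev(values) < limit


cut_field = [120, 122, 119, 121, 123, 120]  # green band values, hypothetical
dark_field = [60, 62, 59, 61, 63, 60]

print(is_uniform(cut_field), is_uniform(dark_field))  # True True
print(is_uniform(cut_field + dark_field))             # False
```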


Aerial imagery is a form of database. Once it is structured into tables it can be interrogated for spectral properties just like vector data in a spatial database. By using the boundary points from the vector data, the imagery is converted into manageable sections whose properties can be determined and logged. This subdivision of the image into a mosaic of areas makes the job of analyzing the image easier: it starts with known polygons, moves on to polygons that can be easily classified (those that differ strongly from the other known values and have a low level of standard deviation, such as cut pasture), and flags any whose values fall outside the image key. To the best of my knowledge, this subdividing of raster imagery has not been attempted with Irish Ordnance Survey data and aerial photography; I have conducted a search of research papers, and similar work had not been undertaken within Ordnance Survey. The focus of the study is on demonstrating that this method is practical and can be applied to a variety of area types. The methods suggested by this study are specific to the area divisions and available vector data, and present the steps necessary to train an image key to look for specific properties in the Irish landscape.
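Treating the imagery as a database can be taken literally: once each polygon's spectral summary is logged to a table, the staged mosaic of known polygons, easily classified polygons and flags becomes a few queries. The sketch uses Python's built-in `sqlite3` module with an in-memory database. The table layout, the feature codes and the pasture thresholds are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE polygon_stats ("
    " poly_id TEXT PRIMARY KEY, known_code TEXT,"
    " r_mean REAL, g_mean REAL, b_mean REAL, g_std REAL, label TEXT)"
)
rows = [
    # poly_id, known_code (None = unknown use), R/G/B means, green std
    ("P1", "water", 40, 55, 70, 4.0),
    ("P2", None, 90, 130, 70, 5.0),
    ("P3", None, 100, 105, 95, 25.0),
]
conn.executemany(
    "INSERT INTO polygon_stats (poly_id, known_code, r_mean, g_mean, b_mean, g_std)"
    " VALUES (?, ?, ?, ?, ?, ?)",
    rows,
)

# Stage 1: polygons whose use is already coded in the vector data
conn.execute("UPDATE polygon_stats SET label = known_code WHERE known_code IS NOT NULL")

# Stage 2: easily classified polygons (hypothetical pasture rule:
# green well above red and a low standard deviation)
conn.execute(
    "UPDATE polygon_stats SET label = 'pasture'"
    " WHERE label IS NULL AND g_mean > r_mean + 20 AND g_std < 8"
)

# Stage 3: anything still unlabelled is flagged for further searches
conn.execute("UPDATE polygon_stats SET label = 'flagged' WHERE label IS NULL")

print(conn.execute("SELECT poly_id, label FROM polygon_stats ORDER BY poly_id").fetchall())
# [('P1', 'water'), ('P2', 'pasture'), ('P3', 'flagged')]
```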

The process works by taking the point data for the polygons in the vector data that covers an area of an image, using this point data to crop the part of the image each polygon represents, and logging the pixel values for that area. This is repeated for every area in the region of interest. The logged values are then compared to an image key, and areas are classified according to the presence of values specific to the key. One such key was developed during this thesis, but it could be recalibrated for higher values: for example, a higher mean for water bodies in a separate run of photography would increase the key values by that amount. The key holds known values (water, forestry, roads etc.) and the proportional difference between these known values and search values (such as pasture), expressed in terms of the mean pixel count in the red, green and blue colour bands and the levels of standard deviation. This difference is measured against the histogram values of cropped polygons of unknown use, and a category is applied where they match. In other words, the process steps through locating, cutting and analyzing small areas of the image to enhance the available data and search for specific values across the whole image.
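An image key and its recalibration might be sketched as below. The sketch assumes the key stores, for each cover type, reference means for the red, green and blue bands and a maximum standard deviation, and that recalibrating for a new run of photography shifts every key value by the difference between the water means observed in that run and those held in the key, as described above. For brevity the matching uses absolute band differences (nearest entry within a tolerance) rather than the proportional differences of the study's key. All numbers, the tolerance and the function names are hypothetical.

```python
# Hypothetical key: per cover type, (R, G, B) means and a maximum standard deviation
KEY = {
    "water": {"means": (40, 55, 70), "max_std": 6},
    "forestry": {"means": (45, 75, 50), "max_std": 12},
    "pasture": {"means": (90, 130, 70), "max_std": 8},
}


def recalibrate(key, observed_water_means):
    """Shift every key mean by the change in water means for a new photo run."""
    shift = [o - k for o, k in zip(observed_water_means, key["water"]["means"])]
    return {
        cover: {
            "means": tuple(m + s for m, s in zip(entry["means"], shift)),
            "max_std": entry["max_std"],
        }
        for cover, entry in key.items()
    }


def classify(means, std, key, tolerance=10):
    """Return the closest key entry within tolerance, or None (flag the polygon)."""
    best, best_dist = None, None
    for cover, entry in key.items():
        # Largest absolute difference across the three bands
        dist = max(abs(m - k) for m, k in zip(means, entry["means"]))
        if dist <= tolerance and std <= entry["max_std"]:
            if best_dist is None or dist < best_dist:
                best, best_dist = cover, dist
    return best


# New run: water polygons average 5 higher in every band than in the key
key_2005 = recalibrate(KEY, observed_water_means=(45, 60, 75))
print(key_2005["pasture"]["means"])                   # (95, 135, 75)
print(classify((97, 133, 77), std=5, key=key_2005))   # pasture
print(classify((97, 133, 77), std=20, key=key_2005))  # None
```

A polygon that returns `None` is left for the flagged-polygon searches rather than forced into the nearest category.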


2.1 Initial Inputs

The following four sections describe the work as a series of steps through the proposed algorithm. This first section introduces the initial inputs required to define the region of interest to be analyzed.

This study attempts to find an automatic method for image analysis using vector data as a reference, in particular small area polygons and their associated coding. At the beginning of the proposed algorithm, the user is required to input a region of interest for the process, corresponding to the geographical area in which they are interested. The most convenient way to do this is to select the area manually from vector data or photography (or a combination of both displayed together) in a window on a PC. The result should be a set of co-ordinates from which the study area can be extracted.
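Once the region of interest has been drawn, the co-ordinates it returns can be used to pick out the polygons to process. A minimal sketch, assuming the region is an axis-aligned rectangle (min_x, min_y, max_x, max_y) in the same map co-ordinates as the vector data, and that a polygon is kept if its bounding box overlaps that rectangle. The polygon identifiers and co-ordinates are hypothetical.

```python
def bounding_box(vertices):
    """(min_x, min_y, max_x, max_y) of a list of (x, y) vertices."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return min(xs), min(ys), max(xs), max(ys)


def boxes_overlap(a, b):
    """True if two (min_x, min_y, max_x, max_y) boxes share any area or edge."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def polygons_in_region(polygons, region):
    """IDs of polygons whose bounding box overlaps the region of interest."""
    return [
        pid for pid, verts in polygons.items()
        if boxes_overlap(bounding_box(verts), region)
    ]


polygons = {
    "P1": [(100, 100), (150, 100), (150, 160)],
    "P2": [(400, 400), (450, 400), (450, 450)],
    "P3": [(190, 50), (260, 50), (260, 120)],
}
region = (0, 0, 200, 200)  # co-ordinates returned by the user's selection
print(polygons_in_region(polygons, region))  # ['P1', 'P3']
```

A bounding-box test is a cheap first filter; polygons that straddle the edge (such as P3) are kept whole here, and a stricter clip could be applied afterwards if needed.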

The user also needs to input a sample target area for the study. This can be one of a set of values developed in this study, or it may take the form of a particular variation (such as a distinct type of crop). In the second case a sample of the required value is needed. This can be obtained in the same way as the first part of