Automatic Mapping Clinical Notes to Medical - RMIT University

Automatic Mapping Clinical Notes to Medical - RMIT University

Automatic Mapping Clinical Notes to Medical - RMIT University

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

formation was determined using the Connexor<br />

parser. This information was used <strong>to</strong><br />

identify the main utterance subject where dependency<br />

information was not available.<br />

• Morphology: Morphological tags, also generated<br />

by Connexor, were used <strong>to</strong> distinguish<br />

between first and third person pronouns, as<br />

well as between singular and plural forms of<br />

first person pronouns. Additionally, we used<br />

morphological tags from Connexor <strong>to</strong> identify<br />

imperative verbs.<br />

• Hand-constructed word lists: Several of the<br />

features used relate <strong>to</strong> closed sets of common<br />

lexical items (e.g., verbs of permission,<br />

interrogative words, variations of “yes” and<br />

“no”). For these features, we employ handconstructed<br />

simple lists, using online thesauri<br />

<strong>to</strong> expand our lists from an initial set of seed<br />

words. While some of the lists are not exhaustive,<br />

they seem <strong>to</strong> help our results and<br />

involved only a small amount of effort; none<br />

<strong>to</strong>ok more than an hour <strong>to</strong> construct.<br />

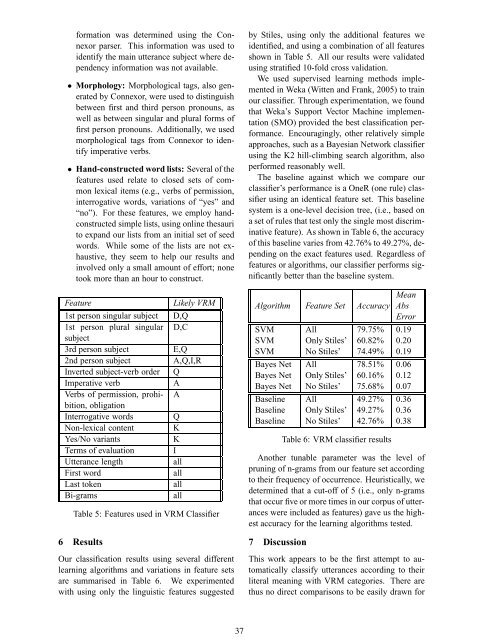

Feature Likely VRM<br />

1st person singular subject D,Q<br />

1st person plural singular<br />

subject<br />

D,C<br />

3rd person subject E,Q<br />

2nd person subject A,Q,I,R<br />

Inverted subject-verb order Q<br />

Imperative verb A<br />

Verbs of permission, prohibition,<br />

obligation<br />

A<br />

Interrogative words Q<br />

Non-lexical content K<br />

Yes/No variants K<br />

Terms of evaluation I<br />

Utterance length all<br />

First word all<br />

Last <strong>to</strong>ken all<br />

Bi-grams all<br />

Table 5: Features used in VRM Classifier<br />

6 Results<br />

Our classification results using several different<br />

learning algorithms and variations in feature sets<br />

are summarised in Table 6. We experimented<br />

with using only the linguistic features suggested<br />

37<br />

by Stiles, using only the additional features we<br />

identified, and using a combination of all features<br />

shown in Table 5. All our results were validated<br />

using stratified 10-fold cross validation.<br />

We used supervised learning methods implemented<br />

in Weka (Witten and Frank, 2005) <strong>to</strong> train<br />

our classifier. Through experimentation, we found<br />

that Weka’s Support Vec<strong>to</strong>r Machine implementation<br />

(SMO) provided the best classification performance.<br />

Encouragingly, other relatively simple<br />

approaches, such as a Bayesian Network classifier<br />

using the K2 hill-climbing search algorithm, also<br />

performed reasonably well.<br />

The baseline against which we compare our<br />

classifier’s performance is a OneR (one rule) classifier<br />

using an identical feature set. This baseline<br />

system is a one-level decision tree, (i.e., based on<br />

a set of rules that test only the single most discriminative<br />

feature). As shown in Table 6, the accuracy<br />

of this baseline varies from 42.76% <strong>to</strong> 49.27%, depending<br />

on the exact features used. Regardless of<br />

features or algorithms, our classifier performs significantly<br />

better than the baseline system.<br />

Mean<br />

Algorithm Feature Set Accuracy Abs<br />

Error<br />

SVM All 79.75% 0.19<br />

SVM Only Stiles’ 60.82% 0.20<br />

SVM No Stiles’ 74.49% 0.19<br />

Bayes Net All 78.51% 0.06<br />

Bayes Net Only Stiles’ 60.16% 0.12<br />

Bayes Net No Stiles’ 75.68% 0.07<br />

Baseline All 49.27% 0.36<br />

Baseline Only Stiles’ 49.27% 0.36<br />

Baseline No Stiles’ 42.76% 0.38<br />

Table 6: VRM classifier results<br />

Another tunable parameter was the level of<br />

pruning of n-grams from our feature set according<br />

<strong>to</strong> their frequency of occurrence. Heuristically, we<br />

determined that a cut-off of 5 (i.e., only n-grams<br />

that occur five or more times in our corpus of utterances<br />

were included as features) gave us the highest<br />

accuracy for the learning algorithms tested.<br />

7 Discussion<br />

This work appears <strong>to</strong> be the first attempt <strong>to</strong> au<strong>to</strong>matically<br />

classify utterances according <strong>to</strong> their<br />

literal meaning with VRM categories. There are<br />

thus no direct comparisons <strong>to</strong> be easily drawn for