APPROXIMATION

The fulfilment of the last two equations is only a necessary condition for a minimum. However, it is possible to show that we have obtained a minimum.

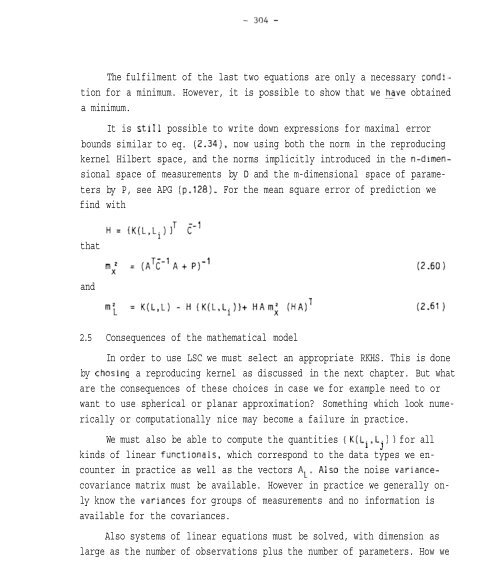

It is still possible to write down expressions for maximal error bounds similar to eq. (2.34), now using both the norm in the reproducing kernel Hilbert space and the norms implicitly introduced by D in the n-dimensional space of measurements and by P in the m-dimensional space of parameters; see APG (p. 128). For the mean square error of prediction we find, with

that

and

2.5 Consequences of the mathematical model

In order to use LSC we must select an appropriate RKHS. This is done by choosing a reproducing kernel, as discussed in the next chapter. But what are the consequences of these choices if, for example, we need or want to use a spherical or planar approximation? Something which looks numerically or computationally attractive may turn out to be a failure in practice.

We must also be able to compute the quantities $\{K(L_i, L_j)\}$ for all kinds of linear functionals corresponding to the data types we encounter in practice, as well as the vectors $A_L$. The noise variance-covariance matrix must also be available. In practice, however, we generally only know the variances for groups of measurements, and no information is available for the covariances.
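As a minimal sketch of these two ingredients, assume that every $L_i$ is a plain point evaluation in the plane and that the covariance function is a Gaussian, $K(x,y) = C_0 \exp(-d^2/\ell^2)$. The kernel and the helper names `gaussian_kernel`, `kernel_matrix` and `noise_matrix` are hypothetical choices for illustration, not the kernels discussed in the next chapter. The noise matrix D is built as the text describes: one variance per group of measurements, with all covariances set to zero.

```python
import numpy as np


def gaussian_kernel(x, y, c0=1.0, length=1.0):
    """Hypothetical isotropic planar covariance K(x, y) = c0 * exp(-d^2 / length^2)."""
    d2 = np.sum((np.asarray(x) - np.asarray(y)) ** 2)
    return c0 * np.exp(-d2 / length**2)


def kernel_matrix(points, kernel):
    """Matrix {K(L_i, L_j)}, assuming every L_i is a point evaluation at points[i]."""
    n = len(points)
    K = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            K[i, j] = kernel(points[i], points[j])
    return K


def noise_matrix(group_sizes, group_variances):
    """Diagonal D: one variance per group of measurements, covariances assumed zero."""
    diag = np.repeat(np.asarray(group_variances, dtype=float), group_sizes)
    return np.diag(diag)


if __name__ == "__main__":
    # Three observation points: the first two form one group, the third another.
    pts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
    K = kernel_matrix(pts, gaussian_kernel)
    D = noise_matrix([2, 1], [0.01, 0.04])
    C = K + D
    # Raises numpy.linalg.LinAlgError if C is not positive definite.
    np.linalg.cholesky(C)
    print(np.diag(D))
```

Attempting a Cholesky factorization of $K + D$ is a cheap check that the system matrix is positive definite before it is solved. A planar Gaussian kernel is exactly the kind of computationally convenient choice that may not be adequate when the data actually lie on the sphere.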

Furthermore, systems of linear equations must be solved, with dimension as large as the number of observations plus the number of parameters. How we