PhD Thesis Semi-Supervised Ensemble Methods for Computer Vision



Expectation Maximization In generative SSL methods, one of the most frequent optimization<br />

methods used is the expectation maximization (EM) algorithm. If the training<br />

data given is D = D L ∪D U , then the missing (hidden) variables are H = {y l+1 , . . . , y l+u }.<br />

The EM algorithm is an iterative method to find the model parameters θ that locally maximize<br />

p((D)|θ). EM in each iteration consists of two steps, an expectation step (E-step)<br />

and a maximization step (M-step). It keeps a distribution q t (H) over the hidden variables.<br />

In practice, EM was used <strong>for</strong> many SSL problems, e.g., text classification [Nigam et al.,<br />

2006], etc.. However, since it is a local optimizer, it can get stuck in local minima. We<br />

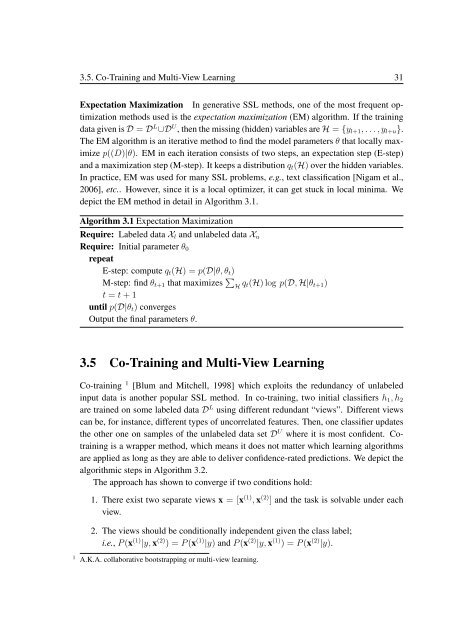

depict the EM method in detail in Algorithm 3.1.<br />

Algorithm 3.1 Expectation Maximization

Require: Labeled data $X_l$ and unlabeled data $X_u$

Require: Initial parameters $\theta_0$

repeat

E-step: compute $q_t(H) = p(H \mid D, \theta_t)$

M-step: find $\theta_{t+1}$ that maximizes $\sum_H q_t(H) \log p(D, H \mid \theta_{t+1})$

$t = t + 1$

until $p(D \mid \theta_t)$ converges

Output the final parameters $\theta_t$.
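The steps of Algorithm 3.1 can be made concrete with a small sketch. The example below is an illustration under stated assumptions, not the implementation used in this thesis: it assumes a one-dimensional Gaussian class-conditional model with one component per class, estimates $\theta_0$ from the labeled samples only, and requires at least one labeled sample per class. The names `semi_supervised_em`, `log_gauss`, and `log_sum_exp` are hypothetical helpers introduced for this example.

```python
import math
import random


def log_gauss(x, mean, var):
    """Log density of a 1-D Gaussian N(mean, var) at x."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def semi_supervised_em(x_l, y_l, x_u, n_classes=2, max_iter=200, tol=1e-8):
    """EM for a 1-D Gaussian mixture with one component per class.

    Assumes every class has at least one labeled sample. Returns the
    parameters (prior, mean, var) and the trace of log p(D | theta_t).
    """
    classes = range(n_classes)
    x_all = list(x_l) + list(x_u)
    # Labeled samples have known (one-hot) class memberships.
    r_l = [[1.0 if y == k else 0.0 for k in classes] for y in y_l]
    # theta_0: priors and means from labeled data, shared overall variance.
    n_k = [sum(row[k] for row in r_l) for k in classes]
    prior = [n / len(x_l) for n in n_k]
    mean = [sum(row[k] * x for row, x in zip(r_l, x_l)) / n_k[k] for k in classes]
    grand = sum(x_all) / len(x_all)
    var = [sum((x - grand) ** 2 for x in x_all) / len(x_all)] * n_classes
    trace = []
    for _ in range(max_iter):
        # E-step: q_t(H) = p(H | D, theta_t), one row per unlabeled sample.
        log_lik, q = 0.0, []
        for x in x_u:
            log_joint = [math.log(prior[k]) + log_gauss(x, mean[k], var[k])
                         for k in classes]
            log_marg = log_sum_exp(log_joint)
            q.append([math.exp(v - log_marg) for v in log_joint])
            log_lik += log_marg
        for x, y in zip(x_l, y_l):
            log_lik += math.log(prior[y]) + log_gauss(x, mean[y], var[y])
        trace.append(log_lik)  # log p(D | theta_t)
        if len(trace) > 1 and trace[-1] - trace[-2] < tol:
            break
        # M-step: closed-form maximizer of sum_H q_t(H) log p(D, H | theta).
        r = r_l + q
        n_k = [sum(row[k] for row in r) for k in classes]
        prior = [n / len(x_all) for n in n_k]
        mean = [sum(row[k] * x for row, x in zip(r, x_all)) / n_k[k]
                for k in classes]
        var = [max(sum(row[k] * (x - mean[k]) ** 2
                       for row, x in zip(r, x_all)) / n_k[k], 1e-6)
               for k in classes]
    return prior, mean, var, trace


# Usage on synthetic data: four labeled and 200 unlabeled samples.
random.seed(0)
x_l, y_l = [-2.1, -1.8, 2.2, 1.9], [0, 0, 1, 1]
x_u = ([random.gauss(-2, 1) for _ in range(100)]
       + [random.gauss(2, 1) for _ in range(100)])
prior, mean, var, trace = semi_supervised_em(x_l, y_l, x_u)
```

The returned `trace` holds $\log p(D \mid \theta_t)$ per iteration; it should never decrease, which is the property that makes EM a local optimizer.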

3.5 Co-Training and Multi-View Learning<br />

Co-training¹ [Blum and Mitchell, 1998], which exploits the redundancy of unlabeled input data, is another popular SSL method. In co-training, two initial classifiers $h_1$, $h_2$ are trained on some labeled data $D_L$ using different redundant “views”. Different views can be, for instance, different types of uncorrelated features. Then, each classifier updates the other on the samples of the unlabeled data set $D_U$ where it is most confident. Co-training is a wrapper method, which means it does not matter which learning algorithms are applied as long as they are able to deliver confidence-rated predictions. We depict the algorithmic steps in Algorithm 3.2.
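The co-training loop described above can be sketched as follows. This is a minimal illustration under assumptions, not the algorithm as stated by Blum and Mitchell or in this thesis: each view is a single real-valued feature, the base learner is a hypothetical nearest-centroid classifier (`CentroidClassifier`) whose confidence is the distance margin between the two closest centroids, each classifier hands exactly one pseudo-labeled sample to the other per round, and the labeled set contains at least two classes. Any learner with confidence-rated predictions could be substituted.

```python
import random


class CentroidClassifier:
    """1-D nearest-centroid classifier with a confidence score (illustrative)."""

    def fit(self, xs, ys):
        self.centroids = {}
        for label in set(ys):
            members = [x for x, y in zip(xs, ys) if y == label]
            self.centroids[label] = sum(members) / len(members)
        return self

    def predict_with_confidence(self, x):
        # Rank labels by distance to their centroid; the confidence is the
        # margin between the second-closest and the closest centroid.
        ranked = sorted(self.centroids, key=lambda c: abs(x - self.centroids[c]))
        best, runner_up = ranked[0], ranked[1]
        margin = abs(x - self.centroids[runner_up]) - abs(x - self.centroids[best])
        return best, margin


def fit_view(train, view):
    """Train a classifier on one view of a list of ((x1, x2), y) pairs."""
    return CentroidClassifier().fit([x[view] for x, _ in train],
                                    [y for _, y in train])


def most_confident(clf, pool, view):
    """Return (index, predicted label) of the pool sample clf is most sure about."""
    best_idx, best_label, best_margin = None, None, float("-inf")
    for i, x in enumerate(pool):
        label, margin = clf.predict_with_confidence(x[view])
        if margin > best_margin:
            best_idx, best_label, best_margin = i, label, margin
    return best_idx, best_label


def co_train(labeled, unlabeled, rounds=10):
    """labeled: list of ((x1, x2), y); unlabeled: list of (x1, x2)."""
    train = [list(labeled), list(labeled)]  # one training set per view
    pool = list(unlabeled)
    h = [fit_view(train[v], v) for v in (0, 1)]
    for _ in range(rounds):
        for v in (0, 1):
            if not pool:
                break
            # Classifier v pseudo-labels its most confident sample and
            # adds it to the training set of the other classifier.
            idx, label = most_confident(h[v], pool, v)
            train[1 - v].append((pool.pop(idx), label))
        h = [fit_view(train[v], v) for v in (0, 1)]
    return h


# Usage on synthetic data whose two views are independent given the class.
random.seed(1)

def sample(y):
    sign = 1 if y == 1 else -1
    return (random.gauss(2 * sign, 0.5), random.gauss(3 * sign, 0.5))

labeled = [((-2.0, -3.0), 0), ((2.0, 3.0), 1)]
unlabeled = [sample(i % 2) for i in range(40)]
h1, h2 = co_train(labeled, unlabeled, rounds=10)
```

Keeping a separate training set per view mirrors the description that one classifier updates the other; a common variant instead adds the pseudo-labeled samples to a single shared labeled pool.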

Co-training has been shown to converge if two conditions hold:

1. There exist two separate views $x = [x^{(1)}, x^{(2)}]$ and the task is solvable under each view.

2. The views are conditionally independent given the class label, i.e., $P(x^{(1)} \mid y, x^{(2)}) = P(x^{(1)} \mid y)$ and $P(x^{(2)} \mid y, x^{(1)}) = P(x^{(2)} \mid y)$.
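As a short worked step, the second condition is equivalent to saying that the joint class-conditional distribution of the two views factorizes. The derivation below uses only the product rule and the notation of the conditions above:

```latex
\begin{align*}
P(x^{(1)}, x^{(2)} \mid y)
  &= P(x^{(1)} \mid y, x^{(2)}) \, P(x^{(2)} \mid y) && \text{(product rule)} \\
  &= P(x^{(1)} \mid y) \, P(x^{(2)} \mid y)          && \text{(condition 2)}
\end{align*}
```

Intuitively, once the class label is known, a sample that looks easy in one view is no more likely than any other to look easy in the second view, so the confident predictions of one classifier are informative training samples for the other.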

¹ Also known as collaborative bootstrapping or multi-view learning.