PhD Thesis Semi-Supervised Ensemble Methods for Computer Vision

PhD Thesis Semi-Supervised Ensemble Methods for Computer Vision

PhD Thesis Semi-Supervised Ensemble Methods for Computer Vision

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

50 Chapter 4. <strong>Semi</strong>Boost and Visual Similarity Learning<br />

+<br />

+<br />

?<br />

?<br />

-<br />

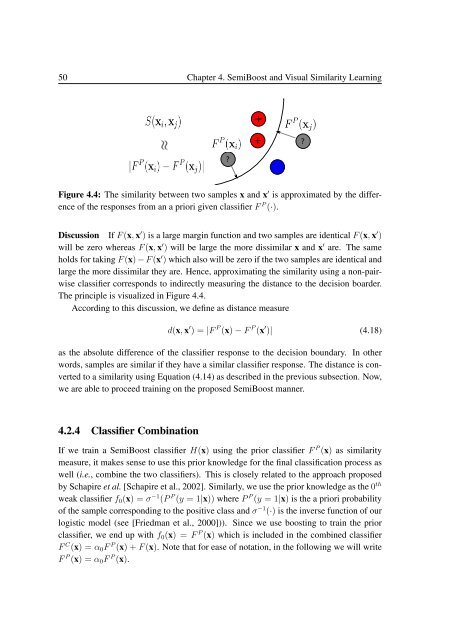

Figure 4.4: The similarity between two samples x and x ′ is approximated by the difference<br />

of the responses from an a priori given classifier F P (·).<br />

Discussion If F (x, x ′ ) is a large margin function and two samples are identical F (x, x ′ )<br />

will be zero whereas F (x, x ′ ) will be large the more dissimilar x and x ′ are. The same<br />

holds <strong>for</strong> taking F (x) − F (x ′ ) which also will be zero if the two samples are identical and<br />

large the more dissimilar they are. Hence, approximating the similarity using a non-pairwise<br />

classifier corresponds to indirectly measuring the distance to the decision boarder.<br />

The principle is visualized in Figure 4.4.<br />

According to this discussion, we define as distance measure<br />

d(x, x ′ ) = |F P (x) − F P (x ′ )| (4.18)<br />

as the absolute difference of the classifier response to the decision boundary. In other<br />

words, samples are similar if they have a similar classifier response. The distance is converted<br />

to a similarity using Equation (4.14) as described in the previous subsection. Now,<br />

we are able to proceed training on the proposed <strong>Semi</strong>Boost manner.<br />

4.2.4 Classifier Combination<br />

If we train a <strong>Semi</strong>Boost classifier H(x) using the prior classifier F P (x) as similarity<br />

measure, it makes sense to use this prior knowledge <strong>for</strong> the final classification process as<br />

well (i.e., combine the two classifiers). This is closely related to the approach proposed<br />

by Schapire et al. [Schapire et al., 2002]. Similarly, we use the prior knowledge as the 0 th<br />

weak classifier f 0 (x) = σ −1 (P P (y = 1|x)) where P P (y = 1|x) is the a priori probability<br />

of the sample corresponding to the positive class and σ −1 (·) is the inverse function of our<br />

logistic model (see [Friedman et al., 2000])). Since we use boosting to train the prior<br />

classifier, we end up with f 0 (x) = F P (x) which is included in the combined classifier<br />

F C (x) = α 0 F P (x) + F (x). Note that <strong>for</strong> ease of notation, in the following we will write<br />

F P (x) = α 0 F P (x).