C programming notes - School of Physics



Reasons for worrying about how fast a program is include:

if it is interactive, and responsiveness is important,

if it is pushing the boundaries of practicality (e.g., it might take weeks to run).

Hints on improving speed:

use a clever algorithm.

identify the time-critical parts of the program, and concentrate on improving those.

examine memory use as well (e.g., try to reduce the amount of memory being used, and keep the usage localised as opposed to jumping around randomly).

iterate most rapidly over the rightmost subscript in arrays, since this will lead to better localisation of memory use (and hence greater likelihood of cache hits).

use optimisation (e.g., -O1, -O2, or -O3; see "man gcc" for details).

try optimising for small program size (-Os).

in general, try not to be "too clever" - the C compiler can quite often do a better job if your program is clear.

make the critical parts of the program as small (in terms of bytes of instructions) as possible - they will then be more likely to fit in the CPU's "instruction cache", resulting in better performance.

declare often-called functions as "inline", which can eliminate the expense of the function call and copying the arguments to/from the stack. This technique should be used with caution, since it makes the program larger, in which case it may no longer fit in the instruction cache.

avoid printing out lots of unnecessary information. Formatting and writing text to the screen is quite CPU intensive.
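The hint about iterating over the rightmost subscript can be illustrated with a minimal sketch. C stores 2D arrays row by row, so the first function below walks memory sequentially while the second jumps by a whole row on every access. The array size and the names `grid`, `fill_grid`, `sum_row_major` and `sum_column_major` are hypothetical, chosen only for this illustration.

```c
#define ROWS 512   /* hypothetical array dimensions */
#define COLS 512

static int grid[ROWS][COLS];

/* Fill the array with some arbitrary, reproducible values. */
void fill_grid(void)
{
    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++)
            grid[i][j] = (i + j) % 7;
}

/* Cache-friendly: the rightmost subscript (j) varies fastest, so
   consecutive accesses touch adjacent memory locations. */
long sum_row_major(void)
{
    long total = 0;
    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++)
            total += grid[i][j];
    return total;
}

/* Cache-unfriendly: the leftmost subscript (i) varies fastest, so
   consecutive accesses are COLS * sizeof(int) bytes apart. */
long sum_column_major(void)
{
    long total = 0;
    for (int j = 0; j < COLS; j++)
        for (int i = 0; i < ROWS; i++)
            total += grid[i][j];
    return total;
}
```

Both functions return the same sum. Timing them (for example with `clock()` from `<time.h>`) will usually favour the first, although the size of the gap depends on the array size, the hardware and the compiler's optimisation level.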

2D cellular automata - Conway's Game of Life

In 1970, the mathematician John Horton Conway published the description of a simple 2D cellular automaton which he called the Game of Life. Cells in a 2D grid were either "alive" or "dead", and their state in the next generation was determined by their current state and the number of their nearest neighbours that were alive (from 0 to 8, inclusive). The rules were chosen to simulate some properties of biological systems (e.g., a cell would die of "overcrowding" if too many of its neighbours were alive, and die of "lack of support" if too few of its neighbours were alive).
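The rules described above can be sketched in a few lines of C. This is not the program referred to in these notes, only a minimal illustration. It assumes the standard Life thresholds (a live cell survives with 2 or 3 live neighbours, a dead cell becomes alive with exactly 3), which the text above does not spell out, and it assumes that cells beyond the edge of the grid count as dead. The grid size `N` and the function names are hypothetical.

```c
#include <string.h>

#define N 8   /* hypothetical grid size for this sketch */

/* Count the live cells among the eight nearest neighbours of (r, c).
   Assumption: cells outside the grid are treated as dead. */
int count_neighbours(unsigned char g[N][N], int r, int c)
{
    int count = 0;
    for (int dr = -1; dr <= 1; dr++) {
        for (int dc = -1; dc <= 1; dc++) {
            int rr = r + dr, cc = c + dc;
            if (dr == 0 && dc == 0)
                continue;               /* skip the cell itself */
            if (rr < 0 || rr >= N || cc < 0 || cc >= N)
                continue;               /* off the edge: counts as dead */
            count += g[rr][cc];
        }
    }
    return count;
}

/* Advance the grid by one generation, in place. */
void step(unsigned char g[N][N])
{
    unsigned char next[N][N];
    for (int r = 0; r < N; r++) {
        for (int c = 0; c < N; c++) {
            int n = count_neighbours(g, r, c);
            if (g[r][c])
                next[r][c] = (n == 2 || n == 3);  /* survives; otherwise dies */
            else
                next[r][c] = (n == 3);            /* birth */
        }
    }
    memcpy(g, next, sizeof next);
}
```

The new generation is built in a scratch array so that every cell is updated from the same old generation; updating in place, cell by cell, would mix old and new states.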

Here is a "simple" example of how to program the Game of Life on a computer.

If we are interested in following the evolution of the game for many thousands of generations, it is advantageous to think of ways of speeding up our simple implementation. The first thing to try is to turn on full optimisation during the compilation, using the "-O4" switch (GCC accepts this, but treats optimisation levels above 3 as -O3). The next step is to make the critical functions "inline" (see here for the code). We can also think about the algorithm, and realise that a lot of our calculation of the number of nearest neighbours was involved in handling the special case of being on the boundary; we can re-write our program to separate out the boundary case, thereby allowing a simplified (faster) function to do most of the neighbour calculations, at the expense of increased complexity in handling the evolution from one generation to the next. Finally, we can try to use the fact that much of the Life "universe" tends to be sparsely populated, allowing us to produce a second neighbourhood-calculating function for the special case that the preceding cell had no nearest neighbours. These are by no means the only speed-ups that are possible, but they give you some flavour of what is possible.
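As a rough sketch of the boundary-separation idea (not the code from these notes), the neighbour counting can be split into a general function that bounds-checks every neighbour and a small inline function that is only valid for interior cells. The grid dimensions, the function names, and the choice to treat cells beyond the edge as dead are all assumptions made for this illustration.

```c
#define ROWS 16   /* hypothetical grid dimensions */
#define COLS 16

/* General version: bounds-checks every neighbour, so it is safe for
   any cell. Assumption: cells outside the grid count as dead. */
int neighbours_checked(unsigned char g[ROWS][COLS], int r, int c)
{
    int count = 0;
    for (int dr = -1; dr <= 1; dr++) {
        for (int dc = -1; dc <= 1; dc++) {
            int rr = r + dr, cc = c + dc;
            if ((dr != 0 || dc != 0) &&
                rr >= 0 && rr < ROWS && cc >= 0 && cc < COLS)
                count += g[rr][cc];
        }
    }
    return count;
}

/* Fast version: valid only for interior cells (1 <= r <= ROWS-2 and
   1 <= c <= COLS-2), where all eight neighbours are known to exist. */
static inline int neighbours_interior(unsigned char g[ROWS][COLS], int r, int c)
{
    return g[r - 1][c - 1] + g[r - 1][c] + g[r - 1][c + 1]
         + g[r][c - 1]                   + g[r][c + 1]
         + g[r + 1][c - 1] + g[r + 1][c] + g[r + 1][c + 1];
}

/* Fill counts[][] for the whole grid, keeping the boundary tests out
   of the loop that handles the (much more numerous) interior cells. */
void count_all(unsigned char g[ROWS][COLS], int counts[ROWS][COLS])
{
    /* Interior cells: no boundary tests needed. */
    for (int r = 1; r < ROWS - 1; r++)
        for (int c = 1; c < COLS - 1; c++)
            counts[r][c] = neighbours_interior(g, r, c);

    /* Boundary cells: top and bottom rows, then left and right columns. */
    for (int c = 0; c < COLS; c++) {
        counts[0][c] = neighbours_checked(g, 0, c);
        counts[ROWS - 1][c] = neighbours_checked(g, ROWS - 1, c);
    }
    for (int r = 1; r < ROWS - 1; r++) {
        counts[r][0] = neighbours_checked(g, r, 0);
        counts[r][COLS - 1] = neighbours_checked(g, r, COLS - 1);
    }
}
```

The cost is the extra bookkeeping in `count_all`, which mirrors the trade-off mentioned above: the inner loop becomes simpler and faster, while the code that drives one generation to the next becomes more complicated.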

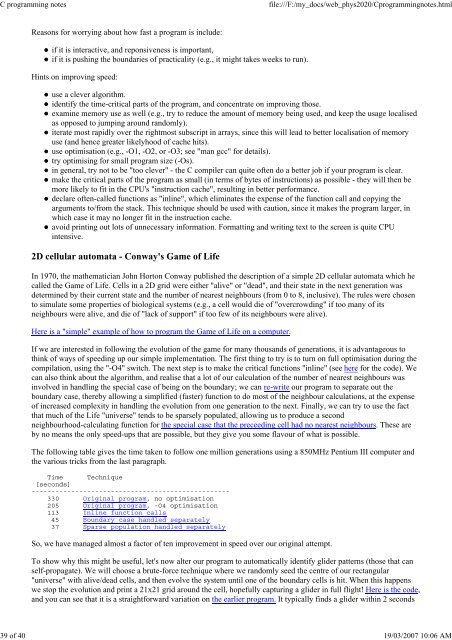

The following table gives the time taken to follow one million generations using an 850 MHz Pentium III computer and the various tricks from the last paragraph.

| Time [seconds] | Technique |
|---|---|
| 330 | Original program, no optimisation |
| 205 | Original program, -O4 optimisation |
| 113 | Inline function calls |
| 45 | Boundary case handled separately |
| 37 | Sparse population handled separately |

So, we have managed almost a factor of ten improvement in speed over our original attempt (330 s down to 37 s, a factor of about 8.9).

To show why this might be useful, let's now alter our program to automatically identify glider patterns (those that can self-propagate). We will choose a brute-force technique where we randomly seed the centre of our rectangular "universe" with alive/dead cells, and then evolve the system until one of the boundary cells is hit. When this happens we stop the evolution and print a 21x21 grid around the cell, hopefully capturing a glider in full flight! Here is the code, and you can see that it is a straightforward variation on the earlier program. It typically finds a glider within 2 seconds