Semantic Interpretation of Digital Aerial Images Utilizing ...


Chapter 1. Introduction

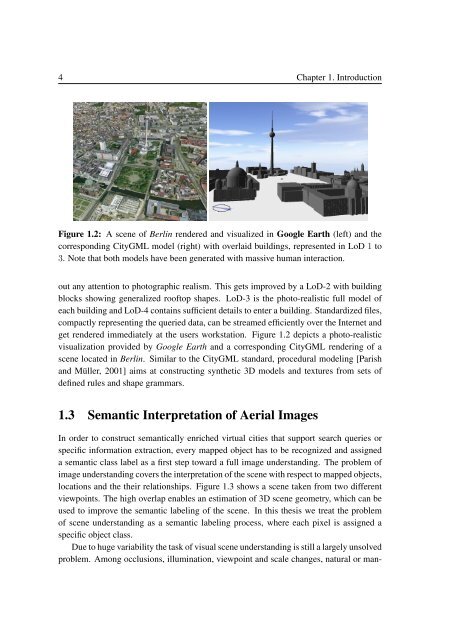

Figure 1.2: A scene of Berlin rendered and visualized in Google Earth (left) and the corresponding CityGML model (right) with overlaid buildings, represented in LoD 1 to 3. Note that both models have been generated with massive human interaction.

out any attention to photographic realism. LoD-2 improves on this with building blocks that show generalized rooftop shapes. LoD-3 is the photo-realistic full model of each building, and LoD-4 contains sufficient detail to enter a building. Standardized files that compactly represent the queried data can be streamed efficiently over the Internet and rendered immediately at the user's workstation. Figure 1.2 depicts a photo-realistic visualization provided by Google Earth and a corresponding CityGML rendering of a scene located in Berlin. Similar to the CityGML standard, procedural modeling [Parish and Müller, 2001] aims at constructing synthetic 3D models and textures from sets of defined rules and shape grammars.

1.3 Semantic Interpretation of Aerial Images

In order to construct semantically enriched virtual cities that support search queries or specific information extraction, every mapped object has to be recognized and assigned a semantic class label as a first step toward full image understanding. The problem of image understanding covers the interpretation of the scene with respect to mapped objects, locations, and their relationships. Figure 1.3 shows a scene taken from two different viewpoints. The high overlap enables an estimation of the 3D scene geometry, which can be used to improve the semantic labeling of the scene. In this thesis we treat the problem of scene understanding as a semantic labeling process, where each pixel is assigned a specific object class.
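To make the notion of per-pixel labeling concrete, the following minimal sketch assigns each pixel the class with the highest score. It is an illustration only and not the method developed in this thesis: the class names in `CLASSES`, the helper `label_image`, and the toy scores are all hypothetical, and the scores are assumed to come from some unspecified classifier.

```python
# Hypothetical label set for illustration; not the one used in the thesis.
CLASSES = ["building", "street", "tree", "grass", "water"]


def label_image(scores):
    """Return a label map with the index of the highest-scoring class per pixel.

    scores[r][c] is a list holding one score per entry of CLASSES
    (assumed to be produced by some classifier, not shown here).
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


# A 2x2 toy "image" with made-up per-class scores.
scores = [
    [[0.70, 0.10, 0.10, 0.05, 0.05], [0.10, 0.60, 0.10, 0.10, 0.10]],
    [[0.20, 0.10, 0.50, 0.10, 0.10], [0.05, 0.05, 0.10, 0.20, 0.60]],
]

labels = label_image(scores)                        # class index per pixel
names = [[CLASSES[i] for i in row] for row in labels]  # readable class names
```

The result is a label map of the same size as the image, one class per pixel. Taking the maximum independently at every pixel ignores neighboring pixels and the 3D scene geometry mentioned above, so it should be read as the simplest possible baseline.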

Due to huge variability the task of visual scene understanding is still a largely unsolved

problem. Among occlusions, illumination, viewpoint and scale changes, natural or man-