Takashi Yamano

Takashi Yamano

Takashi Yamano

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

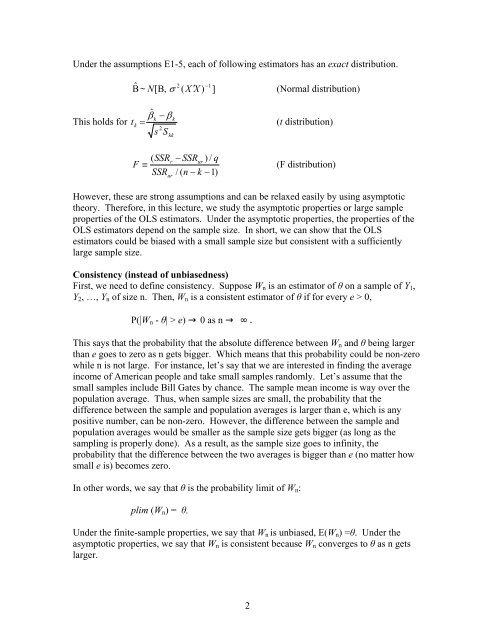

Under the assumptions E1-5, each of following estimators has an exact distribution.<br />

ˆ 1<br />

2 −<br />

Β ~ N[<br />

Β,<br />

σ ( X ′ X ) ]<br />

(Normal distribution)<br />

This holds for<br />

ˆ β − β<br />

k k<br />

t<br />

k<br />

=<br />

(t distribution)<br />

s 2 S<br />

kk<br />

F<br />

( SSR<br />

≡<br />

SSR<br />

r<br />

ur<br />

− SSR<br />

ur<br />

) / q<br />

/ ( n − k −1)<br />

(F distribution)<br />

However, these are strong assumptions and can be relaxed easily by using asymptotic<br />

theory. Therefore, in this lecture, we study the asymptotic properties or large sample<br />

properties of the OLS estimators. Under the asymptotic properties, the properties of the<br />

OLS estimators depend on the sample size. In short, we can show that the OLS<br />

estimators could be biased with a small sample size but consistent with a sufficiently<br />

large sample size.<br />

Consistency (instead of unbiasedness)<br />

First, we need to define consistency. Suppose W n is an estimator of θ on a sample of Y 1 ,<br />

Y 2 , …, Y n of size n. Then, W n is a consistent estimator of θ if for every e > 0,<br />

P(|W n - θ| > e) 0 as n <br />

This says that the probability that the absolute difference between W n and θ being larger<br />

than e goes to zero as n gets bigger. Which means that this probability could be non-zero<br />

while n is not large. For instance, let’s say that we are interested in finding the average<br />

income of American people and take small samples randomly. Let’s assume that the<br />

small samples include Bill Gates by chance. The sample mean income is way over the<br />

population average. Thus, when sample sizes are small, the probability that the<br />

difference between the sample and population averages is larger than e, which is any<br />

positive number, can be non-zero. However, the difference between the sample and<br />

population averages would be smaller as the sample size gets bigger (as long as the<br />

sampling is properly done). As a result, as the sample size goes to infinity, the<br />

probability that the difference between the two averages is bigger than e (no matter how<br />

small e is) becomes zero.<br />

In other words, we say that θ is the probability limit of W n :<br />

plim (W n ) = θ.<br />

Under the finite-sample properties, we say that W n is unbiased, E(W n ) =θ. Under the<br />

asymptotic properties, we say that W n is consistent because W n converges to θ as n gets<br />

larger.<br />

2