Magellan Final Report - Office of Science - U.S. Department of Energy

Magellan Final Report - Office of Science - U.S. Department of Energy

Magellan Final Report - Office of Science - U.S. Department of Energy

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

<strong>Magellan</strong> <strong>Final</strong> <strong>Report</strong><br />

method to the Open <strong>Science</strong> Grid (OSG). An important aspect <strong>of</strong> porting Einstein@Home to run on OSG<br />

was strengthening <strong>of</strong> fault-tolerance and implementation <strong>of</strong> automatic recovery from errors. Fixing problems<br />

manually at scale simply isn’t practical, so Einstein@OSG eventually automated the process.<br />

LIGO used the <strong>Magellan</strong> serial queue and the Eucalyptus setup at NERSC to understand the advantages<br />

<strong>of</strong> running in cloud environments. A virtual cluster was set up in the NERSC Eucalyptus deployment for<br />

LIGO to access the virtual resources. The virtual cluster was set up to serve an OSG gatekeeper on a head<br />

virtual machine to provide the user a seamless transition between the traditional batch queue submission<br />

and the virtual cluster.<br />

Our work with LIGO shows how center staff might set up virtual clusters for use by certain groups.<br />

To keep the image size small, the gatekeeper stack (approximately 600 MB) was installed on a block store<br />

volume. In order to work around the virtual nature <strong>of</strong> the head node, it was always booted with a private IP;<br />

and a reserved public IP, which is static, was assigned after the instance was booted. The gsiftp server had<br />

to be configured to handle the private/public duality <strong>of</strong> the IP addressing. No other changes were required<br />

to the LIGO s<strong>of</strong>tware stack. LIGO was able to switch from running through the NERSC Globus gatekeeper<br />

to running through the virtual gatekeeper without major difficulties, and ran successfully.<br />

11.2.4 ATLAS<br />

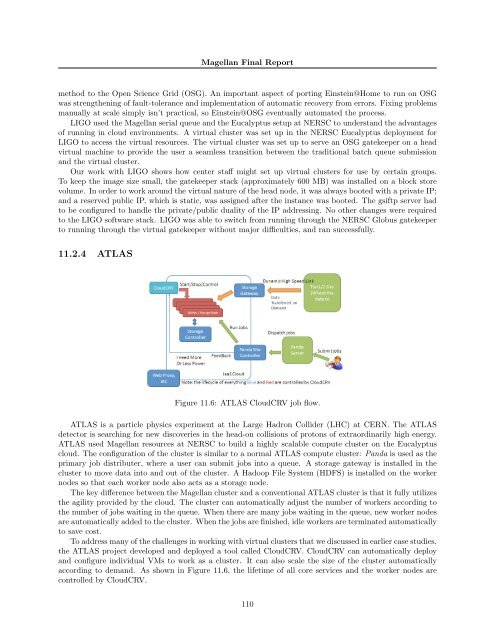

Figure 11.6: ATLAS CloudCRV job flow.<br />

ATLAS is a particle physics experiment at the Large Hadron Collider (LHC) at CERN. The ATLAS<br />

detector is searching for new discoveries in the head-on collisions <strong>of</strong> protons <strong>of</strong> extraordinarily high energy.<br />

ATLAS used <strong>Magellan</strong> resources at NERSC to build a highly scalable compute cluster on the Eucalyptus<br />

cloud. The configuration <strong>of</strong> the cluster is similar to a normal ATLAS compute cluster: Panda is used as the<br />

primary job distributer, where a user can submit jobs into a queue. A storage gateway is installed in the<br />

cluster to move data into and out <strong>of</strong> the cluster. A Hadoop File System (HDFS) is installed on the worker<br />

nodes so that each worker node also acts as a storage node.<br />

The key difference between the <strong>Magellan</strong> cluster and a conventional ATLAS cluster is that it fully utilizes<br />

the agility provided by the cloud. The cluster can automatically adjust the number <strong>of</strong> workers according to<br />

the number <strong>of</strong> jobs waiting in the queue. When there are many jobs waiting in the queue, new worker nodes<br />

are automatically added to the cluster. When the jobs are finished, idle workers are terminated automatically<br />

to save cost.<br />

To address many <strong>of</strong> the challenges in working with virtual clusters that we discussed in earlier case studies,<br />

the ATLAS project developed and deployed a tool called CloudCRV. CloudCRV can automatically deploy<br />

and configure individual VMs to work as a cluster. It can also scale the size <strong>of</strong> the cluster automatically<br />

according to demand. As shown in Figure 11.6, the lifetime <strong>of</strong> all core services and the worker nodes are<br />

controlled by CloudCRV.<br />

110