Magellan Final Report - Office of Science - U.S. Department of Energy

important design goal. The testbed was architected for flexibility and to support research, and the hardware was chosen to be similar to the high-end hardware in HPC clusters, thus catering to scientific applications.

Argonne. The Magellan testbed at Argonne includes computational, storage, and networking infrastructure. There is a total of 504 iDataplex nodes as described above. In addition to the core compute cloud, Argonne’s Magellan has three types of hardware that one might expect to see within a typical HPC cluster: Active Storage servers, Big Memory servers, and GPU servers, all connected with QDR InfiniBand provided by two large, 648-port Mellanox switches.

There are 200 Active Storage servers, each with dual Intel Nehalem quad-core processors, 24 GB of memory, 8x500 GB SATA drives, 4x50 GB SSDs, and a QDR InfiniBand adapter. The SSDs were added to facilitate exploration of performance improvements for both multi-tiered storage architectures and Hadoop. These servers are also being used to support the ANI research projects.

Accelerators and GPUs are becoming increasingly important in the HPC space. Virtualization of heterogeneous resources such as these is still a significant challenge. To support research in this area, Argonne’s testbed was outfitted with 133 GPU servers, each with dual 6 GB NVIDIA Fermi GPUs, dual 8-core AMD Opteron processors, 24 GB of memory, 2x500 GB local disks, and a QDR InfiniBand adapter. Virtualization work on this hardware was outside the scope of the Magellan project proper; see [48, 86] for details.

Several projects were interested in exploring the use of machines with large amounts of memory. To support them, 15 Big Memory servers were added to the Argonne testbed. Each Big Memory server was configured with 1 TB of memory, along with 4 Intel Nehalem quad-core processors, 2x500 GB local disks, and a QDR InfiniBand adapter. These were heavily used by the genome sequencing projects, allowing them to load their full databases into memory.

Argonne also expanded the global archival and backup tape storage system shared between a number of divisions at Argonne. Tape movers, drives, and tapes were added to the system to increase the tape storage capacity available to the Magellan projects.

Finally, there are 160 terabytes (TB) of global storage. In total, the system has over 150 TF of peak floating point performance with 8,240 cores, 42 TB of memory, 1.4 PB of storage space, and a single 10-gigabit (Gb) external network connection. A summary of the configuration for each node is shown in Table 5.1.

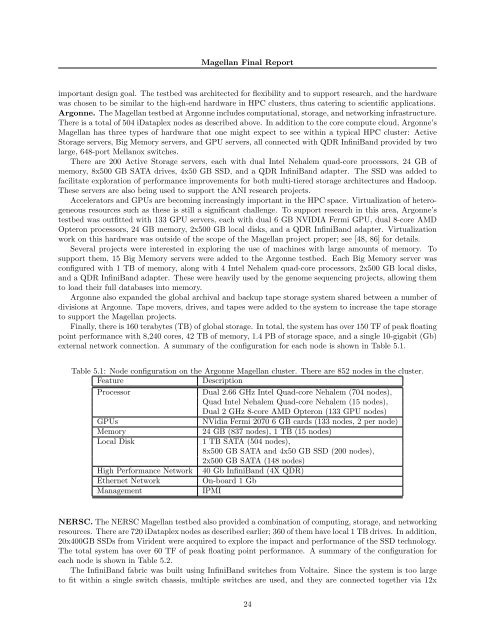

Table 5.1: Node configuration on the Argonne Magellan cluster. There are 852 nodes in the cluster.

| Feature | Description |
| --- | --- |
| Processor | Dual 2.66 GHz Intel Quad-core Nehalem (704 nodes); Quad Intel Quad-core Nehalem (15 nodes); Dual 2 GHz 8-core AMD Opteron (133 GPU nodes) |
| GPUs | NVIDIA Fermi 2070 6 GB cards (133 nodes, 2 per node) |
| Memory | 24 GB (837 nodes); 1 TB (15 nodes) |
| Local Disk | 1 TB SATA (504 nodes); 8x500 GB SATA and 4x50 GB SSD (200 nodes); 2x500 GB SATA (148 nodes) |
| High Performance Network | 40 Gb InfiniBand (4X QDR) |
| Ethernet Network | On-board 1 Gb |
| Management | IPMI |
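The per-feature node counts in Table 5.1 can be cross-checked against the four server types described in the text. The following is a minimal sketch, not part of the report: the dictionary layout, field names, and the `count_nodes` helper are illustrative, and the attributes assigned to the 504 core compute nodes (dual Nehalem, 24 GB, 1 TB SATA) are inferred from the table's counts rather than stated directly in this section.

```python
# Node groups on the Argonne testbed, as described in the text and Table 5.1.
# The attributes of the "compute" group are an inference from the table's
# per-feature counts (an assumption, not a quoted specification).
NODE_GROUPS = {
    "compute":        {"nodes": 504, "cpu": "dual Nehalem", "memory_gb": 24,   "disk": "1 TB SATA"},
    "active_storage": {"nodes": 200, "cpu": "dual Nehalem", "memory_gb": 24,   "disk": "8x500 GB SATA + 4x50 GB SSD"},
    "gpu":            {"nodes": 133, "cpu": "dual Opteron", "memory_gb": 24,   "disk": "2x500 GB SATA"},
    "big_memory":     {"nodes": 15,  "cpu": "quad Nehalem", "memory_gb": 1024, "disk": "2x500 GB SATA"},
}


def count_nodes(groups, **criteria):
    """Sum node counts over the groups whose fields match every criterion."""
    return sum(
        group["nodes"]
        for group in groups.values()
        if all(group[key] == value for key, value in criteria.items())
    )


total = count_nodes(NODE_GROUPS)                               # all nodes
dual_nehalem = count_nodes(NODE_GROUPS, cpu="dual Nehalem")    # Processor row
mem_24 = count_nodes(NODE_GROUPS, memory_gb=24)                # Memory row
disk_2x500 = count_nodes(NODE_GROUPS, disk="2x500 GB SATA")    # Local Disk row

print(total, dual_nehalem, mem_24, disk_2x500)  # 852 704 837 148
```

Under these assumptions the tallies reproduce the table's figures: 852 nodes overall, 704 dual-Nehalem nodes, 837 nodes with 24 GB of memory, and 148 nodes with 2x500 GB SATA disks.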

NERSC. The NERSC Magellan testbed also provided a combination of computing, storage, and networking resources. There are 720 iDataplex nodes as described earlier; 360 of them have local 1 TB drives. In addition, 20x400 GB SSDs from Virident were acquired to explore the impact and performance of the SSD technology. The total system has over 60 TF of peak floating point performance. A summary of the configuration for each node is shown in Table 5.2.
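The "over 60 TF" figure can be sanity-checked with the usual peak-performance estimate of cores times clock rate times floating-point operations per cycle. The sketch below rests on two assumptions that this section does not state: that the NERSC iDataplex nodes match the dual 2.66 GHz quad-core Nehalem configuration listed for Argonne, and that each Nehalem core retires 4 double-precision operations per cycle. The `peak_tflops` helper is illustrative.

```python
def peak_tflops(nodes, sockets_per_node, cores_per_socket, ghz, flops_per_cycle):
    """Theoretical peak in TFLOPS: total cores x clock (GHz) x flops per cycle."""
    cores = nodes * sockets_per_node * cores_per_socket
    return cores * ghz * flops_per_cycle / 1000.0


# Assumed node spec: 2 sockets x 4 cores at 2.66 GHz, 4 DP flops/cycle/core.
nersc_peak = peak_tflops(nodes=720, sockets_per_node=2, cores_per_socket=4,
                         ghz=2.66, flops_per_cycle=4)

print(f"{nersc_peak:.1f} TF")  # 61.3 TF
```

With these inputs the estimate comes to roughly 61.3 TF across 5,760 cores, which is consistent with the stated total.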

The InfiniBand fabric was built using InfiniBand switches from Voltaire. Since the system is too large to fit within a single switch chassis, multiple switches are used, and they are connected together via 12x
