Voice Controlled Motorized Wheelchair with Real Time Obstacle ...

beginning to the end of the phoneme, and is not constant. The beginning of a "t" will produce different feature numbers than the end of a "t" [11].

The background noise and variability problems are solved by allowing a feature number to be used by more than just one phoneme. This can be done because a phoneme lasts for a relatively long time, 50 to 100 feature numbers, and it is likely that one or more sounds are predominant during that time. Hence, it is possible to predict what phoneme was spoken.

In the third step [11], a training tool is used to learn how a phoneme sounds. We use over a hundred samples of the phoneme. The tool analyzes these samples and produces the feature numbers for them. It thus learns how likely it is for a particular feature number to appear in a specific phoneme. For example, for the phoneme "h", there might be a 55% chance of feature 52 appearing, a 30% chance of feature 189 appearing, and a 15% chance of feature 53.
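
The training step can be illustrated with a minimal sketch that estimates these probabilities by counting how often each feature number occurs in the samples of one phoneme. The function name `train_phoneme` and the sample data are hypothetical, and relative-frequency counting is an assumption made for illustration; the training tool referred to in [11] may work differently.

```python
from collections import Counter

def train_phoneme(feature_numbers):
    """Estimate P(feature number | phoneme) as a relative frequency.

    feature_numbers: every feature number observed across the training
    samples of ONE phoneme (hypothetical input format).
    """
    counts = Counter(feature_numbers)
    total = sum(counts.values())
    return {feature: n / total for feature, n in counts.items()}

# Hypothetical training data for "h", chosen to reproduce the figures in the text.
h_samples = [52] * 11 + [189] * 6 + [53] * 3
h_model = train_phoneme(h_samples)
```

With this made-up data, `h_model` assigns feature 52 the largest share, followed by features 189 and 53.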

The probability analysis done during training is used during recognition. Given a voice sample, the feature numbers corresponding to it are used to compute the probabilities of the various phonemes. The most probable phoneme is then chosen.
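
The recognition step can be sketched as a comparison of likelihoods. The example below is a simplification under stated assumptions: feature numbers are treated as independent, all phonemes are equally likely beforehand, and unseen feature numbers get a small floor probability. The "h" entry uses the figures quoted above; the "f" entry, `FLOOR`, and the function names are invented for illustration and are not taken from the recognizer described in [11].

```python
import math

# Hypothetical trained model: P(feature number | phoneme).
MODEL = {
    "h": {52: 0.55, 189: 0.30, 53: 0.15},  # figures from the text
    "f": {52: 0.10, 189: 0.20, 7: 0.70},   # invented for illustration
}
FLOOR = 1e-3  # assumed probability for feature numbers never seen in training

def log_likelihood(features, distribution):
    """Sum of log-probabilities of the observed feature numbers."""
    return sum(math.log(distribution.get(f, FLOOR)) for f in features)

def most_probable_phoneme(features, model=MODEL):
    """Return the phoneme whose distribution best explains the features."""
    return max(model, key=lambda ph: log_likelihood(features, model[ph]))

best = most_probable_phoneme([52, 52, 189, 53, 52])
```

Working in log-probabilities avoids numerical underflow when a phoneme spans 50 to 100 feature numbers.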

It is important that the speech recognition system adapt to the user's voice and speaking style to improve accuracy. A word can be spoken with different pronunciations. However, after the user has spoken the word a number of times, the recognizer will have enough examples to determine which pronunciation the user uses.

The communication between the software and the wheelchair platform takes place through the serial port of the system.

V. OBSTACLE AVOIDANCE

The obstacle avoidance processing is done using MATLAB. Our obstacle detection system is based purely on the appearance of the individual pixels captured by the camera. Any pixel that differs in appearance from the ground is classified as an obstacle. The method is based on three assumptions that are reasonable for a variety of indoor and outdoor environments [5]:

(i) Obstacles differ in appearance from the ground.

(ii) The ground is relatively flat.

(iii) There are no overhanging obstacles.

The first assumption enables us to distinguish obstacles from the ground, while the second and third assumptions enable us to estimate the distances between detected obstacles and the camera. The key feature of the algorithm is global thresholding.
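
To show why a flat ground with no overhanging obstacles makes distance estimation possible, the sketch below uses standard pinhole-camera geometry: a pixel lower in the image corresponds to a ray that meets the ground closer to the camera. The function name, the parameters (camera height, tilt, focal length in pixels), and the example values are all assumptions introduced for illustration; the paper does not give its own distance formula.

```python
import math

def ground_distance(row_offset_px, focal_px, cam_height_m, tilt_rad):
    """Horizontal distance to the ground point seen at a given pixel row.

    row_offset_px: rows below the image centre (positive = lower in image).
    focal_px:      focal length expressed in pixels (assumed known).
    cam_height_m:  camera height above the flat ground.
    tilt_rad:      downward tilt of the optical axis; the combined ray
                   angle is assumed to stay below 90 degrees.
    """
    # Angle of the viewing ray below the horizontal.
    angle = tilt_rad + math.atan2(row_offset_px, focal_px)
    if angle <= 0:
        return math.inf  # ray at or above the horizon never meets the ground
    return cam_height_m / math.tan(angle)

# Hypothetical setup: camera 1 m above the ground, tilted 45 degrees down.
centre_distance = ground_distance(0, 500, 1.0, math.pi / 4)
```

If an obstacle's lowest pixel is taken as its point of contact with the ground, the same function gives an estimate of how far away the obstacle is.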

The algorithm consists of two stages, namely a vision stage and a decision stage. The vision stage deals with retrieving the image, and the decision stage deals with classifying the image to decide how the wheelchair should respond. The vision stage includes a camera connected to a laptop; we used a Logitech QuickCam Pro for Notebooks for this purpose. The camera sends its output to the laptop, and the whole system is mounted on the wheelchair. On the laptop, the MATLAB Image Processing Toolbox is used to process the image. MATLAB processes the image and sends a signal to the control circuit through the serial port. The time interval between the vision and decision stages of the obstacle avoidance is the time taken to process the image, which is about 50 milliseconds. In an actual implementation, a microprocessor will be used instead of a laptop to do the processing. This setup was tested and worked as expected. The control circuit then drives the motors accordingly via H-bridges.

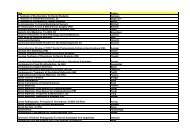

We have implemented the following algorithm:

1. Take input from the camera in real time. The size of the image is 320x240.

2. Convert the image to grayscale.

3. Adjust the contrast of the image.

4. Compute the global threshold of the image using Otsu's algorithm [12].

5. Convert the image to a binary image using the threshold obtained in step 4.
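
Steps 2 to 5 can be sketched in plain Python as follows. This is an illustrative stand-in for the MATLAB processing, not the authors' code. The function names, the luminance weights used for grayscale conversion, and the linear contrast stretch are assumptions. Images are represented as lists of rows to keep the example free of external libraries.

```python
def to_grayscale(rgb):
    """Step 2: rows of (r, g, b) tuples (0..255) -> rows of gray levels."""
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for r, g, b in row]
            for row in rgb]

def stretch_contrast(gray):
    """Step 3: linearly stretch gray levels to span 0..255 (assumed method)."""
    lo = min(min(row) for row in gray)
    hi = max(max(row) for row in gray)
    if hi == lo:
        return [row[:] for row in gray]  # flat image, nothing to stretch
    scale = 255.0 / (hi - lo)
    return [[int(round((p - lo) * scale)) for p in row] for row in gray]

def otsu_threshold(gray):
    """Step 4: threshold that maximizes the between-class variance."""
    hist = [0] * 256
    for row in gray:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(level * n for level, n in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = sum0 = 0
    for t in range(256):
        w0 += hist[t]            # pixels with gray level <= t
        sum0 += t * hist[t]
        w1 = total - w0          # pixels with gray level > t
        if w0 == 0:
            continue
        if w1 == 0:
            break
        diff = sum0 / w0 - (sum_all - sum0) / w1
        between = w0 * w1 * diff * diff
        if between > best_var:
            best_var, best_t = between, t
    return best_t

def binarize(gray, t):
    """Step 5: 1 (white) where the pixel is brighter than t, else 0 (black)."""
    return [[1 if p > t else 0 for p in row] for row in gray]

# Hypothetical 4x4 test image: dark left half, bright right half.
image = [[(20, 20, 20)] * 2 + [(200, 200, 200)] * 2 for _ in range(4)]
gray = stretch_contrast(to_grayscale(image))
binary = binarize(gray, otsu_threshold(gray))
```

A real 320x240 frame would be processed the same way, one frame per iteration of the capture loop.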

The binary image is used to check for any obstacles that may be present. The camera is tilted slightly towards the ground. After thresholding, the resulting image is white for plane surfaces and black for any discontinuity, so the black points give the obstacle location. We count the number of black pixels in the image and apply a threshold: if more than 10% of the pixels in the image are black, we have encountered an obstacle, and a command is sent to the microcontroller to stop the wheelchair.
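
The decision rule described above can be sketched as a simple pixel count. The function names and the `STOP_COMMAND` byte are hypothetical; the paper does not specify the command format sent over the serial port. The binary image is assumed to use 0 for black and 1 for white.

```python
STOP_COMMAND = b"S"  # hypothetical command byte, not taken from the paper

def black_fraction(binary):
    """Fraction of pixels that are black (0) in a binary image."""
    total = sum(len(row) for row in binary)
    black = sum(1 for row in binary for p in row if p == 0)
    return black / total if total else 0.0

def obstacle_detected(binary, limit=0.10):
    """True when MORE than `limit` of the pixels are black."""
    return black_fraction(binary) > limit

def command_for(binary):
    """Command to send to the microcontroller, or None to keep moving."""
    return STOP_COMMAND if obstacle_detected(binary) else None

# Hypothetical 320x240 frame in which the bottom 25 rows are black.
frame = [[1] * 320 for _ in range(215)] + [[0] * 320 for _ in range(25)]
command = command_for(frame)
```

Because the comparison is strict, a frame with exactly 10% black pixels does not trigger a stop, which matches the "more than 10%" wording.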