Chapter 2 Introduction to Neural network

Chapter 2 Introduction to Neural network

Chapter 2 Introduction to Neural network

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

The backpropagation algorithm can cause the weight update <strong>to</strong> follow<br />

a fractal pattern (fractal means it follow some rules which are<br />

applied statistical), (see figure 8.11)<br />

⇒ The stepsize and momentum term should decrease during training<br />

<strong>to</strong> avoid this.<br />

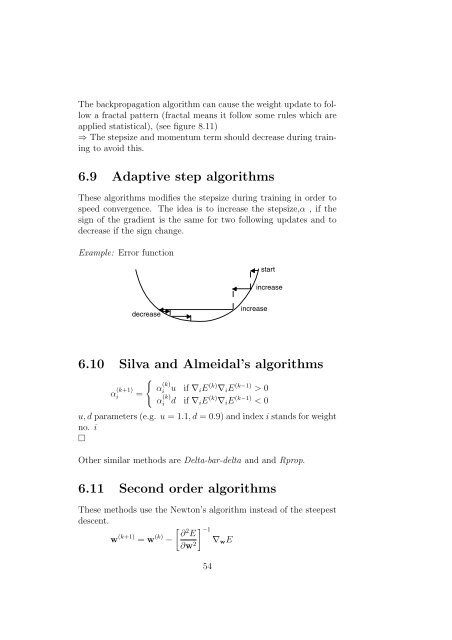

6.9 Adaptive step algorithms<br />

These algorithms modifies the stepsize during training in order <strong>to</strong><br />

speed convergence. The idea is <strong>to</strong> increase the stepsize,α , if the<br />

sign of the gradient is the same for two following updates and <strong>to</strong><br />

decrease if the sign change.<br />

Example: Error function<br />

start<br />

increase<br />

decrease<br />

increase<br />

6.10 Silva and Almeidal’s algorithms<br />

α (k+1)<br />

i =<br />

{<br />

α (k)<br />

α (k)<br />

i u if ∇ i E (k) ∇ i E (k−1) > 0<br />

i d if ∇ i E (k) ∇ i E (k−1) < 0<br />

u, d parameters (e.g. u = 1.1, d = 0.9) and index i stands for weight<br />

no. i<br />

□<br />

Other similar methods are Delta-bar-delta and and Rprop.<br />

6.11 Second order algorithms<br />

These methods use the New<strong>to</strong>n’s algorithm instead of the steepest<br />

descent.<br />

[ ] ∂<br />

w (k+1) = w (k) 2 −1<br />

E<br />

− ∇<br />

∂w 2 w E<br />

54